Anthropic principles agree on bigger future filters

post by XiXiDu · 2010-11-02T10:14:04.000Z · 5 comments

I finished my honours thesis, so this blog is back on. The thesis is downloadable here. I’ll blog some other interesting bits soon.

My main point was that two popular anthropic reasoning principles, the Self Indication Assumption (SIA) and the Self Sampling Assumption (SSA), as well as Full Non-indexical Conditioning (FNC), basically agree that future filter steps will be larger than we otherwise think, including the many future filter steps that are existential risks.

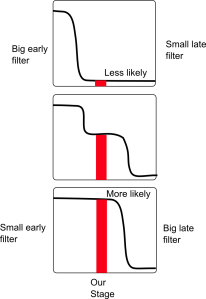

Figure 1: SIA likes possible worlds with big populations at our stage, which means small past filters, which means big future filters.

SIA says the probability of being in a possible world is proportional to the number of people it contains who you could be. SSA says it’s proportional to the fraction of people (or of some other reference class) it contains who you could be. FNC says the probability of being in a possible world is proportional to the chance of anyone in that world having exactly your experiences. That chance is greater the larger the population of people like you in relevant ways, so FNC generally gives similar answers to SIA. For a lengthier account of all these, see here.
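
The three weighting rules can be compared on a toy example. This is a minimal sketch under hypothetical assumptions: the world names, their populations and the per-observer match probability `EPSILON` are invented for illustration, and both worlds are given equal priors. Each world is described by how many observers you could be and how large its reference class is.

```python
import math

# Hypothetical toy worlds: (observers you could be, reference-class size).
# Both worlds are assumed to have equal prior probability.
WORLDS = {
    "small": (10, 100),
    "large": (1000, 2000),
}
# Hypothetical chance that any single such observer has exactly your experiences.
EPSILON = 1e-6


def normalise(weights):
    """Turn unnormalised weights into probabilities that sum to 1."""
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def sia(worlds):
    """SIA: weight proportional to the number of observers you could be."""
    return normalise({name: n for name, (n, _) in worlds.items()})


def ssa(worlds):
    """SSA: weight proportional to the fraction of the reference class you could be."""
    return normalise({name: n / ref for name, (n, ref) in worlds.items()})


def fnc(worlds, eps=EPSILON):
    """FNC: weight proportional to the chance that at least one observer has your exact experiences."""
    # 1 - (1 - eps)**n, computed stably as -expm1(n * log1p(-eps)).
    return normalise({name: -math.expm1(n * math.log1p(-eps)) for name, (n, _) in worlds.items()})


for rule in (sia, ssa, fnc):
    probs = rule(WORLDS)
    print(rule.__name__, round(probs["large"], 3))
```

With these made-up numbers SIA and FNC both give the large world roughly 0.99, while SSA gives it roughly 0.83. FNC tracks SIA because, for a small `EPSILON`, the chance that someone has your experiences grows almost linearly with the number of such observers.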

SIA increases expectations of larger future filter steps because it favours smaller past filter steps. Since there is a minimum total filter size (the filter as a whole must be big enough to explain why we see no other civilizations), favouring small past steps means favouring big future steps. I have explained this before. See Figure 1. Radford Neal has demonstrated similar results with FNC.
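
A minimal numerical sketch of this argument, under a hypothetical setup not taken from the thesis: the total filter is fixed at 12 orders of magnitude (`TOTAL_ORDERS`), each hypothesis splits it between past and future steps, all splits start equally likely, and SIA weights each hypothesis by the number of observers at our stage. That number is proportional to the past pass rate, since the number of star systems is a common factor and cancels.

```python
# Hypothetical: the whole filter removes 12 orders of magnitude
# (overall pass rate 10**-12). A hypothesis (past, future) says how many
# orders of magnitude fall before and after our stage.
TOTAL_ORDERS = 12
HYPOTHESES = [(past, TOTAL_ORDERS - past) for past in range(TOTAL_ORDERS + 1)]


def expected_future_orders(weights):
    """Expected size of the future filter (in orders of magnitude) under the given weights."""
    total = sum(weights)
    return sum(w * future for w, (_, future) in zip(weights, HYPOTHESES)) / total


# Flat prior over how the filter is split.
prior = [1.0] * len(HYPOTHESES)

# SIA: weight by the population at our stage, which is proportional to the
# past pass rate 10**-past (the number of star systems is a common factor).
sia = [pr * 10.0 ** -past for pr, (past, _) in zip(prior, HYPOTHESES)]

print(expected_future_orders(prior))  # 6.0 with the flat prior
print(round(expected_future_orders(sia), 2))  # 11.89 after the SIA update
```

Because the total is pinned, every order of magnitude SIA removes from the past filter has to reappear in the future filter, so the expected future filter rises from 6 to almost 12 orders of magnitude in this toy model.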

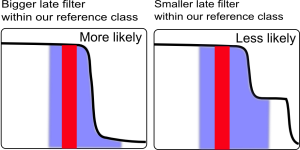

Figure 2: A larger filter between future stages in our reference class makes the population at our own stage a larger proportion of the total population. This increases the probability under SSA.

SSA can give a variety of results depending on the choice of reference class. Generally it directly increases expectations of both larger future filter steps and smaller past filter steps, but only for those steps between stages of development that are at least partially included in the reference class.

For instance, if the reference class includes all human-like things, perhaps it stretches from ourselves to very similar future people who have avoided many existential risks. In this case, SSA increases the chances of large filter steps between these stages, but says little about filter steps before us or after the future people in our reference class. This is basically the Doomsday Argument: larger filters in our future mean fewer future people relative to us. See Figure 2.
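
The Doomsday-style SSA update can be sketched with a simplified two-stage reference class. All populations and candidate pass rates below are hypothetical: the reference class is assumed to contain only our stage and one future stage, and each candidate future pass rate starts with an equal prior.

```python
# Hypothetical populations: our stage and one future stage make up the
# reference class. Past filters multiply both stages by the same factor,
# so they cancel out of the ratio below.
OUR_STAGE_POP = 1e10      # people at our stage per civilization (hypothetical)
FUTURE_STAGE_POP = 1e12   # people at the future stage per surviving civilization (hypothetical)

# Candidate future pass rates; a smaller rate means a bigger future filter.
FUTURE_PASS_RATES = [0.9, 0.5, 0.1, 0.01]


def ssa_likelihood(q):
    """SSA: chance of finding yourself at our stage = our stage's share of the reference class."""
    return OUR_STAGE_POP / (OUR_STAGE_POP + q * FUTURE_STAGE_POP)


def posterior(rates):
    """Posterior over future pass rates, starting from a flat prior."""
    weights = [ssa_likelihood(q) for q in rates]
    total = sum(weights)
    return [w / total for w in weights]


for q, p in zip(FUTURE_PASS_RATES, posterior(FUTURE_PASS_RATES)):
    print(f"future pass rate {q}: posterior {p:.3f}")
```

With these numbers the posterior on the 1% pass rate rises from the prior 0.25 to roughly 0.8. The past filter never enters the calculation, which matches the point that this reference class says little about filter steps before us.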

Figure 3: In the world with the larger early filter, the population at many stages including ours is smaller relative to some early stages. This makes the population at our stage a smaller proportion of the whole, which makes that world less likely. (The populations at each stage are a function of the population per relevant solar system as well as the chance of a solar system reaching that stage, which is not illustrated here).

With a reference class that stretches back to creatures at stages before us, SSA increases the chances of smaller past filter steps between those stages. This is because larger filters there make observers at almost all stages of development (including ours) less plentiful relative to at least one earlier stage of creatures in our reference class, which makes those at our own stage a smaller proportion of the reference class population. See Figure 3.
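
The same machinery can be pointed backwards. This minimal sketch assumes a hypothetical reference class made of just one earlier stage and our stage (the full picture in Figure 3 has more stages); the populations and candidate past pass rates are invented, with a flat prior over the rates.

```python
# Hypothetical setup: the reference class spans one earlier stage and our
# stage. Every star system at the earlier stage has EARLY_STAGE_POP members;
# a fraction p of them get past the filter and reach our stage, where they
# have OUR_STAGE_POP members. A smaller p means a bigger past filter.
EARLY_STAGE_POP = 1e9
OUR_STAGE_POP = 1e10

PAST_PASS_RATES = [0.5, 0.1, 0.01, 0.001]


def ssa_likelihood(p):
    """SSA: our stage's share of the reference class."""
    ours = p * OUR_STAGE_POP
    return ours / (EARLY_STAGE_POP + ours)


def posterior(rates):
    """Posterior over past pass rates, starting from a flat prior."""
    weights = [ssa_likelihood(p) for p in rates]
    total = sum(weights)
    return [w / total for w in weights]


for p, post in zip(PAST_PASS_RATES, posterior(PAST_PASS_RATES)):
    print(f"past pass rate {p}: posterior {post:.3f}")
```

Here the highest pass rate (the smallest past filter) ends up most probable, because a big past filter shrinks our stage's share of the reference class.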

The predictions of the different principles differ in details such as the extent of the probability shift and the effect of timing. However, it is not necessary to resolve anthropic disagreement to believe we have underestimated the chances of larger filters in our future. As long as we think something like one of the above three principles is likely to be correct, we should already update our expectations.

5 comments

comment by steven0461 · 2010-11-03T20:38:17.228Z

SIA/FNC doesn't just prove the universe is full of alien civilizations, it proves each solar system has a long sequence of such civilizations, which is totally evidence for dinos on the moon.

↑ comment by XiXiDu · 2010-11-04T09:21:04.636Z

Well, I'm not sure what to think, as I'm not yet at the point that would allow me to read the paper, neither regarding probability theory nor the concepts in question. But the conclusion seemed important enough for me to ask, even more so as Robin Hanson seems to agree and Katja Grace is a SIAI visiting fellow. I know that doesn't mean much, but I thought it might not be totally bogus after all (or at least be useful for showing how not to use probability).

That dinos on the moon link is awesome. That idea has been featured in one Star Trek Voyager episode too :-)

↑ comment by jfm · 2010-11-04T14:00:31.069Z

The novel Toolmaker Koan by John McLoughlin doesn't just feature dinosaurs on the moon, it features a dinosaur generation-ship held in the outer solar system by an alien machine intelligence. A ripping good yarn, and a meditation on the Fermi Paradox.

↑ comment by steven0461 · 2010-11-04T20:51:56.358Z

I'm not saying it's totally bogus. I do have a ton of reservations that I don't have time to write up.

comment by XiXiDu · 2010-11-04T16:45:34.622Z

According to SIA, averting these filter existential risks should be prioritized more highly than averting non-filter existential risks such as those in this post. So, for instance, AI is less of a concern relative to other existential risks than otherwise estimated.