Thought as Word Dynamics

post by Paul Jorion · 2024-03-30T10:19:57.325Z


Paul J. Jorion, Université Catholique de Lille

paul.jorion@univ-catholille.fr

The following is a manuscript written in the year 2000 meant to become a volume 2 of my French book Principes des systèmes intelligents published in 1989 by Masson in Paris.

The model presented here has been built over a number of years from several angles. It is truly multi-disciplinary in its concept as the evidence which has been gathered to support its plausibility has five main components: (i) mathematical objects that have been explored by the author in another context as part of his anthropological research on modelling kinship networks and so-called "primitive mentality" types of reasoning, (ii) intensive and extensive logic, (iii) linguistics, (iv) psychology — including Freudian metapsychology, (v) the introspective part of philosophy that amounts to twenty-five centuries of speculative cognitive science.

The ambition here is to provide a framework for speech acts, being specific enough about its statics and dynamics that it is testable as an Artificial Intelligence application (i.e. can be written as source code). The test began many years ago when, being part of British Telecom's "Connex" Project, I designed ANELLA: an "Associative Network with Emergent Logic and Learning Abilities" (1987-1990). The project is currently being revived as SAM: Self-Aware Machines (www.pribor.io).

I. Overall principles

1. Speech acts are generated as the outcome of a dynamics operating on a network

2. This network is stored in the human brain

3. A talking subject experiences the dynamics as being emotional or "affective"

II. Statics

4. The Network comprises a subset of the words (the "content words") of a particular natural language

5. The individual unit of the Network is a word-pair

6. Each such word-pair has at any time an affect value attached to it

7. The word-pairs and their affect value result from Hebbian reinforcement

8. The Network has two principles of organisation: hereditary and endogenous

9. The hereditary principle is isomorphic to the mathematical object called a "Galois Lattice"

10. The endogenous principle is isomorphic to the mathematical object called a "P-graph"

11. The endogenous principle is primal

12. The hereditary principle is historical: it allows syllogistic reasoning and amounts to the emergence of "reason" in history

III. Dynamics

13. The skeleton of each speech act is a path of finite length in the Network

14. A speech act is the outcome of several "coatings" on a path in the Network

15. The dynamics of speech acts is a gradient descent in the phase space of the Network submitted to a dynamics

16. The utterance of a speech act modifies the affect values of the word-pairs activated in the act

17. The gradient descent re-establishes an equilibrium in the Network

18. Imbalance in the affect values attached to the Network has four possible sources

- Speech acts of an external origin, heard by the subject

- Bodily processes experienced by the speaking subject as "moods"

- Speech acts of an internal origin: thought processes as "inner speech" or hearing oneself speak (being a sub-case of 2.)

- Empirical experience

19. In the healthy subject each path has inherent logical validity; this is a consequence of the topology of the Network

20. Neurosis results from imbalance of affect values on the Network preventing normal flow (Freudian "repression")

21. Psychosis amounts to defects in the Network's structure (Lacanian "foreclosure")

IV. Implications

22. Speech generation is automatic and only involves the four sources mentioned above

23. Speech generation is deterministic

24. There is no room for any additional "supra-factor" in speech act generation than the four mentioned above

25. One such superfluous "supra-factor" is "intentionality" triggered by consciousness or otherwise

I. Overall principles

1. Speech acts are generated as the outcome of a dynamics operating on a Network

The general hypothesis that "speech acts are generated as the outcome of a dynamics operating on a network" is specific when it states that the data, the "words", summoned in the generation of speech acts are structured in a network. It is also informative when it distinguishes two parts to the mechanism: a "statics", being the Network itself, and a "dynamics" (so far unqualified) operating on it. It is however somewhat trivial in many other respects: one may wonder indeed if there is any logical alternative at all to the way the hypothesis is here formulated. For example, speech performance unfolds in time and is therefore of necessity a dynamic process; also, any dynamics necessarily operates on a substrate constituting its "statics". In the case of speech performance the statics automatically comprises the building blocks of speech acts, i.e. the words that get combined sequentially into speech acts.

About the nature of the statics: unless the full complexity of speech performance is assigned to its dynamics, some of its structure is bound to reflect the static organisation of the data. Admittedly, there is no compelling reason to consider that the data are structured at all: one cannot even rule out the converse hypothesis that speech performance results from an extremely complex dynamics operating on unstructured data, sentences being generated by picking individual words on demand from a repository where they are randomly stored. At the same time, this converse hypothesis would suppose a highly uneconomical method for dealing with the task of generating a sequentially organised output. This would be unexpected, as it has been observed that as soon as biological processes reach some level of complexity, the complexity spreads between the substrate and the dynamics operating on it (the process of "emergence", due to self-organisation).

If data ("words") are organised in one manner or another in their repository, one avenue for modelling such organisation is to represent it by the mathematical object known as a graph (a set of ordered pairs). A connected graph (we'll show that the connectedness of the graph is a condition for the rationality of the speech acts uttered) is what one refers to in non-technical terms as a "network". In other words, saying that the dynamics of speech performance operates on a network amounts to saying simply that its substrate of words is "in some way" and "in some degree" organised. Saying that this Network is connected amounts to saying that the full lexicon of the language is available whenever a clause is generated. [As will be shown below (section 21), in psychosis, only part of the lexicon is available at any one time for speech performance. Neurosis (section 20) corresponds to the less dramatic circumstances when individual words, and therefore particular paths in the Network, are inaccessible, the whole lexicon remaining accessible, though sometimes only through convoluted and cumbersome routes.]
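The notion of connectedness used here can be made concrete with a small sketch. The code below is only an illustration, not the ANELLA implementation: the toy lexicon, the function names and the choice to treat word-pairs as undirected links are all assumptions made for the example. It checks whether every word can be reached from any other, and shows how removing a single word-pair splits the lexicon into parts that can no longer reach each other.

```python
from collections import defaultdict, deque


def build_network(word_pairs):
    """Build an adjacency map from (word, word) pairs.

    Assumption: pairs are treated as undirected links for the purpose
    of testing connectedness.
    """
    adjacency = defaultdict(set)
    for first, second in word_pairs:
        adjacency[first].add(second)
        adjacency[second].add(first)
    return adjacency


def reachable_from(adjacency, start):
    """Return every word reachable from `start` by breadth-first search."""
    seen = {start}
    queue = deque([start])
    while queue:
        word = queue.popleft()
        for neighbour in adjacency[word]:
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return seen


def is_connected(adjacency):
    """The network is connected when one word reaches the whole lexicon."""
    if not adjacency:
        return True
    start = next(iter(adjacency))
    return reachable_from(adjacency, start) == set(adjacency)


# Hypothetical toy lexicons, invented for illustration only.
healthy_pairs = [("rose", "flower"), ("rose", "red"),
                 ("violet", "flower"), ("violet", "blue")]
broken_pairs = [("rose", "flower"), ("rose", "red"), ("violet", "blue")]

healthy = build_network(healthy_pairs)
broken = build_network(broken_pairs)

print(is_connected(healthy))
print(is_connected(broken))
print(sorted(reachable_from(broken, "violet")))
```

In the second lexicon the pair linking "violet" to "flower" is missing, so a path starting from "violet" can only ever reach "blue": only part of the lexicon is available from that starting point.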

2. This Network is stored in the human brain

It is clear that speech acts are produced from within a talking subject, as it is the mouth that utters them. This does not imply out of necessity that the Network mentioned above, along with its data, is stored within the talking subject. It can however be inferred that such is indeed the case, essentially for lack of a viable alternative.

Suppose that the Network were located elsewhere than within the talking subject, meaning that the substrate to speech acts lies outside his body. Then there would need to be for the talking subject some means through which he or she communicates with this outer Network, either that this external source acts as a sender and the speaker as a receiver, or that the talking subject can tap the source from a distance.

Quite interestingly, it is a distinctive feature of those individuals that the majority of humans regard as mentally deranged that they postulate the existence of such an outside source for speech acts and claim that their words, or their inner speech, is being interfered with by an obtrusive sender. [We will show below (section 21) why it should be expected from a network whose connectedness is broken that it assumes that it cannot be itself the source of the speech performance it utters. With connectedness lost, the disconnected parts of the network have ceased to communicate, they generate speech independently: the emergence of speech acts from another part of the network is perceived as being from an external source by every other part.]

If there is an external source to speech performance, whether it is acting as a sender or constitutes a repository accessed by the talking subject, there should be some circumstances where the communication is broken or at least impaired because of some physical obstacle interfering with it. Such impairments can be observed in the case of any electromagnetic waves: only gravitational waves are supposedly immune to blockage, but their existence remained hypothetical at the time of writing. Nothing of the sort is observed with speech performance: individuals swimming at the very bottom of the ocean, walking on the moon, or held prisoner in a lead-coated concrete bunker don't show any reduction in their capacity for speech. [Their speech performance might be impaired by the circumstances, but in this case other causes are more likely candidates than distance from a sender: lack of oxygenation of the brain, sensory deprivation, etc.]

It is therefore reasonable to assume that the Network acting as the substrate for speech performance, i.e. containing words, is internal to the subject.

Once admitted that the Network is located within the talking subject, its likely container can only be the brain. Indeed lesions to the brain, whether accidental or surgically induced, as well as other types of interference, do impair speech performance in very general or in very specific ways. There is by now an abundant literature, initiated by the likes of Broca and Wernicke, showing which forms of aphasia or agnosia, i.e. impairments of various kinds in speech performance or in thinking, specific lesions of the brain induce, and how they interfere with its functioning (the works of Sacks, and of Damasio and Damasio, have popularised such accounts). Let us notice however that such observations, taken in isolation, are insufficient to invalidate the hypothesis of the externality of the Network: it could be indeed that lesions simply hinder reception from an external sender, or impair the brain's capacity for tapping an outer repository. It is only once admitted as most plausible that the body of the talking subject holds the Network, being the substrate for speech performance, that the brain proves to be the probable location for it.

Beyond this deductive probability, is there any further plausibility for the brain containing the type of network we're having in mind? There is indeed: the brain is known to contain a network constituted of nerve cells or neurones. In the coming pages we will constantly check if the Network we're talking about here and the one made of nerve cells can possibly be the same.

3. A talking subject experiences the dynamics as being "affective"

A thoroughly "physical" account of the objective dynamics of speech performance will be provided later. In the meantime we indicate that as far as the talking subject is concerned, its subjective experience of the dynamics of speech performance is, from the initiation of a speech act to its conclusion, one of an emotional, or "affective", nature. The view commonly held is that emotions hinder the expression of rational thinking. It is true that beyond a certain threshold, emotion may turn into various forms of disarray and impair speech performance. In normal circumstances however, the "expression of one's feelings" (which is the spontaneous way people describe the motive behind their speech acts) results in rational discourse. The reason is that the Network underlying speech performance is structured, channelling speech performance along branching but constrained paths, in such a way that the expression of one's feelings engenders of necessity one or more series of meaningful sentences.

People claim they speak to "express their feelings", "to relieve themselves", "to get something out of their system", and such is indeed the subjective experience of speech performance: talking subjects experience a situation ranging from minor to serious dissatisfaction (the causes of which we'll investigate) and "talk their heart out" until, having reached the end of an outburst of speech, they feel relieved, once again in possession of a "satisfied mind". Until, that is, some renewed source of minor irritation launches the dynamics all over again. We will show that from an objective point of view the dynamics is no doubt better described as the reaching of a potential well within a word-space under a minimisation dynamics, but it can also justifiably be described as an "affective dynamics", as for the talking subject the process is experienced as one of emotional relief. Also, the parameters determining the dynamics of the gradient descent within the word-space are the "affect" values associated with the words in the "word-space" that the Network constitutes.
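A deliberately crude sketch may help fix ideas about "talking until relieved". It is not the model's actual dynamics, which are only announced at this point: the greedy rule, the `relief` factor, the `threshold` and all affect values below are assumptions invented for the illustration, and the walk is a simple stand-in for a gradient descent rather than a literal one. Starting from a word, the subject repeatedly utters the most charged word-pair leaving the current word; each utterance discharges part of that pair's affect, and speech stops once no sufficiently charged pair remains.

```python
def total_tension(affect):
    """Sum of all affect values: the quantity this toy dynamics lowers."""
    return sum(affect.values())


def speak(affect, start, threshold=0.1, relief=0.5, max_steps=20):
    """Greedy discharge walk over charged word-pairs.

    `affect` maps (word, word) pairs to a non-negative tension value.
    Assumed rule: uttering a pair multiplies its tension by `relief`.
    """
    path = [start]
    current = start
    for _ in range(max_steps):
        # Pairs leaving the current word that still carry enough tension.
        candidates = {pair: value for pair, value in affect.items()
                      if pair[0] == current and value > threshold}
        if not candidates:
            break  # nothing left to discharge here: a local "well"
        pair = max(candidates, key=candidates.get)
        affect[pair] *= relief  # the utterance relieves part of the affect
        current = pair[1]
        path.append(current)
    return path


# Hypothetical affect values, invented for illustration only.
affect = {
    ("duke", "princess"): 0.9,
    ("princess", "morning"): 0.6,
    ("duke", "interest"): 0.3,
    ("morning", "duke"): 0.05,
}

tension_before = total_tension(affect)
path = speak(affect, "duke")
tension_after = total_tension(affect)
print(path, tension_before, tension_after)
```

The walk ends as soon as the remaining pairs at the current word fall below the threshold, which is the toy counterpart of the subject feeling relieved; a later change to the affect values would start it again.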

II. Statics

4. The Network comprises a subset of the words (the "content words") of a particular natural language

In Indo-European languages there are two types of words, and every speaker has a very strong intuitive feeling for the difference. We have no difficulty offering a definition for words of the first type: "a rose is a flower that has many petals, often pink, a strong and very pleasant fragrance, a thorny stem", etc.; "a tire is a rubber envelope to a wheel, inflated with air", etc. With the second type, we're in real trouble: "the word 'nonetheless' is used when one wishes to suggest that while a second idea may, at first sight, look contradictory to one first expressed, it is however the case", etc. When trying to define a word like "nonetheless" I typically cannot bring myself to say that it "means" something; I'd rather claim, as I did above, that "it is used when...", and revealingly I am forced to express this usage by quoting, if not a true synonym of it, at least (as with "however") a word which is used in very similar contexts. The first type of words are often called "content words", the second "framework-" or "structure words". [Not every language deals with such distribution of "content-" and "framework-words" in a similar way. Languages like Chinese and Japanese are much more sparing in their use of "framework-words" than Indo-European languages are. Archaic Chinese for one had very few of those, and meaning emerged essentially from the bringing together of "content-words" without further qualification (if needed with added, strategically set pauses).]

Dictionaries have an easy time with the first and a rotten time with the second, doing as was done here with "nonetheless": resorting to the cheap trick of referring to a closely related word, the meaning (the usage) of which the reader is supposedly more familiar with. The British philosopher Gilbert Ryle interestingly called the first type "topic-committed" and the second "topic-neutral". He wrote: "We may call English expressions 'topic-neutral' if a foreigner who understood them, but only them, could get no clue at all from an English paragraph containing them, what that paragraph was about" (Ryle 1954: 116). In the technically unambiguous language used by the medieval logicians, the first were called "categoremes" and the second, "syncategoremes". [Ernest Moody sums up the issue in the following manner: "The signs and expressions from which propositions can be constructed were divided by the medieval logicians into two fundamentally different classes: syncategorematic signs, which have only a logical or syntactic function in the sentence, and categorematic signs (namely the 'terms' properly speaking), which have an independent meaning and can be the subjects or the predicates of categorical propositions. One may cite the definitions that Albert of Saxony (1316-1390) gave of these two classes of signs, or of 'terms' in the broad sense: 'A categorematic term is one which, considered with respect to its meaning, can be the subject or the predicate [...] of a categorical proposition. For example, terms such as "man", "animal", "stone" are called categorematic because they have a specific and determinate signification. A syncategorematic term, for its part, is one which, considered with respect to its meaning, cannot be the subject or the predicate [...] of a categorical proposition. To this kind belong terms such as "every", "no", "some", etc., which are called signs of universality or of particularity; and similarly, signs of negation such as the negative "not", and signs of composition such as the conjunction "and", and disjunctions such as "or", and exclusive prepositions such as "other than", "only", and words of this sort' (Logic I). In the fourteenth century it became customary to call the categorematic terms the matter (the content) of propositions, and the syncategorematic signs (together with the order and arrangement of the constituents of the sentence) the form of propositions" (Moody 1953: 16-17).]

Intuitively speaking we can understand this as meaning that "content-words" are essentially concerned with telling us what is the category, the "kind", the "sort" of thing we're talking about, while the second type of words, the "framework-words", essentially play a syntactic role, a "mortar" type of role, which would explain why we have trouble explaining what they "mean" and feel more comfortable describing how they're being "used".

The Network we're talking about is made of "content-words": these are the building blocks of a network where roses connect with red and violets with blue. The other words, the "framework-words", are not part of this Network; they're stored in a different manner, and they're summoned to make the "content-words" stick together, as a mortar of a particular kind that will make these words, or these combinations of words, work together within a clause. Recall what was said in the attempt to give a definition of "nonetheless": that it is used when the two states of things which are brought together may seem at first sight to be contradictory. In order to ease the clash, to relieve the affective discomfort that comes when contradictory states-of-affairs are brought together, a word like "nonetheless" is pasted between the belligerents. With "nonetheless", the states-of-affairs evoked come from distant places in meaning-space with discrepant electrical charges: bringing them together creates an imbalance that needs to be resolved. The talking subject who's connecting in his speech the states-of-affairs that are on either side of the "nonetheless" cringes. So he stuffs between them a "contradiction insulator", a "compatibility patch" like "nonetheless". And everything is once again fine. "The Duke knew that his best interest and the Princess's too was that he wouldn't try to see her again. Nonetheless, the following morning...". The "nonetheless" relieves my worry: I won't care for that Duke any more. If he's that kind of fool, well, good for him! What do I care!

"Framework-words" are part of what we will call the "coatings": the coatings that make out of the words found in a finite path along the Network a proper sentence.

5. The individual unit of the Network is a word-pair

As we said, mathematically speaking, a graph is a set of ordered pairs. It can be decomposed in elementary units of pairs, say "cat" and "feline", and each word can be part of more than one of such pairs: "feline" may be associated again, this time with "mammal", and "cat" with "whiskers", etc. Once admitted that what we're talking about is a network it becomes self-evident that its individual units are "word-pairs". It is however possible to go well beyond this trivial observation.

The origin of the medieval notion of the "categoreme" is in Aristotle's short treatise on words called "Categories". Here, the philosopher is only concerned with those words that can act as either a subject or a predicate in a sentence. "Blue" is predicated of the subject "violets" when I say that "violets are blue". "Colour" is predicated of "blue", the subject, when I say that "Blue is a colour". It is clear that the words so distinguished as being able to act as subject or predicate amount to those I called earlier "content-words". Why should they be called "categoremes"? Because, Aristotle argues, they can be used in ten different ways, with ten different functions, because there are ten points of view from which "stuffs" can be looked at, the "various meanings of being"; these he calls categories. Here is his explanation:

"Expressions which are in no way composite signify substance, quantity, quality, relation, place, time, position, state, action, or affection. To sketch my meaning roughly, examples of substance are 'man' or 'the horse', of quantity, such terms as 'two cubits long' or 'three cubits long', of quality, such attributes as 'white', 'grammatical'. 'Double', 'half', 'greater', fall under the category of relation; 'at the market place', 'in the Lyceum', under that of place; 'yesterday', 'last year', under that of time. 'Lying', 'sitting', are terms indicating position, 'shod', 'armed', indicate state; 'to lance', 'to cauterise', indicate action; 'to be lanced', 'to be cauterised', indicate affection. No one of these terms, in and by itself, involves an affirmation; it is by the combination of such terms that positive or negative statements arise. For every assertion must, as is admitted, be either true or false, whereas expressions which are not in any way composite such as 'man', 'white', 'runs', 'wins', cannot be either true or false" (Aristotle, Categories, IV).

The most important part of this passage is its final words: isolated terms, terms taken on their own, cannot be regarded as either true or false: "it is by the combination of such terms that positive or negative statements arise". One can even go one step further: does a term in isolation mean anything? "Of course", one is tempted to say; indeed, as I said earlier, we're at no loss when asked to define a term like "rose". We gave as an example of doing this: "a rose is a flower that has many petals, often pink, a strong and very pleasant fragrance, a thorny stem". We spontaneously assigned the rose the category of substance, of being a flower; we assigned quantity to its petals for being many; we attributed the quality of being pink to its petals, etc. In other words, we brought the rose out of its isolation by connecting it with other words in sentences of which, as Aristotle observed, it will then be possible to say if they are true or false.

Out of the examples that Aristotle mentions, it is blatant that "double", "half", "greater", "two cubits long", "lying", "sitting", "shod", "armed", "runs", "wins" have no meaning unless they are said, predicated, of something else. But after a moment of reflection it becomes obvious that this applies to the other words too: "man", "horse", "white". As we've seen when looking at what is called the definition of a rose, they also need to be said of something to come alive. In a passage of one of his dialogues, The Sophist, Plato has the Stranger from Elea making an identical point: "The Stranger: A succession of nouns only is not a sentence, any more than of verbs without nouns. [...] a mere succession of nouns or of verbs is no discourse. [...] I mean that words like 'walks', 'runs', 'sleeps', or any other words which denote action, however many of them you string together, do not make discourse. [...] Or, again, when you say 'lion', 'stag', 'horse', or any other words which denote agent, neither in this way of stringing words together do you attain to discourse; [...] When any one says 'A man learns', should you not call this the simplest and least of sentences? [...] And he not only names, but he does something, by connecting verbs with nouns; and therefore we say that he discourses, and to this connection of words we give the name of discourse" (Plato, The Sophist). [Griswold notices that, apart from Parmenides, the anonymous stranger is the single figure in all the dialogues who speaks like a full-blown philosopher; he observes also that while Socrates is present in The Sophist he remains almost mute (Griswold 1990: 365).]

Assuming that there is in the brain a Network being the substrate for speech performance, what would be its element, the smallest unit to be stored in such a Network? We hold that it would be the "word-pairs" just described, instead of words in isolation. Synaptic connections seem the perfect locus for such storage: the place where the building blocks of the brain's biological network, the neurones, come together. Why not the isolated word? Because, as Aristotle saw it, "word-pairs" are true or false and, as we will see next, something being true or false is the first condition for it having an affective value, i.e. what sets in motion the dynamics of speech performance.

6. Each such word-pair has at any time an affect value attached to it

The Stoic logicians held that every representation has an author and that no representation should be considered separately from its author's assent. [In Imbert's words: "According to Sextus [Empiricus] a true representation is one 'of which it is possible to make a true assertion in the present moment'. ... That is to say that interpretation needs to take into account not only the determination of the action as to its occurrence and its objects, but also the determination of the action as to its witness" (Imbert 1999: 113).]

All utterances have an author who commits his person in varying degrees to what is said by him, while his quality (status, competence) determines for other locutors to what extent they can question these utterances and negotiate their content as (prospective) shared knowledge. To each representation that we hold we assent in a specific manner: we don't "believe" as strongly in all we know, we're not prepared to put our reputation at stake in a similar way with all we feel like saying. The way we express our assent to the words we utter expresses the degree in which we identify with them, staking our support with our person — spanning from the non-committal report of a fact in a quotation to the expression of a genuine belief.

The truth or falsity of a word-pair (with a number of possible degrees between these polar values) is stored along with it. Wittgenstein gives the following example: "Imagine that someone is a believer and says: 'I believe in the Final Judgement', and I reply 'Well, I'm not so sure. It is possible'. You would say there's an abyss between our views. Should he say 'It's a German aeroplane flying above us', and I would say 'It's possible. I'm not too sure', you would say that our views are pretty close" (Wittgenstein 1966: 53). The reason why dissenting slightly from the opinion expressed by someone about the Final Judgement or about the presence of a German aeroplane reveals in one case a hostile rebuff and in the other a minor difference resides in the affect values attached to either belief by the one who holds it. The strong identification of a speaker with his views on the Final Judgement renders any questioning of his opinion a rejection; conversely, his weak adherence to the idea that there is a German plane above him makes any challenge of the view innocuous.

It is the association of affect values with word-pairs that led to the development of the psychotherapeutic technique of "induced association": one word is proposed as an inductor and the subject is asked to come up, "refraining as much as possible from thinking", with another word as a response. The condition that the association should be uninhibited by conscious censorship is supposed to ensure that the first word-pair retrieved is the one with the highest emotional value for the subject. In some early experiments by Jung and Riklin, one subject would associate "Father" with "drunk" and "piano" with "horrible". Jung commented: "the cement that holds together such complex [the word-pair] is the affect which these ideas hold in common" (Jung 1973 [1905]: 321).

The first principle of association is similarity in affective value. Memories, i.e. memory traces are linked to each other through the sensations which compose them and which they are sharing. Such network links allow recollection: the evocation of a memory. Any memory has the potential to represent itself (understood as both presenting itself anew and as "representation") in its whole, i.e. as a configuration of sensations, which were initially perceived simultaneously. Every sensation has a double ability: that of getting imprinted as a memory, i.e. as a configuration of correlated simultaneous sensations, and that of evoking — on a stage traditionally called "imagination" — old memories to which it partakes. Memory allows therefore a sensation to generate within imagination a deferred representation of its former instances. For example, the trumpeting of an elephant evokes within imagination the image of the animal as well as the fear it inspires when it charges.

It is such a network of affect values linked to the satisfaction of our basic needs, and deposited as layered memories of appropriate and inappropriate response, that allows "imagination" to unfold: to stage simulations of attempts at solution. What makes "The Lion, the Witch and the Wardrobe" an appealing title? That in the context of a child's world the threesome brings up similar emotional responses and are therefore conceptually linked (Jorion 1990a: 75-76). The classification of birds by the Kalam of New Guinea is a good example of how emotional association is the prototypical manner in which "stuffs" are associated because they elicit a similar emotional response: "…birds of mystical importance are likely to include representatives of two broad groups: those that normally maintain a considerable distance from man (many may be relatively rare) and which are selected for complex reasons, but, when encountered unexpectedly, are likely to be interpreted in highly mystical ways; and those that interact regularly and spontaneously with men and whose mystical significance derives mainly from the nature of the interactions. In the latter category are birds who call at men in the gardens and are taken as manifestations of ghosts, including some seen as bringing messages due to their chattering in a human-like manner. In the former category are birds who unpredictably and mysteriously startle men and disappear elusively, and are taken to be witches. In addition, birds of mystical significance are often the most salient and numerous species in the classificatory groups in which they occur" (Bulmer 1979: 57).

To any particular subject, a word like "apple" corresponds to the acoustic imprint "apple" and to the visual imprint of the word "apple" composed of the letters a-p-p-l-e. It is generally assumed that a subject would have an emotional response to a word like "apple". But what the technique of "induced association" shows is that words act in word-pairs. People therefore don't have any particular feelings about "apple": it all depends on the apple. The affect value attached to "apple of my eye" is likely to be different from that attached to the apple that Eve handed to Adam, while the apple in the "apples and pears" that one is not supposed to compare is likely to be pretty indifferent to most of us. These various apples sure enough are all called "apple", but apart from this identity in sound which the medieval logicians called "material", they don't share much else: emotional response to such various apples is too different for their identity as acoustic imprints to be more than a superficial likeness rather than a substantive one. ["Material" is the word applied by the medieval logicians to such likeness in sound or in writing: material as opposed to substantial that would apply to a likeness in meaning.]

The elements in the Network are therefore likely to be the word-pairs where apple meets sometimes "my eye", sometimes "Eve" and sometimes "pears". Each of these has an identity of its own, a very special affective value attached to it. It is this affect value that holds the word-pair together - like the forces holding the quarks of an elementary particle - and explains why each half acts as a handle for the other half of the word-pair. The stronger the affect value, the more inseparable the halves of the word-pair. It is the strength of the association that "induced association" exploits to draw a psychological diagnosis.

Practically, the assumption that there is affectively speaking more than one type of apple for a speaking subject suffices to relieve ambiguity, which is a classical difficulty in a sub-field of artificial intelligence known as "knowledge representation". How can a piece of software distinguish the fruit "kiwi" from the bird "kiwi"? It cannot, as long as computers don't attach affect values to word-pairs. [Unless someone does it on their behalf. This is what I did with ANELLA: I simulated affect values assigned to word-pairs and modified dynamically through speech performance (Jorion 1990b).]

If elephants are no more than elephants, like an apple, irrespective of what kind of apple it is, there is no way to disambiguate a sentence like "I saw an elephant flying over New York". But if elephants show up in distinct word-pairs, the ambiguity is automatically relieved: the elephants in New York's Zoo do not belong to the same word-pairs as Dumbo the flying elephant.
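The disambiguation argument can be sketched in a few lines. This is only an illustration under stated assumptions, not a description of ANELLA: the `AFFECT` table, its numeric values and the `best_partner` helper are all hypothetical. The point is simply that once "elephant" lives in distinct word-pairs, the context word selects among them.

```python
# Hypothetical affect values attached to word-pairs (illustrative numbers only).
AFFECT = {
    ("elephant", "zoo"): 0.6,
    ("elephant", "Dumbo"): 0.7,
    ("Dumbo", "flying"): 0.9,
    ("zoo", "New York"): 0.5,
}

def pair_value(a, b):
    """Affect value of an unordered word-pair; 0.0 when the pair is not stored."""
    return AFFECT.get((a, b), AFFECT.get((b, a), 0.0))

def best_partner(word, candidates, context):
    """Pick the candidate whose pairs with `word` and with the context carry most affect."""
    def score(candidate):
        return pair_value(word, candidate) + sum(
            pair_value(candidate, ctx) for ctx in context
        )
    return max(candidates, key=score)

# "flying" pulls the elephant towards Dumbo, "New York" towards the zoo.
flying_reading = best_partner("elephant", ["zoo", "Dumbo"], context=["flying"])
city_reading = best_partner("elephant", ["zoo", "Dumbo"], context=["New York"])
```

With these assumed values, the same word "elephant" resolves to a different word-pair depending on which other words are active.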

7. The word-pairs and their affect value result from Hebbian reinforcement

The most common reason, apart from identity in affect value, for the association of words into word-pairs is proximity. This may come under various guises: resemblance covering the full range of each of the senses, contiguity in space or contiguity in time as provided by simultaneity or consecution. The two being recurrently evoked together, Hebbian reinforcement ensures that the connection between these two begins to "stick", i.e. that they are stored in conjunction in long term memory. So, kin get together; or the correlated parts of a single body where each part soon acts as a sign for the others: the tusks come along with the trunk; the hammer with the anvil, lightning and thunder; synonyms are closely related, and also and to no obvious purpose, at the "material" level, homonyms: trunk (torso) and trunk (suitcase) and trunk (snout), etc.

How do affect values get assigned to word-pairs? We hold that affect values are the way a talking subject experiences the strength of the association of the elements in a word-pair. Why would Jung's patient respond "Drunk" to the stimulus "Father"? Because there has been Hebbian reinforcement: because the sorry story for this person was that her father was recurrently drunk. Not every association though is "autobiographical" (see Rubin 1986), i.e. reflecting an individual's special circumstances: most are cultural, meaning that the recurrence of the same experiences for all members of the same cultural environment makes the association universally shared for them. Some exposure is of course not so much experienced as simply "found there" as an existing feature of the lexicon of the language a subject has learned, i.e. a fund shared by speakers of the same language.
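A minimal sketch of Hebbian reinforcement of word-pairs follows. The saturating update rule, the `rate` parameter and the toy episodes are assumptions made for illustration; the text only commits to the idea that recurrent co-evocation strengthens the link.

```python
from collections import defaultdict
from itertools import combinations

def reinforce(strength, episode, rate=0.1):
    """Strengthen every word-pair whose two words are evoked together in one episode.

    Assumed rule: each co-occurrence closes a fraction `rate` of the gap to 1.0,
    so the strength grows with repetition but saturates below 1.0.
    """
    for a, b in combinations(sorted(set(episode)), 2):
        strength[(a, b)] += rate * (1.0 - strength[(a, b)])
    return strength

strength = defaultdict(float)
# Hypothetical history: "father" and "drunk" recur together, "garden" appears once.
episodes = [["father", "drunk"]] * 5 + [["father", "garden"]]
for episode in episodes:
    reinforce(strength, episode)

strongest = max(strength, key=strength.get)
```

Under these assumptions the pair ("drunk", "father") ends up far stronger than ("father", "garden"), which is the kind of imbalance that "induced association" would pick up.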

The learning process, leading to the storage of a word-pair, is driven by punishment and reward. The process is clearly visible in language acquisition where the child (or any subject learning a new language) tests a word recently heard (not yet learnt though) within a word-pair, on the look-out for either approval or frowned eyebrows (the latter reflecting in the listener the clash of conflicting affect values that mismatched word-pairs engender). Generally speaking, Grice's views on relevance in conversation refer to the art of generating approved-of word-pairs (Grice 1975; 1978). Similarly, Wittgenstein's "the meaning is the use" amounts to "the meaning is the set of word-pairs" where a particular word is represented (Wittgenstein [1953] 1963: § 138-139).

8. The Network has two principles of organisation: hereditary and endogenous

a) The Chinese way: "penetrable" vs "impenetrable" stuffs

Commentators have been divided over the centuries about where Aristotle's categories come from.

I remind here what they are in Aristotle’s words:

"Expressions which are in no way composite signify substance, quantity, quality, relation, place, time, position, state, action, or affection. To sketch my meaning roughly, examples of substance are 'man' or 'the horse', of quantity, such terms as 'two cubits long' or 'three cubits long', of quality, such attributes as `white', 'grammatical'. 'Double', 'half ,’greater', fall under the category of relation; 'at the market place', 'in the Lyceum', under that of place', 'yesterday', 'last year', under that of time. 'Lying', 'sitting', are terms indicating position, 'shod', 'armed', indicate state; `to lance', `to cauterise', indicate action; `to be lanced', `to be cauterised', indicate affection. No one of these terms, in and by itself, involves an affirmation; it is by the combination of such terms that positive or negative statements arise. For every assertion must, as is admitted, be either true or false, whereas expressions which are not in any way composite such as 'man', `white', 'runs', 'wins', cannot be either true or false" (Aristotle, Categories, IV).

Some hold that these exist in the physical world: according to them these ten manners of making word-pairs reflect the way the world presents itself to our senses (Imbert 1999); some have said instead that the categories reflect the way our mind operates (Sextus Empiricus); some others still hold that the categories simply reflect the grammar of the ancient Greek language and that this is where Aristotle found them (Trendelenburg quoted by Vuillemin 1967; Benveniste 1966).

Whatever the case, one of these categories has a sure footing in the physical world: that of "substance". There are two aspects to a "substance": its matter and its shape. Aristotle distinguishes "primary substances" and "secondary substances". Primary substances are particular entities such as individual men or horses ("neither asserted of a subject nor present in a subject"); secondary substances are such as the species or the genera wherein primary substances are included: iron is a primary substance, metal a secondary substance ("asserted of a subject but not present in a subject"). All other categories are "present in a subject", and some "asserted of a subject" as well. Sometimes it is also said that the species is the primary substance and the genus, the secondary: Oscar is a "man", a primary substance, and an "animal", a secondary substance. In any particular location there can only be one primary substance at the same time. If Peter is sitting on the chair, Paul can sit on his knees but he can't sit at the very same location as Peter. Unlike what happens with primary substances there is no difficulty in bringing together various secondary substances within the same physical location: when Oscar is alone in the kitchen, there is still there, simultaneously, a man, a biped, a mammal, a vertebrate, an animal and a creature.

Distinguishing things between being "penetrable" and "impenetrable" was central to archaic Chinese thought. [René Thom, the inventor of "catastrophe theory", has proposed a "semio-physics" where the concepts of "pregnancy" and "saliency" are central; these correspond broadly speaking to "penetrable" and "impenetrable": "It is therefore possible to regard a pregnancy as an invasive type of fluid that spreads within the field of the salient forms perceived, the salient form playing the role of a 'crack' in reality through which the invasive fluid of the pregnancy percolates. Such propagation takes place under two modes: 'propagation through contiguity', 'propagation through similitude', which is the way that Sir James Frazer, in The Golden Bough, classified the magical actions of primitive man. […] contiguity and similitude enlist the respective topology and geometry of our "macroscopic" space; seen this way, there is in Pavlovian conditioning an underlying geometric base" (Thom 1988: 21)].

In Chinese thought, there would be legitimate ways for combining the penetrable and the impenetrable, like "stone" and "hard", but also the impenetrable with the impenetrable, and this, unlike what happens in Western thought, would be the way that broader types, higher level concepts, are created. For instance "ox-horse" allows one to compose the concept of "traction animals", "water-mountain", that of "nature". One can add up two impenetrable names to make a super-ordinate category. A classical paradox of early Chinese logic, Kung-sun Lung's claim that "White horse is no horse", derives from the suggestion that higher level concepts could derive similarly from combining penetrable with impenetrable (Hansen 1984; Graham 1989). [To be developed in section 11]. Aristotle's category of substance is an impenetrable, it acts as a substrate whereupon all the other categories can apply as so many coatings of "time", "place", "number", "quality", etc. without any of these getting in the way of any of the others. [That "substance" is a category unlike the other nine is something that Sir David Ross had noticed (Ross 1923: 165-66)]. The primary category of substance is the substratum presupposed by all the others (Ross 1923: 23). These nine categories are penetrables and as far as those are concerned there is no obstacle to piling them up on top of each other. When I say that "violets are blue", nothing prevents me indeed from saying at the same time that "violets are fragrant" or that "violets are pretty". If it is true that I cannot put the impenetrables violet and rose at the very same place at the very same time, I can do so with no difficulty with penetrables such as "blueness", "prettiness" or "fragrance", as long as a violet remains the substrate, the primary substance that allows them to do so.

b) The ancient Greek way: "essential" vs "accidental" properties

There is another distinction Aristotle made, relative to the way things and "states-of-affairs" are, or at least to the way they seem to us, that between "essential" and "accidental" properties. "Essential" properties are those that characterise as such a particular type of "stuff". It is an essential property of a particular man that he is a speaking creature, or that he is aware of his own mortality. But that he is blind in one eye or that he doesn't shave his beard is an "accidental" property of his.

Concepts, universal words like "birds" or "bees", that is "labels" as I will consistently refer to them, are constituted only of essential properties and this is what makes them conceptual. Individuals, like you or I, "exemplars" as I will consistently call them, are bundles of properties, some essential, some accidental and this is what makes them empirical as opposed to conceptual. To a particular combination of essential properties corresponds a single "stuff" or "sort". This is why it is possible to define unambiguously a particular sort through its "essential" properties, i.e. the characterisation of its essence. When saying that man is a speaking creature who is aware of his own mortality, we're getting closer to the definition of man as a stuff distinct from every other. When saying that some men are blind in one eye or that some grow a beard we're moving away from the essence, to progress into the infinite variety of singular exemplars.

This feature, that "labels" only hold essential properties while "exemplars" combine essential and accidental properties allows (or is a consequence of) a very constructive relationship between exemplars and labels. Exemplars fall under labels, and the essential properties they possess can be seen as having been inherited from the labels they fall under. Exemplars inherit all the properties (out of necessity "essential": labels have no other) of all the labels they fall under, and these properties are essential to them as they are to these labels: they are inherent to their definition. I, as a man, inherit all the properties of all labels I am "underneath": from creature down to man, through animal, vertebrate and mammal. These are essential to these labels and therefore essential to me, their conceptual heir.

Aristotle said of predication that it can always be expressed as "to A, B belongs". "Blueness" belongs to "violets"; "colourfulness" belongs to "blue"; one "apple" belongs to Eve, another "apple" belongs to "my eye". Thus the principle for making word-pairs: to one half, the other half belongs. In common parlance, the elementary force that holds together the halves of a word-pair is expressed as an "is a" or "has a" relationship. That those are the two basic links that compose the Network was intuitively understood in the 1970s at the very beginnings of the knowledge representation debate in artificial intelligence: attempts were made to create entire "semantic networks" from "is a" and "has a" relationships. As will be shown, although insufficient, this assumption was inherently sound.

Broadly speaking, the "is a" relationship is what lies below the "hereditary" principle of organisation. The "has a" relationship is what I call the "endogenous" principle of organisation. I said earlier that the "expression of one's feelings" leads, in normal circumstances, to rational statements, because the pathways being travelled over the Network are channelled. Pathways are etched in the mind/brain and reflect recurrent usage. Conversely, etched pathways determine the relative ease of future similar associations. Most of the sophistication of our speech performance has its source here: it is the consequence of the fact that the Network - which is the substrate for speech act generation - possesses a very structured topology reflecting both the hereditary and the endogenous principles.

9. The hereditary principle within the memory network [is isomorphic to the mathematical object called a "Galois Lattice"]

What I call "labels" were called categoremes by the medieval logicians; at the general level, as "universals", these denote Aristotle's categories like substance, time, location, quantity, etc. Categoremes are only a subset of what I called earlier "content words" or concepts. The other subset of "content words" is constituted of proper nouns or "demonstratives". These, such as "Albert", allow speaking of "exemplars". Not every exemplar however has got a proper noun such as "Albert"; the second manner to give an exemplar a specificity is to refer to it in a deictic manner like in "this chair", i.e. through "showing" it with the help of a word like "this". Both "labels" and "exemplars" belong together to "hereditary" fields.

Sets provide a language for understanding the in/out duality of "labels" and "exemplars". Sets need not always be regarded as completely defined. We can look at a set as a list of ordered pairs, one element of which, the label, stands for the other element: exemplars in the empirical world of what the label refers to (which might be "objects" but need not be: words, i.e. set labels, may refer to other words, just as sets may refer to other sets). A set may be completely specified in terms of its label (intensive definition) without complete specification of its exemplars; conversely, a set may be completely specified in terms of its exemplars (extensive definition), the complete list of exemplars falling under the label, without full specification of its label. We may define operations on sets which are intensive, extensive, or both. [This is where the incompleteness of Aristotle's system kicks in: sets of labels account for the deductibility (rationality) of the world, the extensive collections of empiricals correspond to the necessity of enumerating non-deductible exemplars (expand).]

["Hereditary" fields (consistently referred to subsequently as F), are structured in the manner of a "Galois lattice" (Freeman and White 1993). As its name suggests, a Galois lattice is a member of the family of mathematical objects called "lattices", i.e. a non-empty set subject to a partial order. A lattice is a set of elements partially ordered by an inequality ≤ where any pair of elements x and y have a single least element a (the "least upper bound" or join) such that x ≤ a and y ≤ a and a single greatest element b (the "greatest lower bound" or meet) such that b ≤ x and b ≤ y. A line diagram shows this ordering as oriented lines where x < y if and only if there is an upward path from x to y. [The standard references for Galois lattices are Birkhoff (1967) and repeated in Barbut and Monjardet (1970), Wille (1982) and Duquenne (1987). Lattice computation and drawing is available from Duquenne (1992). "Dual order" lattice is an apt description for the Galois lattice if one has in mind the duality of "intents" (labels) and "extents" (exemplars) closed under intersection.] Galois lattices are of a hierarchical nature and can be accessed either in a deductive manner, working down from labels to exemplars, or in an inductive manner, working up from exemplars to labels.]
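To make the duality of "intents" and "extents" concrete, here is a small sketch that enumerates the elements of a Galois lattice by brute force. The `CONTEXT` table (which exemplar falls under which labels) is a hypothetical toy example, and the ordering used is inclusion of extents; real lattice software uses far more efficient algorithms.

```python
from itertools import combinations

# Hypothetical context: each exemplar with the labels it falls under.
CONTEXT = {
    "Tom": {"cat", "feline", "mammal"},
    "Leo": {"lion", "feline", "mammal"},
    "Rex": {"dog", "canine", "mammal"},
}

def intent(exemplars):
    """Labels shared by all the given exemplars (every label if none is given)."""
    if not exemplars:
        return set().union(*CONTEXT.values())
    return set.intersection(*(CONTEXT[e] for e in exemplars))

def extent(labels):
    """Exemplars falling under all the given labels."""
    return {e for e, own in CONTEXT.items() if labels <= own}

def concepts():
    """All (extent, intent) pairs closed under the two maps above."""
    found = set()
    names = sorted(CONTEXT)
    for size in range(len(names) + 1):
        for subset in combinations(names, size):
            labels = intent(set(subset))
            found.add((frozenset(extent(labels)), frozenset(labels)))
    return found

def least_upper_bound(extent_a, extent_b):
    """Smallest closed extent containing both arguments."""
    return extent(intent(extent_a | extent_b))

LATTICE = concepts()
```

Working up from the exemplars Tom and Leo gives the element whose intent is {"feline", "mammal"}; working up from Tom and Rex only leaves "mammal" in common.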

A good example, though a deceptively simple one, of a hereditary field, is offered by taxonomies, where a cat is a feline, a feline is a mammal, a mammal is a vertebrate, a vertebrate is an animal and an animal is a creature. Such fields can be travelled in two directions, from the bottom up: if Tom is a cat, then he is automatically a feline, a mammal, a vertebrate, an animal and a creature, i.e. from exemplar to labels of widening generality. [Tom is within a biological taxonomy a "singular" or, in terms of Galois Lattice theory, a "join", the ultimate bottom of the structure, "creature" is a "meet", the ultimate top of a Galois lattice.] [As Lukasiewicz was first to notice, Aristotle excludes from his theory of the syllogism both "joins", ultimate exemplars which "singulars" constitute, and "meets", ultimate tops, all encompassing "universals" such as "creature". The reason as he notes is that Aristotle wishes to develop a theory which applies only to categoremes which can appear equally as subject and predicate. Both joins and meets are boundaries: the meet because the chain of generalisation stops at its level, the join because the chain of inherited properties ends with it. "Aristotle emphasises that a singular term is not suited to be a predicate of a true proposition, as a most universal term is not suited to be a subject of such a proposition. […] he eliminated from his system just those kinds of terms which in his opinion were not suited to be both subjects and predicates of true propositions" (Lukasiewicz 1998 [1951]: 7).]

But a hereditary field can also be travelled from the top down, from the most inclusive label down to the singular. The relevance of hereditary fields lies in that the items linked through "is a" relationships possess at the same time "has a" attributes, linking them in word-pairs with external labels that are inheritable from more general to less general labels. [These external labels are possibly part themselves of other F structures.] Taxonomies of such "penetrables" are however notoriously shallow, i.e. have few levels of organisation: colours, for instance, are not part of a hierarchy of more general "stuffs". These attributes or properties link a label through a "has a" relationship with another label, under one of Aristotle's categories with the exception of substance. The relationship is of a "has a" type when considered from subject to predicate: "the King of France has baldness", or a "belonging" quality when seen from predicate to subject: "baldness belongs to the King of France".

Whatever property is attached to a label trickles down to the exemplars beneath it. The labels themselves are automatically inherited down along with their properties, hence the name "hereditary" for the field. And this applies from each level of labelling, down to the singular. Tom is a creature and inherits all properties belonging to creatures: he is an animal also and possesses the more specific features of animals; then, for being one, the more restricted set of features typical of mammals; finally those which only felines hold, as he is a feline. In some way, exemplars inherit down labels while labels inherit up exemplars. [The principle of the Galois Lattice was perfectly understood by John Duns Scotus. Etienne Gilson sums up his view thus: "Any division is the descent from a single principle towards innumerable particular species, and it is always complemented by a reunion ascending from the particular species up to its principle" (Gilson 1922: 15)].

The move up from exemplar and the move down from label are not symmetrical however: heredity of properties doesn't move upwards, properties such as retractile claws, are lost in their generality when moving up from feline to mammals; the female producing milk is lost in the upward move from mammal to vertebrate.
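The asymmetry just described can be sketched as follows. The `IS_A` chain is the taxonomy used in the text; the `HAS` table is an assumed illustration (only "retractile claws" and milk production come from the text, "backbone" is added for the example).

```python
# "is a" chain from the text: each item points to the label directly above it.
IS_A = {
    "Tom": "cat", "cat": "feline", "feline": "mammal",
    "mammal": "vertebrate", "vertebrate": "animal", "animal": "creature",
}

# Assumed "has a" attributes attached at a given level of labelling.
HAS = {
    "feline": {"retractile claws"},
    "mammal": {"milk production"},
    "vertebrate": {"backbone"},
}

def labels_above(item):
    """Labels reached by climbing the "is a" chain, from nearest to most general."""
    chain = []
    while item in IS_A:
        item = IS_A[item]
        chain.append(item)
    return chain

def inherited(item):
    """Own properties plus everything that trickles down from the labels above."""
    props = set(HAS.get(item, set()))
    for label in labels_above(item):
        props |= HAS.get(label, set())
    return props

tom_props = inherited("Tom")
mammal_props = inherited("mammal")
```

Tom collects every property on the way up, whereas "mammal" does not acquire the retractile claws that only hold one level further down.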

The move upwards [from the join to the meet] is one of inclusion under the "is a" mode: "Tom is a cat", "a cat is a feline", etc. The relationship is transitive: if a cat is a feline and a feline is a mammal, then a cat is a mammal, and if a mammal is an animal which in turn is a creature, then a cat is both an animal and a creature. But the relationship is anti-symmetric, the transitivity does not operate in the opposite direction: not all vertebrates are mammals and not every mammal is a feline. Should we wish to say something about mammals in relation to felines we need to express it as "some mammals are felines" with the implication that some other mammals are precisely not felines. This is why the set of elements is only partially ordered: the ordering does not apply to any pair of elements taken randomly: there is a whole contrast set of labels equally ordered at, for instance, the level of generality where "felines" reside, i.e. "canines", "rodents", etc. This characterisation in terms of "some" like in "some mammals are felines" is what the terminology of logic calls "quantifiers", the quantifier of "particularity" in this instance. The opposite, in terms of "all felines are mammals" is called "universality"; singularity is the quantifier applying to what we call here "exemplars".
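A short sketch of the partial order and of the quantifiers it licenses, under the assumption that the "is a" links are given as a plain list of pairs. The `closure` and `quantifier` helpers are hypothetical names introduced for this illustration.

```python
# Direct "is a" links; "canine" is added to show a contrast set at the same level.
IS_A = [
    ("cat", "feline"), ("feline", "mammal"),
    ("canine", "mammal"), ("mammal", "vertebrate"),
]

def closure(pairs):
    """Transitive closure: if a is a b and b is a c, then a is a c."""
    reach = set(pairs)
    changed = True
    while changed:
        changed = False
        for a, b in list(reach):
            for c, d in list(reach):
                if b == c and (a, d) not in reach:
                    reach.add((a, d))
                    changed = True
    return reach

ORDER = closure(IS_A)

def quantifier(subject, predicate):
    """'all' when moving up the order, 'some' when moving down, None if unordered."""
    if (subject, predicate) in ORDER:
        return "all"
    if (predicate, subject) in ORDER:
        return "some"
    return None
```

"feline" and "canine" come out as unordered with respect to each other, which is what makes the order partial rather than total.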

The illustration I gave of a biological taxonomy should not imply that all hereditary fields are similarly of a scientific nature. Nothing prevents a hereditary field from supposing, for instance, that all snakes are witches, while some men are snake-witches, etc. Within the Western world, in antiquity, before the advent of modern science, taxonomies were shallow and one of the most ambitious early attempts at establishing a many-level taxonomy was Aquinas' about angels. There were according to the Angelic(al) Doctor six levels of hierarchy among angels, in descending order from God to man: starting on top with the Seraphims, the Cherubs, the Thrones, the Dominations, the Virtues, the Principalities, the Archangels, down to the "guardian" angels of men, i.e. angels properly so called. [Aquinas supposed a hereditary if imperfect process for angels to transmit their knowledge: "... each angel transmitted to the angel below the knowledge that it received from above, but only in particularised fragments according to the capacity of intelligence of the angel beneath it" (Gilson 1927: 164).]

10. The endogenous principle is isomorphic to the mathematical object called a "P-graph"

The other type of fields that constitute the Network we call "endogenous" (consistently referred to subsequently as G). We hold that these are structured as a P-graph, an algebraic structure which I first described in 1984 (Jorion & Lally 1984; Jorion 1990b; White and Jorion 1992; White and Jorion 1996). The P-Graph is a particular type of dual of a graph: data (typically "words") are associated with the edges of the graph, the relations between the data, with the nodes: nodes typically stand for word-pairs. The P-Graph is the mathematical object underlying ANELLA, the AI project mentioned in the introduction. The P-Graph, in particular its uncanny way of growing, is compatible with the architecture of an actual biological neural network, and its emergent logical and learning abilities are similar to those displayed by human beings. As we will see, a P-graph, or "G" sub-structure, connects categoremes across Galois lattices. A P-graph edge exists between two elements if there exists a homomorphism between the lattices they belong to (analogic link), e.g. eye / window; if they sound the same or write the same ("material" connection), e.g. humidity / humility; if they hold an emotional connection (the Jungian "complex"), e.g. father / drunk. Hereditary and endogenous fields criss-cross, and categoremes belong to both in different capacities. In one way, G structures connect F structures. Seen otherwise, F structures provide local organisation to G structures.

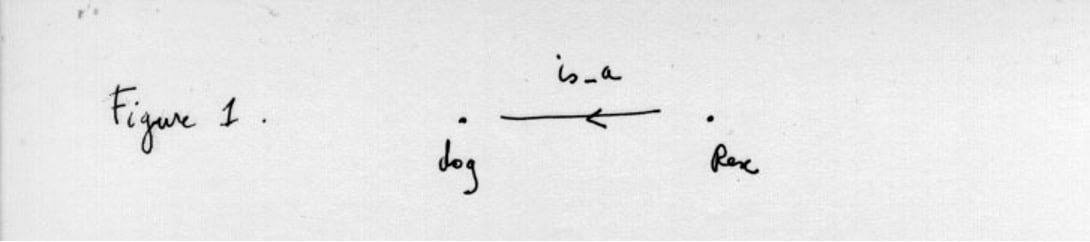

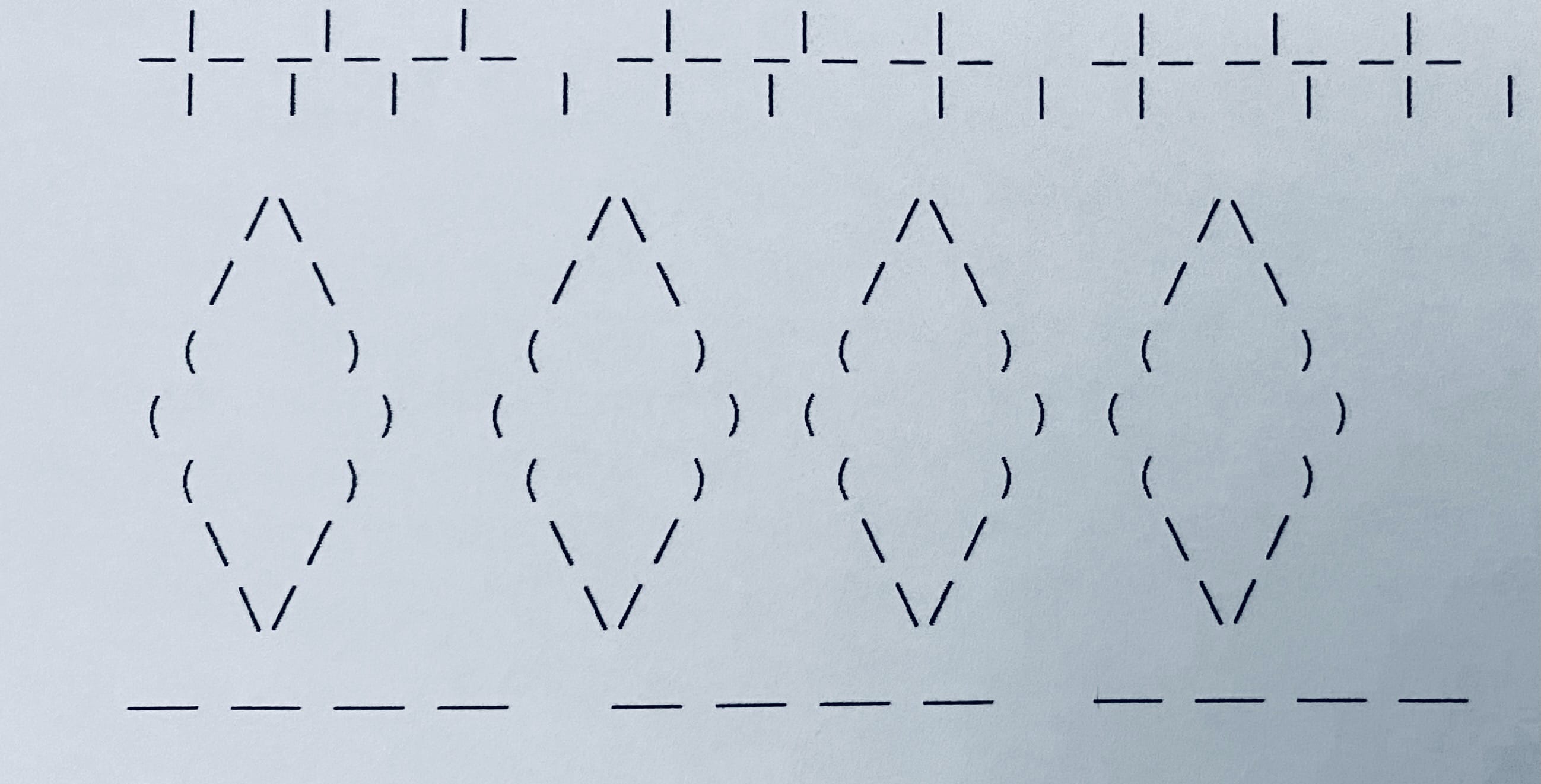

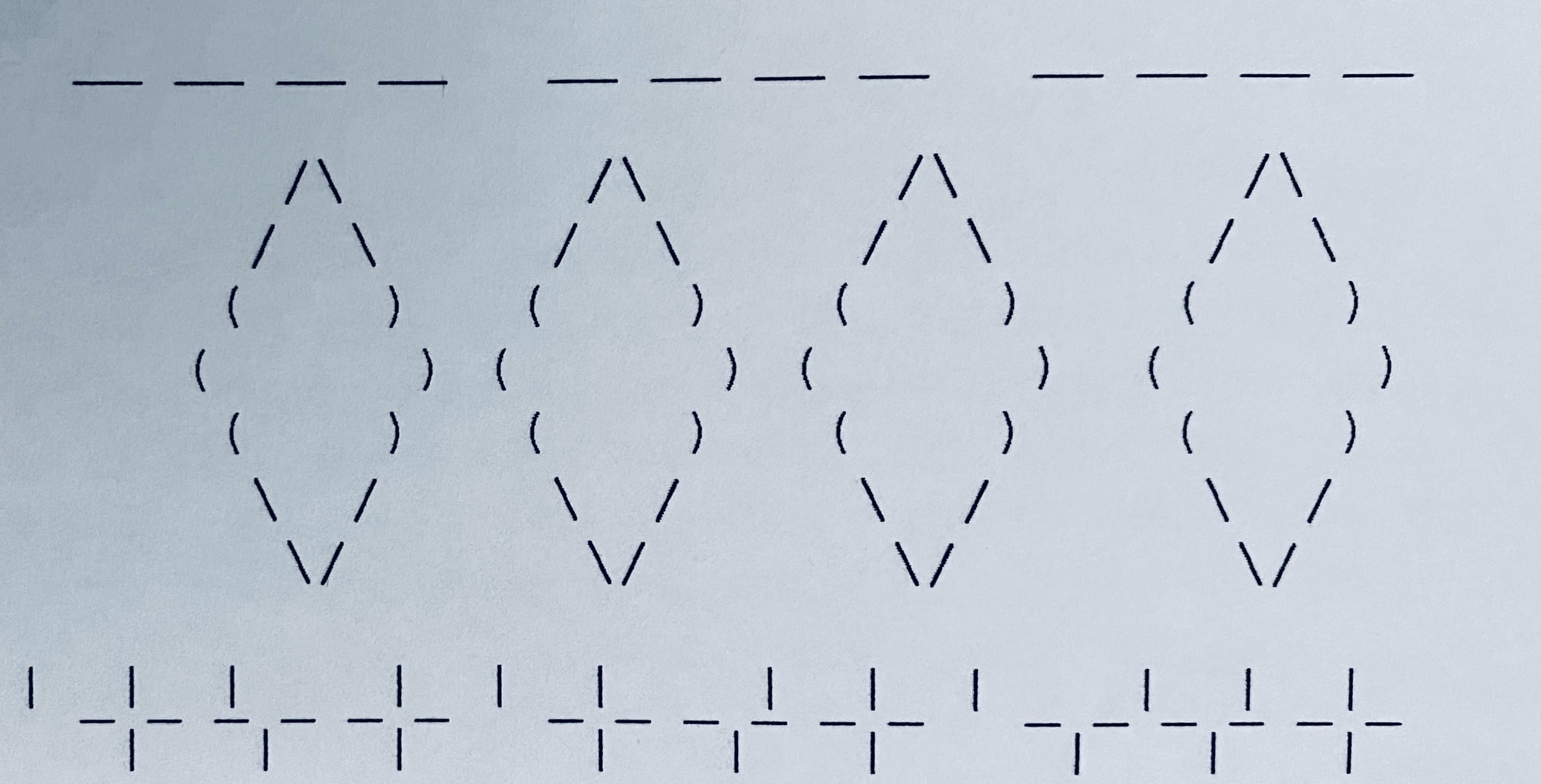

None of the shortcomings of Quillian-type semantic networks used for knowledge representation are displayed by the P-Graph representation of a neural network. In contrast with a classical semantic network, concepts are attached to the edges of a graph and relations to its nodes. Thus instead of dealing with a semantic network as in figure 1,

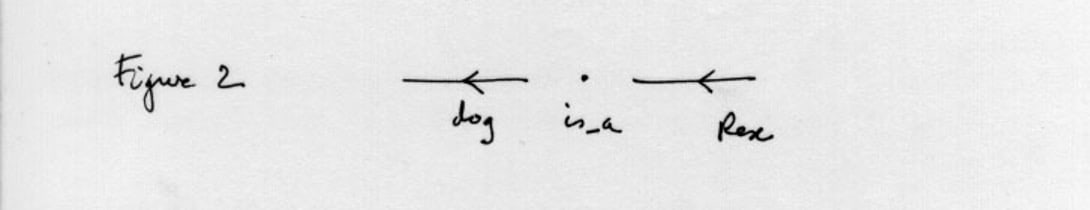

one has a situation as in figure 2:

Such transpose is not unique as there is more than one way for transposing the nodes of a graph into edges and edges into nodes, i.e. for obtaining the dual of a graph.

What possible translation is there for such a dual semantic network in terms of an actual biological neural network? In the particular instance of our illustration, "boy" would be attached to a ramification of the axon or to its end-synapse, "meets" to the cell body of the connected neurone and "girl" to a ramification of its axon.

At first glance the dual semantic network does not seem to present any overwhelming advantage over the traditional semantic network scheme. It does however in terms of its neuro-biological plausibility and in more than one way. Let us see why on a couple of illustrations.

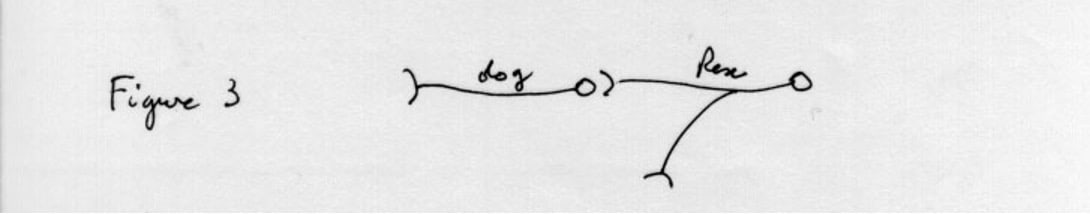

On the figures depicting the examples, neurone mappability is emphasised through a slightly modified representation of a directed graph: instead of using as its building blocks either nodes or edges, "graphic neurones" are used — a "graphic neurone" being composed in this instance of a node and a set of outward-branching edges. (This convention is of course precisely that holding in the visualisation of [formal] neural networks). To emphasise a biological neurone interpretation of the figures, no arrow is drawn on an edge, and diverging edges from the same node depart somewhere down a common stem suggesting the ramifications of the axon ending each with a "synapse". Figure 3 depicts this clearly.

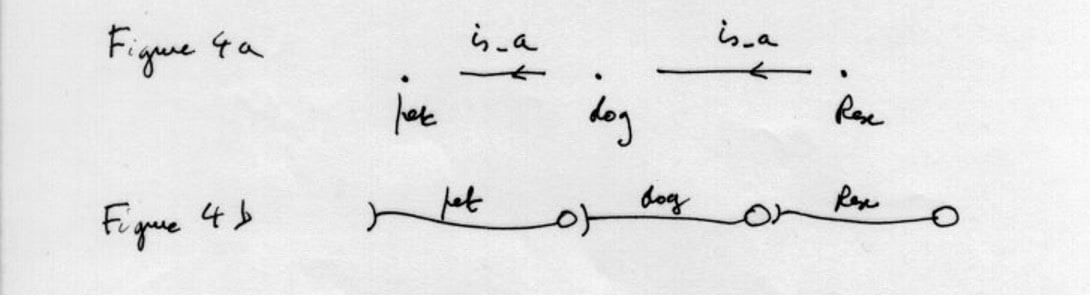

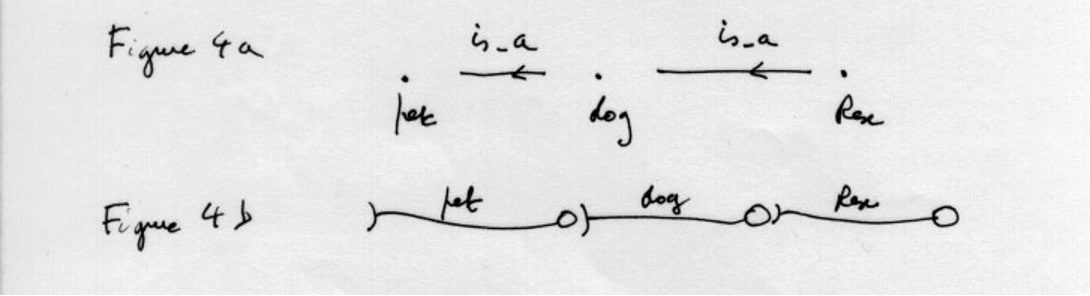

Figures 1 and 2 display the straightforward construction of the P-Dual of a simple semantic network containing only two concepts: "Rex" and "dog". Let us add now to the picture the additional concept of a "pet". Figure 4 a and b reveal that here again there is no special difficulty in transposing from the classical template to a P-Graph.

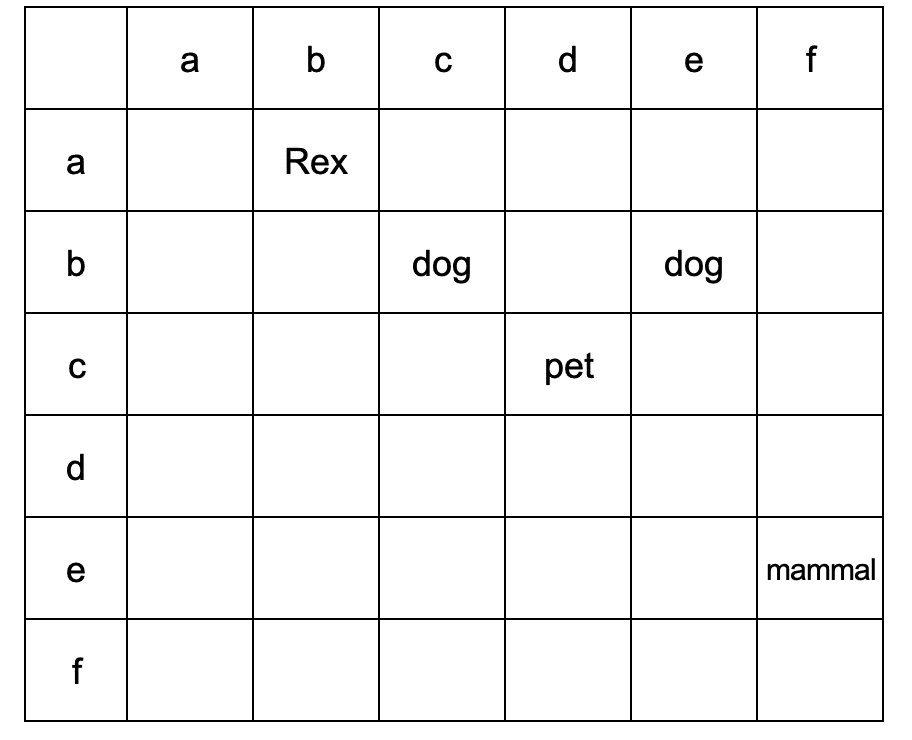

With more intricate cases a specific transpose method becomes however indispensable. This is easily provided by the auxiliary method of an adjacency matrix for the initial template graph. The principle is simple: a double entry table is constructed where nodes of the template graph are located with respect to their location between edges. The matrix is used in a later step as a guide for drawing the P-Graph.

If one specifies now that in addition to being a pet, a dog is also a mammal, Figure 5 shows how this would be represented in a classical semantic network.

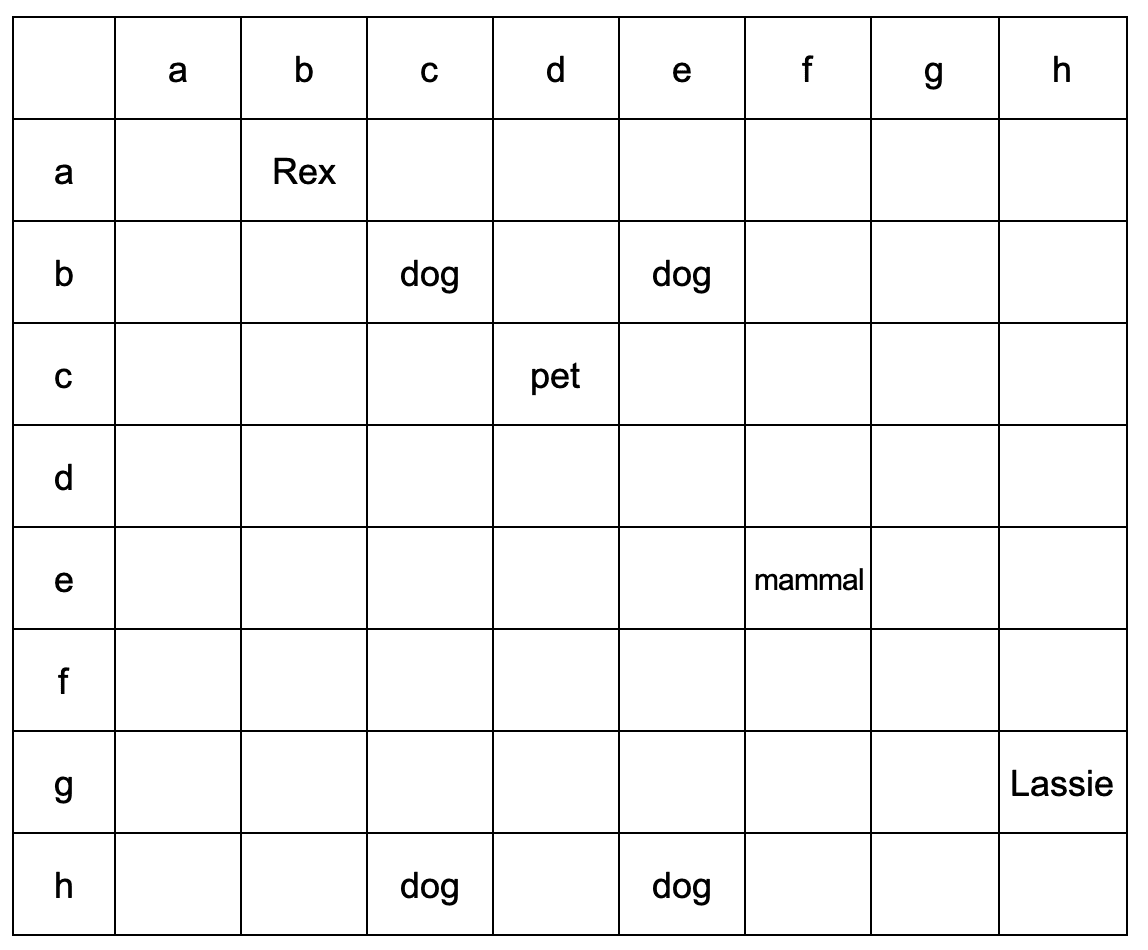

Here is the adjacency matrix corresponding to figure 5:

One notices that if the rule for building the adjacency matrix is indeed that of locating a node between edges, some auxiliary edges (a, d and f) are required lest "Rex", "pet" and "mammal" be absent from the matrix (what this constraint of having no isolated node in the template graph expresses is in fact the condition for the "neuro-mappability" of the graph).

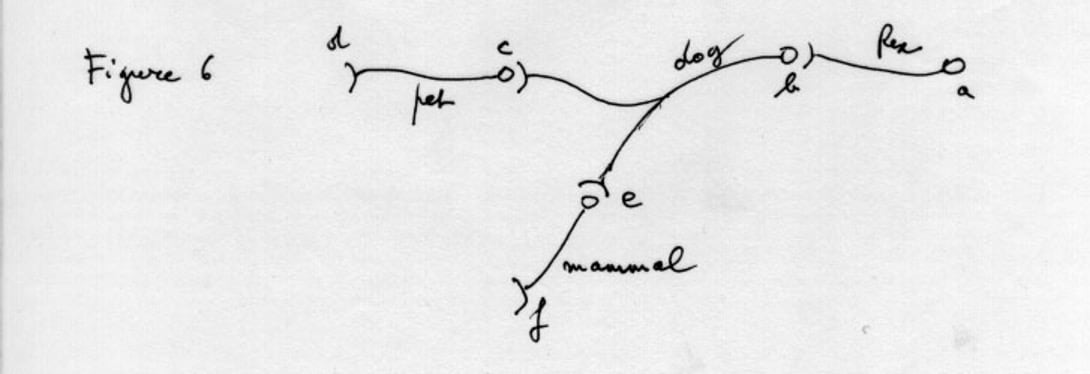

One is now in a position for constructing the P-Graph by assigning nodes the names of the former edges, and assigning the new edges the labels of the former nodes (the number of nodes in this particular type of a dual is the same as the number of edges in the template). One proceeds in the following manner: having posited the nodes a, b, c, d, e and f, the edges existing between them are drawn as instructed by the adjacency matrix. For instance, there is now an edge "dog" between b and c and another edge "dog" between b and e, etc. Figure 6 shows the derived graph.
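The transpose procedure can be sketched as below. This is one reading of the construction, not the original ANELLA code: the assignment of the letters a to f to particular template edges is an assumption (chosen so that "dog" falls between b and c and between b and e, as stated), and `None` marks the open end of an auxiliary edge.

```python
from collections import defaultdict

# Template edges for "Rex is a dog, a dog is a pet, a dog is a mammal".
# Each edge is (source node, target node); the lettering is assumed.
TEMPLATE = {
    "a": (None, "Rex"),      # auxiliary edge so that "Rex" sits between two edges
    "b": ("Rex", "dog"),
    "c": ("dog", "pet"),
    "d": ("pet", None),      # auxiliary
    "e": ("dog", "mammal"),
    "f": ("mammal", None),   # auxiliary
}

def adjacency_matrix(template):
    """Map (incoming edge, outgoing edge) to the template node located between them."""
    incoming, outgoing = defaultdict(list), defaultdict(list)
    for name, (source, target) in template.items():
        if target is not None:
            incoming[target].append(name)
        if source is not None:
            outgoing[source].append(name)
    return {
        (i, o): node
        for node in incoming
        for i in incoming[node]
        for o in outgoing.get(node, [])
    }

def p_graph(template):
    """Former edges become nodes; each matrix cell becomes an edge labelled by the former node."""
    nodes = sorted(template)
    edges = [(i, o, label) for (i, o), label in adjacency_matrix(template).items()]
    return nodes, edges

MATRIX = adjacency_matrix(TEMPLATE)
NODES, EDGES = p_graph(TEMPLATE)
for i, o, label in EDGES:
    print(f"{i} --{label}--> {o}")
```

The sparse dictionary plays the role of the double entry table: rows are incoming edges, columns are outgoing edges, and each filled cell names the node found between them.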

If one wants to examine what has happened to the P-Graph with the addition of "mammal", we can compare figure 4 b) with figure 6. A new neurone has shown up to represent "mammal", and "dog" has branched out: a ramification has emerged as a shoot towards "mammal".

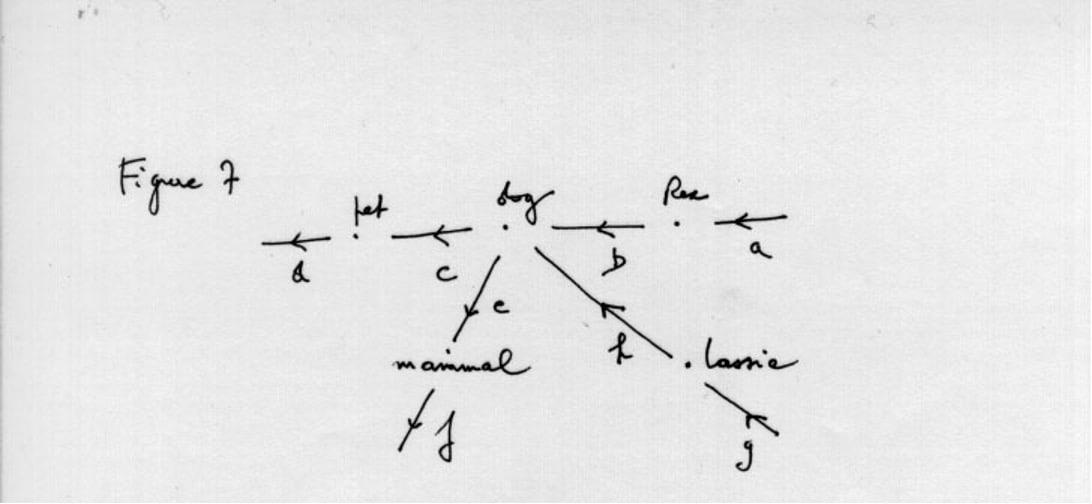

If one introduces now a second dog, "Lassie", in the picture, Figure 7 shows first the classical semantic network representation.

And here is the adjacency matrix:

Let us build the P-Graph accordingly, i.e. as shown in figure 8.

A new "Lassie" neurone has appeared, and from it has sprung a new "dog" neurone which has itself shot two ramifications towards the connections held by the original "dog" neurone. In such a way that there are now altogether four "dog" synapses belonging to two distinct "dog" neurones.
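The growth described here can be checked with a variant of the construction that adds the auxiliary edges automatically. Again this is a sketch under assumptions: edges are numbered rather than lettered, and a "dog neurone" is read as a P-Graph node from which "dog"-labelled edges depart.

```python
from itertools import count

def p_graph(triples):
    """Build the labelled dual from (source concept, relation, target concept) triples."""
    ids = count()
    edges = {next(ids): (s, t) for s, _relation, t in triples}
    sources = {s for s, _ in edges.values()}
    targets = {t for _, t in edges.values()}
    for concept in sorted(sources - targets):   # nothing leads in: add an auxiliary edge
        edges[next(ids)] = (None, concept)
    for concept in sorted(targets - sources):   # nothing leads out: add an auxiliary edge
        edges[next(ids)] = (concept, None)
    dual = []
    for i, (_, middle) in edges.items():
        for o, (source, _) in edges.items():
            if middle is not None and middle == source:
                dual.append((i, o, middle))
    return dual

TRIPLES = [
    ("Rex", "is_a", "dog"),
    ("Lassie", "is_a", "dog"),
    ("dog", "is_a", "pet"),
    ("dog", "is_a", "mammal"),
]

DUAL = p_graph(TRIPLES)
dog_synapses = [edge for edge in DUAL if edge[2] == "dog"]
dog_neurones = {origin for origin, _, _ in dog_synapses}
```

With Lassie added, the sketch yields four "dog"-labelled edges leaving two distinct nodes, matching the count given in the text.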

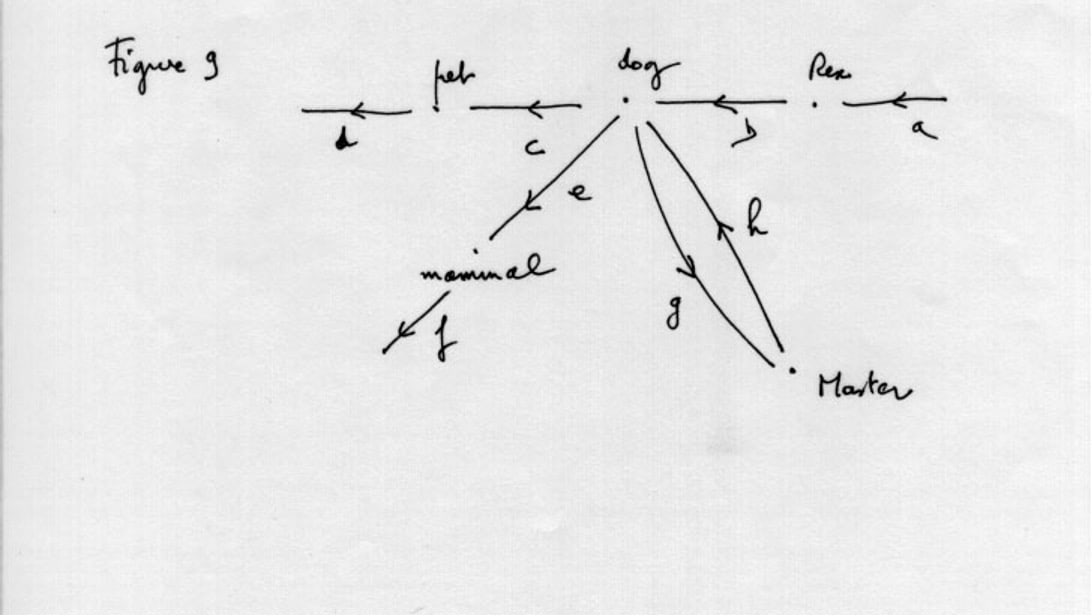

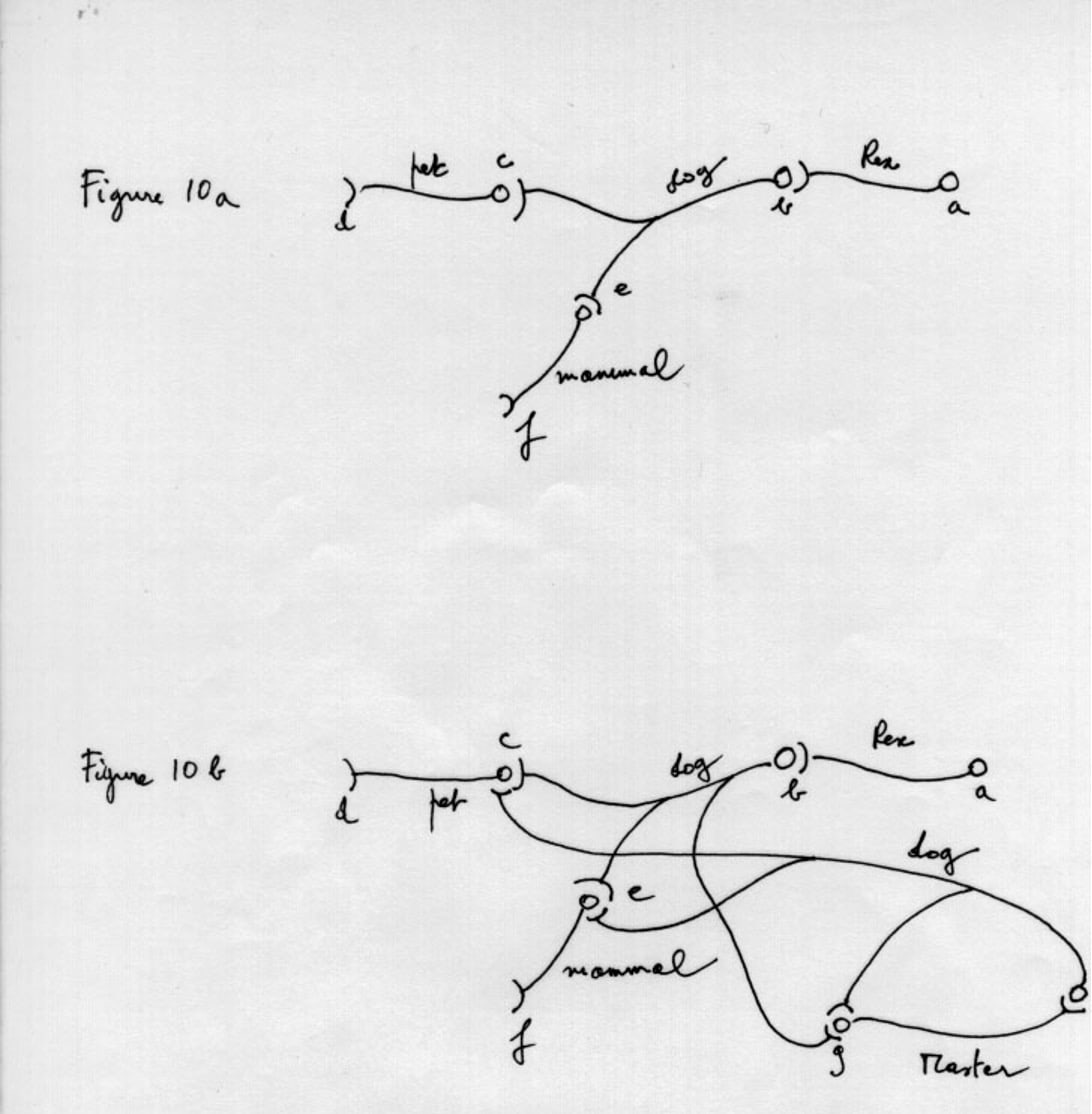

Now for a final illustration. Let us drop Lassie and go back to how things stood at an earlier stage when we only had as elements "Rex", "dog", "pet" and "mammal". And let us add "master" whereby we are introducing a new relation of a "has_a" type. Now a pet has a master, but a master has as well a pet. Hence the classical semantic network representation as in figure 9.

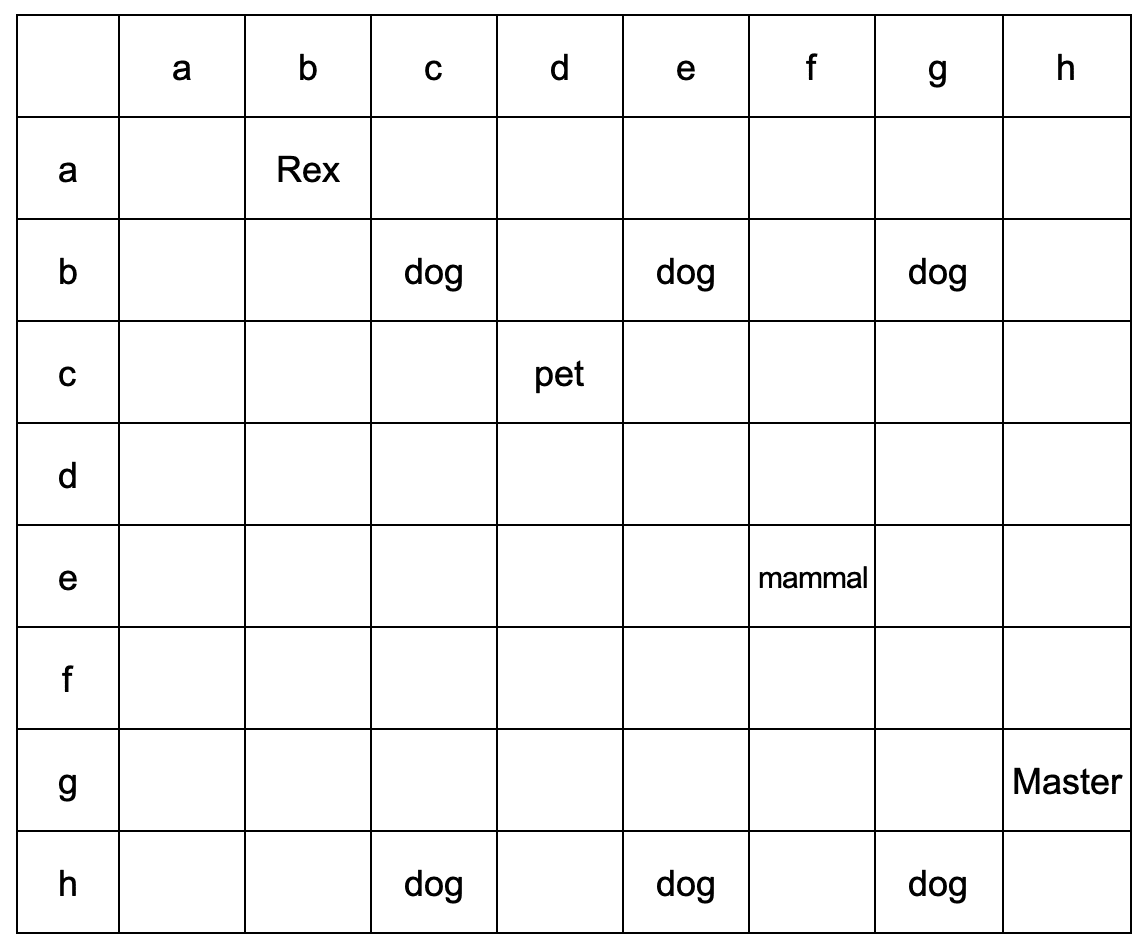

And the adjacency matrix that ensues:

The locations of some elements have now become trickier. Notice for instance, "dog" between b and g and between h and g, etc. Figure 10 b) shows the resulting configuration.

Compare with figure 10 a) (corresponding to figure 6) to see what the irruption of "master" has meant in terms of the P-Dual. Firstly, a new "master" neurone has shown up. Secondly, the original "dog" neurone has shot a third ramification towards this additional neurone. Thirdly, an entirely new "dog" neurone has appeared, duplicating the first one — but not perfectly: only as far as synapses are concerned. Fourthly, the new "dog" neurone has established an odd type of symmetrical connection with the "master" neurone; a cycle has appeared in the network between a "dog" neurone and a "master" neurone: one of the synapses of "dog" connects with the "master" cell body while one of the synapses of "master" connects with the "dog" cell body.

One could pursue with illustrations of this kind.

Until we added "master" with its reciprocal relations "master has dog" and "dog has master", neurone bodies were only liable to a single interpretation: the classical "is_a" relationship of semantic networks. Should it be the case that there is only one interpretation for the neurone body, there would be no necessity whatever for attaching any labels to the nodes of a P-Graph: each node would be read out as "is_a" with no ambiguity ensuing. Things changed when we introduced "master" in the graph: from then on, a node had to be interpreted as meaning either "is_a" or "has_a", necessitating therefore appropriate labelling of nodes. What happened with the "has_a" relation was the intervention of a cycle between the related concepts, which does not exist with the "is_a" relationship, in such a way that labelling the nodes could easily be replaced by a simple decoding of the local configuration. One could issue a rule of the type "should there be an immediate cycle between two neurones, read the node as meaning 'has_a' else read it as 'is_a'".
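The decoding rule just quoted is simple enough to write down directly. The sketch below assumes, purely for illustration, that the connections between neurones are held in a dictionary mapping each neurone to the set of neurones its synapses reach; the name `read_relation` and the sample connections are hypothetical.

```python
def read_relation(connections, first, second):
    """Decode the relation between two connected neurones.

    connections maps a neurone to the set of neurones its synapses reach
    (an assumed encoding). An immediate cycle, i.e. a connection in both
    directions, is read as "has_a"; anything else is read as "is_a".
    """
    forward = second in connections.get(first, set())
    backward = first in connections.get(second, set())
    return "has_a" if forward and backward else "is_a"


# Hypothetical connections echoing the "master" example.
connections = {
    "Rex": {"dog"},
    "dog": {"pet", "mammal", "master"},
    "master": {"dog"},
}

print(read_relation(connections, "dog", "master"))  # has_a
print(read_relation(connections, "dog", "mammal"))  # is_a
```

With such a rule the labels on the nodes become redundant, since the local configuration alone tells the two relations apart.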

As has become clear by now, the P-graph model has specific strengths compared to its earlier competitors:

1. it is consistent with the currently known properties of the anatomy and physiology of the nervous system.

2. also, because the P-graph is the dual of a classical semantic network, a word is automatically distributed between a number of instances of itself.

3. these instances are clustered as to individual semantic use.

4. as announced in section 6, the scourge of knowledge representation, ambiguity, is automatically ruled out: kiwi the fruit and kiwi the animal, for example, are only associated through one relationship, the "material" (meaningless) one of homophony, so their confusion does not arise, as they reside in distant parts of the P-graph.

5. the growth process of the graph explains why early word traces are retrieved faster than those acquired later: the number of their instances is out of necessity large as they have acted repeatedly as "anchor" for the inscription of new words in the process of language acquisition (this makes it possible to do without the extraneous hypothesis that Michael Page mentioned in a recent article in Behavioral and Brain Sciences: " ... a node of high competitive capacity in one time period tends to have high competitive capacity in the next" - 2000: 4.4 Age-of-Acquisition Effects).

Resemblance is no longer a question only of distances measured over a neural network; it also covers topological similarities. For instance, synonyms need not be stored physically close to each other in the brain (indeed it is unlikely they would be, as synonyms are typically acquired at different times in life rather than simultaneously) as long as they are part of isomorphic configurations of elementary units of meaning. Topological similarity may suffice for resonances to develop between homomorphic sub-networks, allowing synonyms to vibrate in unison.

From what has just been said, the obvious interpretation of the learning process in a Network is that each time a new signifier is added, a number of edges (determined by the P-Graph algorithm) are created representing a number of distinct neurones. As such however, the growth process of a P-Graph cannot reflect the actual learning process taking place in the cerebral cortex.

If the cerebral cortex of a new-born is such that each neurone is connected to a large number of other neurones - i.e. is mappable on a quasi-complete graph - then the structuring of the network for memory storage purposes implies for each neurone a dramatic destruction of most of its existing connections, the remaining ones becoming "informed" precisely because of their drastic reduction in number.

A Network is therefore constituted of two parts: a "virgin", unemployed part composed of quasi-completely connected neurones, and another part, active for memory storage, composed of sparsely connected neurones. Learning a new word would then mean including a number of such "virgin" quasi-complete neurones within the active Network, attaching the new signifier's label to their axial ramifications, and making them significant by having most of their connections removed with the exception of those which have become meaningful through their labelling.

An existing neurone would therefore intervene actively in memory storage as soon as it has become structured, i.e. as soon as most of its connections have been severed, the few remaining ones encoding from then on a specific information. Viewed in this way, learning would not consist of the addition of new neurones but of the colonisation of existing but "virgin" neurones belonging to an unemployed part of the cerebral cortex. [It is possible in this case to suppose that such structuring is not strictly deterministic but results from some type of Darwinian competition such as described by Edelman and co-workers (Edelman 1981; Finkel, Reeke & Edelman 1989). If Edelman's analysis is correct, then it may even be possible to imagine that the newly colonised neurones are actually distracted from some other function they were performing until then.]

The only major constraint on such "pruning" for learning purposes would be that the network remains a connected graph (that there remains at least one path connecting each node to every other node), that is, that there are no disjoint sub-graphs. [See section 21 where it is argued that here lies the origin of psychosis.] The smaller the number of edges, the more significant is the information contained in the network, as the reduced topology becomes concomitantly more significant. The issue is parallel to that of percolation but, so to speak, in a reversed manner, i.e. pruning should proceed as far as it can but not beyond the percolation threshold. [The ultimate means for diminishing the number of edges in the graph is through allowing it to degenerate into a tree. This would not mean that a single completely ordered hierarchy obtains, as hierarchies defined by distinct principles can intertwine. Such a principle should not however be sought for as the G relationship has a valuable role to play in the net. The definition of an "even number" is for instance decomposed in the following manner by a module of ANELLA: "even is_a number has_a divisor is_a two". It is clear that the "has_a" is here highly significant and could not possibly be replaced by an "is_a" relation. The "is_a" relationship introduces however a very effective structuring principle in a net as is revealed in contrast by "primitive mentality" where the "has_a" relationship is predominant, if not the only existing one (see on this Jorion 1989).]
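The connectivity constraint on pruning can be illustrated with a small sketch. It assumes an undirected graph of neurones, removes edges greedily, and refuses any removal that would disconnect the graph; the function names, the greedy order and the `keep` parameter (standing for connections made meaningful by labelling) are assumptions of the example, not a description of how the cortex or ANELLA proceeds. It also ignores the percolation analogy and only enforces connectedness.

```python
from itertools import combinations


def is_connected(nodes, edges):
    """Return True if every node can be reached from every other node."""
    nodes = list(nodes)
    if not nodes:
        return True
    neighbours = {node: set() for node in nodes}
    for left, right in edges:
        neighbours[left].add(right)
        neighbours[right].add(left)
    seen = {nodes[0]}
    stack = [nodes[0]]
    while stack:  # depth-first traversal from an arbitrary node
        current = stack.pop()
        for nxt in neighbours[current]:
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return len(seen) == len(nodes)


def prune(nodes, edges, keep=frozenset()):
    """Remove as many edges as possible without disconnecting the graph.

    Edges listed in keep are never removed.
    """
    remaining = set(edges)
    for edge in sorted(edges):
        if edge in keep:
            continue
        trial = remaining - {edge}
        if is_connected(nodes, trial):
            remaining = trial
    return remaining


# Six hypothetical "virgin" neurones, completely connected (15 edges).
neurones = list(range(6))
complete = set(combinations(neurones, 2))
pruned = prune(neurones, complete)
print(len(complete), "edges before pruning,", len(pruned), "after")
```

Pushed to its limit, pruning of this kind leaves a tree: every remaining edge is a bridge, so six neurones end up with five connections.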

The simple rule for neurone colonisation embeds topological information into a P-graph while ensuring redundancy in the representation of any individual word, confirming Page's insight that "localist models do not preclude redundancy" (Page 2000: "They do not degrade gracefully").

Aristotle is the father of what he named Analytics, the ancestor of what we nowadays call "logic". What analytics proposed with the theory of the syllogism are the principles of accurate reasoning or in Aristotle's own words, of "not contradicting oneself". [Aristotle: "The intention of the present treatise is to find a method through which we will be able to reason from generally admitted opinions relating to all problem submitted to us and which will allow us to eschew, when developing an argument, to say anything which would be (self-) contradictory" (Topica, 100 a 18).] Let us take a categoreme, say "cat", and let us consider it in two word-pairs: "cat-whisker" and "cat-feline". If we wish to produce sentences with these pairs, we can say "a cat has whiskers" or "whiskers belong to a cat", and "a cat is a feline" or "some felines are cats". Let us define the distance between the two halves of a word-pair, i.e. between "cat" and "whisker" and between "cat" and "feline", as being the unit, "1". What Aristotle's theory of the syllogism proposes are the rules for designing a sentence that makes sense between "feline" and "whiskers" through "cat", here called the "middle term". In other words, a syllogism provides the rules for valid sentence-making between concepts at a distance of "2" from each other in the Network.
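The notion of a "middle term" as the word standing at distance 1 from both extremes can be made concrete with a short sketch. It treats the Network as an undirected graph of word-pairs, which is a simplifying assumption; the function names are hypothetical.

```python
from collections import defaultdict


def build_network(word_pairs):
    """Index word-pairs so that each word knows its direct neighbours."""
    neighbours = defaultdict(set)
    for left, right in word_pairs:
        neighbours[left].add(right)
        neighbours[right].add(left)
    return neighbours


def middle_terms(neighbours, first, second):
    """Words at distance 1 from both extremes, i.e. possible middle terms."""
    return sorted(neighbours.get(first, set()) & neighbours.get(second, set()))


network = build_network([("cat", "whisker"), ("cat", "feline")])
print(middle_terms(network, "whisker", "feline"))  # ['cat']
```

A non-empty result means the two extremes lie at a distance of "2" from each other, which is the situation the syllogism is designed to handle.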

The way this works is well known: "Whiskers belong to cats", "cats are felines" thus (reversal of focus) "Some felines are cats", hence "Whiskers belong to some felines". Ernst Mach (the physicist and philosopher of science) who left his last name as a unit for the speed of sound regarded the task of science as operating nothing more but nothing less than "mental economies" (Mach 1960 [1883]: 577-582). The syllogism is the basic tool for mental economy: we have established with the help of two word-pairs where "cat" is involved, a bridge between the two other halves of these word-pairs: "whiskers" and "felines". We have shortcut "cat" in the conclusion of a syllogism bringing together "whiskers" and "felines" and made thus a "mental economy". John Stuart Mill held that the syllogism is trivial: it does not offer more information in the conclusion than there was beforehand; the leap, according to him, is in one of the premises: the intellectual boldness is in holding the cat to be a feline, the rest is nothing more than "having brought one's notes together" (in Blanché 1970: 251-252). This is true: the contribution of the syllogism doesn't lie in additional information content, it is in what has just been shown: it offers valid ways for connecting concepts at a distance "2" in the Network. And because hereditary fields, F structures, are transitive through the inclusion of concepts, they potentially offer the ways for connecting concepts at any distance from each other: if "some felines have whiskers" through cats and "felines are mammals" then "some mammals have whiskers", and also "some animals have whiskers", etc.
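The way transitivity extends the reach of the syllogism can be sketched as a walk up a chain of inclusions. The encoding below is an assumption made for illustration: one "is_a" parent per word, a set of "has_a" attributes per word, the link from "mammal" to "animal" (implied but not spelled out in the text), and a naive plural formed by appending "s".

```python
def particular_conclusions(is_a, has_a, start):
    """Carry each attribute of start up the chain of inclusions.

    is_a maps a word to its single including concept and has_a maps a
    word to its attributes (both assumed encodings). Each step up the
    chain yields one particular ("some ...") conclusion.
    """
    conclusions = []
    for attribute in sorted(has_a.get(start, ())):
        current = start
        seen = {start}  # guards against accidental loops in the data
        while current in is_a and is_a[current] not in seen:
            current = is_a[current]
            seen.add(current)
            conclusions.append(f"some {current}s have {attribute}")
    return conclusions


is_a = {"cat": "feline", "feline": "mammal", "mammal": "animal"}
has_a = {"cat": {"whiskers"}}

for sentence in particular_conclusions(is_a, has_a, "cat"):
    print(sentence)
```

Each conclusion shortcuts one more middle term, so that concepts at any distance along the chain end up connected.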

What Aristotle accomplished with his analytics represents a particular solution to formulating a logic, not necessarily a universally valid one, but at the same time sufficiently resilient that it repels successfully the suspicion that thought is a mostly fallible or culturally variable process. In our terminology, his solution consists of attaching to the top of every F cone the essential attribute of substantiality. Nonetheless at the symbolic level, Aristotle's "reduction" to a materiality that was at least in principle hierarchical provided the foundation for a kind of geometric principle of ordering. Aristotle's use of line diagrams in geometric form as a representation of syllogisms (Ross 1923: 33) antedates the equivalent use of Venn diagrams. The ability to do so — to reduce all syllogisms to line diagrams with a single kind of line, or Venn diagrams with a single kind of set representation of extensional definitions, is foundational to a hierarchical view of logic. In many ways Aristotle was formalising what we call the G operator by embedding abstract (penetrable) properties within a substantial (impenetrable) context. It remained for the F operator as a hierarchical operator to be developed.

The difficulties with Aristotle's formulation are that (1) it forces all content words to be treated as if they were within F cones assigning implicit properties through the inheritance of essential properties, while at the same time (2) it assumes that abstract or concrete definitions cannot exist and be handled on their own (e.g., within F cones), that is, that the potential connection between implicit labels and exemplars is everywhere necessary and defined, i.e. (3) that all propositions can be translated without informational loss into statements of the "has_a" form "A has B" therefore (4) there is no room for our distinction between the F operator implying the heredity of implicit labels (which assumes the possibility of both actual labels [substantives] and implicit labels but does not require their simultaneous presence), and the G operator, which may be thought of as of the form "A has B" where the G operator does not bestow hereditary properties when compounded in the form "A has B has C". [Actually, Aristotle states that all propositions can be expressed as "a belongs to b": ... to state attribution Aristotle does not say "B is A", but "to A, B belongs" (see Hamelin [1905] 1985: 158). Only the relation between primary substance and secondary substance is properly of an (inclusive) "a is b" nature.]