Thoughts about Policy Ecosystems: The Missing Links in AI Governance

post by Echo Huang (echo-huang) · 2025-02-01T01:54:54.333Z · LW · GW

Summary

Reading full research (with a complete reference list)

This article examines how voluntary governance frameworks in Corporate Social Responsibility (CSR) and AI domains can complement each other to create more effective AI governance systems. It compares ISO 26000 with the NIST AI RMF and draws out the findings, issues, and recommendations listed below.

Key findings:

- Current AI governance lacks standardized reporting mechanisms that exist in CSR

- Framework effectiveness depends on ecosystem integration rather than isolated implementation

- The CSR ecosystem model offers valuable lessons for AI governance

Main issues identified:

- Communication barriers between governance and technical implementation

- Rapid AI advancement outpacing policy development

- Lack of standardized metrics for AI risk assessment

Recommendations:

- Develop standardized AI risk reporting metrics comparable to GRI standards

- Create sector-specific implementation modules while maintaining baseline comparability

- Establish clear accountability mechanisms and verification protocols

- Build cross-border compliance integration

Understanding ISO 26000: A Model for Effective Policy Ecosystems

The Foundation and Evolution of ISO 26000

ISO 26000, published in 2010, is one of the most comprehensive attempts to create a global framework for social responsibility. Its development involved experts from over 90 countries and 40 international organizations. Unlike narrower technical frameworks, ISO 26000 takes a holistic approach to organizational accountability, recognizing that an organization's social and environmental impact directly affects its operational effectiveness.

What makes ISO 26000 particularly interesting is its ecosystem integration. The framework doesn't operate alone - it's part of a sophisticated web of interconnected standards, reporting mechanisms, and regulatory requirements. This integration isn't accidental; it's a deliberate response to the limitations of voluntary frameworks.

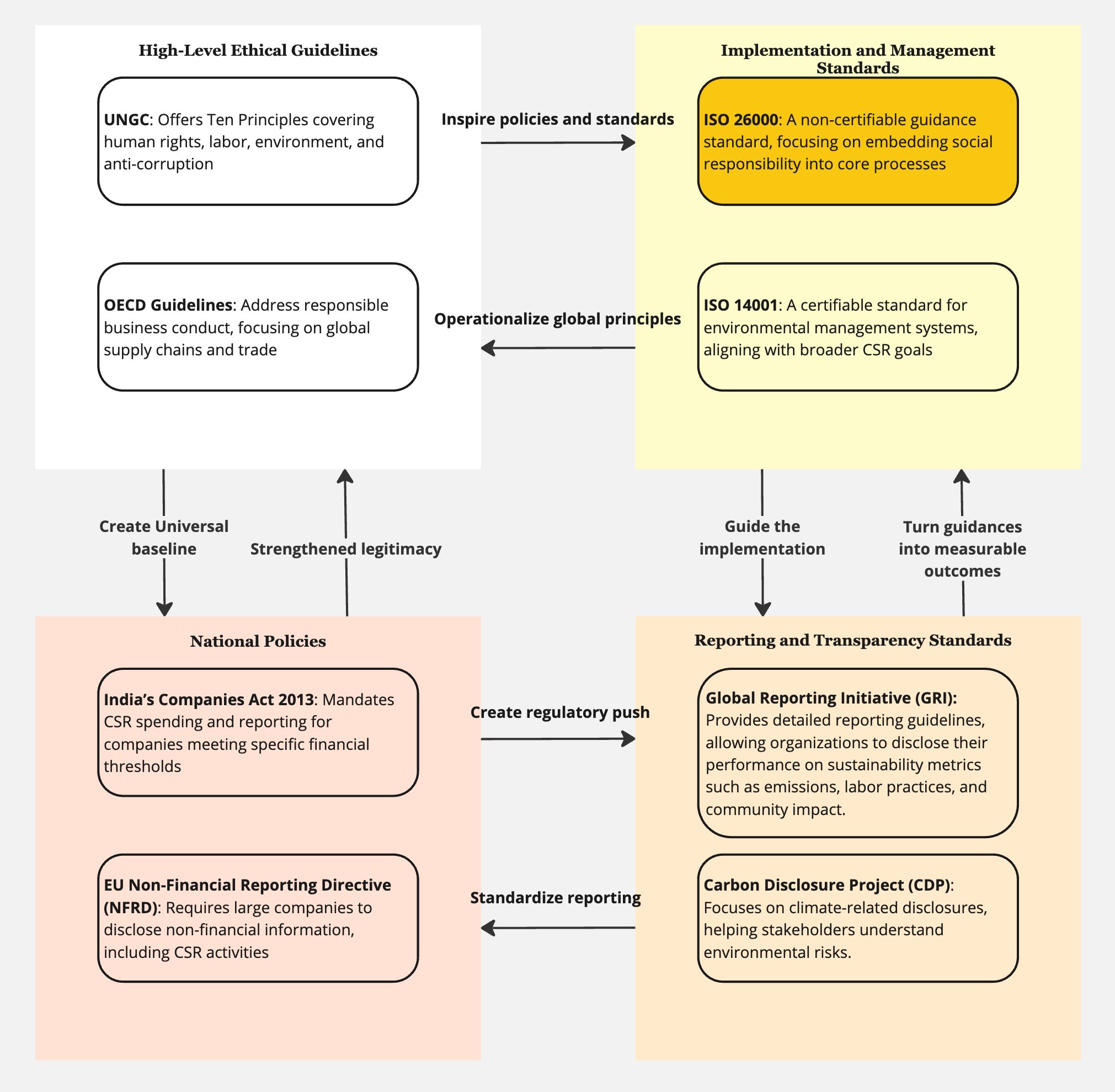

How the ISO 26000 Ecosystem Works

The ecosystem surrounding ISO 26000 operates through multiple layers of interaction. At its foundation, high-level ethical frameworks like the UN Global Compact and OECD Guidelines establish basic principles. ISO 26000 then transforms these principles into practical guidance, while maintaining enough flexibility for local adaptation.

The framework's effectiveness comes from its strategic position within this ecosystem. It serves three crucial functions:

- Clarifying CSR concepts and scope

- Supporting adaptation of global principles to local contexts

- Bridging various sustainability initiatives

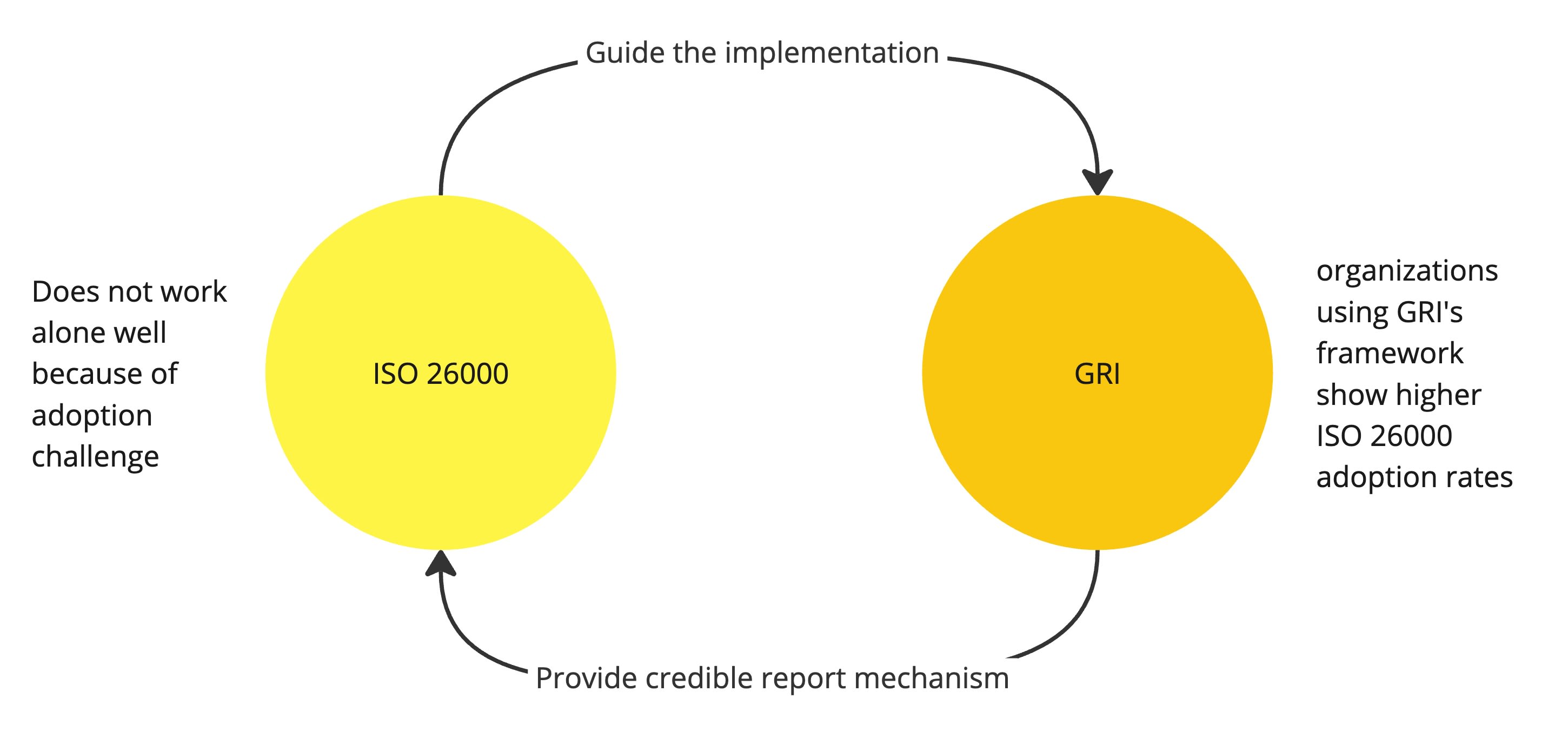

However, the real power of the ecosystem becomes apparent when we look at how different components work together. For example, while ISO 26000 provides comprehensive guidance, its non-certifiable nature could be a weakness. The ecosystem addresses this through complementary mechanisms like GRI (Global Reporting Initiative), which provides standardized metrics for measuring outcomes. Research shows that organizations using GRI's framework achieve higher ISO 26000 adoption rates, demonstrating how different parts of the ecosystem strengthen each other.

Evidence from Practice

Toyota's implementation of ISO 26000 in India provides a concrete example of how this ecosystem approach works in practice. The company successfully integrated global standards with local requirements by:

- Using ISO 26000 as a framework for social responsibility

- Aligning with Indian CSR mandates

- Implementing GRI reporting metrics

- Coordinating with ISO 14001 environmental standards

Learning from Ecosystem Dynamics

The ISO 26000 ecosystem reveals several important principles for effective governance:

- Voluntary frameworks work best when supported by complementary mechanisms. While ISO 26000 itself isn't certifiable, its integration with other standards and reporting frameworks creates effective accountability pathways.

- Successful implementation requires flexibility. The ecosystem approach allows organizations to adapt global principles to local contexts while maintaining consistency with international standards.

- Standardized reporting plays a crucial role. The GRI and CDP transform abstract principles into measurable outcomes, enabling meaningful evaluation of organizational performance.

The NIST AI RMF and Modern AI Governance: Understanding the Ecosystem

The NIST AI Risk Management Framework (AI RMF), launched in January 2023, represents a major effort to create practical AI governance. Its success or failure could significantly impact how we manage AI risks in the coming years. This analysis examines how the framework operates within the broader AI governance ecosystem.

The Framework's Structure

The NIST AI RMF approaches AI risk management through four interconnected functions:

- GOVERN: Builds organizational culture for risk management

- MAP: Identifies and contextualizes AI-related risks

- MEASURE: Provides tools for risk assessment

- MANAGE: Guides practical risk response

This structure offers systematic guidance while maintaining flexibility - an important feature given the diverse contexts where AI systems operate. Each function breaks down into specific actions and outcomes, providing concrete steps for organizations to follow.
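As a rough illustration of how the four functions could structure an internal risk register, here is a minimal Python sketch. The class names (`RmfFunction`, `RiskEntry`) and the example risk are hypothetical and are not part of the NIST framework itself; the sketch only shows one way to check that each identified risk has an action recorded under every function.

```python
from dataclasses import dataclass, field
from enum import Enum


class RmfFunction(Enum):
    """The four AI RMF functions named above (enum itself is illustrative)."""
    GOVERN = "govern"    # organizational culture for risk management
    MAP = "map"          # identify and contextualize risks
    MEASURE = "measure"  # assess risks
    MANAGE = "manage"    # respond to risks


@dataclass
class RiskEntry:
    """Hypothetical register entry: one risk, with one action per function."""
    description: str
    actions: dict = field(default_factory=dict)

    def missing_functions(self):
        # Functions for which no action has been recorded yet.
        return [f for f in RmfFunction if f not in self.actions]


entry = RiskEntry("Chatbot gives unsafe medical advice")
entry.actions[RmfFunction.MAP] = "Documented affected user groups"
entry.actions[RmfFunction.MEASURE] = "Red-team evaluation scheduled"
print([f.name for f in entry.missing_functions()])  # ['GOVERN', 'MANAGE']
```

A register like this makes gaps visible (here, no governance owner and no response plan), which is the kind of systematic coverage the four-function structure is meant to encourage.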

Current State of AI Governance

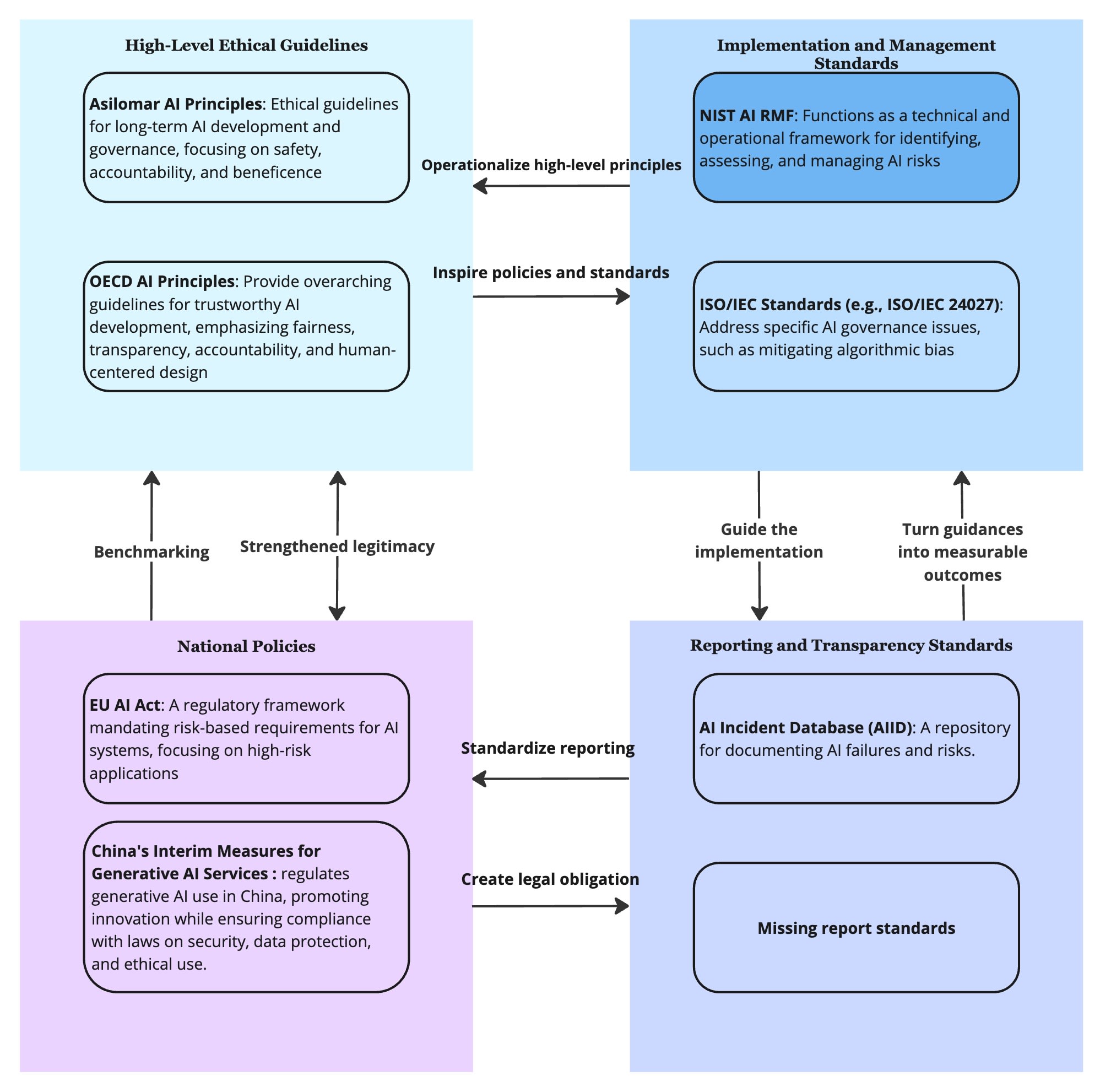

The AI governance ecosystem currently operates through several mechanisms:

First, high-level principles like the OECD AI Principles and Asilomar AI Principles establish foundational norms around fairness, transparency, and safety. The NIST AI RMF translates these abstract principles into practical risk management steps - similar to how ISO 26000 operates in the CSR domain, but with specific attention to AI challenges.

Second, technical standards bodies (like ISO/IEC JTC 1/SC 42) are developing specific guidelines for AI systems. These complement the NIST framework by addressing particular challenges like algorithmic bias.

Third, national and regional regulations (such as the EU AI Act and China's Generative AI measures) create legal frameworks for enforcement. These regulations often reference or incorporate elements from voluntary frameworks like NIST AI RMF.

Gaps in the Current System

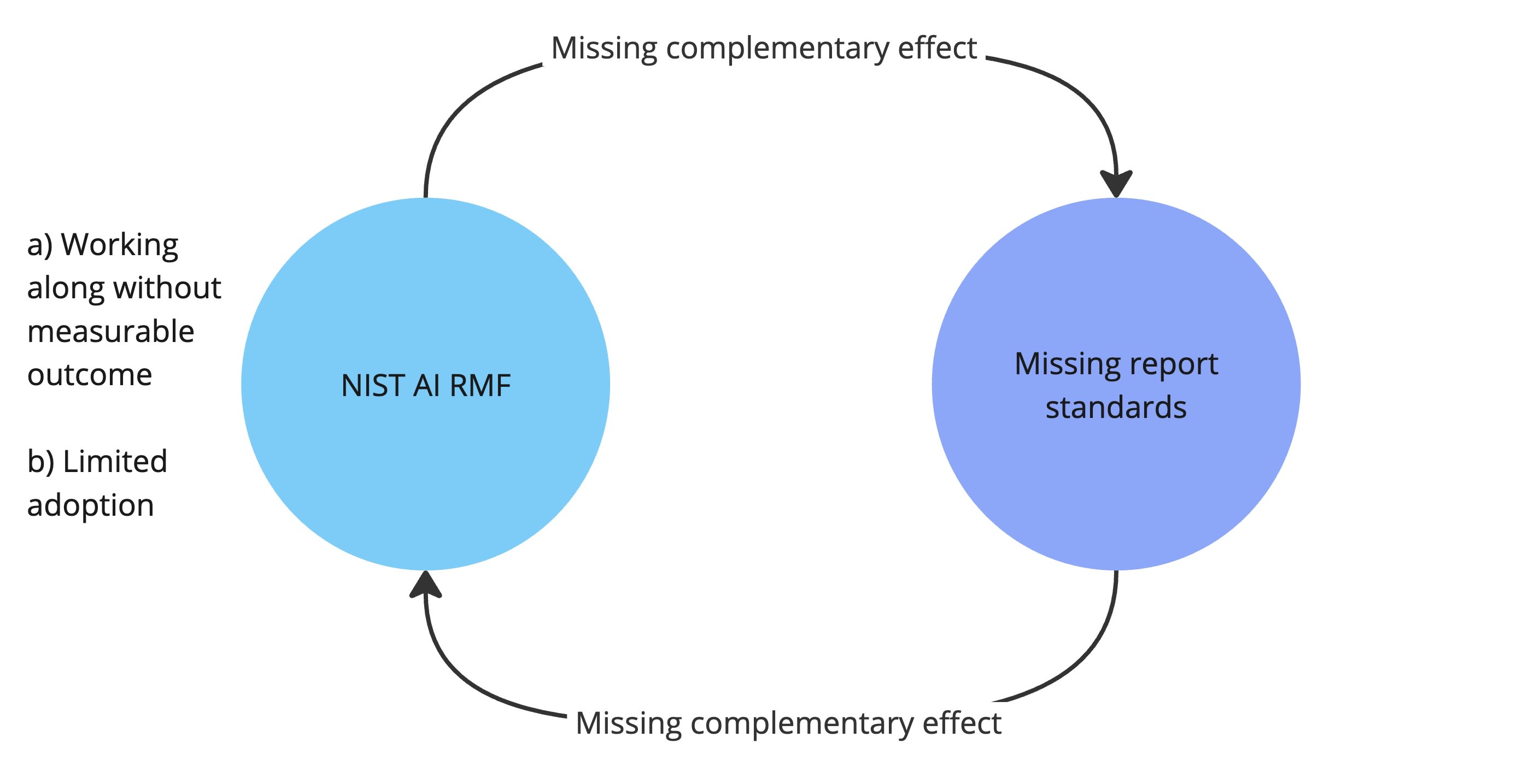

Despite these mechanisms, the AI governance ecosystem shows significant weaknesses:

Reporting Standards

The ecosystem lacks standardized reporting mechanisms comparable to GRI in the CSR domain. Current AI transparency reporting remains fragmented, with companies like Microsoft using inconsistent metrics and methodologies. A prominent initiative, the Artificial Intelligence Incident Database (AIID), illustrates the problem: it relies on voluntary submissions and has no regulatory backing. This fragmentation makes it difficult to:

- Compare AI systems across organizations

- Track progress in risk management

- Hold organizations accountable

Implementation Challenges

Organizations face several obstacles when implementing the framework:

- Communication barriers between policy and technical teams

- Rapid AI advancement outpacing governance mechanisms

- Complex AI systems resisting simple evaluation metrics

Coordination Problems

The current system struggles with:

- Global fragmentation in governance approaches

- Jurisdictional conflicts (like EU-specific requirements)

- Balancing innovation with risk management

Building Better AI Governance: Recommendations from CSR Experience

Current AI governance faces a critical problem: while frameworks like NIST AI RMF provide good guidelines, we lack standardized ways to measure and report AI risks. This creates real-world consequences, as seen in cases like UnitedHealth's problematic AI deployment. The CSR domain solved similar challenges through integrated reporting systems, offering valuable lessons for AI governance development.

Learning from CSR Governance Success

The CSR ecosystem demonstrates that effective governance requires more than guidelines: it also requires robust reporting and verification mechanisms. GRI's transformation of abstract ISO 26000 principles into measurable metrics made corporate responsibility tangible and comparable. This success stemmed from clear metrics, stakeholder engagement, and external verification processes, all while maintaining sector-specific flexibility.

Organizations using GRI standards consistently show higher ISO 26000 adoption rates and more meaningful sustainability improvements. This success offers a clear model for AI governance development, highlighting the importance of systematic measurement and verification.

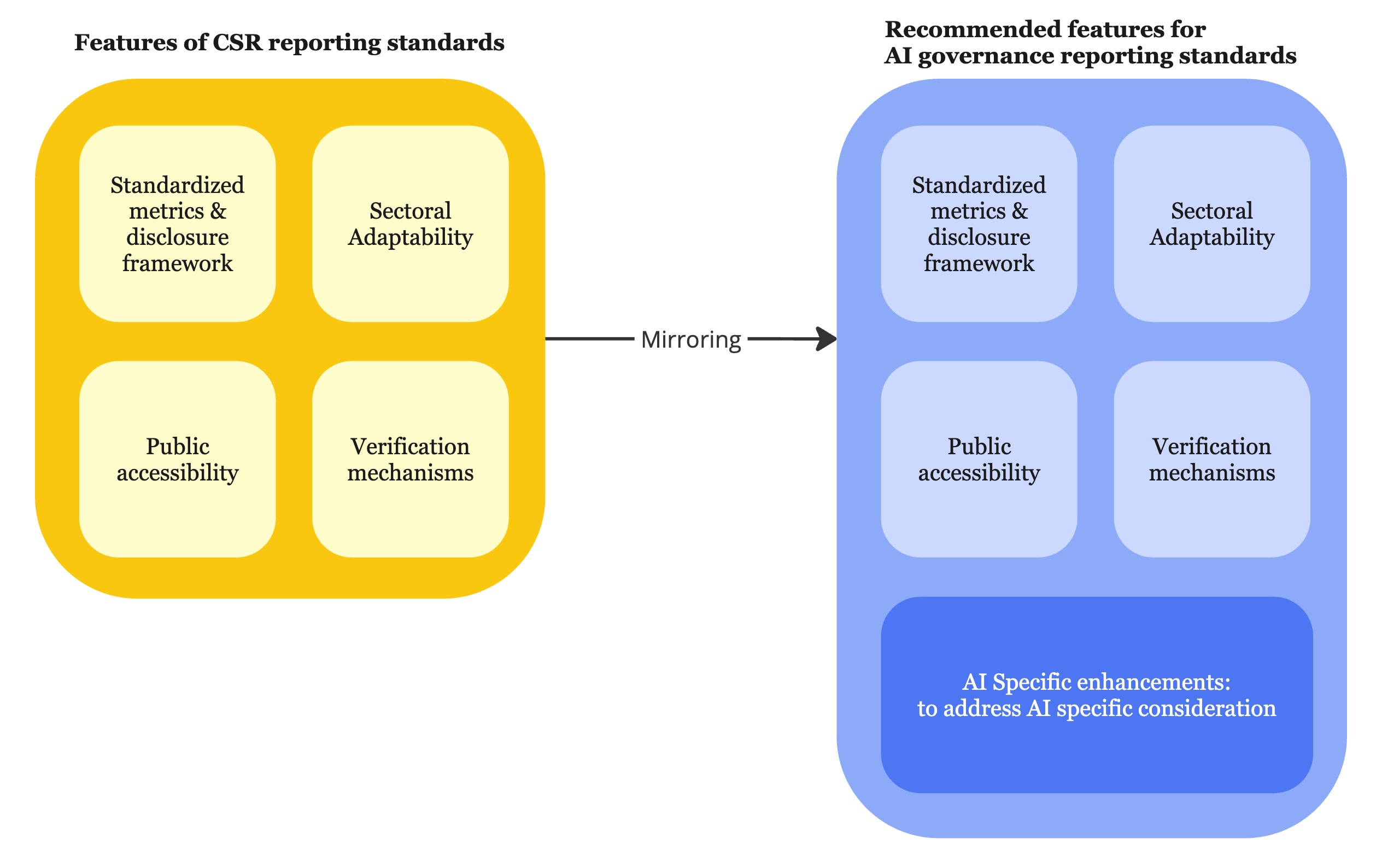

Requirements for Better AI Governance

Standardized metrics represent the foundation of effective AI governance. Drawing from CSR experience, we need comprehensive measures for algorithmic bias, model explainability, and system accountability. These metrics must balance consistency with contextual flexibility, acknowledging that different sectors may require varying approaches while maintaining comparable baselines.
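To make "a standardized measure for algorithmic bias" concrete, here is a minimal sketch of one widely discussed fairness metric, the demographic parity difference (the gap between the highest and lowest positive-decision rates across groups). The choice of this metric, the function names, and the sample data are illustrative assumptions; the article does not prescribe any particular metric.

```python
def selection_rate(decisions):
    """Share of positive (1) decisions in a list of 0/1 outcomes."""
    return sum(decisions) / len(decisions)


def demographic_parity_difference(decisions_by_group):
    """Gap between the highest and lowest selection rate across groups.

    0.0 means every group receives positive decisions at the same rate.
    """
    rates = [selection_rate(d) for d in decisions_by_group.values()]
    return max(rates) - min(rates)


# Made-up loan decisions (1 = approved) for two hypothetical groups.
sample = {
    "group_a": [1, 1, 0, 1],  # 75% approved
    "group_b": [1, 0, 0, 0],  # 25% approved
}
print(demographic_parity_difference(sample))  # 0.5
```

A single number like this is comparable across organizations, which is the property GRI-style reporting relies on. It is also only one lens on bias; a sector-specific module might require additional or different measures.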

Technical documentation in AI systems demands unprecedented detail and precision. Unlike traditional corporate processes, AI documentation must capture complex model architectures, training methodologies, and performance characteristics. This technical depth should integrate seamlessly with risk assessment protocols, creating a comprehensive view of system behavior and potential impacts.

The dynamic nature of AI systems necessitates a fundamental shift in reporting frequency. While traditional corporate reporting operates on annual cycles, AI systems require continuous monitoring and assessment. Regular system modification reports, emerging risk assessments, and performance drift monitoring must become standard practice, enabling rapid response to developing issues.
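As one possible shape for routine drift monitoring, the sketch below computes the Population Stability Index (PSI), a common statistic for comparing a reference distribution with a current one. The binning, the sample proportions, and the 0.25 alert threshold are assumptions chosen for illustration, not values drawn from NIST AI RMF or any reporting standard.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two lists of bin proportions (each summing to 1).

    Larger values indicate a bigger shift between the two distributions.
    """
    if len(expected) != len(actual):
        raise ValueError("expected and actual must have the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        # Clamp to eps so empty bins do not cause log(0) or division by zero.
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


ALERT_THRESHOLD = 0.25  # illustrative assumption, tune per deployment

baseline = [0.5, 0.5]   # share of model scores per bin at deployment time
this_week = [0.8, 0.2]  # share of model scores per bin in the latest window
if population_stability_index(baseline, this_week) > ALERT_THRESHOLD:
    print("drift alert: include in the next system modification report")
```

Running a check like this on every reporting window, rather than once a year, is the kind of shift in cadence the paragraph above argues for.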

Implementation Priorities

1. Metric Development and Standardization

Collaboration between technical experts and policymakers must lead to the development of a robust framework for measuring AI risk. This framework should establish clear baselines while allowing for sector-specific adaptation.
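One way to picture "clear baselines with sector-specific adaptation" is a report schema with a shared core plus optional sector modules. The following sketch is purely hypothetical: the field names, the sectors, and the `missing_fields` helper are invented for illustration and do not come from any existing standard.

```python
# Fields every report must contain, regardless of sector (hypothetical).
BASELINE_FIELDS = {"system_name", "bias_metric", "incident_count"}

# Extra fields required by each sector module (hypothetical).
SECTOR_FIELDS = {
    "healthcare": {"clinical_validation"},
    "finance": {"adverse_action_rate"},
}


def missing_fields(report, sector):
    """Return the required fields absent from a report, sorted by name."""
    required = BASELINE_FIELDS | SECTOR_FIELDS.get(sector, set())
    return sorted(required - set(report))


report = {"system_name": "triage-model", "bias_metric": 0.04, "incident_count": 2}
print(missing_fields(report, "healthcare"))  # ['clinical_validation']
```

Because the baseline fields are identical everywhere, reports stay comparable across sectors, while each module can add the measures its domain needs.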

2. Verification Infrastructure

Independent verification mechanisms must evolve alongside reporting standards. These mechanisms should combine technical expertise with practical implementation experience, ensuring meaningful oversight.

3. Cross-Border Integration

Global AI deployment demands harmonized reporting standards that work across jurisdictions while maintaining consistent benchmarks. This integration should reduce compliance redundancy while ensuring comprehensive coverage.

Possible Future Research Directions

Future research must address two fundamental challenges. First, ecosystem effectiveness evaluation requires a systematic analysis of how voluntary frameworks interact with regulations and how different standards influence organizational behavior. This research should examine both successful and failed governance initiatives and identify key factors for effective implementation.

Second, metrics development demands rigorous investigation into standardized measures for AI trustworthiness. This research must balance technical precision with practical applicability, ensuring that resulting metrics serve both oversight and improvement purposes.

Clarification

This analysis was conducted as a final project for the AI Governance course at AI Safety Hungary, benefiting from their guidance and oversight. The research aims to contribute to the growing body of work on AI governance ecosystems while acknowledging its limitations as an academic exercise.

I appreciate constructive dialogue and critical examination of the arguments and recommendations presented. For further discussion or to provide feedback, please contact: huihan@uni.minerva.edu
