The map of the risks of aliens

post by turchin · 2016-08-22T19:05:55.715Z · 21 comments


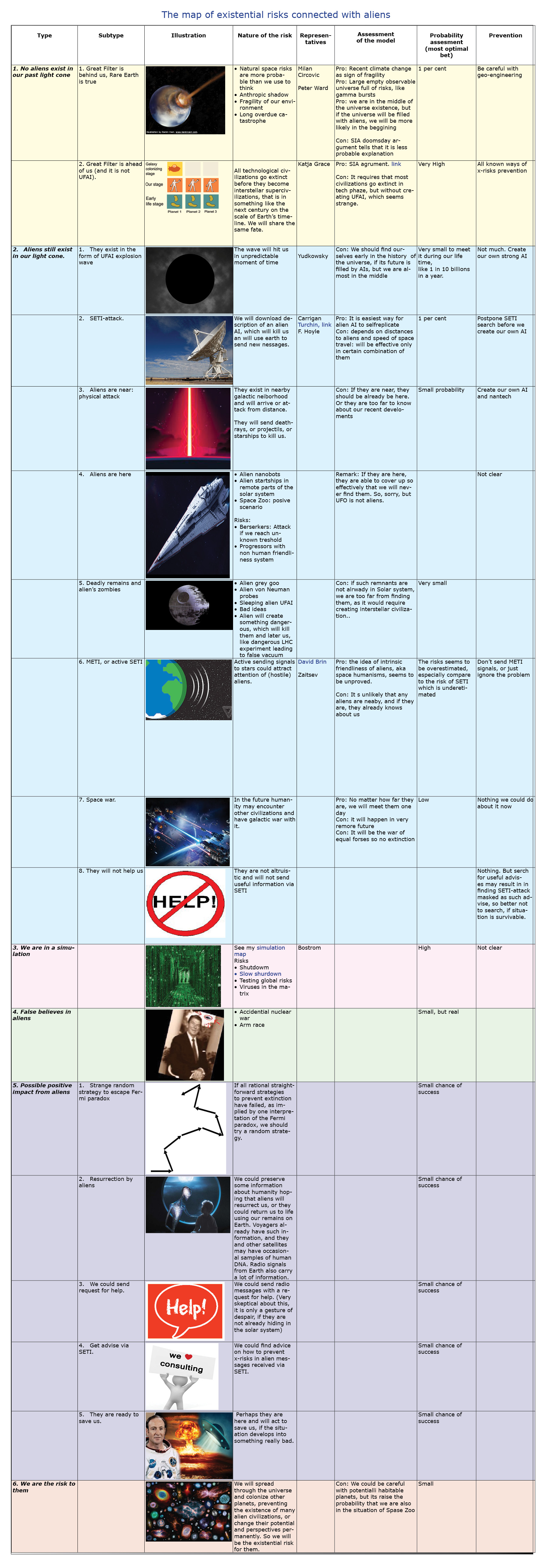

Stephen Hawking famously said that aliens are one of the main risks to human existence. In this map I will try to show all the rational ways in which aliens could cause human extinction. Paradoxically, even if aliens don't exist, we may be in even greater danger.

1. No aliens exist in our past light cone

1a. The Great Filter is behind us, so Rare Earth is true. There are natural forces in our universe which are hostile to life on Earth, but we don't know whether they are still active. We strongly underestimate such forces because of the anthropic shadow. Such still-active forces could include gamma-ray bursts (and other types of cosmic explosions, like magnetars), the instability of Earth's atmosphere, and the frequency of large-scale volcanism and asteroid impacts. We may also underestimate how fragile our environment is and how sensitive it is to small human influences; for example, global warming could become runaway global warming.

1b. The Great Filter is ahead of us (and it is not UFAI). Katja Grace shows that this is a much more probable solution to the Fermi paradox, based on one particular version of the Doomsday argument, the SIA (Self-Indication Assumption) Doomsday argument. All technological civilizations go extinct before they become interstellar supercivilizations, that is, within something like the next century on Earth's timeline. This is consistent with our observation that new technologies create ever stronger means of destruction, available to ever smaller groups of people, and that this process is exponential. So all civilizations terminate themselves before they can create AI, or their AI is unstable and self-terminates too (I have explained elsewhere why this could happen).

2. Aliens still exist in our light cone.

a) They exist in the form of a UFAI explosion wave, which is travelling through space at the speed of light. EY (Eliezer Yudkowsky) thinks that this will be a natural outcome of the evolution of AI. By definition we can't see the wave, and we can find ourselves only in regions of the Universe that it hasn't yet reached. If we create our own AI wave capable of conquering a large part of the Galaxy, we may be safe from an alien AI wave. Such a wave could have started very far away, but sooner or later it would reach us. The anthropic shadow distorts our estimates of its probability.

b) SETI attack. Aliens exist very far away from us, so they can't reach us physically (yet), but they are able to send information. Here there is a risk of a SETI attack: aliens could send us a description of a computer and a program which is an AI, and this would convert the Earth into another transmitting outpost. Because each successful attack creates a new sender, such messages should dominate among all SETI messages. As our radio telescopes and other instruments grow stronger, our chances of finding messages from them increase.

c) Aliens are near (several hundred light years away) and know about the Earth, so they have already sent physical spaceships (or other weapons) to us, because they have found signs of our technological development and don't want enemies in their neighborhood. They could send near-light-speed projectiles or particle beams on an exact collision course with Earth, but this seems improbable: if they are so near, why haven't they reached Earth yet?

d) Aliens are here. Alien nanobots could be in my room now, and there is no way I could detect them. But sooner or later, advancing human technology will be able to find them, which will result in some form of confrontation. If aliens are here, they could be in "Berserker" mode, i.e. they wait until humanity reaches some unknown threshold and then attack. Aliens may be actively participating in Earth's progress, like "progressors", but the main problem is that their idea of a positive outcome may not be aligned with our values (like the problem of FAI).

e) Deadly remains and alien zombies. Aliens have suffered some kind of existential catastrophe, and its consequences will affect us. If they triggered a vacuum phase transition during accelerator experiments, it could reach us at the speed of light without warning. If they created self-replicating non-sentient nanobots (grey goo), it could travel as interstellar stardust and convert all solid matter into nanobots, so we could encounter such a grey goo wave in space. If they created at least one von Neumann probe with narrow AI, it could still conquer the Universe and be dangerous to Earthlings. If their AI crashed, it could leave semi-intelligent remnants with a random and crazy goal system roaming the Universe. (But these would probably evolve into a colonization wave of von Neumann probes anyway.) If we find their planet or artifacts, these could still carry dangerous tech like dormant AI programs, nanobots or bacteria. (Vernor Vinge used this idea as the starting point of the plot of his novel "A Fire Upon the Deep".)

f) We could attract the attention of aliens through METI. By sending signals to stars in order to initiate communication, we could tell potentially hostile aliens our position in space. Some people, like Zaitsev, advocate for it; others are strongly opposed. In my opinion the risks of METI are smaller than those of SETI, as our radio signals can reach only the nearest few hundred light years before we create our own strong AI. So we will be able to repel the most plausible forms of space aggression. Using SETI, however, we are able to receive signals from much greater distances, perhaps as far as one billion light years, if aliens convert their entire home galaxy into a large screen on which they draw a static picture, using individual stars as pixels. They would use von Neumann probes and complex algorithms to draw such a picture, and I estimate that it could present messages as large as 1 Gb and would be visible to half of the Universe. So SETI is exposed to a much larger part of the Universe (perhaps as many as 10^10 times more stars), and the danger of SETI is also immediate, not a hundred years from now.

g) Space war. During future space exploration, humanity may encounter aliens in the Galaxy at the same level of development, which may result in classical star wars.

h) They will not help us. They are here or nearby, but have decided not to help us prevent x-risks, or (if they are far away) not to broadcast via SETI information about the most important x-risks and proven ways of preventing them. So they are not altruistic enough to save us from x-risks.

3. If we are in a simulation, then the owners of the simulation are aliens to us, and they could switch the simulation off. A slow switch-off is possible, and under some conditions it will be the main observable form of switch-off.

4. False beliefs in aliens may result in incorrect decisions. Ronald Reagan saw something which he thought was a UFO (it was not), and he also had early-onset Alzheimer's, which may be one of the reasons he invested heavily in the creation of SDI, which in turn provoked a stronger confrontation with the USSR. (BTW, this is only my conjecture, but I use it as an illustration of how false beliefs may result in wrong decisions.)

5. Using aliens to prevent x-risks:

1. Strange strategy. If all rational straightforward strategies to prevent extinction have failed, as implied by one interpretation of the Fermi paradox, we should try a random strategy.

2. Resurrection by aliens. We could preserve some information about humanity in the hope that aliens will resurrect us, or they could bring us back to life using our remains on Earth. The Voyager probes already carry such information, and they and other spacecraft may carry incidental samples of human DNA. Radio signals from Earth also carry a lot of information.

3. Request for help. We could send radio messages requesting help. (I am very skeptical about this; it is only a gesture of despair, unless they are already hiding in the solar system.)

4. Get advice via SETI. We could find advice on how to prevent x-risks in alien messages received via SETI.

5. They are ready to save us. Perhaps they are here and will act to save us, if the situation develops into something really bad.

6. We are the risk for future aliens. We will spread through the universe and colonize other planets, preventing the existence of many alien civilizations or permanently changing their potential and prospects. So we will be the existential risk for them.

In total, there are several significant-probability risks, mostly connected with solutions to the Fermi paradox. No matter where the Great Filter is, we are at risk. If we have passed it, we live in a fragile universe, but the most probable conclusion is that the Great Filter lies very soon ahead of us.

Another important point is the risk of passive SETI, which is the most plausible way we could encounter aliens in the near-term future.

There is also an important risk that we are in a simulation, but one created not by our possible ancestors but by aliens, who may have much less compassion for us (or by a UFAI). In the latter case the simulation may be modeling an unpleasant future, including large-scale catastrophes and human suffering.

The pdf is here:

21 comments


comment by James_Miller · 2016-08-22T19:32:21.358Z

Excellent. My personal theory is that the universe is fine-tuned both for life and for the Fermi paradox with a late Great Filter, because across the multiverse most lifeforms like us will exist in such universes. In part this is because, without a Great Filter, intelligent life will quickly turn into something not in our reference class, then use all the resources of its universe and so make that universe inhospitable to life in our reference class.

↑ comment by turchin · 2016-08-22T20:01:46.039Z

Really interesting turn. As I understand it, you mean that in some universes UFAI will eat almost all matter quickly, and there will not be many other Earth-like civilizations, but in other universes with a late non-AI filter there will be more instances of Earth-like civilizations.

If so, what could such a filter be? It should be a property of the universe itself, not of a civilization, so probably it should be something like a black hole catastrophe in a hadron collider? (It also should be local, not a false vacuum transition, for the same reasons.) Or simple runaway global warming? But that is not universal enough.

↑ comment by James_Miller · 2016-08-22T21:29:35.259Z

Yes, this is what I mean. Part of what makes it an effective filter might be that it's hard to detect. If it's a "black hole catastrophe in a hadron collider", then it's technologically much easier to build the collider than to realize that the collider will create a black hole. I've suggested that this kind of possibility should cause us to put lots of resources into looking for the ruins of dead alien civilizations.

↑ comment by turchin · 2016-08-22T21:45:32.621Z

One possible way to argue against this grim perspective is to suggest that probability is distorted by the simulation argument. If at least one super AI is created, it will create millions of simulations (e.g., to numerically solve the Fermi paradox), and these will outweigh the number of real civilizations. But will that save them from the said black hole?

↑ comment by James_Miller · 2016-08-23T00:55:41.205Z

Then the Fermi simulation paradox is "why is the universe so old?" If the universe gets quickly colonized then most of the simulations of civilizations that have not yet made contact with aliens will have universes much younger than ours.

↑ comment by Prometheus · 2016-08-27T05:15:12.507Z

It could be the universe is only "old" by our standards. Maybe a few trillion years is a very young universe by normal standards, and it's only because we've been observing a simulation that it seems to be an "old" universe.

↑ comment by James_Miller · 2016-08-27T06:02:31.278Z

This is certainly possible. But if we are in a simulation of the base universe then it's strange that we experience the Fermi paradox given the universe's apparent age.

↑ comment by torekp · 2016-08-29T22:23:30.023Z

Can you please clarify "our reference class"? And are you using some form of Self-Sampling Assumption?

↑ comment by James_Miller · 2016-08-30T04:21:39.028Z

It's meant to be vague and you are right to call me on it.

comment by Prometheus · 2016-08-27T04:58:31.148Z

There's also the possibility that the universe is filled with aliens, but they are quiet in order to hide themselves from a more advanced alien civilization or UFAI. This advanced civilization or UFAI acts as a Great Filter for those who do not have the sense to conceal themselves from it. This would assume that aliens somehow had a way of detecting the presence of this threat, perhaps by intercepting messages from alien civilizations before they were destroyed by it. Either that, or there is no way of detecting the aliens or UFAI, and all civilizations are doomed to be destroyed by it as soon as they start emitting radio signals.

comment by vinceguilligan · 2016-08-22T22:53:27.424Z

There are other possibilities not discussed here: we may be the creation of aliens, and they may have contact with humanity but intervene little.

↑ comment by James_Miller · 2016-08-23T00:59:57.316Z

If the zoo hypothesis is correct, then aliens are massively interfering with us by giving us a false understanding of the fate of life in the universe, sort of like a doctor falsely telling a patient that everyone with the patient's condition has died. The patient might engage in risky activities that he otherwise wouldn't have.

↑ comment by turchin · 2016-08-22T23:30:12.743Z

The map discusses only risks, not all possible solutions to the Fermi paradox (there will be another map about that). If we are the creation of aliens, the question is where they are now: if they are still here, they could terminate us.

If there is contact but no intervention, so that they send us no useful or dangerous knowledge but just "Hi", then there is no risk (but there may be an opportunity cost, as they will not provide important information on how to prevent risks).

comment by morganism · 2016-08-23T00:13:18.693Z

There is also the risk of a non-AI wave, another panspermia event.

If there were another wave of optimized bio-life traveling on the galactic wind, we could be inundated with fast-growing and very flexible life forms that could fill all biological niches or take up all free nutrients.

Outcompeted in evolution by a microbe would be distressing...

↑ comment by morganism · 2016-08-23T00:31:27.753Z

There is also the possibility that what we know about stellar evolution is way off.

It is possible that larger stars flare more often than we think.

It is also possible that all the theories of disc accretion are totally wrong. One of the first discovered discs has already dissipated. There are lots of problems with all the planetesimal formation theories. Micron, centimeter, meter and kilometer models all show disruption in even slow collisions. Meteorites all show major fractionation and re-cementing with mud: combinations of glass crystals, amorphous glasses, and crystalline minerals, with more than two orders of magnitude difference in formation temperatures. Major planets gain and lose angular momentum and change places in the solar system. If disc accretion theory is wrong, it would appear that the only reason we have lasted this long is that we are 4 degrees off the plane of the solar system. It seems to me that entire planetary cores must be tossed out of the star and wander through the system, disrupting rotations and orbits. The cores of the ice giants are reputed to be near the size of the Jovian moons, and so are Mars and Earth's Moon. Just saying...

↑ comment by turchin · 2016-08-23T02:22:29.696Z

It all supports the Rare Earth idea. But there are two types of factors: those which have ended, and those which are still here, like the absence of a nearby gamma-ray burst. The second type is a real danger, as some of them may be long overdue.

The best situation would be that Rare Earth theory is true but all dangers are in the past. But that also means that the future of the whole Universe is in the hands of humankind.

comment by ThisSpaceAvailable · 2016-08-23T18:06:14.602Z

"Also there are important risks that we are in simulation, but that it is created not by our possible ancestors"

Do you mean "descendants"?
