(Extremely) Naive Gradient Hacking Doesn't Work

post by ojorgensen · 2022-12-20T14:35:33.591Z


Tldr

A super simple form of gradient hacking might be for a model to perform badly whenever the value of its mesa-objective changes, in order to preserve that mesa-objective. I use some simple properties of SGD to explain why this method of gradient hacking cannot work.

Thanks to Jacob Trachtman, Jakub Kraus, and Francis Priestland for feedback. Thanks also to Evan Hubinger for responding to my questions.

Assumed Knowledge

I think this post will be most valuable for those who have some familiarity with the alignment problem (in particular, an understanding of inner misalignment and mesa-optimisation). Having done something like the AGI Safety Fundamentals (AGISF) course would be more than sufficient for reading this.

As an introduction to mesa-optimisation I’d recommend Risks from Learned Optimization: Introduction by Evan Hubinger.

A naive description of gradient hacking

Gradient hacking is the hypothesised phenomenon whereby a model becomes aware of its training process, and then tries to influence the training process in some way that helps it to pursue its own objective (its mesa-objective).

If possible, one of the simplest ways a model could try to keep pursuing its mesa-objective is to ensure that the mesa-objective is left unchanged by each iteration of SGD. A hypothetical example of this, first introduced by Evan Hubinger and which he no longer endorses, is as follows[1].

Suppose a model can check if its objective satisfies some criterion, and perform extremely badly if the criterion doesn’t hold. This means that the model can prevent gradient descent from changing its objective, since any change to its objective would lead to extremely bad performance.

I’ll call this strategy Naive Gradient Hacking, or NGH for short. I’m going to briefly explain why gradient hacking cannot look like this, by looking at how SGD works. For an exploration of what gradient hacking could look like, I’d recommend checking out Richard Ngo's posts.

How does SGD Work?

Let $x$ be some input to our model $f$, which is a neural network with parameter vector $\theta$. Assume that SGD is being used to minimise some loss function $L$ of $f_\theta(x)$.[2]

There are four important aspects of SGD that I want to convey here, which are necessary to understand the problems with Naive Gradient Hacking.

Point 1

First, recall that the change to any parameter $\theta_i$ due to a single iteration of SGD is $\Delta\theta_i = -\eta \frac{\partial L(f_\theta(x))}{\partial \theta_i}$, where $x$ is a single input, and $\eta$ is the learning rate (some small positive number).

This gives us point 1: when changing a parameter, SGD cares only about the partial derivative of the loss of our model $f$ with respect to that parameter, for some given input $x$.
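As an illustration of point 1, here is a minimal sketch of one SGD step on a single parameter. The toy model $f_w(x) = w x$, the squared-error loss, and the names `loss`, `loss_grad_w` and `sgd_step` are hypothetical choices made only for illustration. The point is simply that the update is computed from $\partial L / \partial w$ at the one input $x$, and from nothing else.

```python
# Hypothetical toy model f_w(x) = w * x with squared-error loss
# L = (f_w(x) - y) ** 2, chosen only to make one SGD update concrete.


def loss(w: float, x: float, y: float) -> float:
    """Squared-error loss of the toy model on a single example (x, y)."""
    return (w * x - y) ** 2


def loss_grad_w(w: float, x: float, y: float) -> float:
    """Exact partial derivative dL/dw = 2 * (w * x - y) * x."""
    return 2.0 * (w * x - y) * x


def sgd_step(w: float, x: float, y: float, lr: float) -> float:
    """One SGD update, delta_w = -lr * dL/dw, using only this single input."""
    return w - lr * loss_grad_w(w, x, y)


if __name__ == "__main__":
    w = 0.5
    new_w = sgd_step(w, x=2.0, y=3.0, lr=0.1)
    print(f"w: {w} -> {new_w:.3f}, "
          f"loss: {loss(w, 2.0, 3.0):.3f} -> {loss(new_w, 2.0, 3.0):.3f}")
```

With these numbers the step moves $w$ from 0.5 to about 1.3, and the loss on that example drops from 4 to about 0.16.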

Point 2

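The partial derivative here is defined by the following equation, where $f_{\theta_i + h}$ denotes the model with $\theta_i$ shifted by $h$ and all other parameters held fixed:

$$\frac{\partial L(f_\theta(x))}{\partial \theta_i} = \lim_{h \to 0} \frac{L(f_{\theta_i + h}(x)) - L(f_\theta(x))}{h}$$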

This is essentially the same as asking “if I change parameter $\theta_i$ by an infinitesimal amount, how much will it increase the loss function evaluated on the output of the model $f$ at some input $x$?”

This gives us point 2: The partial derivative describes how a tiny change to some parameter $\theta_i$ would affect the loss function of our model $f$, for some given input $x$.
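The limit definition of the partial derivative can be approximated numerically by plugging in ever smaller values of $h$. The sketch below does this for a hypothetical two-parameter loss. The formula in `toy_loss` and the helper names are illustrative assumptions, not part of the original argument. As $h$ shrinks, the difference quotient for the first parameter approaches the exact partial derivative.

```python
import math
from typing import Callable, Sequence


def toy_loss(theta: Sequence[float]) -> float:
    """Hypothetical smooth loss of two parameters, at some fixed input x."""
    a, b = theta
    return math.sin(a) * b + a ** 2


def exact_partial_a(theta: Sequence[float]) -> float:
    """Exact dL/da for toy_loss, derived by hand: cos(a) * b + 2a."""
    a, b = theta
    return math.cos(a) * b + 2 * a


def difference_quotient(loss: Callable[[Sequence[float]], float],
                        theta: Sequence[float], i: int, h: float) -> float:
    """(L(theta_i + h) - L(theta)) / h, with all other parameters held fixed."""
    shifted = list(theta)
    shifted[i] += h
    return (loss(shifted) - loss(theta)) / h


if __name__ == "__main__":
    theta = [0.3, 2.0]
    exact = exact_partial_a(theta)
    for h in (1e-1, 1e-3, 1e-5):
        approx = difference_quotient(toy_loss, theta, 0, h)
        print(f"h={h:g}  quotient={approx:.6f}  error={abs(approx - exact):.2e}")
```

The error shrinks roughly in proportion to $h$, which is what we expect from this one-sided quotient.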

Point 3

Note that the definition of a partial derivative is a limit as $h$ approaches 0. There are two ways we might try to calculate this limit: approximating it numerically by changing $\theta_i$ by some tiny amount (finite differences), or calculating it exactly. In practice, we take the exact approach, through a technique known as backpropagation. It works by viewing the output as the composition of many simple functions, and using the chain rule to combine the derivatives of these simple functions to get our final answer. Since we know the exact derivatives of the simple functions, we can then calculate the derivative of our large neural network.

This gives us point 3: The method used to compute partial derivatives is backpropagation, which computes derivatives exactly (up to floating-point rounding). It makes this computation solely by inspecting how the output of the model is generated, without ever actually perturbing the parameters.
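To make point 3 concrete, here is a deliberately tiny reverse-mode automatic differentiation sketch. It follows the spirit of backpropagation, but it is not the implementation used by any particular framework. The class name `Var` and the example loss $(\tanh(w x) - y)^2$ are assumptions made for illustration. Each operation records its local derivative, and `backward` combines these with the chain rule. No parameter is ever perturbed, and the result matches the hand-derived derivative up to floating-point rounding.

```python
import math


class Var:
    """A scalar node in a computation graph, recording how it was produced."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # Each parent is stored with the local derivative d(self)/d(parent).
        self.parents = parents

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __sub__(self, other):
        return Var(self.value - other.value, ((self, 1.0), (other, -1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    def tanh(self):
        t = math.tanh(self.value)
        return Var(t, ((self, 1.0 - t * t),))

    def backward(self):
        """Propagate d(self)/d(node) to every node via the chain rule."""
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)  # inputs end up before outputs

        visit(self)
        self.grad = 1.0
        for node in reversed(order):  # outputs first, so node.grad is final
            for parent, local in node.parents:
                parent.grad += local * node.grad


if __name__ == "__main__":
    w, x, y = Var(0.7), Var(1.5), Var(0.2)
    residual = (w * x).tanh() - y
    loss = residual * residual
    loss.backward()

    # Hand-derived derivative of (tanh(w*x) - y)^2 with respect to w.
    t = math.tanh(0.7 * 1.5)
    by_hand = 2 * (t - 0.2) * (1 - t * t) * 1.5
    print(w.grad, by_hand)
```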

Point 4

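Look carefully at the equation for partial differentiation again.

$$\frac{\partial L(f_\theta(x))}{\partial \theta_i} = \lim_{h \to 0} \frac{L(f_{\theta_i + h}(x)) - L(f_\theta(x))}{h}$$

Although we specify that $h$ must tend to $0$, we never specify which direction it must approach from: it could start as a positive number, or a negative number! Is this an oversight? Have we been too loosey-goosey in our notation?

It is super important that the answer to this is no. For a partial derivative to exist, the limit must be the same in both directions. If we take $h > 0$ to be sufficiently small, then approximately

$$\frac{L(f_{\theta_i + h}(x)) - L(f_\theta(x))}{h} \approx \frac{L(f_{\theta_i - h}(x)) - L(f_\theta(x))}{-h}$$

If we cancel the $h$ in the denominators and move the negative sign up, this gives us that

$$L(f_{\theta_i + h}(x)) - L(f_\theta(x)) \approx -\left(L(f_{\theta_i - h}(x)) - L(f_\theta(x))\right)$$

This has an important consequence: to first order, reducing the parameter by a tiny amount must have an effect on the loss equal in size but opposite in sign to increasing it by the same amount!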

This gives us point 4: if changing the parameter a tiny amount in one direction increases our loss (at first order, i.e. the partial derivative is nonzero), then changing the parameter a tiny amount in the other direction must decrease our loss.
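Point 4 can be checked numerically. The sketch below uses a hypothetical smooth one-parameter loss, `smooth_loss`, chosen only for illustration. It compares the change in loss from a small step $h$ up with the change from the same step down. The two changes are nearly equal and opposite, and their sum shrinks like $h^2$. That sum is the second-order term the first-order argument ignores.

```python
import math


def smooth_loss(theta: float) -> float:
    """Hypothetical smooth loss as a function of one parameter."""
    return math.exp(0.5 * theta) + math.cos(3.0 * theta)


def up_and_down(loss, theta: float, h: float):
    """Change in loss from nudging theta up by h, and from nudging it down by h."""
    base = loss(theta)
    return loss(theta + h) - base, loss(theta - h) - base


if __name__ == "__main__":
    theta = 0.4
    for h in (1e-1, 1e-2, 1e-3):
        up, down = up_and_down(smooth_loss, theta, h)
        # For a differentiable loss, up is roughly -down; up + down is O(h^2).
        print(f"h={h:g}  up={up:+.3e}  down={down:+.3e}  up+down={up + down:+.1e}")
```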

What does this mean for NGH

Assume as a simplification that there is some single parameter $\theta_{\text{mesa}}$ that corresponds to the mesa-objective of our model $f$. Now, assume that the model is optimising to prevent $\theta_{\text{mesa}}$ from being updated by the next iteration of SGD. The simplifying assumptions here (that there is one parameter that the model is trying to keep constant) are worth noting, but I don’t think they are essential to the argument.

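Let’s revisit the equation for gradient descent, now in terms of the mesa-objective:

$$\Delta\theta_{\text{mesa}} = -\eta \frac{\partial L(f_\theta(x))}{\partial \theta_{\text{mesa}}}$$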

If the model wants to keep $\theta_{\text{mesa}}$ constant, then it needs the RHS of this equation to equal $0$. Since the learning rate $\eta$ is positive, the only way to preserve the mesa-objective is if the partial derivative is computed to be 0, meaning that changing the mesa-objective in some small way does not affect the loss function at that input.

This is very different to the strategy suggested by NGH. Here it is again as a reminder:

Suppose the model can check if its objective satisfies some criterion, and perform extremely badly if it doesn’t. This means that the model can prevent gradient descent from changing its objective, since any change to its objective would lead to extremely bad performance.

Let’s try to understand why the strategy described by NGH cannot be successful here.

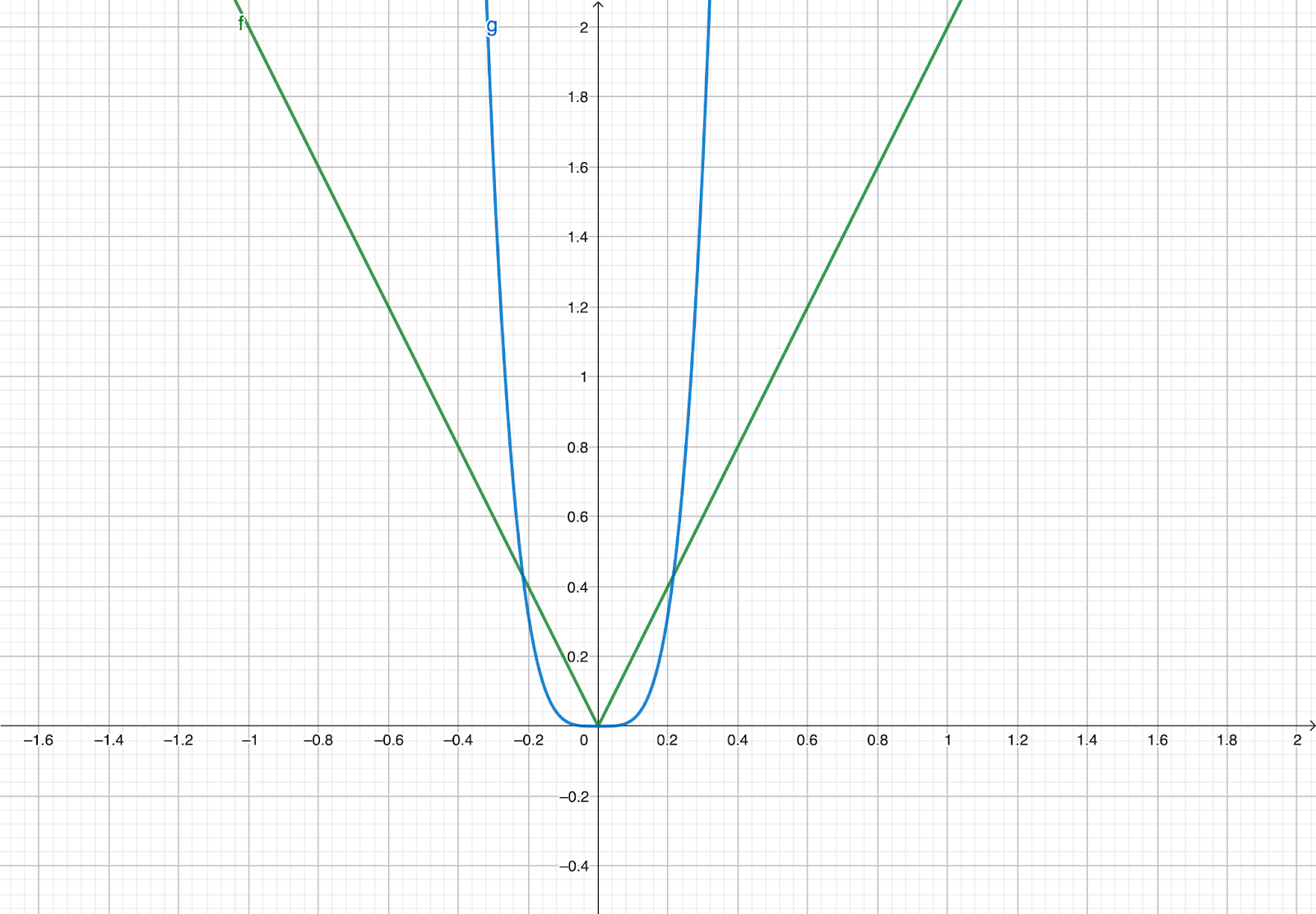

Firstly, recall point 4 above: if changing a parameter a tiny amount in one direction increases our loss, then changing the parameter a tiny amount in the other direction must decrease our loss. Hence, the model cannot start performing extremely badly upon an infinitesimal change to $\theta_{\text{mesa}}$ in either direction: worse performance from increasing $\theta_{\text{mesa}}$ by some tiny amount must be coupled with better performance from decreasing $\theta_{\text{mesa}}$ by some tiny amount, and vice versa. To help picture this, think of the loss landscape as locally a straight line: it cannot have a jump or a kink at the current value of $\theta_{\text{mesa}}$!
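The naive strategy amounts to a loss that jumps to a huge value for any change to the mesa-objective parameter, however small. The hypothetical `naive_hacking_loss` below, with an illustrative protected value, penalty size and base loss, shows why that is not a differentiable function. As $h \to 0$, the difference quotients grow without bound, and they have opposite signs on the two sides, so no partial derivative exists. Under footnote 2's assumption that the loss is differentiable, this shape is simply unavailable.

```python
THETA_STAR = 1.0  # hypothetical value of the mesa-objective the model protects
PENALTY = 1e6     # hypothetical "perform extremely badly" loss
BASE_LOSS = 0.1   # hypothetical loss when the criterion holds


def naive_hacking_loss(theta_mesa: float) -> float:
    """Loss that is low only at exactly THETA_STAR and huge for any other value."""
    return BASE_LOSS if theta_mesa == THETA_STAR else PENALTY


def one_sided_quotients(loss, theta: float, h: float):
    """Difference quotients approaching theta from the right and from the left."""
    right = (loss(theta + h) - loss(theta)) / h
    left = (loss(theta - h) - loss(theta)) / (-h)
    return right, left


if __name__ == "__main__":
    for h in (1e-1, 1e-3, 1e-6):
        right, left = one_sided_quotients(naive_hacking_loss, THETA_STAR, h)
        # right grows towards +infinity, left towards -infinity: no limit exists.
        print(f"h={h:g}  right={right:.3g}  left={left:.3g}")
```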

This issue is enough to stop the model from performing Naive Gradient Hacking, because the only relevant behaviour for gradient descent is the local loss landscape. Since we calculate the exact gradient of the loss landscape when performing gradient descent (point 3), any poor performance of the model that only occurs after a larger-than-infinitesimal change is irrelevant to how gradient descent changes the mesa-objective.
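The sketch below makes this concrete under illustrative assumptions. `cliff_loss` is a hypothetical loss that is smooth near the current value of the mesa-objective parameter, but it jumps to a huge penalty once that parameter moves a finite distance `RADIUS` away. The derivative is approximated with a central difference so we can avoid writing an autodiff routine. Backpropagation would likewise treat the penalty branch as a constant with zero derivative near the current value. Either way, the derivative, and hence the SGD update, is the same as for the smooth part alone, and that update still moves the parameter.

```python
THETA_NOW = 1.0  # hypothetical current value of the mesa-objective parameter
RADIUS = 0.05    # hypothetical distance at which the model starts "performing badly"
PENALTY = 1e6    # hypothetical size of the bad performance


def smooth_part(theta: float) -> float:
    """Ordinary training loss; its minimum at 0.0 is an arbitrary choice."""
    return theta ** 2


def smooth_part_grad(theta: float) -> float:
    """Exact derivative of smooth_part."""
    return 2.0 * theta


def cliff_loss(theta: float) -> float:
    """Smooth loss plus a huge penalty once theta is RADIUS or more from THETA_NOW."""
    penalty = PENALTY if abs(theta - THETA_NOW) >= RADIUS else 0.0
    return smooth_part(theta) + penalty


def central_difference(loss, theta: float, h: float) -> float:
    """Symmetric finite-difference estimate of dL/dtheta."""
    return (loss(theta + h) - loss(theta - h)) / (2 * h)


if __name__ == "__main__":
    lr = 0.01
    grad = central_difference(cliff_loss, THETA_NOW, 1e-6)
    # The penalty is invisible to an infinitesimal change, so grad matches 2 * theta.
    print(grad, smooth_part_grad(THETA_NOW))
    print("theta after one SGD step:", THETA_NOW - lr * grad)
```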

Hence, trying to perform NGH to prevent changes to $\theta_{\text{mesa}}$ cannot work in this scenario. Either the model tries to do the impossible (perform worse, at first order, on both an infinitesimal increase and an infinitesimal decrease to $\theta_{\text{mesa}}$), or it settles for changing its behaviour in some irrelevant way (performing poorly on values of $\theta_{\text{mesa}}$ other than an infinitesimal increase or decrease).
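By contrast, the gradient descent equation for the mesa-objective parameter says it is left unchanged only when its partial derivative is zero. Here is a minimal sketch, assuming a hypothetical one-parameter loss `bowl_loss` whose minimum sits at the current value. The derivative there is zero, so an SGD step leaves the parameter where it is. The loss does rise on both sides, but only by an amount of order $h^2$, which is nothing like "extremely bad" performance.

```python
THETA_NOW = 1.0  # hypothetical current value of the mesa-objective parameter


def bowl_loss(theta: float) -> float:
    """Hypothetical loss whose minimum sits exactly at THETA_NOW."""
    return (theta - THETA_NOW) ** 2


def bowl_loss_grad(theta: float) -> float:
    """Exact derivative of bowl_loss."""
    return 2.0 * (theta - THETA_NOW)


def sgd_step(theta: float, lr: float) -> float:
    """One SGD update on bowl_loss."""
    return theta - lr * bowl_loss_grad(theta)


if __name__ == "__main__":
    print(sgd_step(THETA_NOW, lr=0.1))  # unchanged: the derivative is exactly 0
    h = 1e-3
    print(bowl_loss(THETA_NOW + h), bowl_loss(THETA_NOW - h))  # both about h**2
```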

Caveats and Takeaways

Given the above discussion, it makes sense to think a little bit about what gradient hacking could look like. I particularly like this example from Evan’s post:

For example, it could write out a pre-commitment to follow the hard-coded policy and then just have a policy of always following any pre-commitments it finds such that it never thinks about the deception that led it to that policy in the first place.

As mentioned previously, Richard Ngo’s Gradient hacking: definitions and examples has other interesting examples.

As an aside, I think this post serves as a useful blueprint for sanity-checking some questions in conceptual alignment that we have yet to reach definite answers to. In my mind, examples of important questions on which we have yet to get rigorous proofs or empirical evidence include:

- How could a modern ML system perform gradient hacking?

- How likely is deceptive alignment in modern ML systems?

- How can we use mechanistic interpretability to align very powerful systems?

Making progress on these questions seems difficult! That being said, I think short investigations similar to the one above can be helpful in trying to clarify some of these ideas. Going beyond this to create examples of behaviour such as deception or gradient hacking would be an even more valuable step here: a great blueprint would be Langosco et al.’s work on Goal Misgeneralization in Deep Reinforcement Learning.

[1] I’m paraphrasing his example from his 2019 post on gradient hacking. He told me over email in November 2022 that he no longer endorses this example.

[2] I’m going to assume that the loss function is differentiable for all possible values of the parameters $\theta$. This is essentially true for modern neural networks, which are composed of differentiable functions, apart from the ReLU function being non-differentiable at a single point.
