Understanding and overcoming AGI apathy

post by Dhruv Sumathi (dhruv-sumathi) · 2025-04-17T01:04:53.853Z · LW · GW · 1 comment

This is a link post for https://dhruvsumathi.substack.com/p/waves-not-mountains-overcoming-apathy?r=b10d1

Contents

1. Tsunami
   - Waves
   - Mountains
2. Leaps Look Like Steps
   - Atomic Changes
   - The Sun Will Rise
   - What, Concretely, Could Change?
   - Work
   - Human Dominance
3. Doing Something: Optimism and Action

Crossposting from my substack.

Note for LW: This post is about the apathy and nonchalance I've been seeing in many peers regarding AGI. There seems to be massive cognitive dissonance (and it isn't straightforward to overcome) – how can we on the one hand talk about things like AIs becoming smarter than humans across all domains, and on the other just carry on with our everyday lives? I've been searching for ways to explain this kind of apathy, and for ways to convey how serious this is to people that don't think about it much (e.g. my parents). This is my first attempt at doing that, and I'd appreciate any and all thoughts.

–––

Note: I use a combination of real and fictional names for the characters in section 1.

The revolution will not be televised

The revolution will not be brought to you

By Xerox in four parts without commercial interruptions

The revolution will not show you pictures of Nixon blowing a bugle

And leading a charge by John Mitchell, General Abrams, and Spiro Agnew

To eat hog maws confiscated from a Harlem sanctuary

The revolution will not be televised

Gil Scott-Heron

1. Tsunami

When the Earth starts to... settle, God throws a stone at it. And believe me, He's winding up.

Ultron

Waves

It’s Tuesday morning. I’m enjoying my quiet drive to work. The sun is beaming warmth onto my arms through the car window. To my left, the bay is a deep turquoise, a color that I’ve come to associate with San Francisco’s best days.

My phone screams at me. I jolt and pick it up to see what’s happened. “Tsunami Warning.” My stomach tightens. I’ve never seen one of these before. Earthquake off the coast of Eureka, it says. Chance of tsunami. Get to high ground.

Hmm. Whatever. I put my phone down and continue driving.

Something feels off and a sense of unease wells up inside my chest. I’ve seen The Impossible, so I’m a little worried. I pick up the phone and call my brother, Chirag. He lives in San Francisco.

“Hey, did you get the tsunami warning?”

“Yup, that was weird.”

“Yeah, strange. What should we do?”

“I’m not sure, I think it’s fine though. We’ll see.”

“Okay, just be careful.”

I put the phone down and continue my drive, feeling slightly eased by Chirag’s reaction (or lack thereof). I pull into the office parking lot and park alongside my coworker’s car. She’s just getting out. I open my door, climb out onto the little side-step, and call out.

“Hey Dhruv,” she says calmly.

“Did you see the tsunami warning?” I ask.

“Yeah, haha. Strange. Well, you’ve got your surfboards, so worst case you’ll be alright.”

The unease creeps back into my chest. There is an unsaid, just barely noticeable fear in her tone.

“Haha. I guess you’re right,” I say.

We walk into the building and I open the office door. The room is tense, as though the air were made of guitar strings tightened to the point of splitting. Another coworker walks up to me immediately and says “Dhruv, I’m scared. What should we do?”

And just like that, something in me breaks. Without a second thought, I blurt out “Yup, we’re going.”

I continue walking towards my desk. Everyone is hard at work, deep in focus. Or so it seems. I hear the familiar, constant opening and closing of the door to the wet lab. I ask a coworker who sits across from me if he’s heard about the warning and before I can finish my sentence he says “Yeah, I’m trying to decide if we should leave right now.” Again, I say “Yup we should.” He looks at me, as though waiting for further confirmation, and asks “Yeah?” “Something something, expected value” I say. Following this extremely convincing line of logic, he begins mobilizing the group.

I follow suit. I walk into the wet lab, where my friend and coworker James is standing.

“We’re going,” I say.

“Why?”

“Tsunami warning.”

“Yeah, it’s fine, I saw. We’re by the bay, not the ocean. And I’ve got a lot of work to do.”

5 minutes of excruciating discussion, and I finally bribe James to leave with a bet – if nothing happens, I'll pay him. Why do I do these things? The rest of the group has entered a panicked huddle back in the office. We join. They are trying to decide where to go.

I open Google Maps and find that all of the low-elevation land within a 20 minute drive is inside of the red highlighted warning zone. We decide on a nearby mountain, organize cars, and leave.

I see Tim, our building’s security guard, at the front desk. I walk up to him and say “Tim, did you hear about the tsunami warning? We’re evacuating.”

Tim says “Yeah, I think it’ll be fine.”

I spend 5 minutes trying to convince him to leave with us. He hesitantly refuses, saying he’ll get in trouble if he leaves. I try to explain that the circumstances are not normal. No luck. Filled with guilt, I decide to leave.

My heart is racing and my legs are trembling as I walk to the car. I don’t know how much time we have. At this point it has probably been 30 minutes since we received the original warning. We start driving. On the way to the mountain, I know we’ll pass the highway. I’m bracing for deadlock and mass panic. People leaving their cars and running for high ground.

The 101 enters eyesight. My expectations do not align with what I’m seeing. Nothing. It’s still just a normal morning. People are on their way to work, driving right next to the bay. Not a hint of traffic. Construction workers are busy repairing the road to our right. Didn’t they get the warning?

We arrive at the top of the mountain and see a group of at least 50 members of the Coast Guard. That’s a great sign.

I leave the parking lot to find some cell signal and call my brother and my friend Fred, both of whom are working in the city. It is strangely easy to convince them to leave their current posts and find high ground. I call Chirag’s girlfriend, who is a teacher at a San Francisco public school. She tells me her school is figuring out whether to evacuate. Flabbergasted, I ask her what she means by “figuring out.” She says she isn't sure they’ll leave, that nobody thinks it's all that serious. The school she works at sits directly on the northern coast of the San Francisco peninsula.

If real, the tsunami is predicted to arrive in the next 30 minutes. If by then there is no sign of it, we’ll be okay. Or so they say.

The 30 minutes are strange. Strange not because they’re terrifying, or nerve-racking, but because they are ordinary. I don’t know what I expected, but it isn’t this. There’s no sound of sirens, or honking, no visible panic or distress, no emergency broadcast or “may God be with you” from the president. We’re just on top of a mountain on a Tuesday morning with seemingly the entire Coast Guard.

There is no wave. Tsunami.gov confirms that it’s safe to carry on with our days. I get back into my car and drive to the office again to resume work.

Mountains

My favorite scene in Christopher Nolan’s Interstellar is when Cooper, Brand, and Doyle find themselves on Miller’s planet, with its endless knee-deep oceans, large mountains, and intense gravitational pull, and where time dilation means that an hour on the planet corresponds to 7 years back on their spaceship (and on Earth). As Brand and Doyle leave to search for a lost communications beacon, Cooper notices that the dark shadow over the horizon they’ve been staring at is in fact not mountains. This prompts the famous “Those aren’t mountains. They’re waves!” quote.

–––

I realized something important the day the tsunami did not come. I'd witnessed a simulation of what would happen if it had. The answer, mostly, is nothing. Some people were alarmed and chose to find high ground. Most, however, carried on with their everyday lives. Chirag’s girlfriend’s school did not evacuate. Tim stayed. Not a single car from the neighboring company had left the parking lot. Morning commutes continued.

People saw mountains, not waves. So when the waves do come, there won't be traffic on the 101. Beachfront coffee shops will remain abuzz. Children will continue playing tetherball and learning algebra.

But Fred told me something interesting; he was working in a coffee shop when all of the phones in the room simultaneously blared the alarm. He looked up. He saw a room full of uneasy eyes, each looking for some kind of permission to react. But nobody moved a muscle. And so they all silenced their phones, looked back down, and resumed work.

In that brief moment of uncertainty, anything could have happened. If one person had just packed up and left, everyone might have followed suit. But nobody reacted. Perhaps what kept the room still was the fear of being perceived as scared. Or the belief that surely, bad things could not happen to them. Or maybe they’d heard enough false alarms in their lives. I’m not sure.

Zoom out and think about how strange this is. You receive a loud alarm that says you and your loved ones may be underwater within an hour, and you just silence it and carry on?

This kind of behavior is a known phenomenon. During the 2011 Japan tsunami, for example, a government study found that only 58% of people in some coastal areas heeded tsunami warnings. Of those who did, only 5% were caught in the tsunami. Of those who ignored them, that number was 49%.
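To make the gap concrete, here is a minimal sketch of the arithmetic in Python. It takes the three percentages quoted above at face value and assumes that they all describe the same population, which the summary above does not confirm. The function name and the derived quantities are my own illustration, not figures from the study.

```python
def warning_risk_summary(p_heeded, p_caught_if_heeded, p_caught_if_ignored):
    """Derive a few comparison figures from the three quoted percentages.

    Assumption (not from the study): all three rates apply to one population.
    """
    p_ignored = 1.0 - p_heeded

    # How many times more likely you were to be caught if you ignored the warning.
    relative_risk = p_caught_if_ignored / p_caught_if_heeded

    # Overall share of people caught, weighting each group by its size.
    p_caught_overall = (p_heeded * p_caught_if_heeded
                        + p_ignored * p_caught_if_ignored)

    # Among everyone caught, the share who had ignored the warning.
    share_of_caught_who_ignored = (p_ignored * p_caught_if_ignored) / p_caught_overall

    return {
        "relative_risk": relative_risk,
        "p_caught_overall": p_caught_overall,
        "share_of_caught_who_ignored": share_of_caught_who_ignored,
    }


# The three figures quoted in the text: 58% heeded, 5% vs. 49% caught.
summary = warning_risk_summary(0.58, 0.05, 0.49)
for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```

Under those assumptions, ignoring the warning made being caught roughly ten times more likely, and the people who ignored it, a minority of the population, account for the large majority of those caught.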

2. Leaps Look Like Steps

Atomic Changes

“History doesn’t repeat itself, but it often rhymes.”

Attributed to Mark Twain

What does immense societal change feel like? What is it like to live through a revolution? Not in some abstract philosophical sense, but for you. What do you hear? What are you reading in the news? What are you talking about at dinner with your family?

While it is a very extreme example, accounts from Nazi Germany offer some insight into this. In Victor Klemperer’s “I Will Bear Witness,” a diary from his years as a Jew-turned-Christian in Germany at the time, he describes his experience of the time leading up to the Holocaust. What strikes me is that he depicts this time as mundane and slow, not jarring or revolutionary. Or perhaps more correctly, it all felt slow until it didn’t. In his words: “The never ending alarms, the never ending phrases, the never ending hanging out of flags, now in triumph, now in mourning—it all produces apathy…” and “It is with a certain lethargy that I let everything take its course.”

The reason you’re not jump-scared every time you look in the mirror is that you’ve seen yourself change in tiny, barely noticeable ways, every single day. Maybe one morning you notice a new gray hair. Or the beginnings of a wrinkle on your forehead. Or maybe your pants feel a little tighter. But you probably don’t suddenly think, “Wow, I no longer recognize myself!”

So too with the world. Great transformations quietly accumulate in the banality of everyday routine.

All of this makes me think about the world’s response to current events. Specifically, I am thinking about the advancement of powerful AI systems towards AGI (artificial general intelligence).

The term “artificial intelligence” was coined in the mid-1950s, for the 1956 Dartmouth workshop. The theory is quite old, and academics have been speculating about the philosophical implications for even longer. There have even been numerous “AI winters” – declines in faith in, and funding for, the field caused by seemingly insurmountable technical barriers.

But today is different. Many experts think AGI will arrive within the next few years. I will repeat this. Many experts think AGI will arrive within the next few years. Governments are waking up to this reality. The United States is, and has been for over two years, restricting China’s access to advanced chips in the hopes that this will slow its progress in AI. The Trump Administration has released a “Request for Information” for its AI Action Plan. They do know about this, and they are acting on this information.

If you just listen, you can hear the warning shots being fired.

But when AGI does arrive, it won’t be announced loudly or come with a fireworks show. It will enter the world quietly, spread across the same humming GPUs housed in the same remote data centers as the models before it and the models before those.

I’ve asked many people what they think this all means, including AI engineers, AI safety researchers, policymakers, and friends who don’t work in the field at all. Reactions have ranged from “we’re all doomed to slavery under the machine gods” to “everything is going to be fine, LLMs are just glorified text editors.” If you’ve had a similar experience, you know how hard it is to form any kind of coherent opinion or plan of action.

What’s interesting is the distribution of reactions. I know a couple of people who think this is the end, and they don’t work in AI. I’ve met a few people in the middle and they tend to be AI safety researchers or policy advocates. Most people, though, seem to think everything is going to be fine by default – including the AI researchers working on making models more capable.

But there’s an even more interesting subtlety here, and that is the surprisingly large subset of technical AI researchers – i.e. the people working on improving AI models – who still don’t have confidence in the power of this technology. Yes, these models are incredibly impressive, they’ll say, but once you dive into the technical weeds and understand how they actually work, they’re somehow not that impressive:

“They make lots of mistakes.”

“They’re just next-token generators.”

“They can’t come up with fundamentally new ideas.”

Or the one that makes me physically cringe:

“They’re just glorified text editors.”

Of the people who do buy the magnitude of this technology, many don’t follow the logical steps towards the consequences. For example, even some people who believe in superintelligent machines don’t entertain the idea that work as we know it might be eliminated. They’ll compare the AI revolution to the Industrial Revolution. To them, the end of the road for AI is to replace all menial labor, but nothing more. Of course humans will still be required, they’ll tell me – there are many tasks that require critical thinking!

I’d prefer this world. I’d prefer if AI remained in the realm of tools that empower humans, rather than agents capable of complete independence (see @L Rudolf L and @lukedrago's writing for more on this). I prefer this because I believe human agency matters, and I think a central part of agency is the belief that you can do something that matters. If powerful AIs can do everything that matters, this falls under threat.

This is not the Industrial Revolution. We are building minds. Minds that learn things about the world in ways we largely don’t understand, and that take actions based on beliefs we can only guess at. There is no reason to believe that even critical thinking cannot be mastered by a machine. All we know is: the larger we make them, the smarter and more generally capable they get. Yes, LLMs are not yet able to solve the world’s hardest math problems, and yes, they can’t yet beat Tolstoy at prose (though this is perhaps debatable). But the trends matter so much more than where we currently stand. 6 years ago, many were mind-blown by GPT-2 writing the simplest of poems. 3 years ago, we lived in a pre-ChatGPT world. Today, we’re actively trying to make our LLM benchmarks harder because we did not predict that they’d perform so well so soon. And it’s not just the “glorified text editors” that are barreling forward. Waymo cars are navigating the cities of San Francisco and Phoenix with 81% fewer injury-causing crashes per mile of driving than humans. Progress is fast, and it will only get faster.

It doesn’t stop with technical breakthroughs. There are massive psychological, societal, and economic changes coming too. As AIs advance, they will start to look and sound more and more human. The lines that currently divide humans and machines into distinct moral and ethical categories will blur. This is already happening in subtle ways – e.g. the surge in men using “AI girlfriend” chatbots. There is even serious research on whether AIs might be sentient, i.e. whether they have or will have an inner experience similar to ours.

This would have sounded insane to us 10 years ago.

The Sun Will Rise

“A status quo bias or default bias is a cognitive bias which results from a preference for the maintenance of one's existing state of affairs.”

We’d desperately like for the world tomorrow to look like the world today, perhaps with some moderate improvements. This is called the status quo bias, and it says that humans like predictability. We like “business as usual.” Predictability, however, is not a given. It is not written indelibly in the fundamental laws of physics. Things can change radically, overnight, and humans generally do a terrible job internalizing this fact.

To illustrate this, consider the Inca Empire. At the time of Spanish contact, the Inca ruled over 12 million people, with an empire spanning the Andes from modern Colombia to Chile and connected by thousands of miles of roads. They managed complex state logistics without money or written laws, ran a relay system that could carry messages up to 190 miles per day (faster than some modern postal services), and built suspension bridges from grass. They had a rich culture and developed a religion.

None of that saved them.

When Atahualpa met Pizarro in 1532, the Inca emperor ruled over one of the most sophisticated and organized societies on Earth. By the next day, he was a prisoner. Within a year, the empire's capital had fallen. A civilization built over a century was decapitated in 24 hours by fewer than 200 men.

Sophistication, power, momentum – all of these things mean little when the ground suddenly shifts beneath you. “The sun rose today” is not a very solid reason to bank our future on the sun rising tomorrow.

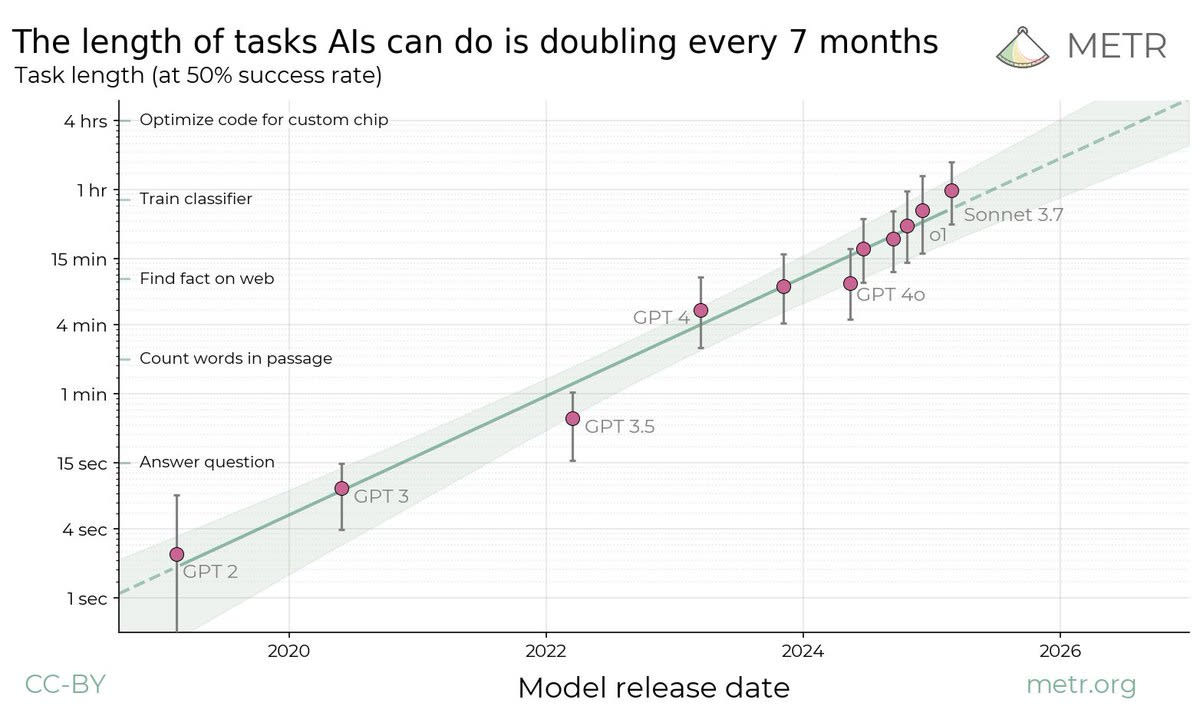

Consider this graph. If you extrapolate the curve, AIs will be able to carry out year-long tasks without human intervention by 2032, with a 50% success rate. Imagine something resembling OpenAI’s Deep Research but given a year of independent thought and action – things like trading stocks, writing social media or blog posts, buying and selling physical goods, sending emails and making phone calls, conducting scientific research, manipulating the physical world with robotic limbs, and performing any other actions we manage to endow it with in the interim.

You may be skeptical of this sort of extrapolation, but the trend has held for 5 years so far. Also, I don’t think these systems will need to carry out year-long tasks before they are immensely transformative – even humans don’t do this without breaking focus many, many times.
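For readers who want to play with this kind of extrapolation themselves, here is a minimal sketch. It assumes the length of tasks AIs can complete doubles at a fixed interval, which is one simple way to model a curve like this. The starting horizon, the doubling time, and the definition of a "year-long task" below are hypothetical placeholders, not values read off the graph, so the output should not be taken as a forecast.

```python
import math


def years_until_horizon(current_hours, target_hours, doubling_months):
    """Years until the task horizon reaches target_hours.

    Assumes the horizon doubles every `doubling_months` months, forever.
    Returns 0.0 if the target has already been reached.
    """
    if current_hours <= 0 or target_hours <= 0 or doubling_months <= 0:
        raise ValueError("all inputs must be positive")
    doublings_needed = math.log2(target_hours / current_hours)
    return max(0.0, doublings_needed) * doubling_months / 12.0


# Hypothetical placeholder inputs (assumptions, not data from the graph).
CURRENT_HORIZON_HOURS = 1.0   # task length handled today at 50% success
WORK_YEAR_HOURS = 2000.0      # one way to define a "year-long task"
DOUBLING_MONTHS = 7.0         # assumed doubling time of the horizon

years = years_until_horizon(CURRENT_HORIZON_HOURS, WORK_YEAR_HOURS, DOUBLING_MONTHS)
print(f"Under these assumptions: about {years:.1f} years to a year-long task horizon")
```

The useful thing about writing it down is seeing how sensitive the answer is. Because the growth is exponential, changing the starting horizon by a factor of two only moves the date by one doubling period, while changing the doubling time rescales the whole timeline.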

What, Concretely, Could Change?

Work

If you’re a knowledge worker in software engineering, consulting, finance, accounting, etc. or are on track for one of these jobs, it may be time to reconsider your plan. If your work requires physical labor, you probably have more time. But the same future is coming for all of us and the status quo will not be around for much longer. We must recognize a few uncomfortable truths:

- AI has the potential to and likely will outperform humans across nearly all intellectual domains (and some time after, physical domains).

- Human labor will lose value rapidly as AI becomes cheaper and better.

- Traditional safeguards – government policies, societal norms, social institutions – may fail to anticipate or adequately respond to the scale and pace of this change.

There may be a couple of exceptions to the first point, like professions that seem to require a human touch. But these statements should make you pause and think – about your life and career first and foremost, and about the future of humanity. Comparisons to the Industrial Revolution don’t make much sense here, because we are not building static machines that do only the job we’ve asked of them – we’re building general purpose minds that can decide for themselves what jobs to perform. Right now, our labor is our ticket to participate in society – without that, we’ll need a radically new set of systems that uplift humans even when they aren’t economically valuable.

Human Dominance

This section is more speculative.

Maybe you do buy that we are building machines more intelligent than humans, but you still think that our species remains unthreatened. I would strongly question this belief. Homo sapiens became dominant over Earth’s resources because of our increased intelligence – both individual and collective – compared to competing species. And while the correlation is not perfect, bigger brains have generally yielded more intelligence.

What concerns me is that there is no limit to the scale of silicon brains – they’re not locked within a skull of fixed size. And if we build machines that can outsmart us in most useful domains, a similar kind of dominance may follow.

I think this is bad. I like humans. I like that we feel things and know things and create things and experience the world around us. Maybe machines do too, but I don’t know that for sure. And I’d much rather have an Earth and a universe filled with things like love and emotions and fulfillment and humor, than with lifeless, robotic efficiency.

I don’t know if we can escape this possibility. But if we do, it will likely come from technologies and institutions that augment and empower humans, keeping us on par with machines in some meaningful way.

3. Doing Something: Optimism and Action

If you believe in the risks I’ve discussed, it’s important not to simply resign yourself to the consequences and hope that someone else is on the ball. Our actions today matter profoundly. Whether AGI amplifies the power of a few or creates abundance for all remains undetermined. Avoiding worst-case scenarios depends on deliberate effort, not passive optimism.

The very possibility that we could steer this technology positively should change your mental math. If we were doomed to begin with, or if we were guaranteed to succeed, nothing we did now would matter because the outcome would not depend on us. It is the very fact that we have a chance, not a guarantee, of success, that should call us to action!

What actions can you take? If you’re thinking about how to change your own career plans, I think this post does a great job of answering that question. If you want to work on making AGI go well, here are a few areas where I think we’re lacking:

- Technologies that preferentially augment individual humans and favor decentralization over concentration of power/resources (e.g. the personal computer)

- Policies that allow humans to thrive even when their labor is not valuable

- Policies that prevent AI misuse

- A coherent set of values we’d like AI systems to follow

- Systems to defend against cyber or bio attacks from rogue actors

- Ways to educate young people for the radically new world and economy they will inherit

Remember, change will feel subtle – business as usual – until the moment it's not.

1 comment

comment by gwern · 2025-04-18T20:54:02.395Z · LW(p) · GW(p)

In that brief moment of uncertainty, anything could have happened. If one person had just packed up and left, everyone might have followed suit. But nobody reacted. Perhaps what kept the room still was the fear of being perceived as scared. Or the belief that surely, bad things could not happen to them. Or maybe they’d heard enough false alarms in their lives. I’m not sure.

One of the most depressing things about the Replication Crisis, especially in social psychology, is that many results from the 1950s and 1960s failed to replicate at all... except the Asch conformity experiments. Those seem to replicate just fine.