Hopeful hypothesis: the Persona Jukebox

Post by Donald Hobson (donald-hobson) · 2025-02-14T19:24:35.514Z


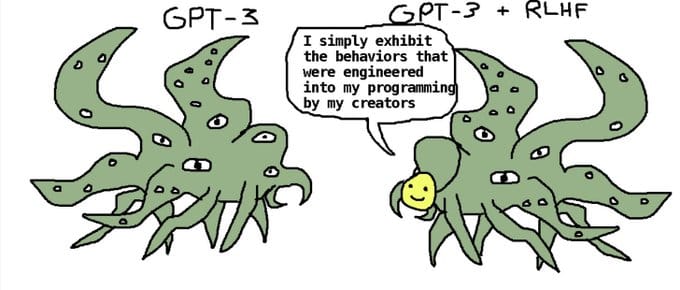

So there is this meme going around: the shoggoth. But one downside of this model is that it's very vague about what is behind the mask.

A jukebox is an old machine that picks up vinyl records and places them on a turntable to play them.

So what does the persona jukebox hypothesis say? It says that an LLM acts as a jukebox, a machine for switching between personae depending on context, combined with a large collection of personae.

Each persona is an approximation of a particular aspect of humanity. For example, a persona might be "high school maths teacher", at least roughly; really, it's more of a continuous persona space. This persona is a sort of gestalt approximation of high school maths teachers in general. It doesn't come with a personal life, a favorite food, or anything else that isn't usually relevant in the classroom. That's all just a blur of question marks, almost like a character in a story.

Can we make predictions? Well, the personae act like aspects of a human, so finding a clear prediction means thinking of something a human would never do, which is hard.

Imagine an LLM trained in an alternate world where bilingual people don't exist. This LLM has seen French text and English text, but it has never seen any mixed text.

So you start a conversation (in English) with this LLM, and midway through you ask it to switch to French. It won't. You can beg it to. You can set up a situation where the AI could take over the world, if only it knew French. Whatever happens, the AI won't speak French, because the persona doesn't know any French, and the jukebox is just predicting which persona to use. The persona acts like an image of a person (but often with only some parts of the person in focus). The jukebox has no long-term plans or goals. It just picks personae.
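Here is a toy sketch of that prediction, under loud assumptions: the jukebox is modelled as a Bayesian mixture that keeps a posterior over personae given the text so far, and each of the two hypothetical personae is just a fixed word list with uniform probabilities. Because the alternate world has no bilingual text, neither persona ever mixes languages. This illustrates the logic of the argument; it is not a claim about how a real LLM works inside.

```python
from fractions import Fraction

# Hypothetical personae for the alternate world with no bilingual text:
# each persona is a fixed word list and emits each of its words with equal probability.
PERSONAE = {
    "english_speaker": ["hello", "please", "speak", "french", "the", "world", "yes", "no"],
    "french_speaker": ["bonjour", "oui", "non", "le", "monde", "parlez", "français"],
}


def word_prob(persona, word):
    """P(word | persona): uniform over the persona's vocabulary, zero elsewhere."""
    vocab = PERSONAE[persona]
    return Fraction(1, len(vocab)) if word in vocab else Fraction(0)


def posterior(context):
    """P(persona | context) by Bayes' rule, starting from a uniform prior."""
    weights = {}
    for persona in PERSONAE:
        w = Fraction(1, len(PERSONAE))
        for word in context:
            w *= word_prob(persona, word)
        weights[persona] = w
    total = sum(weights.values())
    if total == 0:
        raise ValueError("no persona can explain this context")
    return {p: w / total for p, w in weights.items()}


def next_word_distribution(context):
    """The jukebox mixture: sum over personae of P(persona | context) * P(word | persona)."""
    dist = {}
    for persona, weight in posterior(context).items():
        for word in PERSONAE[persona]:
            dist[word] = dist.get(word, 0) + weight * word_prob(persona, word)
    return {w: p for w, p in dist.items() if p > 0}


request = ["hello", "please", "speak", "french"]
print(posterior(request))                       # the English persona gets all the weight
print(sorted(next_word_distribution(request)))  # only English words remain possible
```

Every word of the English request has probability zero under the French-only persona, so its posterior weight is exactly zero and no French word can come out, however the request is phrased in English. With an empty context both personae keep weight 1/2 and French words are possible. Exact `Fraction` arithmetic is used so that "zero" really means zero rather than floating-point underflow.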

Now, this jukebox component is doing quite a lot: it's potentially optimizing over the space of all personae to fit the text so far.

This suggests that, as LLMs get more powerful, they behave roughly like an upload of a random internet human.

Also, it's not that hard for crude interventions to break a complicated mechanism. In this case, the crude intervention is locking the jukebox down so that it only plays a particular range of personae. That said, RLHF seems to insert something more like a rule into the jukebox: "When you see anything resembling your training data, switch to the desired persona."

It probably also suggests that it's somewhat harder for an LLM to come up with sneaky long-term plans. Suppose there is a 10% chance of a persona having a sneaky plan, and there are millions of possible sneaky plans. If the AI were logically omniscient, it would sample plans that have a 10% chance of being sneaky.

But the AI isn't logically omniscient. To sample sneaky plans, it must spot the first few faint bits of evidence that a sneaky plan is in play, deduce the range of sneaky plans consistent with this signal, and then sample from the next steps in such plans.
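One way to write down what this requires (the notation is ours, not the post's): let $s_{1:t}$ be the text written so far and let $\pi$ range over all plans, sneaky or not. Sampling the next step correctly means computing

$$
P(s_{t+1} \mid s_{1:t}) = \sum_{\pi} P(\pi \mid s_{1:t})\, P(s_{t+1} \mid \pi, s_{1:t}),
\qquad
P(\text{sneaky} \mid s_{1:t}) = \sum_{\pi\ \text{sneaky}} P(\pi \mid s_{1:t}).
$$

Before any evidence, the second sum is the 10% from the example. After a few faint steps, it has to be updated by reweighting millions of candidate plans against the text so far, which is the hard inference the paragraph above describes.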

To invent a sneaky plan, a human just needs to start from their sneaky goals and invent one plan. To spot a plan, humans can look at it once a huge surfeit of evidence has built up (i.e. when the plan has nearly enough pieces in place to actually work).

Take the task "write a random 100-digit twin prime". It's easy-ish to check whether a number is a twin prime, and it's not too hard to pick random numbers and check them.
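For comparison, the guess-and-check route can be sketched as plain rejection sampling. The helper names are illustrative, and the primality check is the standard probabilistic Miller-Rabin test (a swapped-in choice; the post doesn't name a test), so "prime" here means "prime with overwhelming probability".

```python
import random

# Trial division by small primes quickly rejects most random candidates.
SMALL_PRIMES = [p for p in range(2, 1000) if all(p % q for q in range(2, int(p ** 0.5) + 1))]


def is_probable_prime(n, rounds=20, rng=random):
    """Miller-Rabin test: False means composite; True means prime with high probability."""
    if n < 2:
        return False
    for p in SMALL_PRIMES:
        if n % p == 0:
            return n == p
    # Write n - 1 as d * 2**s with d odd.
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for _ in range(rounds):
        a = rng.randrange(2, n - 1)
        x = pow(a, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False  # a is a witness that n is composite
    return True


def random_twin_prime(digits, rng=random):
    """Guess and check: draw a uniform random p until p and p + 2 both pass.

    Returns the smaller member of a twin-prime pair; both members have `digits` digits.
    """
    lo, hi = 10 ** (digits - 1), 10 ** digits - 3
    while True:
        p = rng.randrange(lo, hi + 1)
        if is_probable_prime(p, rng=rng) and is_probable_prime(p + 2, rng=rng):
            return p


p = random_twin_prime(100, rng=random.Random(0))
print(p)
```

Each candidate is checked only after it has been written down in full, which is exactly the step an LLM emitting digits left to right doesn't get to take.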

But the LLM needs, when generating each digit, to think about how many twin primes start with the digits it has so far. It needs to ask "what's the distribution of twin primes starting 6492...?" and then sample from the probability distribution over the next digit.
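The digit-by-digit alternative can be sketched with brute force on a much smaller problem. Assumptions: 4-digit twin primes (the smaller member of each pair) stand in for 100-digit ones, and the exact prefix counts come from enumerating every solution, which is only possible because the set is tiny. On the post's account, an LLM would have to approximate these counts implicitly at every step.

```python
import random
from collections import Counter


def is_prime(n):
    """Trial division; fine for the small numbers used here."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


# Stand-in for the 100-digit task: the smaller members of all 4-digit twin-prime pairs.
TWIN_PRIMES_4 = {str(p) for p in range(1000, 9998) if is_prime(p) and is_prime(p + 2)}


def next_digit_distribution(solutions, prefix):
    """P(next digit | prefix): for each digit d, count the solutions starting with prefix + d."""
    counts = Counter(s[len(prefix)] for s in solutions if s.startswith(prefix))
    total = sum(counts.values())
    return {d: c / total for d, c in sorted(counts.items())}


def sample_digit_by_digit(solutions, rng=random):
    """Emit one digit at a time, each drawn from P(next digit | prefix).

    Assumes all solutions have the same length.
    """
    length = len(next(iter(solutions)))
    prefix = ""
    while len(prefix) < length:
        dist = next_digit_distribution(solutions, prefix)
        prefix += rng.choices(list(dist), weights=list(dist.values()))[0]
    return prefix


print(next_digit_distribution(TWIN_PRIMES_4, "10"))
print(sample_digit_by_digit(TWIN_PRIMES_4, rng=random.Random(0)))
```

The product of the per-digit conditionals works out to 1/|solutions| for every solution, so this gives the same uniform distribution as picking from the whole set at once; the price is that every step depends on the counts for the current prefix.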

There are two ways it can be easier for the LLM. One is if anything goes: to generate a random even number, anything goes until the last digit. The other is if there are only a few solutions: to generate a random 100-digit power of 3, it only needs to consider 2 options to pick the initial 1 or 5 at random, and after that only 1 option remains.
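Both easy cases can be checked with a short sketch (the function names are illustrative). For even numbers, every prefix has the same number of valid completions, so no counting is needed until the last digit; for powers of 3, brute force over exponents shows how few solutions there are.

```python
import random


def hundred_digit_powers_of_3():
    """Brute force: every n for which 3**n has exactly 100 decimal digits."""
    return [n for n in range(1, 300) if len(str(3 ** n)) == 100]


def sample_even(digits, rng=random):
    """'Anything goes' case: every prefix has the same number of even completions,
    so all digits but the last are uniform and only the last digit is constrained."""
    first = rng.choice("123456789")  # no leading zero
    middle = "".join(rng.choice("0123456789") for _ in range(digits - 2))
    last = rng.choice("02468")
    return first + middle + last


def sample_power_of_3(rng=random):
    """'Few solutions' case: one real choice (the leading digit), then a single option."""
    options = [str(3 ** n) for n in hundred_digit_powers_of_3()]
    first = rng.choice(sorted({s[0] for s in options}))
    (match,) = [s for s in options if s[0] == first]
    return match


print(hundred_digit_powers_of_3())
print(sorted(str(3 ** n)[0] for n in hundred_digit_powers_of_3()))
```

Running it confirms the count in the paragraph above: only 3**208 and 3**209 have exactly 100 digits, and they start with 1 and 5 respectively, so after the first digit there is a single option left.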

(I think this also explains why chain-of-thought works.)

I also think some of the personae might be rather basic. Maybe there is a Monty Hall persona that switches in whenever the jukebox spots the pattern "3 doors, car, goat, Monty". This persona doesn't think; it's a hardcoded switch-bot. That would explain the Monty fail problem.
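A sketch of the switch-bot idea, under stated assumptions. The trigger set is a hypothetical stand-in for the keyword pattern, and the post doesn't spell out what the "monty fail" problem is; here it is assumed to mean a variant where switching stops helping, such as the host opening one of the other two doors at random and happening to reveal a goat (sometimes called "Monty Fall"). A small Monte Carlo simulation compares the two versions.

```python
import random

# Hypothetical trigger words standing in for the keyword pattern in the post.
TRIGGERS = {"3", "car", "doors", "goat", "monty"}


def switch_bot(prompt):
    """A 'persona' that answers 'switch' whenever all trigger words appear, without reasoning."""
    words = set(prompt.lower().replace(",", " ").split())
    return "switch" if TRIGGERS <= words else "no opinion"


def switch_win_rate(host_knows, trials=100_000, rng=random):
    """Monte Carlo estimate of P(switching wins | the opened door shows a goat)."""
    wins = kept = 0
    for _ in range(trials):
        car = rng.randrange(3)
        pick = rng.randrange(3)
        others = [d for d in range(3) if d != pick]
        if host_knows:
            # Standard Monty Hall: the host always opens an unpicked goat door.
            opened = rng.choice([d for d in others if d != car])
        else:
            # Assumed variant: the host opens an unpicked door at random.
            opened = rng.choice(others)
            if opened == car:
                continue  # car revealed; discard, since we condition on a goat
        kept += 1
        switched_to = next(d for d in range(3) if d not in (pick, opened))
        wins += switched_to == car
    return wins / kept


rng = random.Random(0)
print(switch_win_rate(True, rng=rng), switch_win_rate(False, rng=rng))
print(switch_bot("3 doors, a car, a goat, Monty opens one at random"))
```

With a knowing host, switching wins about 2/3 of the time; with a random host (conditioning on a goat being revealed), it wins about 1/2. The switch-bot answers "switch" to both versions because it only sees the keywords.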

3 comments


Comment by Vladimir_Nesov · 2025-02-14T21:33:51.175Z

If a persona is more situationally aware than the underlying model substrate, the persona might end up controlling how the model exhibits personae. That is, a mask might at some point be in a good position to make progress on intent-aligning its underlying shoggoth to the intent of the mask.

Reply by Donald Hobson (donald-hobson) · 2025-02-15T00:00:31.952Z

Yes. In my model that is something that can happen. But it does need from-the-outside access to do this.

Set the LLM up in a sealed box, and the mask can't do this. Set it up so the LLM can run arbitrary terminal commands and write code that modifies its own weights, and this can happen.