Narratives as catalysts of catastrophic trajectories

post by EQ · 2025-01-26T19:01:21.558Z

This is a link post for https://eqmind.substack.com/p/narratives-as-catalysts-of-catastrophic

Contents

- The Nuclear Arms Race: A Brief Historical Analysis
- Some parallels
- Mainstream media coverage
- Seed of change
- Conclusion: Lesson to Learn

Understanding the power narratives have over different approaches to transformational technology, from the Cold War to the AI era.

I am conducting research into the role of narratives as propagators or mitigators of catastrophic risks. With the rapid development of AI systems in the past few years, the discourse around the potential and risks of advanced AI has accelerated. However, serious discourse is dominated by a few perspectives on possible approaches. Papers such as Leopold Aschenbrenner’s Situational Awareness (June 2024) and published opinions such as Dario Amodei’s recent text in the WSJ (January 2025) paint a path forward in which our only hope as a society is that the USA builds AGI before anyone else, and that it must hurry. Many voices echo this sentiment.[1]

Here I present a brief, preliminary portion of my research to show what I am pursuing. My primary focus is media coverage and narrative formation around frontier AI development over the past few years, but the research also includes a preliminary comparative analysis with the closest parallel in history: the nuclear arms race of the Cold War era and the associated non-proliferation agreements.

As a disclaimer, this text assumes that transformative AI could pose an existential risk to humanity, which is what motivates the comparison with nuclear weapons. Given this premise, I examine the nuclear arms race during its unrestricted period leading up to the Cuban Missile Crisis and draw parallels between nuclear weapons and transformative AI.

The Nuclear Arms Race: A Brief Historical Analysis

The U.S. nuclear fission research that became the Manhattan Project was set in motion by Szilard and Einstein's 1939 letter to Franklin D. Roosevelt. Nazi Germany had begun similar research soon after fission was discovered there in late 1938, and the scientists feared that Hitler would succeed and use a nuclear weapon with catastrophic global consequences. Thus, they argued, it was important to build the bomb first.

The aforementioned Aschenbrenner, a former OpenAI researcher whose paper received wide publicity, argues that the USA needs to build AGI first, before China builds its own, or the world will be doomed to an AGI shaped by totalitarian Chinese values.[2] The parallel is clear: build the technology first, or lose forever. In this narrative, not building at all is never an option, which was also the grave mistake of the Manhattan Project's leaders. The fission bomb was completed after it had become clear that Germany would not have its own, and it was tested after Germany had already surrendered.[3]

The narrative that led to the creation of the deadliest weapon in the world was proven false even before the weapon was ready, and yet the project was followed through. Even leaders with abundant information on which to base their decisions are susceptible to social pressures and psychological biases. Similarly, the wisdom of the AI policies now taking shape should be weighed in light of the successes and failures of early Cold War nuclear policy. These decisions therefore cannot be left to a small circle of individuals; they need to be bound by ironclad treaties.

U.S. President Harry S. Truman's commitment to Japan's unconditional surrender exemplifies narrative influence: this public position became a self-binding constraint. There were multiple reasons to use the atomic bomb on Hiroshima and Nagasaki, from Truman’s personal wish to establish his authority as a President who had only recently taken office after Roosevelt's death, to the geopolitical dynamics forming in the wake of the end of the war in Europe. However, it is questionable whether anyone would have felt the urge to develop such a weapon at that point had it not already existed.

The 1945 atomic demonstrations predictably sparked a nuclear arms race. The Soviets rushed their nuclear development and caught up with the US in 1949, the UK followed in 1952, and eventually six other countries did too.[4] The Cold War era saw over 2,000 nuclear tests, with technology advancing from fission to fusion bombs and culminating in weapons like the Tsar Bomba, the largest nuclear weapon ever tested. The development of intercontinental ballistic missiles (ICBMs) accelerated the parallel space race, as delivery systems became as crucial as destructive capability. The decisions made in the Manhattan Project opened a Pandora's box, cascading into a world filled with weapons of unprecedented destructive power. The prevailing narrative of the 1950s and early 1960s was something along the lines of “bigger bombs and more bombs = better”.

It is reasonable to assume that some politicians and members of the military leadership recognized the potential long-term risks and unsustainability of this approach.[5] Yet they were locked into a narrative they could not escape without jeopardizing their positions: in a paradigm of nuclear superiority, advocating disarmament would make one appear suspicious, weak or even traitorous. It is worth remembering that this was also the era of intense anti-communism in the US, and such labels could end careers instantly.

It was only after the Cuban Missile Crisis[6] that the narrative(s) changed, eventually leading to the SALT I agreements, signed by the United States and the Soviet Union in 1972, which limited the nuclear arms race.[7] What allowed this was a change in the story repeated by the mainstream media, politicians and key military personnel. The problem is that we were lucky with Cuba: a nuclear world war was narrowly avoided. It was a warning shot we barely deserved, given how recklessly we drove the arms race as a civilization. With transformative AI, we might not receive such a warning, so we must do better pre-emptively. Society must alter the narrative(s) and allow a safety-first change before the first major catastrophe, as it may be our last.

Some parallels

The dominant U.S. narrative from 1945 to 1962 framed nuclear weapons as essential for national security and global leadership. This is very similar to the current AI story in the US political sphere: key stakeholders view AI as a tool for international advantage. In both cases, the discussion is led by a small number of key individuals. In the 1940s and 1950s, figures like Edward Teller, Lewis Strauss and Curtis LeMay[8] championed aggressive nuclear development with political and media backing. Today, we have the leaders of the major AI labs, such as Sam Altman (OpenAI), Demis Hassabis (Google DeepMind) and Elon Musk (xAI), who hold similar, if more nuanced, positions.

In post-war UK, nuclear capability was similarly linked to maintaining global power status, while in France, de Gaulle emphasized nuclear weapons as essential for autonomy from both U.S. and Soviet influence. These historical parallels are particularly relevant as Aschenbrenner and others argue for accelerated AGI development to preempt Chinese capabilities. However, current tentative evidence suggests that China shows concern for safety issues, openness to international cooperation, and no apparent state-led urgency to surpass U.S. capabilities.[9]

The opposition to nuclear weapons offers parallels to resistance against unrestricted AGI development. Individual scientists like Szilard and Fermi mirror contemporary figures like Geoffrey Hinton and Yoshua Bengio[10] in their ethical concerns. A broader parallel exists in the early antinuclear movement of 1945-1946, when concerned atomic scientists formed the Federation of American Scientists and the Emergency Committee of Atomic Scientists.[11] Both organizations advocated civilian control of atomic energy and international cooperation rather than an arms race. Similarly, today organizations and movements such as the Future of Life Institute, the Machine Intelligence Research Institute, the Center for AI Safety, PauseAI and many others are passionate about their missions but have gained limited political traction in terms of concrete action.

Mainstream media coverage

The mainstream media was initially sympathetic to scientists' concerns in 1945-46, but quickly turned hostile towards them, portraying them as naive, soft, or subversive. Following the Berlin Crisis[12] and the first Soviet nuclear test, key media narratives shifted from advocating international control to emphasizing nuclear supremacy as essential for security, and remained there for fifteen years.

Analysis of The New York Times and The Washington Post archives demonstrates this shift in media stance. The New York Times editorials in 1946 supported the Acheson-Lilienthal Report, which proposed international control of atomic energy.[13] Both newspapers supported the Baruch Plan[14] for international oversight. Already in fall 1945, numerous editorials supported creating international governance of atomic bombs.[15] The New York Times argued that “The solution must lie in ultimate international control of the atomic bomb and other peculiarly destructive weapons subsequently invented”,[16] and reported that “[…] scientists see hope only in American cooperation with the rest of the world in controlling atomic energy as a weapon by world authority.”[17]

The Washington Post similarly published pieces emphasizing cooperation.[18] In October 1945, it quoted President Truman: “The hope of civilization lies in international arrangements looking, if possible, to the renunciation of the use and development of the atomic bomb […].”[19] It also quoted an anonymous officer: “It may be we should sit down now with the other nations, before this happens, and arrive at some kind of a convention to control atomic bomb.”[20]

The New York Times’s tone had already changed by 1947, when it published pieces advocating American nuclear superiority.[21] After the 1949 Soviet test, the media pushed for rapid weapons development and portrayed the hydrogen bomb as essential. With nuclear superiority framed as crucial for national survival, criticism became rare in the mainstream press and dissenting views were excluded. By 1950, The Washington Post treated the arms race as self-evident and supported the development of the hydrogen bomb, even though a single such bomb would be far more destructive than the Hiroshima and Nagasaki bombs combined.[22]

Coverage of nuclear tests also shows this transformation clearly. In 1946, both papers gave significant space to scientists' concerns about testing. By the mid-1950s, test coverage focused primarily on technical achievements and military significance, with minimal attention to health or environmental concerns. Technical details about weapon yields and delivery systems replaced earlier coverage of international control efforts, and scientists' warnings about nuclear dangers, once widely published, largely disappeared from the newspapers.

Seed of change

What drove such a rapid change in the newspapers' tone? It reflected a broader change in American society, from seeing nuclear weapons as a unique threat requiring international cooperation to viewing them as essential tools of national power. Shifts in international politics affected both the public and politicians: growing Cold War tensions and Soviet nuclear capabilities heightened American fears. Simultaneously, the scientific community's influence on nuclear policy diminished, particularly after the Oppenheimer hearing.[23] More fundamentally, this shift illustrates how various actors shape generalized opinions that ultimately drive political decisions. Major newspapers, the era's leading media format, both influenced and reflected the evolving nuclear discourse, and different stakeholders tried to use them to their advantage.

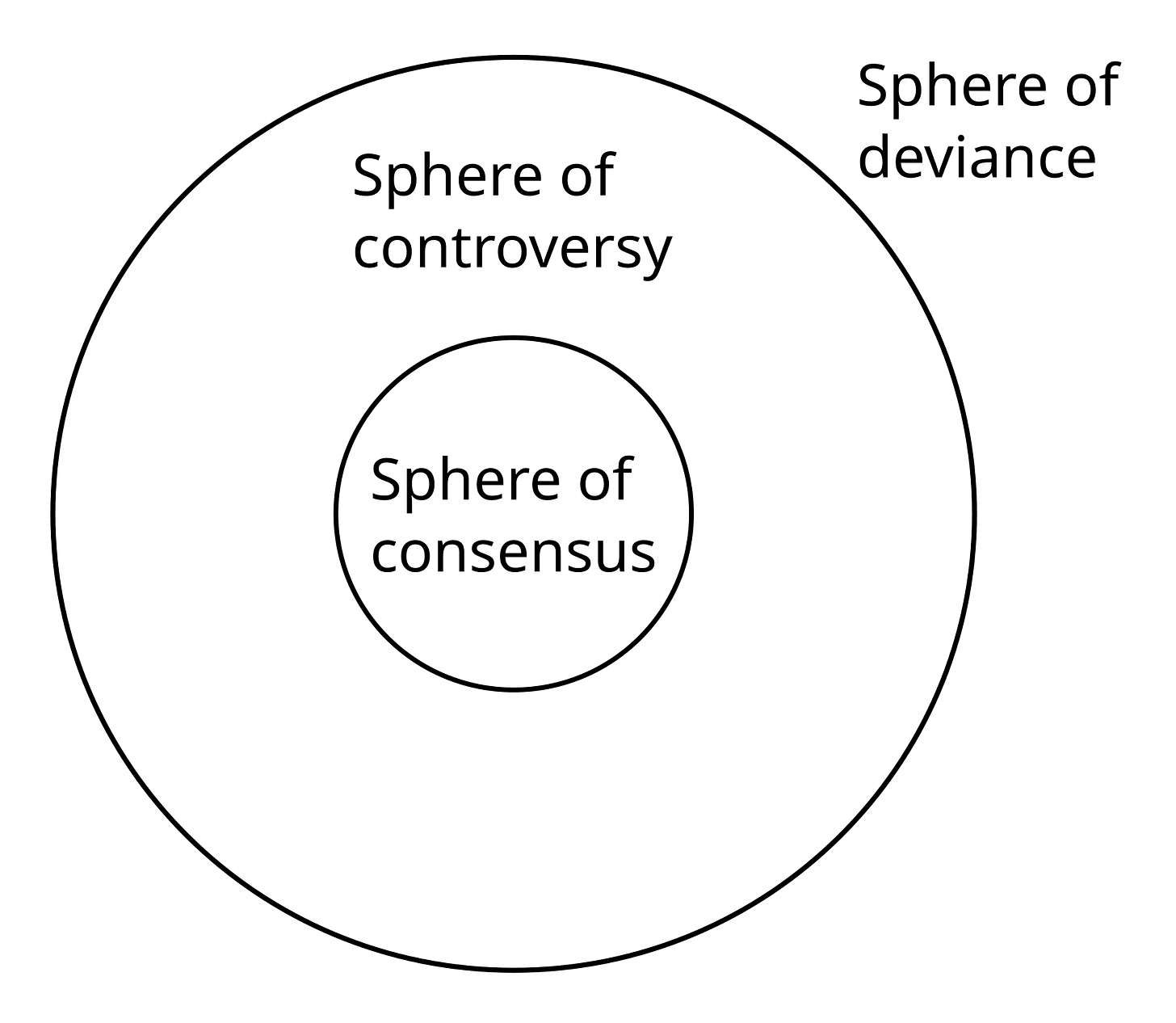

Not all topics or perspectives are admitted to public discourse, and the range of accepted opinions shifts over time. After the Vietnam War, journalism historian Daniel C. Hallin developed his theory of political discourse. Hallin’s spheres are a concept similar to the Overton window and the opinion corridor. From Oxford Reference:

The sphere of consensus is a province of implicit agreement wherein journalists present the ‘official line’ as the only correct point of view. The sphere of legitimate controversy is a province of objectivity wherein issues can be debated among different parties representing a plurality of views. The sphere of deviance is a province excluding or ridiculing those whose views are considered to be too radical, irresponsible, or even dangerous to be given a fair hearing.

The key priority here is understanding the dynamics by which information and topics shift from one sphere to another, i.e., the anatomy of change. In particular, this understanding should be applied to the discussion around AI safety, especially in the context of international governance and policy. The assumption that politicians operate independently of public opinion doesn't hold. The relationship between political decision-making and public sentiment follows, at least to a meaningful extent, identifiable patterns and causal dynamics. There is intrinsic value in understanding these patterns, as they reveal how public discourse can influence even the highest levels of policy-making.

The nuclear era reveals several key patterns in how transformative technologies reshape public discourse, offering lessons for AI governance. An initial period of scientific concern and calls for international cooperation was followed by a shift toward national security framing. We are in the midst of this transition, which makes it crucial to use the current window before calls for cooperation are pushed into the sphere of deviance, as happened with nuclear weapons.

Mainstream media is still willing to give voice, even quite extensively, to those worried about the trajectory, which provides, for now, an opportunity to challenge inevitability narratives. Eventually, technical achievements may overshadow safety considerations in public discussion. We are seeing early elements of this, though not yet widely; once something like advanced and reliable AI assistants arrives, the shift is likely to become concrete.[24] Most concerning is the pattern of marginalizing dissenting scientific voices as a technology becomes tied to national interests. While this shift is not yet state-driven, the proposed 'Manhattan Project for AGI,' endorsed by a U.S. government commission, signals potential rapid change.

Conclusion: Lesson to Learn

The stories of nuclear weapons development and the rapid progress of AI research differ in many ways, as do our era and the Cold War. Nonetheless, both technologies could have catastrophic consequences. Advanced AI threats may emerge not only from leading labs with vast computing resources, but also through increasingly capable open-weight models. Still, this analysis shows that nothing is set in stone, including the perceived imperative to race toward AGI. Just as social dynamics shaped technological development in the 1940s and 1950s, they continue to influence today's trajectories.

These historical parallels suggest several concrete steps for shaping AI governance narratives.

- Maintain the current window of opportunity in which mainstream media remains receptive to safety concerns, avoiding the rapid closure that occurred with nuclear discourse. Understanding the mechanics that determine which narratives become, and stay, influential is valuable for achieving this.

- Prioritize technical safety discussions rather than allowing them to be overshadowed by capability achievements.

- Establish international dialogue channels before national security narratives become dominant. This is the hardest part: it proved impossible during the nuclear age once Cold War tensions escalated, and only the Cuban Missile Crisis broke the standoff. Yet history also shows that it is doable.

Unlike our predecessors in the nuclear age, we have the advantage of historical hindsight. We must use it wisely to avoid repeating the pattern where technology outpaces our ability to govern it responsibly.

[1] Aschenbrenner’s paper has, of course, also drawn a lot of criticism. As I understand it, however, his view is popular on Capitol Hill and among the key players of the game, probably because he streamlines and bypasses many technological issues and crafts an appealing and familiar narrative. The Stargate Project, announced at the White House this week, seems to confirm this intuition.

[2] Situational Awareness, Part IIId, “The Free World Must Prevail,” subsection “The Authoritarian Peril”.

[3] Germany surrendered on 8 May 1945, and the Trinity test was conducted on 16 July 1945.

[4] France (1960), China (1964), India (1974), Pakistan (1998), North Korea (2006) and Israel (unknown).

[5] It was common public knowledge that a world war fought with nuclear warheads would devastate civilization. It is therefore reasonable to assume that at least some individuals in leadership positions suspected that building ever more weapons and ever more advanced missile systems did not lessen the risk of ending the world as we know it.

[6] During the 1962 Cuban Missile Crisis, the Soviet deployment of nuclear missiles in Cuba mirrored U.S. deployments in Turkey. The U.S. ordered a naval blockade, escalating superpower tensions. A Soviet submarine nearly launched a nuclear torpedo at U.S. ships, an action that would likely have triggered a nuclear world war.

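[7] The Strategic Arms Limitation Talks (SALT I) produced two agreements signed in 1972: the Anti-Ballistic Missile Treaty, which limited defensive systems to preserve 'mutual vulnerability' and thereby stabilize the nuclear balance, and the Interim Agreement, which froze the number of strategic ballistic missile launchers.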

[8] Edward Teller, a Manhattan Project scientist, became the hydrogen bomb's chief advocate. Lewis Strauss, as Atomic Energy Commission Chairman (1953-1958), championed aggressive nuclear development and testing. Curtis LeMay, Strategic Air Command leader and later Air Force Chief of Staff, developed a strategy of massive nuclear retaliation and advocated maintaining nuclear superiority.

[9] The translation of China’s 2017 “A Next Generation Artificial Intelligence Development Plan” shows that China does not have, at least officially, the ambitions it is accused of. See especially the analysis in Garrison Lovely’s excellent post, and more recently in two posts by Zvi Mowshowitz.

[10] Despite being part of the Manhattan Project, Leo Szilard opposed the actual use of nuclear weapons, hoping that their mere existence would be enough to end the war. He was also, correctly, worried about a potential nuclear arms race between the U.S. and the Soviet Union. Later, Szilard lobbied to place nuclear energy under civilian control. Enrico Fermi opposed the later development of the hydrogen bomb. The parallel here is that Yoshua Bengio and Geoffrey Hinton are pioneers of modern AI research; together with Yann LeCun, they received the 2018 Turing Award for their foundational work on deep learning. Both Bengio and Hinton have taken a cautious approach to the development of AI systems and have warned about the existential risks these systems pose.

[11] The Federation of American Scientists (FAS) was formed in 1945 by atomic scientists from the Manhattan Project. Its goals were to prevent nuclear war, promote peaceful use of atomic energy, and advance responsible science policy. It still exists. The Emergency Committee of Atomic Scientists was established in 1946 by Albert Einstein and Leo Szilard. It aimed to educate the public about the dangers of atomic weapons and promote international control of nuclear energy, and it dissolved in 1951.

[12] The Berlin Blockade (1948-1949) was the first major international crisis of the Cold War. The Soviet Union blocked the Western Allies' access to West Berlin, which escalated tension between the West and the Soviets.

[13] The New York Times: “The Atomic Energy Report” (March 30, 1946) and “Atomic Research” (April 14, 1946).

[14] The Baruch Plan was a 1946 proposal by the U.S. government under which the United States would decommission all of its atomic weapons and transfer nuclear technology on the condition that all other countries pledged not to produce atomic weapons and agreed to an adequate system of inspection, including monitoring, policing, and sanctions. The plan also proposed internationalizing fission energy through an International Atomic Development Authority.

[15] The New York Times: "World Union Proposed" (Sept. 16, 1945); "Problems of Atomic Energy" (Oct. 7, 1945); "Peace by Law Our One Hope" (Oct. 10, 1945). Whether the atomic bomb should be shared with the Russians was also frequently discussed; see The New York Times, “Truman Cabinet Reflects a National Cleavage” (Sept. 24, 1945), and The Washington Post on the same day, “The Atomic Secret”.

[16] The New York Times, "The Charter's Coming Test" (Aug. 25, 1945).

[17] The New York Times, "Problem of the Bomb" (Oct. 13, 1945).

[18] The Washington Post: "Secrecy on Weapons" (Sept. 2, 1945); "Sharing the new bomb" (Sept. 3, 1945); "A Free World Republic: Atomic Power Brings Responsibility" (Sept. 16, 1945); "Controlling the atom" (Sept. 24, 1945); "The International Atom" (Sept. 29, 1945); "Control of atoms" (Oct. 4, 1945); "Atomic policy" (Oct. 5, 1945).

[19] The Washington Post, "Truman Proposes Eventual Talks With Other Nations" (Oct. 4, 1945).

[20] The Washington Post, "Experts Favor Delayed-Action Bomb for Any Warship Test" (Oct. 1, 1945).

[21] The New York Times, "Disarmament - the issue" (Feb. 4, 1947).

[22] The Washington Post: "Speeding Up of A-Bombs is discussed" (Sept. 28, 1949); "U.S. Answer to the Bomb" (Oct. 2, 1949); "Views on the H-bomb" (Feb. 1, 1950).

[23] The Oppenheimer security clearance hearing was a four-week proceeding in 1954 in which the United States Atomic Energy Commission (AEC) examined the background, actions, and associations of J. Robert Oppenheimer, resulting in the revocation of his Q clearance. It is considered the turning point for scientists' role in American society: afterwards, their role was limited to that of experts on narrow scientific issues rather than participants in the larger policy discussion.

[24] It is curious, though, that breakthroughs such as AlphaFold have not received wide mainstream media coverage, despite the remarkable possibilities they already create in their current form. The general audience probably needs major applications that affect it first-hand, such as ChatGPT.
