[linkpost] Self-Rewarding Language Models

post by Jacob G-W (g-w1) · 2024-01-21T00:30:10.923Z · LW · GW · 2 comments

This is a link post for https://arxiv.org/abs/2401.10020

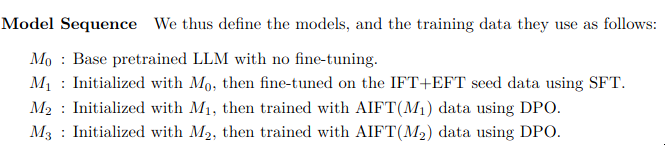

Abstract: We posit that to achieve superhuman agents, future models require superhuman feedback in order to provide an adequate training signal. Current approaches commonly train reward models from human preferences, which may then be bottlenecked by human performance level, and secondly these separate frozen reward models cannot then learn to improve during LLM training. In this work, we study Self-Rewarding Language Models, where the language model itself is used via LLM-as-a-Judge prompting to provide its own rewards during training. We show that during Iterative DPO training that not only does instruction following ability improve, but also the ability to provide high-quality rewards to itself. Fine-tuning Llama 2 70B on three iterations of our approach yields a model that outperforms many existing systems on the AlpacaEval 2.0 leaderboard, including Claude 2, Gemini Pro, and GPT-4 0613. While only a preliminary study, this work opens the door to the possibility of models that can continually improve in both axes.
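To make the loop in the abstract a bit more concrete, here is a minimal sketch of how one round of self-generated preference data might be assembled. This is my reading of the abstract, not the paper's code: `generate` and `judge` are hypothetical stand-ins for sampling from the current model and for scoring a response with the same model via an LLM-as-a-Judge prompt, and the "highest score vs. lowest score" pairing rule, the `num_candidates` default, and the `prompt`/`chosen`/`rejected` layout are all assumptions made for illustration.

```python
from typing import Callable, Dict, List


def build_preference_pairs(
    prompts: List[str],
    generate: Callable[[str], str],      # hypothetical: samples one response from the current model
    judge: Callable[[str, str], float],  # hypothetical: the same model scoring (prompt, response)
    num_candidates: int = 4,             # assumed number of samples per prompt
) -> List[Dict[str, str]]:
    """Build (prompt, chosen, rejected) records from the model's own judgments."""
    pairs = []
    for prompt in prompts:
        # Sample several candidate responses for the same prompt.
        candidates = [generate(prompt) for _ in range(num_candidates)]
        # Let the model grade its own candidates.
        scored = [(judge(prompt, c), c) for c in candidates]
        best_score, best = max(scored, key=lambda sc: sc[0])
        worst_score, worst = min(scored, key=lambda sc: sc[0])
        # A tie carries no preference signal, so skip it (assumed rule).
        if best_score == worst_score:
            continue
        pairs.append({"prompt": prompt, "chosen": best, "rejected": worst})
    return pairs
```

The resulting records would then feed a DPO-style update, and the updated model would serve as both generator and judge for the next round. Nothing in this sketch checks the judge against any outside signal, which is the property the commentary below worries about.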

This seems like a significant step towards recursive self-improvement. It does not directly change the optimization algorithm or the weights, but it is a proxy for that. If the network had some deceptive behavior, it seems (from what I understand) that it could become more deceptive by providing itself training data that reinforces that behavior. I haven't thought about it much, but this could also cause weird feedback loops, where flukes (or random fluctuations) in the grading push the models in weird directions, since the grading model is the (n-1)th model.

2 comments

Comments sorted by top scores.

comment by Charlie Steiner · 2024-01-21T01:26:56.306Z · LW(p) · GW(p)

I'm reminded of the Real Genius scene where they're celebrating building the death laser and Mitch says "Let the engineers figure out a use for it, that's not our concern."

Which in turn reminds me of "Once the rockets go up, who cares where they come down? That's not my department, says Wernher von Braun."

Replies from: nathan-helm-burger

comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-01-21T06:39:34.686Z · LW(p) · GW(p)

I think about that song mocking Wernher von Braun a lot these days.