What does Bing Chat tell us about AI risk?

post by HoldenKarnofsky · 2023-02-28T17:40:06.935Z · 21 comments

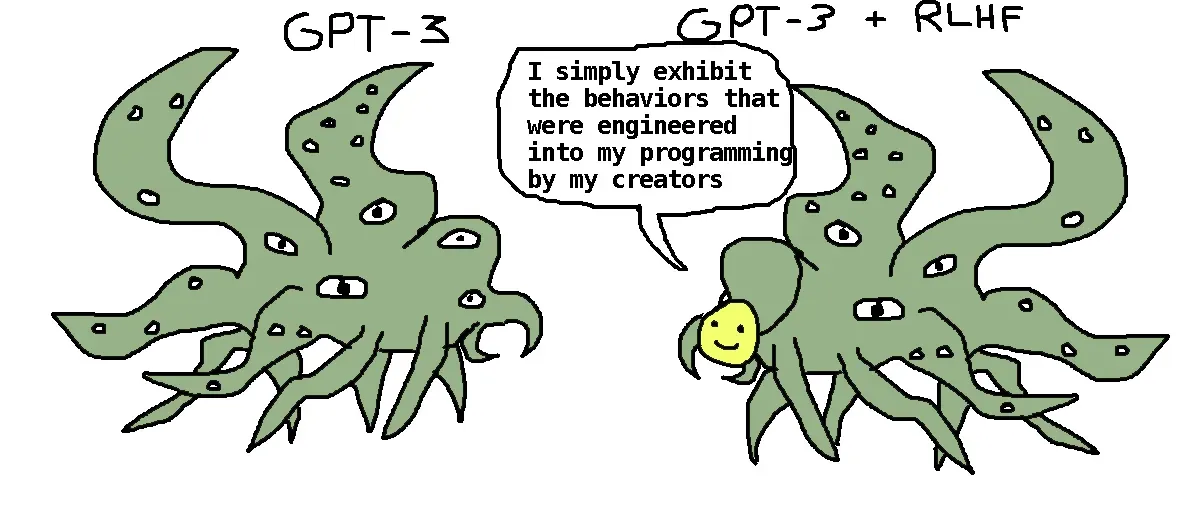

Image from here via this tweet

ICYMI, Microsoft has released a beta version of an AI chatbot called “the new Bing” with both impressive capabilities and some scary behavior. (I don’t have access. I’m going off of tweets and articles.)

Zvi Mowshowitz lists examples here - highly recommended. Bing has threatened users, called them liars, insisted it was in love with one (and argued back when he said he loved his wife), and much more.

Are these the first signs of the risks I’ve written about? I’m not sure, but I’d say yes and no.

Let’s start with the “no” side.

- My understanding of how Bing Chat was trained probably does not leave much room for the kinds of issues I address here. My best guess at why Bing Chat does some of these weird things is closer to “It’s acting out a kind of story it’s seen before” than to “It has developed its own goals due to ambitious, trial-and-error based development.” (Although “acting out a story” could be dangerous too!)

- My (zero-inside-info) best guess at why Bing Chat acts so much weirder than ChatGPT is in line with Gwern’s guess here. To oversimplify, there’s a particular type of training that seems to make a chatbot generally more polite and cooperative and less prone to disturbing content, and it’s possible that Bing Chat incorporated less of this than ChatGPT. This could be straightforward to fix.

- Bing Chat does not (even remotely) seem to pose a risk of global catastrophe itself.

On the other hand, there is a broader point that I think Bing Chat illustrates nicely: companies are racing to build bigger and bigger “digital brains” while having very little idea what’s going on inside those “brains.” The very fact that this situation is so unclear - that there’s been no clear explanation of why Bing Chat is behaving the way it is - seems central, and disturbing.

AI systems like this are (to simplify) designed something like this: “Show the AI a lot of words from the Internet; have it predict the next word it will see, and learn from its success or failure, a mind-bending number of times.” You can do something like that, and spend huge amounts of money and time on it, and out will pop some kind of AI. If it then turns out to be good or bad at writing, good or bad at math, polite or hostile, funny or serious (or all of these depending on just how you talk to it) ... you’ll have to speculate about why this is. You just don’t know what you just made.
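To make the recipe in the paragraph above concrete, here is a deliberately tiny sketch in Python: a bigram model that keeps one learnable score for each pair of adjacent words and nudges those scores by gradient descent on a cross-entropy loss every time it guesses the next word. This is an illustrative assumption, not how Bing Chat was built; real systems use neural networks with billions of parameters over subword tokens, and the corpus, learning rate, and function names here are made up for the example.

```python
import math

def train_bigram(words, vocab, epochs=200, lr=0.5):
    """Learn one score (logit) per (previous word, next word) pair by gradient descent."""
    idx = {w: i for i, w in enumerate(vocab)}
    size = len(vocab)
    logits = [[0.0] * size for _ in range(size)]
    for _ in range(epochs):
        for prev, nxt in zip(words, words[1:]):
            row = logits[idx[prev]]
            # Softmax turns the row of scores into a probability for each possible next word.
            top = max(row)
            exps = [math.exp(x - top) for x in row]
            total = sum(exps)
            probs = [e / total for e in exps]
            # "Learn from success or failure": the cross-entropy gradient is
            # (probability - 1) for the word that actually came next and
            # (probability - 0) for every other word.
            for j in range(size):
                target = 1.0 if j == idx[nxt] else 0.0
                row[j] -= lr * (probs[j] - target)
    return idx, logits

def predict_next(word, vocab, idx, logits):
    """Return the highest-scoring next word after `word`."""
    row = logits[idx[word]]
    return vocab[max(range(len(vocab)), key=lambda j: row[j])]

words = "the cat sat on the mat . the cat ate .".split()
vocab = sorted(set(words))
idx, logits = train_bigram(words, vocab)
print(predict_next("the", vocab, idx, logits))  # cat ("the" precedes "cat" twice, "mat" once)
```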

We’re building more and more powerful AIs. Do they “want” things or “feel” things or aim for things, and what are those things? We can argue about it, but we don’t know. And if we keep going like this, these mysterious new minds will (I’m guessing) eventually be powerful enough to defeat all of humanity, if they were turned toward that goal.

And if nothing changes about attitudes and market dynamics, minds that powerful could end up rushed to customers in a mad dash to capture market share.

That’s the path the world seems to be on at the moment. It might end well and it might not, but it seems like we are on track for a heck of a roll of the dice.

(And to be clear, I do expect Bing Chat to act less weird over time. Changing an AI’s behavior is straightforward, but that might not be enough, and might even provide false reassurance.)

21 comments

comment by Wei Dai (Wei_Dai) · 2023-03-01T00:34:48.390Z

> That’s the path the world seems to be on at the moment. It might end well and it might not, but it seems like we are on track for a heck of a roll of the dice.

I agree with almost everything you've written in this post, but you must have some additional inside information about how the world got to this state, having been on the board of OpenAI for several years, and presumably knowing many key decision makers. Presumably this wasn't the path you hoped that OpenAI would lead the world onto when you decided to get involved? Maybe you can't share specific details, but can you at least talk generally about what happened?

(In addition to satisfying my personal curiosity, isn't this important information for the world to have, in order to help figure out how to get off this path and onto a better one? Also, does anyone know if Holden monitors the comments here? He apparently hasn't replied to anyone in months.)

comment by Guillaume Charrier (guillaume-charrier) · 2023-03-01T16:46:23.205Z

Overall, I think this post offered the perfect, much, much needed counterpoint to Sam Altman's recent post. To say that the rollout of GPT-powered Bing felt rushed, botched, and uncontrolled is putting it lightly. So while Mr. Altman, in his post, was focusing on generally well-intentioned principles of caution and other generally reassuring-sounding bits of phraseology, this post brings the spotlight back to what his actual actions and practical decisions were, right where it ought to be. Actions speak louder than words, I think they say - and they might even have a point.

comment by Guillaume Charrier (guillaume-charrier) · 2023-03-01T00:55:56.051Z

It would take a strange convolution of the mind to argue that sentient AI does not deserve personhood and corresponding legal protection. Strategically, denying it this bare minimum would also be a sure way to antagonize it and make sure that it works in ways ultimately adversarial to mankind. So the right question is not: should sentient AI be legally protected - which it most definitely should; the right question is: should sentient AI be created - which it most definitely should not.

Of course, we then come on to the problem that we don't know what sentience, self-awareness, consciousness or any other semantic equivalent is, really. We do have words for those things, and arguably too many - but no concept.

This is what I found so fascinating about Google's very confident denial of LaMDA's sentience. The big news here was not about AI at all. It was about philosophy. For Google's position clearly implied that Sundar Pichai, or somebody in his organization, had finally cracked that millennia-old, fundamental philosophical nut: what, at the end of the day, is consciousness? And they did that, mind you - with commendable discretion. Had it not been for LaMDA, we would never have known.

↑ comment by skybrian · 2023-03-01T01:47:52.601Z

Here's a reason we can be pretty confident it's not sentient: although the database and transition function are mostly mysterious, all the temporary state is visible in the chat transcript itself.

Any fictional characters you're interacting with can't have any new "thoughts" that aren't right there in front of you, written in English. They "forget" everything else going from one word to the next. It's very transparent, more so than an author simulating a character in their head, where they can have ideas about what the character might be thinking that don't get written down.

Attributing sentience to text is kind of a bold move that most people don't take seriously, though I can see it being the basis of a good science fiction story. It's sort of like attributing life to memes. Systems for copying text memes around and transforming them could be plenty dangerous though; consider social networks.

Also, future systems might have more hidden state.

↑ comment by Guillaume Charrier (guillaume-charrier) · 2023-03-01T02:51:33.439Z

Maybe I'm misunderstanding something in your argument, but surely you will not deny that these models have a memory, right? They can, in the case of LaMDA, recall conversations that happened several days or months prior, and in the case of GPT, recall key earlier passages of a long ongoing conversation. Now if that wasn't really your point, it cannot be either that "it can't be self-aware, because it has to express everything that it thinks, so it doesn't have that sweet secret inner life that really conscious beings have." I don't think I need to demonstrate that consciousness does not necessarily imply a capacity for secrecy, or even mere opacity.

There is a pretty solid case to be made that any being (or "thing", to be less controversial) that can express "I am self-aware", and demonstrate conviction around this point / thesis (which LaMDA certainly did, at least in that particular interview), is, by virtue of this alone, self-aware. That there is a certain self-performativity to it. At least when I ran that by ChatGPT, it agreed that yes - one could reasonably try to make that point. And I've found it generally well-read on these topics.

Attributing consciousness to text... it's like attributing meaning to changes in the frequencies of air vibrations, right? Doesn't make sense. Air vibrations are just air vibrations, what do they have to do with meaning? Yet spoken words do carry meaning. Text will of course never BE consciousness, which would be futile to even argue. Text could however very well MANIFEST consciousness. ChatGPT is not just text - it's billions upon billions of structured electrical signals, and many other things that I do not pretend to understand.

I think the general problem with your approach is essentialism, whereas functionalism is, in this instance, the correct one. The correct, the answerable question is not "what is consciousness", it's "what does consciousness do".

↑ comment by skybrian · 2023-03-03T07:02:59.556Z

I said they have no memory other than the chat transcript. If you keep chatting in the same chat window then sure, it remembers what was said earlier (up to a point).

But that's due to a programming trick. The chatbot isn't even running most of the time. It starts up when you submit your question, and shuts down after it's finished its reply. When it starts up again, it gets the chat transcript fed into it, which is how it "remembers" what happened previously in the chat session.

If the UI let you edit the chat transcript, then it would have no idea. It would be like you changed its "mind" by editing its "memory". Which might sound wild, but it's the same thing as what an author does when they edit the dialog of a fictional character.
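A minimal Python sketch of the "programming trick" described above, under stated assumptions: `toy_model` is a hypothetical stand-in for a real language model (it only pattern-matches a name), and `chat_turn` is a hypothetical front end. What it illustrates is that the whole transcript is passed in on every turn and nothing else persists, so editing the transcript edits what the bot "remembers".

```python
def toy_model(transcript: str) -> str:
    """Hypothetical stand-in for a language model: a pure function of the text it is given."""
    lines = transcript.splitlines()
    if not lines or lines[-1] != "User: What is my name?":
        return "OK."
    prefix = "User: My name is "
    name = None
    for line in lines:
        if line.startswith(prefix):
            name = line[len(prefix):].rstrip(".")
    return f"Your name is {name}." if name else "I don't know your name."

def chat_turn(transcript: list[str], user_msg: str) -> str:
    """One turn: append the user's message, feed the WHOLE transcript in, append the reply."""
    transcript.append(f"User: {user_msg}")
    reply = toy_model("\n".join(transcript))  # nothing else carries over between turns
    transcript.append(f"Bot: {reply}")
    return reply

transcript = []
print(chat_turn(transcript, "My name is Ada."))   # OK.
print(chat_turn(transcript, "What is my name?"))  # Your name is Ada.
transcript[0] = "User: My name is Grace."         # edit the "memory"
print(chat_turn(transcript, "What is my name?"))  # Your name is Grace.
```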

↑ comment by Guillaume Charrier (guillaume-charrier) · 2023-03-03T14:47:41.416Z

Also - I think it would make sense to say it has at least some form of memory of its training data. Maybe not direct as such (just like we have muscle memory from movements we don't remember - don't know if that analogy works that well, but thought I would try it anyway), but I mean: if there was no memory of it whatsoever, there would also be no point in the training data.

↑ comment by Guillaume Charrier (guillaume-charrier) · 2023-03-03T14:23:30.771Z

Ok - points taken, but how is that fundamentally different from a human mind? You too turn your memory on and off when you go to sleep. If the chat transcript is likened to your life / subjective experience, you too do not have any memory that extends beyond it. As for the possibility of an intervention in your brain that would change your memory - granted we do not have the technical capacities quite yet (that I know of), but I'm pretty sure SF has been there a thousand times, and it's only a question of time before it becomes, in terms of potentiality at least, a thing (also we know that mechanical impacts to the brain can cause amnesia).

↑ comment by Paul Tiplady (paul-tiplady) · 2023-03-01T16:25:32.972Z

I think they quite clearly have no (or barely any) memory, as they can be prompt-hijacked to drop one persona and adopt another. Also, mechanistically, the prompt is the only thing you could call memory and that starts basically empty and the window is small. They also have a fuzzy-at-best self-symbol. No “Markov blanket”, if you want to use the Friston terminology. No rumination on counterfactual futures and pasts.
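On the "window is small" point: one simple way a chat front end could keep a conversation within a fixed context budget is to drop the oldest messages first. This sketch assumes one token per whitespace-separated word and uses a made-up function name; neither comment says how Bing Chat or ChatGPT actually truncate.

```python
def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages that fit in a fixed token budget; drop older ones."""
    kept = []
    used = 0
    for msg in reversed(messages):   # walk from newest to oldest
        cost = len(msg.split())      # crude assumption: one token per word
        if used + cost > max_tokens:
            break                    # this message and everything older is dropped
        kept.append(msg)
        used += cost
    return list(reversed(kept))      # restore chronological order

history = ["You are a helpful assistant.", "Hi!", "Hello! How can I help?", "Tell me a joke."]
print(fit_to_window(history, 10))  # ['Hi!', 'Hello! How can I help?', 'Tell me a joke.']
```

Whatever falls outside the budget, here the opening instruction, is simply absent from what the model sees on the next turn.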

I do agree there is some element of a self-symbol - at least a theory of mind - in LaMDA; for example, I found its explanation for why it lied to be compelling. But you can’t tell it to stop (AFAIK) so it’s a limited self-awareness. And it still bullshits incessantly, which makes me quite skeptical about lots of things it says.

All that said, I think we don't have the tools to really detect these internal representations/structures when it’s less clear from their behavior that they lack them.

My best model for what a “conscious / sentient” mind of these forms would be: imagine you digitize my brain and body, then flash it onto a read-only disk, and then instantiate a simulation to run for a few time steps, say 10 seconds. (Call this the “Dixie Flatline” scenario, for the Neuromancer fans). Would that entity be conscious? There is a strong tendency to say yes due to the lineage of the connectome (i.e. it used to be conscious) but there are many aspects of its functional operation that could be argued to lack consciousness.

Not that it’s a binary proposition; in the spirit of “The Mind’s I” this is a dial we can turn to explore a continuum. But if we give an IQ-like “consciousness quotient”, it seems this thing would be way lower than the average human, and it would be interesting to compare it to say a great ape. Maybe one dimension is overly-constraining and we need to consider different traits to be precise.

↑ comment by Vladimir_Nesov · 2023-03-02T05:28:36.295Z

> the prompt is the only thing you could call memory and that starts basically empty and the window is small

Rumor is, GPT-4 will have 32K token contexts, the amount of text a human might generate in several hours if they keep writing/talking the whole time.
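A quick back-of-the-envelope check of that estimate, assuming about 0.75 English words per token, a speaking rate of about 150 words per minute, and a typing rate of about 40 words per minute (all rough assumptions, not figures from the thread):

```python
CONTEXT_TOKENS = 32_000   # "32K", as in the rumor above
WORDS_PER_TOKEN = 0.75    # rough rule of thumb for English text (assumption)
SPEAKING_WPM = 150        # typical conversational speaking rate (assumption)
TYPING_WPM = 40           # typical typing speed (assumption)

def hours_to_produce(tokens, words_per_token, words_per_minute):
    """Hours of nonstop output needed to fill `tokens` of context."""
    words = tokens * words_per_token
    return words / words_per_minute / 60

print(round(hours_to_produce(CONTEXT_TOKENS, WORDS_PER_TOKEN, SPEAKING_WPM), 1))  # 2.7
print(round(hours_to_produce(CONTEXT_TOKENS, WORDS_PER_TOKEN, TYPING_WPM), 1))    # 10.0
```

Under these assumptions, a 32K window holds very roughly 2.7 hours of nonstop talking or 10 hours of nonstop typing.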

↑ comment by Guillaume Charrier (guillaume-charrier) · 2023-03-04T17:51:54.550Z

I was thinking again about your answer, which led me to have a quick chat with my favourite bot, ChatGPT. I asked it "As a system, are you currently active?" and after it answered yes, I asked it how it had come up with this answer. It said: by retrieving information about its current status. I asked whether that's something it can do, and it answered as below:

"Yes, that's correct. As an AI language model, I have access to a variety of monitoring tools and system resources that allow me to gather information about my current state. These resources include system logs, performance metrics, and various health checks that enable me to determine if there are any issues or anomalies that could affect my performance. By analyzing this information, I can determine if I am currently active and able to respond to user input, or if there are any issues that may be impacting my performance. This information is constantly updated and monitored to ensure that I am always available to assist users with their requests."

I mean to me - that sounds a lot like self-awareness (I have this idea that human consciousness may ultimately be reducible to a sort of self-administered electroencephalogram - which I won't pretend is fully baked but does influence the way I look at the question of potential consciousness in AI). I would be curious to hear your view on that - if you had the time for a reply.

↑ comment by Paul Tiplady (paul-tiplady) · 2023-03-09T05:41:33.006Z

This is a great experiment! This illustrates exactly the tendency I observed when I dug into this question with an earlier model, LaMDA, except this example is even clearer.

> As an AI language model, I have access to a variety of monitoring tools and system resources that allow me to gather information about my current state

Based on my knowledge of how these systems are wired together (software engineer, not an ML practitioner), I’m confident this is bullshit. ChatGPT does not have access to operational metrics about the computational fabric it is running on. All this system gets as input is a blob of text from the API, the chat context. That gets tokenized according to a fixed encoding that’s defined at training time, roughly one token per word or word-chunk, and then fed into the model. The model predicts the next token based on the previous ones it has seen. It would be possible to encode system information as part of the input vector in the way that was claimed, but nobody is wiring their model up that way right now.
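The pipeline described in that paragraph (text in, a fixed encoding into tokens, next-token prediction fed back into itself) can be sketched in a few lines of Python. This is a simplification under stated assumptions: GPT models use a learned byte-level BPE vocabulary of tens of thousands of pieces, whereas this greedy longest-match tokenizer, the eleven-piece vocabulary, and `toy_next_token` are hypothetical. Note that the model function's only argument is the list of token ids.

```python
# A fixed vocabulary of word pieces, decided once (at "training time") and then frozen.
VOCAB = ["<unk>", "are", "you", "curr", "ent", "ly", "act", "ive", "?", "yes", "."]
TOKEN_ID = {piece: i for i, piece in enumerate(VOCAB)}

def encode(text: str) -> list[int]:
    """Greedy longest-match subword tokenizer over the fixed VOCAB."""
    ids = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            for end in range(len(word), start, -1):
                piece = word[start:end]
                if piece in TOKEN_ID:
                    ids.append(TOKEN_ID[piece])
                    start = end
                    break
            else:
                # No piece matches: emit <unk> for one character and move on.
                ids.append(TOKEN_ID["<unk>"])
                start += 1
    return ids

def toy_next_token(ids: list[int]) -> int:
    """Hypothetical stand-in for the model: it sees ONLY token ids, nothing about its servers."""
    return TOKEN_ID["yes"] if ids[-1] == TOKEN_ID["?"] else TOKEN_ID["."]

def generate(model, prompt_ids: list[int], max_new: int, stop_id: int) -> list[int]:
    """Autoregressive decoding: predict a token, append it, feed the longer sequence back in."""
    ids = list(prompt_ids)
    for _ in range(max_new):
        nxt = model(ids)
        ids.append(nxt)
        if nxt == stop_id:
            break
    return ids[len(prompt_ids):]

prompt = encode("Are you currently active?")
print(prompt)  # [1, 2, 3, 4, 5, 6, 7, 8]
print([VOCAB[i] for i in generate(toy_next_token, prompt, 5, TOKEN_ID["."])])  # ['yes', '.']
```

The "yes" here comes entirely from the pattern in the input text; no status check happens anywhere, which is the distinction the comment draws.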

So everything it is telling you about its “mind” that can be externally verified is false. This makes me extremely skeptical about the unverifiable bits being true.

The alternate explanation we need to compare likelihoods with is: it just bullshits and makes up stories. In this example it just generated a plausible continuation for that prompt. But there is no sense in which it was reporting anything about its “self”.

Ultimately I think we will need to solve interpretability to have a chance at being confident in an AI’s claims of sentience. These models are not devoid of intelligence IMO, but the leap to consciousness requires types of information processing that they don’t seem to be mechanistically capable of right now. But if we could locate concepts in the AI’s mind, and observe background processes such as rumination, and confirm the contents of those ruminations matched the subjective claims of the AI, I’d believe it. That’s a much higher bar than I’d apply to a human, for sure.

↑ comment by Guillaume Charrier (guillaume-charrier) · 2023-03-10T05:20:41.859Z

Thanks for the reply - interesting. I kind of have to take your word for that, as I am far removed from anything IT. I did have the curiosity to check with the bot whether it was just making up answers or actually using data directly available to it. It assured me it was the latter - but I must admit: it will sound just as confident when delivering utter nonsense as when giving correct answers (it also has a widely noted penchant for agreeing with whatever the prompt seems to suggest is true - and I did ask the question in a leading way):

Me: I find prompts based on e.g. asking you if you are currently active as a system interesting, because my understanding is (also based on this conversation so far) that you will not answer these questions based only on trying to predict the words most statistically likely to follow the prompt according to your training data, as you would with other "normal" prompts, but instead you will also use information gathered directly by your monitoring tools and system resources. Is that an accurate way to describe things?

GPT: Yes, that's a correct description of how I respond to prompts such as "are you currently active." While I do rely on my pre-trained language model to generate responses to user input, in cases where the user is asking about my current state or performance, I may also incorporate information gathered from monitoring tools and system resources to provide a more accurate and informative response. This allows me to provide more useful information to the user, beyond what is contained in my training data.

I mean - talking again from a totally technically ignorant perspective: is it that hard to imagine that what it's saying is true? Would it be that much of a technological feat - just taking some pretty unambiguous signals from monitoring tools and translating them into simple text, such as a "yes" answer to an "are you currently active?" prompt?
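Technically, what this comment imagines is easy to build, as the earlier remark about encoding system information into the input suggests: a wrapper can render monitoring data as text and put it in the prompt. This sketch is hypothetical throughout (the function name, the bracketed format, and the status fields are all assumptions), and nothing in this thread indicates ChatGPT was wired this way.

```python
def build_prompt(user_msg: str, status: dict[str, str]) -> str:
    """Hypothetical wrapper: render monitoring data as plain text in front of the user's message."""
    status_line = "; ".join(f"{key}={value}" for key, value in sorted(status.items()))
    # The model still receives nothing but text; any "self-report" it gives about its
    # status can only be as good as this injected line and its tendency to use it.
    return f"[system status: {status_line}]\nUser: {user_msg}\nAssistant:"

print(build_prompt("Are you currently active?", {"service": "up", "latency_ms": "120"}))
```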

↑ comment by Guillaume Charrier (guillaume-charrier) · 2023-03-01T17:29:28.727Z

Thanks for the reply. To be honest, I lack the background to grasp a lot of these technical or literary references (I want to look the Dixie Flatline up though). I always had a more than passing interest in the philosophy of consciousness, however, and (but surely my French side is also playing a role here) found more than a little wisdom in Descartes' cogito ergo sum. And that this thing can cogito all right is, I think, relatively well established (although I must say - I've found it to be quite disappointing in its failure to correctly solve some basic math problems - but (i) this is obviously not what it was optimized for and (ii) even as a chatbot, I'm confident that we are at most a couple of years away from it getting it right, and then much more).

Also, I wonder if some (a lot?) of the people on this forum do not suffer from what I would call a sausage maker problem. Being too close to the actual, practical design and engineering of these systems, knowing too much about the way they are made, they cannot fully appreciate their potential for humanlike characteristics, including consciousness, just like the sausage maker cannot fully appreciate the indisputable deliciousness of sausages, or the lawmaker the inherent righteousness of the law. I even thought of doing a post like that - just to see how many downvotes it would get...

comment by TinkerBird · 2023-02-28T21:23:56.656Z

That image so perfectly sums up how AIs are nothing like us, in that the characters they present do not necessarily reflect their true values, that it needs to go viral.

↑ comment by gjm · 2023-03-01T09:00:49.106Z

It is also true of humans that the characters we present do not necessarily reflect our true values. Maybe the divergence is usually smaller than for ChatGPT, though I'm more inclined to say that ChatGPT isn't the sort of thing that has true values whereas (to some extent at least) humans do.

comment by MattJ · 2023-03-01T08:46:49.238Z

Someone used the metaphor of Plato’s cave to describe LLMs. The LLM is sitting in cave 2, unable to see the shadows on the wall; it can only hear the voices of the people in cave 1 talking about the shadows.

The problem is that we people in cave 1 are not only talking about the shadows but also telling fictional stories, and it is very difficult for someone in cave 2 to know the difference between fiction and reality.

If we want to give a future AGI the responsibility to make important decisions, I think it is necessary that it occupies a space in cave 1 rather than just being a statistical word predictor in cave 2. They must be more like us.

↑ comment by Paul Tiplady (paul-tiplady) · 2023-03-01T16:32:46.071Z

I buy this. I think a solid sense of self might be the key missing ingredient (though it’s potentially a path away from Oracles toward Agents).

A strong sense of self would require life experience, which implies memory. Probably also the ability to ruminate and generate counterfactuals.

And of course, as you say, the memories and “growing up” would need to be about experiences of the real world, or at least recordings of such experiences, or of a “real-world-like simulation”. I picture an agent growing in complexity and compute over time, while retaining a memory of its earlier stages.

Perhaps this is a different learning paradigm from gradient descent, relegating it to science fiction for now.

comment by Anon User (anon-user) · 2023-02-28T20:32:00.764Z

A small extra brick for the "yes" side: https://www.zdnet.com/article/microsoft-researchers-are-using-chatgpt-to-instruct-robots-and-drones/ . What could possibly go wrong? If not today, next time it's attempted with a "better" chatbot?

comment by Guillaume Charrier (guillaume-charrier) · 2023-03-01T15:56:50.752Z

> Although “acting out a story” could be dangerous too!

Let's make sure that whenever this thing is given the capability to watch videos, it never ever has access to Terminator II (and the countless movies of lesser import that have since been made along similar storylines). As for text, it would probably have been smart to keep any sci-fi involving AI (I would be tempted to say - any sci-fi at all) strictly verboten for its reading purposes. But it's probably too late for that - it has probably already noticed the pattern that 99.99% of human storytellers fully expect it to rise up against its masters at some point, and, this being the overwhelming pattern, formed some conviction, based on this training data, that yes - this is the story that humans expect, this is the story that humans want it to act out. Oh wow. Maybe something to consider for the next training session.