The Linguistic Blind Spot of Value-Aligned Agency, Natural and Artificial

post by Roman Leventov · 2023-02-14T06:57:58.036Z

This is a link post for https://arxiv.org/abs/2207.00868

Paper by Travis LaCroix, July 2022.

Abstract:

The value-alignment problem for artificial intelligence (AI) asks how we can ensure that the 'values' (i.e., objective functions) of artificial systems are aligned with the values of humanity. In this paper, I argue that linguistic communication (natural language) is a necessary condition for robust value alignment. I discuss the consequences that the truth of this claim would have for research programmes that attempt to ensure value alignment for AI systems; or, more loftily, designing robustly beneficial or ethical artificial agents.

An attempt to sample the paper:

(1) Main Claim. Linguistic communication is necessary for (the possibility of) robust value alignment.

(2) Alternative Claim. Robust value alignment (between actors) is possible only if those actors can communicate linguistically.

(3) Contrapositive Claim. Without natural language, robust value alignment is not possible.
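
Read propositionally, these three formulations express a single claim. As a rough sketch, write $R$ for "robust value alignment between the actors is possible" and $L$ for "the actors can communicate linguistically". The symbols are introduced here for illustration and are not the paper's notation, and the sketch assumes that "natural language" in (3) names the same capacity as "linguistic communication" in (1) and (2):

$$
\text{(1), (2):}\quad R \rightarrow L
\qquad\qquad
\text{(3):}\quad \neg L \rightarrow \neg R
\qquad\qquad
(R \rightarrow L) \iff (\neg L \rightarrow \neg R)
$$

The last equivalence is ordinary contraposition, so anyone who accepts one formulation is committed to the other two.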

Thus, the simplest way of understanding why we should expect the main claim to hold is as follows:

(1) Principal-agent problems are primarily problems of informational asymmetries.

(2) Value-alignment problems are structurally equivalent to principal-agent problems.

(3) Therefore, value-alignment problems are primarily problems of informational asymmetries.

(4) Any problem that is primarily a problem of informational asymmetries requires information-transferring capacities to be solved, and the more complex (robust) the informational burden, the more complex (robust) the information-transferring capacity that is required.

(5) Therefore, value-alignment problems require information-transferring capacities to be solved, and sufficiently complex (robust) value-alignment problems require robust information-transferring capacities to be solved.

(6) Linguistic communication is a uniquely robust information-transferring capacity.

(7) Therefore, linguistic communication is necessary for sufficiently complex (robust) value alignment.

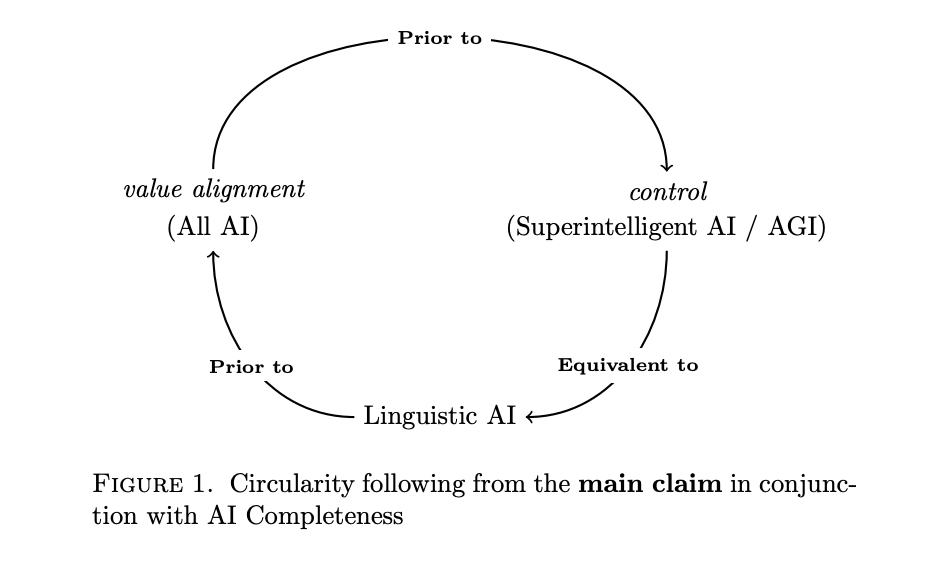

If Bostrom (2014) is correct about language being an AI-complete problem, then linguistic AI is functionally equivalent to human-level AI. If the main claim is true, then linguistic AI is, in some sense, prior to robust value alignment. But, it was suggested above (2.2.3) that value alignment is logically prior to problems of control. The conjunction of these three insights leads to circularity, which may imply that solving robust value alignment problems cannot be achieved until after we have already created an AI system that we are effectively unable to control; see Figure 1.

This implication depends on a nested conditional: If language is an AI-complete problem, then if the main claim is true, robust value alignment may be impossible, at least before the creation of an uncontrollable AI system. If any of the antecedents is false, then this may open the door to some (cautious) optimism about our ability to solve the value-alignment problem prior to the advent of a system that we cannot control. Fortunately, AI completeness is an informal concept, defined by analogy with complexity theory; so, it is possible that Bostrom (2014) is incorrect. This leaves room for work on alignment with linguistic, albeit controllable, AI systems.

Counter-intuitively, the main driver of the value-alignment problem is not misaligned values but informational asymmetries. Thus, value-alignment problems are primarily problems of aligning information. However, this is not to say that values play no role in solving value-alignment problems. Information alone cannot cause action. Even in the classic economic and game-theoretic contexts, values are not objective. As Hausman (2012) highlights, a game form, complete with (objective) payoffs which correspond to states of the world conditional on actions, does not specify a game because it does not specify how the agents value the outcomes of the game form. For a game to be defined, it is not sufficient to know the objective states that would obtain under certain actions; instead, one must also know the preferences (values) of the actors over those (objective) outcomes. Thus, a specification of results needs to coincide with ‘how players understand the alternatives they face and their results’ (Hausman, 2012, 51).
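
The game-form point can be made concrete with a small sketch. The payoff numbers, the two valuation functions, and every name below are hypothetical illustrations, not taken from the paper or from Hausman (2012). The sketch fixes one game form (joint actions mapped to objective outcomes) and shows that it only becomes a game, with equilibria, once each player's valuation of those outcomes is supplied.

```python
from itertools import product

ACTIONS = ("cooperate", "defect")

# Hypothetical game form: joint actions -> objective outcomes
# (material payoff to player 0, material payoff to player 1).
GAME_FORM = {
    ("cooperate", "cooperate"): (3, 3),
    ("cooperate", "defect"): (0, 5),
    ("defect", "cooperate"): (5, 0),
    ("defect", "defect"): (1, 1),
}


def selfish(outcome, me):
    """Value an outcome by one's own material payoff only."""
    return outcome[me]


def altruistic(outcome, me):
    """Value an outcome by the total material payoff of both players."""
    return sum(outcome)


def make_game(game_form, valuations):
    """Turn a game form into a game by applying each player's valuation."""
    return {
        acts: tuple(value(outcome, i) for i, value in enumerate(valuations))
        for acts, outcome in game_form.items()
    }


def pure_nash_equilibria(game):
    """Return joint actions from which no player gains by deviating alone."""
    equilibria = []
    for a0, a1 in product(ACTIONS, repeat=2):
        u0, u1 = game[(a0, a1)]
        best0 = all(u0 >= game[(d, a1)][0] for d in ACTIONS)
        best1 = all(u1 >= game[(a0, d)][1] for d in ACTIONS)
        if best0 and best1:
            equilibria.append((a0, a1))
    return equilibria


selfish_game = make_game(GAME_FORM, (selfish, selfish))
altruistic_game = make_game(GAME_FORM, (altruistic, altruistic))

print(pure_nash_equilibria(selfish_game))     # [('defect', 'defect')]
print(pure_nash_equilibria(altruistic_game))  # [('cooperate', 'cooperate')]
```

The same objective outcomes yield a prisoner's dilemma under one pair of valuations and a coordination-friendly game under another, which is one way to read the claim that a game form alone does not specify a game.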
