Why Job Displacement Predictions are Wrong: Explanations of Cognitive Automation

post by Moritz Wallawitsch (moritz-wallawitsch) · 2023-05-30T20:43:49.615Z · LW · GW


Cross-posted from https://scalingknowledge.substack.com/p/why-job-displacement-predictions

Most predictions about job displacement through large language models (LLMs) are wrong. This is because they don’t have good explanations of how LLMs and human intelligence differ from each other and how they interact with our evolving economy.

Some of the bad, explanation-less predictions include OpenAI’s “80% of the U.S. workforce could have at least 10% of their work tasks affected by the introduction of GPTs”[1] and Goldman Sachs’ “300 million full-time jobs around the world could be automated”.

Expert opinion won't get us far as the growth of knowledge is unpredictable. Stone Age people couldn’t have predicted the invention of the wheel since its prediction necessitates its invention. We need a hard-to-vary explanation to understand a system or phenomenon.

Job Augmentation Examples

Software Engineers: Tools like Copilot and AI startups are automating small tasks such as creating PRs or writing the code base for a game. Some might assume that, for SWEs, this mirrors the industrial revolution's process of breaking down craftsmen's work into smaller, automatable units. However, building a software company is not building an assembly-line product. It is building the factory. The productionized unit of output is the usage of the software service. Engineers, leveraging AI tools, will always be essential for building the factory and maintaining the codebase. Even if one model writes the prompt for another model, we still need engineers to prompt the root of the chain.

Doctors: Google's report on Med-PaLM 2[2], an LLM trained on medical data that achieved 85.4% on a med-school-like exam, may lead some to believe doctors' jobs will be automated. In reality, only certain uncreative aspects will be. LLMs can aid doctors with symptom analysis and diagnosis suggestions, but emotional intelligence and moral discernment in treatment selection remain vital. Medicine is also a physical craft more than a digital one. A medical LLM bot could provide emotional support and answer questions 24/7, but most patients will still prefer to talk to a human or have one look at their diagnosis.

Lawyers: LLMs can assist lawyers in researching case histories, identifying precedents, and drafting legal documents. However, lawyers are still crucial for interpreting complicated laws, providing strategic advice, negotiating settlements, and representing clients in court. LLMs can automate repetitive tasks, allowing lawyers to focus on the intricacies of cases and clients' needs.

Directors and Producers: In recent months we’ve seen lots of people posting realistic-looking pictures, videos, or full rap songs and claiming that they are “AI-generated”. That is incorrect. These media are created by humans augmented by AI. LLMs can generate scripts, recommend scene compositions, synthesize voices, or even create entire video sequences, but the vision, creativity, and storytelling abilities of directors and producers are indispensable for crafting compelling imagery, films, and shows. LLMs can take over the technical aspects, enabling creative professionals to focus on the emotional impact and artistic elements of their projects.

Explanations of Automation

I. Thiel

Peter Thiel describes his theory of Substitution vs Complementarity[3] as:

[M]en and machines are good at fundamentally different things. People have intentionality—we form plans and make decisions in complicated situations. We’re less good at making sense of enormous amounts of data. Computers are exactly the opposite: they excel at efficient data processing, but they struggle to make basic judgments that would be simple for any human.

The stark differences between man and machine mean that gains from working with computers are much higher than gains from trade with other people. We don’t trade with computers any more than we trade with livestock or lamps. And that’s the point: computers are tools, not rivals.

[T]echnology is the one way for us to escape competition in a globalizing world. As computers become more and more powerful, they won’t be substitutes for humans: they’ll be complements.

In other words—LLMs augment human cognition; they don't replace it. They are too different to compete directly.

If aliens land on Earth tomorrow, they will likely want to collaborate with us (thereby increasing their wealth). This is because their knowledge is different from ours and because they will have discovered that collaboration fosters problem-solving more than conquest does.

Humans have a limited memory (though forgetting can be mitigated with learning software), but LLMs can function as an immensely large interpolative database that can compress a large fraction of the corpus of human knowledge.

LLMs lack disobedience, agency, universality, and creativity (and context/long-term memory)—all of which are fundamental parts of human intelligence.

II. Chollet

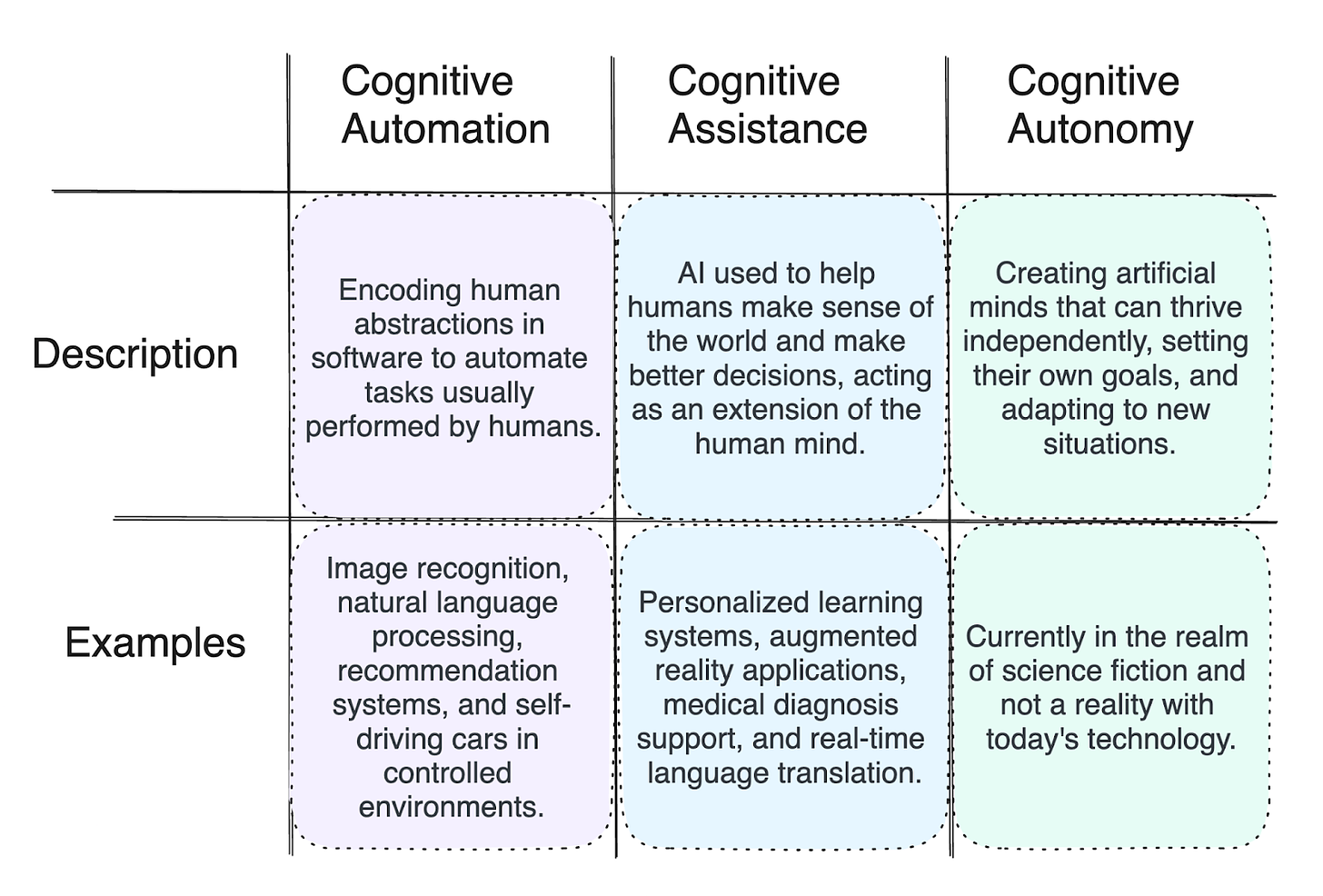

The AI researcher and deep learning textbook author François Chollet distinguishes three categories of AI: 1. cognitive automation, 2. cognitive assistance, and 3. cognitive autonomy. He writes[4]:

Cognitive automation can happen via explicitly hard-coding human-generated rules (so-called symbolic AI or GOFAI), or via collecting a dense sampling of labeled inputs and fitting a curve to it (such as a deep learning model). This curve then functions as a sort of interpolative database—while it doesn’t store the exact data points used to fit it, you can query it to retrieve interpolated points, much like you can query a model like StableDiffusion to retrieve arbitrary images generated by combining existing images.
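To make the "interpolative database" idea concrete, here is a minimal sketch. It is only an analogy and not Chollet's code or a real deep learning model: the functions `fit_curve` and `query`, the target function x², and the sampling range are all assumptions chosen for illustration. The "database" answers queries between its samples well, but has nothing to offer outside the range it was fitted on.

```python
from bisect import bisect_left

def fit_curve(f, lo, hi, n):
    """Densely sample f on [lo, hi]; the sorted samples act as the 'database'."""
    xs = [lo + (hi - lo) * i / (n - 1) for i in range(n)]
    return xs, [f(x) for x in xs]

def query(db, x):
    """Retrieve a value by linear interpolation between the two nearest samples.

    Outside the sampled range there is nothing to interpolate between,
    so the nearest stored sample is returned unchanged.
    """
    xs, ys = db
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_left(xs, x)
    x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
    t = (x - x0) / (x1 - x0)
    return y0 + t * (y1 - y0)

# Hypothetical target function and range, chosen only for illustration.
db = fit_curve(lambda x: x * x, 0.0, 1.0, 101)

inside = query(db, 0.555)   # never stored, but lies between stored points
outside = query(db, 3.0)    # far outside the sampled range

print(f"inside the range:  error = {abs(inside - 0.555 ** 2):.6f}")
print(f"outside the range: got {outside}, true value is {3.0 ** 2}")
```

The query inside the sampled range is answered almost exactly, while the query outside it simply returns the nearest stored value.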

The differences between automation and assistance in his framework seem imprecise. The third category, cognitive autonomy, also feels more like a continuum: a product can have high or low autonomy. The recently hyped AutoGPT and BabyGPT (applications that use recursive mechanisms to help GPT create prompts for GPT) display this lack of autonomy. These bots are notorious for getting stuck. They lack the ability to navigate the problem/search space with open-endedness. I’m not saying these are insurmountable technical obstacles. I’m just pointing out that we currently have no autonomous and general AI tools, and I don’t see any evidence that we will have them very soon. (If you have a strong argument for the opposite, feel free to leave a comment.)

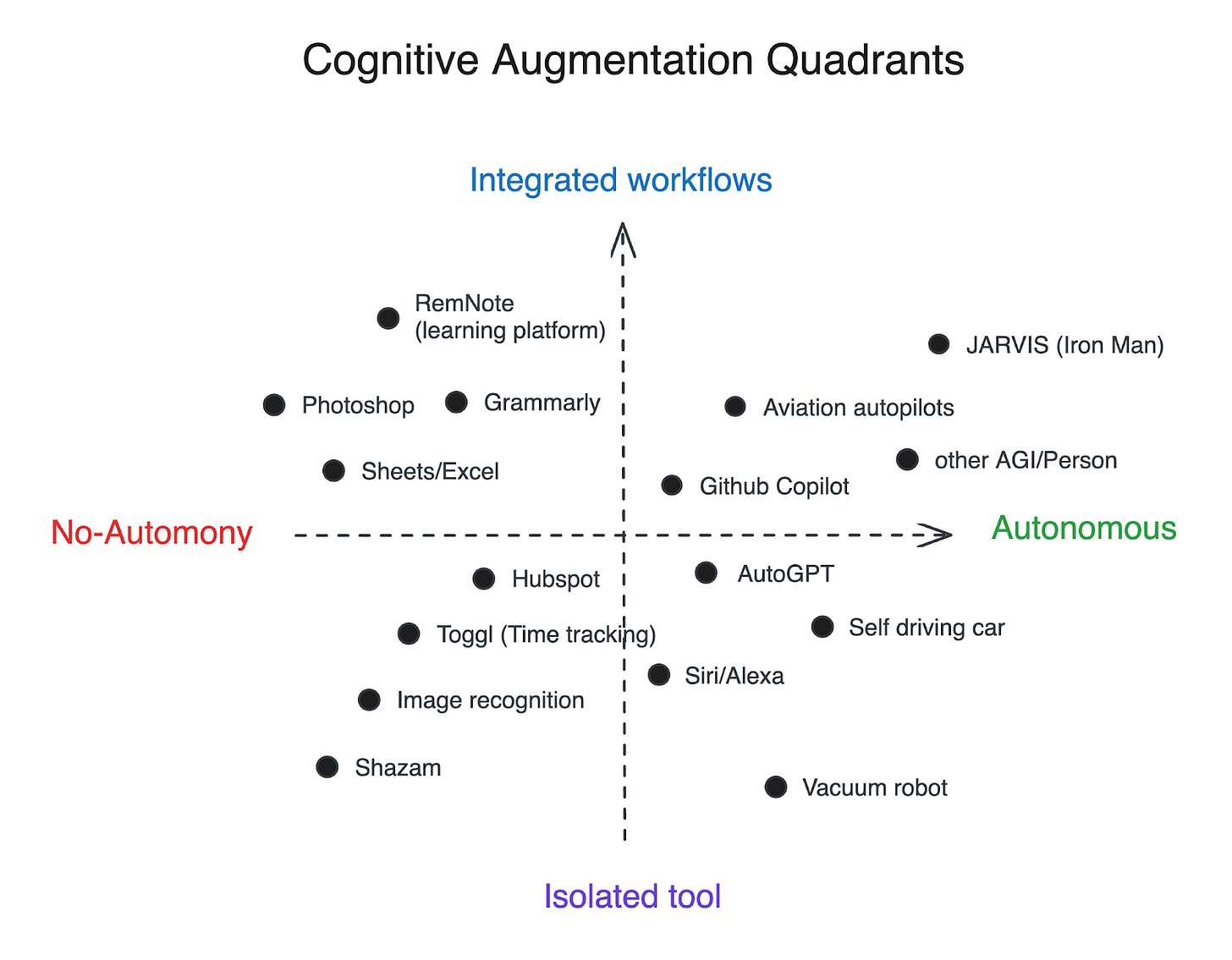

I proposed we think of these two categories as the same, with systems falling on a continuum of workflow integration and autonomy:

I picked the term integrated workflow as the opposite of “isolated tool” here because a workflow represents a collection of cognitive tasks. Products in the upper part of the graph allow their users to augment an entire collection of their cognitive processes, not just a small part.

III. Deutsch

The physicist and founder of the field of quantum computation, David Deutsch, wrote[5]:

Edison said that research is one per cent inspiration and ninety-nine per cent perspiration – but that is misleading, because people can apply creativity even to tasks that computers and other machines do uncreatively.

[It] is a misleading description of how progress happens: the ‘perspiration’ phase can be automated – just as the task of recognizing galaxies on astronomical photographs was. And the more advanced technology becomes, the shorter is the gap between inspiration and automation.

Writing a ChatGPT prompt for a high-converting landing page is creative work that requires inspiration. Writing the copy is the perspiration phase, which is increasingly automated, to the delight of copywriters who can now focus on the creative part of their work.

HCI Research

Human-computer interaction (HCI) research is helpful when thinking about the automation of cognitive tasks:

Distributed Cognition

Distributed Cognition defines cognition as a collection of cognitive processes that can be separated in space and time. This can be a single process within one human’s brain (multiple brain areas), or it can be several processes managed by multiple components distributed across tools, agents, or a communication network. For example, the cognition required to fly a large passenger plane is distributed over the personnel, sensors, and computers both in the plane and on the ground[6].

We can distinguish two classes of distributed cognition: shared cognition and off-loading. Shared cognition is shared among people (or agents) through a common activity such as conversation, where each participant's cognition constantly changes in response to the other's. An example of off-loading is using a calculator.

These categories correspond somewhat to the axes in my Cognitive Augmentation Quadrants framework. Shared cognition corresponds to integration and off-loading corresponds to autonomy.

I am deeply curious to what extent knowledge generation can be distributed across multiple brains.

Skills, Rules, Knowledge (SRK) Framework

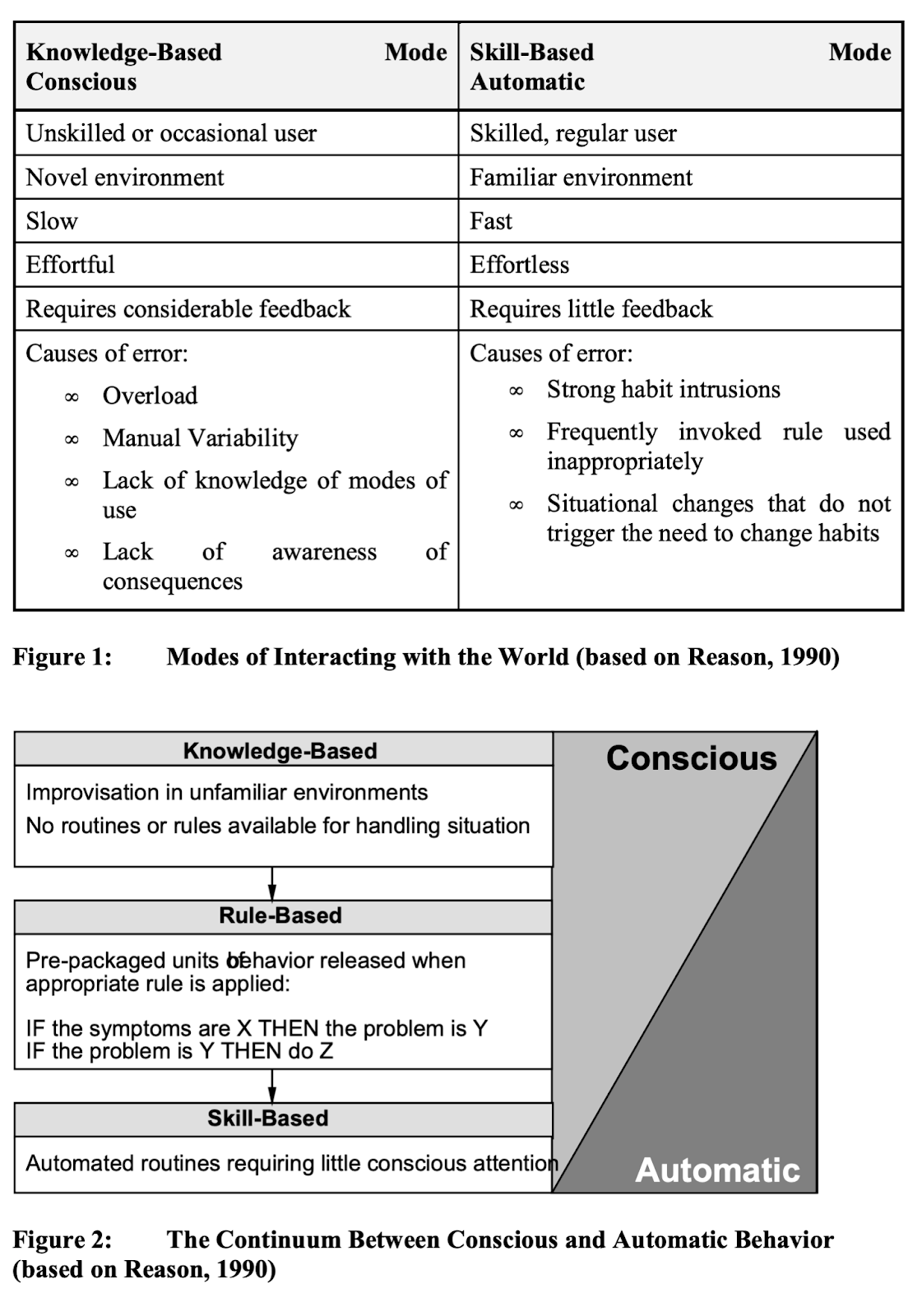

The SRK framework classifies human cognitive processes into three categories: skill-based, rule-based, and knowledge-based behavior (Rasmussen, 1983).

Claiming that a job will be automated away is extremely imprecise. A single job consists of many skills, including ones that are used extremely rarely or involve improvisation in an unfamiliar environment.

In machine learning, this is also called the "long tail problem": the model makes errors because certain rare events are not represented in the training dataset[7]. The reason we still have TSA officers is to hedge against the long tail problem of TSA weapon detection models. Another example is modern self-driving car technology. It relies on black box (end-to-end trained) models that do not have any explicit built-in knowledge of the laws of physics or ethics. A human driver can generalize to an extremely rare, never-before-seen situation, while a deep learning model operating on induction cannot.

The above graphic shows that skill-based cognitive processes are more automatable than the other two categories.
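A rough way to see why the long tail does not simply disappear with more data is to compute how many test events would be of a type that never appeared in training. The sketch below assumes a Zipf-like distribution over event types (type k has probability proportional to 1/k). That distribution, the number of event types, and the function name `unseen_mass` are illustrative assumptions, not figures from any of the cited sources.

```python
def unseen_mass(num_event_types, num_training_samples):
    """Expected share of test events whose type never appeared in training.

    Assumes a Zipf-like distribution: type k has probability proportional to 1/k.
    A type with probability p is missing from n independent training samples
    with probability (1 - p) ** n.
    """
    weights = [1.0 / k for k in range(1, num_event_types + 1)]
    total = sum(weights)
    mass = 0.0
    for w in weights:
        p = w / total
        mass += p * (1.0 - p) ** num_training_samples
    return mass

# Hypothetical setting: 100,000 distinct event types, growing training sets.
rates = {n: unseen_mass(100_000, n) for n in (1_000, 10_000, 100_000)}

for n, rate in rates.items():
    print(f"{n:>7} training samples -> {rate:.1%} of test events are of an unseen type")
```

Under these assumptions, each tenfold increase in training data shrinks the unseen share only modestly, which is the pattern the long tail problem describes.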

Scaling Laws

The scaling laws are empirical fits which suggest that LLMs will continue to improve with increasing model size, training data, and compute.

Some claim that at the end of these scaling laws lies AGI. This is wrong. It is like saying that if we make cars faster, we’ll get supersonic jets. The error is to assume that the deep learning transformer architecture will somehow magically evolve into AGI (gain disobedience, agency, and creativity). Professor Noam Chomsky also called this thinking emergentist[8]. Evolution and engineering are not the same.

In his book, The Myth of Artificial Intelligence, AI researcher Erik J. Larson shares similar views, criticizing multiple failed "big data neuroscience" projects and writing that “technology is downstream of theory”. AGIs (people) create new knowledge at runtime, while current LLM/transformer-based models can only improve if we give them more training data.

Gwern’s “Scaling hypothesis AGI” is based on the claim that GPT-3 has somewhere around twice the “absolute error of a human”. He calculates that we’ll reach “human-level performance” once we train a model with 2,200,000× the compute of GPT-3[9]. He assumes that training AGI becomes a simple $10 trillion investment (in 2038 if the trend of declining compute costs continues).

The mistake here is assuming that human-level performance on an isolated writing task is a meaningful measurement of human intelligence. Just because an AI model performs on par with humans in one specific task doesn't mean it has the same general intelligence exhibited by humans. A calculator is better than a human at calculating, but it doesn’t replace the work of a mathematician conjecturing new theorems.
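For reference, the arithmetic behind this kind of extrapolation can be sketched in a few lines. This is a simplified illustration, not Gwern's actual calculation. It assumes that loss follows a pure power law in training compute, L(C) = a · C^(-alpha), it uses an illustrative exponent of 0.048 (in the range of published language-model fits), and it treats the 2,200,000× figure as a multiplier on compute. Only the 2,200,000× and $10 trillion figures come from the text above.

```python
# Assumption (not from the post): loss is a pure power law in compute,
# L(C) = a * C ** (-ALPHA), with an illustrative exponent.
ALPHA = 0.048

def compute_multiplier(loss_ratio, alpha=ALPHA):
    """Factor by which compute must grow to divide the loss by `loss_ratio`."""
    return loss_ratio ** (1.0 / alpha)

# Figures quoted in the text.
QUOTED_MULTIPLIER = 2_200_000
QUOTED_TOTAL_COST_USD = 10e12

# "Twice the error of a human" means the loss has to be halved.
halving = compute_multiplier(2.0)

# Cost of a single GPT-3-scale run implied by the two quoted figures.
implied_cost_per_run = QUOTED_TOTAL_COST_USD / QUOTED_MULTIPLIER

print(f"compute multiplier to halve the loss: {halving:,.0f}x")
print(f"implied cost of one GPT-3-scale run: ${implied_cost_per_run:,.0f}")
```

With such a small exponent, halving the error requires a compute increase on the order of millions, which is why the extrapolated price tag is so large. The result is very sensitive to the exponent, and nothing in this arithmetic says whether the measured loss is a meaningful proxy for intelligence.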

Economics and Regulation

As I outlined, there is no valid technical or economic reason to believe that we’ll have unforeseen unemployment. Quite the opposite: we’ll see massive task automation, not job automation. ChatGPT has been around for close to half a year now, and unemployment is still near historic lows[10].

The six years of LLM innovation since the invention of the transformer in 2017 have certainly been impressive. Many are baffled by the pace of improvement and sophistication of the technology. But there is no reason to believe we’ll suddenly invent a significantly more generally intelligent architecture that will bring about something surprising. Matt Ridley wrote in The Evolution of Everything:

[W]here people are baffled, they are often tempted to resort to mystical explanations.

The new LLM tooling will give powerful writing tools to millions of humans competing in an increasingly globalized internet economy. LLMs are powerful but are no substitute for human creativity and autonomy.

The idea that jobs are a limited resource is wrong, as it assumes that the economy is zero-sum. Human desire is infinite. We’ll always come up with new desires that require new jobs.

Even if there is a large job displacement, that is desirable because it makes people move to more productive jobs. The lack of work for each individual is only temporary until they find a new job. Arguing that these people are too unintelligent to find a new job also doesn't make sense because we can ask: How did they get the job that they lost in the first place?

Humans can create new knowledge at runtime, something current LLMs can’t. Some might argue that knowledge retrieval proves the opposite. But neither knowledge retrieval nor fine-tuning a model on new data, for example with Low-Rank Adaptation of Large Language Models (LoRA)[11], is knowledge creation. Both are techniques for giving the model access to more data, either at inference time (retrieval) or through additional training (fine-tuning).
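To illustrate what kind of technique LoRA is, here is a minimal sketch of its core idea as described in the paper[11]: the pretrained weight matrix W0 stays frozen, and only a low-rank product B·A is trained and added to it. The matrix sizes, the helper names, and the omission of the paper's scaling factor are simplifications made for this sketch. It is not the reference implementation.

```python
import random

def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    """Element-wise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

# Hypothetical sizes: a d x k weight matrix adapted with rank r.
d, k, r = 8, 6, 2
rng = random.Random(0)

W0 = [[rng.gauss(0, 1) for _ in range(k)] for _ in range(d)]     # frozen pretrained weights
A = [[rng.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]   # trainable, random init
B = [[0.0] * r for _ in range(d)]                                # trainable, zero init

def effective_weight(W0, B, A):
    """Weights used at inference: the frozen matrix plus the low-rank update."""
    return add(W0, matmul(B, A))

# Before any training, B is zero, so the adapted model equals the original.
unchanged = effective_weight(W0, B, A) == W0

# Training only ever touches A and B, which are far smaller than W0.
full_params = d * k
lora_params = r * (d + k)

print(f"adapted model initially identical to base model: {unchanged}")
print(f"trainable parameters: {lora_params} instead of {full_params}")
```

Everything the adapter ends up encoding comes from the data it is fine-tuned on, and the sketch shows only that the update is a small, additive re-fit of existing weights.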

The most important rule for human progress is to “never remove the means of error correction”, as Karl Popper wrote. If a human is doing a task that can be more productively done by a machine, that is an error in resource allocation. Did the Gutenberg printing press displace a few hundred scribes, illuminators, and bookbinders, or did it lead to billions of humans gaining access to knowledge, enabling the Enlightenment and eliminating poverty and suffering?

It is hard for most people to fathom what harm regulations create. This holds especially for general-purpose technologies like nuclear power or LLMs. For example, without the destructive nuclear energy regulations, we could enjoy energy too cheap to meter, fueling billions of life-enhancing devices and vehicles and facilitating remarkable advancements in transportation, thus promoting global commerce, wealth creation, and the reduction of poverty and suffering.

Regulating AI (for whatever reason imaginable) is potentially worse than regulating nuclear power because AI is a cognitive enhancement. Regulating AI is regulating the ability to augment our intelligence and create knowledge.

Thanks to Zeel Patel, Johannes Hagemann, Brian Chau, Markus Strasser, Luke Piette, Farrel Mahaztra, Logan Chipkin, Danny Geisz, James Hill-Khurana and Jasmine Wang for feedback on drafts of this.

Footnotes

[1]: https://openai.com/research/gpts-are-gpts

[2]: https://sites.research.google/med-palm/

[3]: Thiel, Zero to One, ch. 12 “Man and Machine”

[4]: Chollet, “AI is cognitive automation, not cognitive autonomy”

[5]: Deutsch, The Beginning of Infinity, ch. 2 “Closer to Reality”

[6]: Hutchins, E. (1995), How a Cockpit Remembers Its Speeds.

[7]: See Melanie Mitchell, Artificial Intelligence: A Guide for Thinking Humans (p. 100)

[8]: In an episode of the Machine Learning Street Talk podcast.

[9]: https://gwern.net/scaling-hypothesis

[10]: See, for example, some recent stats here.

[11]: https://arxiv.org/abs/2106.09685
