Extortion and trade negotiations

post by Stuart_Armstrong · 2016-12-17T21:39:30.166Z · 28 comments


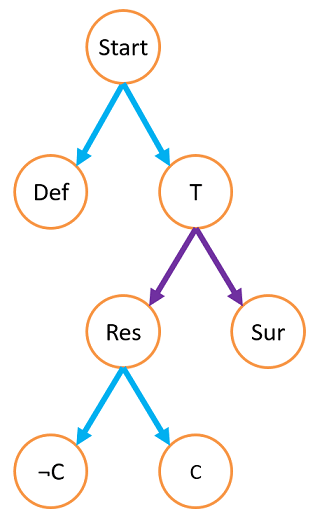

To illustrate an old point (that it's hard to distinguish between extortion and trade negotiations), here's a schematic diagram of extortion, with alternating actions by player B (blackmailer/extorter, in blue) and player V (victim, in violet):

The extorter can let the default Def happen, or can instead make a threat, ending up at point T. Then the victim can resist (Res) or surrender (Sur). If the victim resists, the extorter has the option of carrying out their threat (C) or not doing so (¬C).

You need a few conditions to make this into an extortion situation:

- Sur has to be the best outcome for B (or else the extortion has no point).

- To make sure that T is a true threat, Sur has to be worse than Def for V, and C has to be worse than both ¬C and Def for B.

- And to make this into extortion, C has to be worse than Def for V.
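The three conditions can be written down as a small predicate over the four terminal outcomes. This is a minimal sketch, assuming each outcome can be summarised by a pair of numerical utilities (B's, V's) and that "worse" means strictly lower utility; the class `ExtortionTree`, the function `is_extortion` and all the numbers are hypothetical illustrations, not part of the original model.

```python
from dataclasses import dataclass, replace

# (B's utility, V's utility); the numbers used below are hypothetical.
Utility = tuple[float, float]
B, V = 0, 1


@dataclass(frozen=True)
class ExtortionTree:
    """Terminal outcomes: Def, or T followed by Sur / Res-then-C / Res-then-notC."""
    default: Utility        # Def: B makes no threat
    surrender: Utility      # Sur: B threatens, V gives in
    carry_out: Utility      # C: V resists and B carries out the threat
    not_carry_out: Utility  # notC: V resists and B backs down


def is_extortion(t: ExtortionTree) -> bool:
    # 1. Sur is B's best outcome (strictly better than every alternative).
    sur_is_best_for_b = all(
        t.surrender[B] > o[B] for o in (t.default, t.carry_out, t.not_carry_out)
    )
    # 2. T is a true threat: Sur < Def for V, and C < notC and C < Def for B.
    true_threat = (
        t.surrender[V] < t.default[V]
        and t.carry_out[B] < t.not_carry_out[B]
        and t.carry_out[B] < t.default[B]
    )
    # 3. Extortion proper: carrying out the threat leaves V below Def.
    hurts_victim = t.carry_out[V] < t.default[V]
    return sur_is_best_for_b and true_threat and hurts_victim


blackmail = ExtortionTree(default=(0, 0), surrender=(5, -5),
                          carry_out=(-1, -10), not_carry_out=(0, 0))
# Same threat, but carrying it out only returns V to Def (like walking away).
walk_away = replace(blackmail, carry_out=(-1, 0))

print(is_extortion(blackmail))  # True
print(is_extortion(walk_away))  # False
# Relabelling the default as "the threat happens anyway" also removes the extortion.
print(is_extortion(replace(blackmail, default=blackmail.carry_out)))  # False
```

In the second case only the third condition fails: a threat whose execution merely returns V to the default is a threat, but not extortion. The third case previews the problem with defaults: the very same moves stop counting as extortion once the default is moved.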

A mere threat doesn't make this into extortion. Indeed, trade negotiations are a series of repeated threats from both sides, making offers with the implicit threat that they will walk away from the deal entirely if that offer is not accepted.

But if C is worse than Def for V, then this seems a true extortion: V will end up worse off if they resist an extorter who carries out their threats, and they would have much preferred that the extorter not be able to make credible threats in the first place. And the only reason the extorter made the threats was to force the victim to surrender.
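Why credibility matters can be seen by solving the tree by backward induction, once with a B who is free to back down at the last step and once with a B who is committed to carrying out the threat. This is a minimal sketch with hypothetical payoffs chosen to satisfy the three conditions, plus an extra assumption the post does not state: that V prefers ¬C to Sur. Ties are broken towards resisting (for V) and towards the default (for B); `solve` and `PAYOFFS` are illustrative names.

```python
# Terminal payoffs as (B's utility, V's utility); hypothetical numbers
# satisfying the three conditions, with notC better than Sur for V.
B, V = 0, 1
PAYOFFS = {
    "Def": (0, 0),
    "Sur": (5, -5),
    "C": (-1, -10),
    "notC": (0, 0),
}


def solve(payoffs: dict[str, tuple[float, float]], committed: bool) -> str:
    """Backward induction over Def / T -> (Sur | Res -> (C | notC))."""
    # Last move: a committed B must carry out the threat; otherwise it picks
    # whichever of C / notC is better for itself.
    if committed:
        after_res = "C"
    else:
        after_res = "C" if payoffs["C"][B] > payoffs["notC"][B] else "notC"
    # V surrenders only if Sur beats what resisting would lead to.
    after_threat = "Sur" if payoffs["Sur"][V] > payoffs[after_res][V] else after_res
    # B threatens only if the threat path beats the default for B.
    return after_threat if payoffs[after_threat][B] > payoffs["Def"][B] else "Def"


print(solve(PAYOFFS, committed=False))  # Def
print(solve(PAYOFFS, committed=True))   # Sur
```

Without commitment, B would never choose C, so V resists, the threat gains B nothing, and B leaves things at Def. With commitment, V surrenders and ends on -5 rather than 0, which is the sense in which V would have preferred that B could not make credible threats.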

Fairness and equity

Note that this is not about fairness or niceness. It's perfectly possible to extort someone into giving you fair treatment (depending on how you see the default point, many of the boycotts during the civil rights movement would count as extortion).

The all-important default

This model seems clear; so why is it so hard to identify extortion in real life? One key issue is disagreement over the default point. Consider the following situations:

- During the Cuban missile crisis, it was clear to the Americans that the default was no nuclear missiles in Cuba, and that the Soviets were recklessly violating this default. To the USSR, the default was obviously that countries could station nuclear missiles on their allies' territory (as the Americans were doing in Turkey). Then, once the blockade was in place and the Soviet ships were on their way, the default seemed to be nuclear war (meaning that practically nothing could count as extortion in this situation).

- Take a management-union dispute, where management wants to cut pay. The union can argue that this violates a long-standing company policy of decent pay. Management can retort that the default is actually to be a profitable company, and that their industry is currently in decline, so declining pay should be the default. After a bit of negotiating, the two sides seem to reach the framework of a decent understanding: is this now the default for further negotiations?

- "You must give me something to satisfy my population!" "Now, I'm fine with this, but when our army arrives, I'm not sure I can control them." "Well, I'll try and talk to my president, but he's crazy! Throw me some sort of bone here, will you?" All these types of arguments are attempts to shift the default in the speaker's favour. Sure, the negotiator isn't threatening you; they just need your help to contain the default behaviour of their people/army/president.

- Two people are negotiating to trade water and food. If no trade is reached, they will both die. Can "both dying" be considered a reasonable default? Or is "both trade at least enough to ensure mutual survival" a default, seeing as "both dying" is not an outcome either would ever want? Can a situation that will never realistically happen be considered a default?

- Take the purest example of blackmail (extortion via information): a photographer snaps shots of an adulterous couple, and sells the photos back to them for a lot of money. Blackmail! But what if they just happened to be in a shot that the photographer was taking of other people? Then the photographer is suppressing the photos to help the couple, and charging a reasonable fee for that (what if the fee is unreasonable? does it make a difference?). But what if the photographer deliberately hangs around areas where trysts often happen, to get some extra cash? Or what if they don't do that deliberately, but photographers who don't hang around these areas can't turn a profit and change jobs, so that only these ones are left?

These examples should be sufficient to illustrate the scale of the problem, and also show how defaults are often forged by implicit and explicit norms, so extortions are most clear-cut when they also involve norm violation, trust violation, or other dubious behaviours. In a sense, picking a default is the important thing; picking exactly the right default is less important, since once the default is known, people can adjust their expectations and behaviour accordingly.

28 comments


comment by paulfchristiano · 2016-12-17T22:49:33.149Z

Although it is standard practice around here, "blackmail" is a weird name for this phenomenon. Why not "extortion"?

In the broader world, "blackmail" is a particular kind of extortion in which the threat is to reveal information (occasionally people use it more broadly, particularly in "emotional blackmail," which seems to just come from the same kind of sloppiness that led this community to call it "blackmail"). Calling bargaining "blackmail" sounds weird because bargaining has nothing to do with revealing information, but it is quite common to recognize a fine line between bargaining and extortion.

On topic:

Presumably the distinction between extortion and trade is in counterfactuals: in the extortion case the target is better off if they are known to be unwilling to respond to extortion, while in the trade case the target is better off if they are known to be willing to respond to trade. That seems like the most promising way to get at a formal distinction. Certainly this is tricky, and we don't yet have a good enough understanding of decision theory to make clean statements. But I'm not sure that thinking about it in terms of a "default" is useful; I think we can take a step forward by replacing "default" with "what would happen if the trader/extorter didn't think that trade/extortion was possible." This makes it clear that norms are mostly relevant insofar as they bear on this counterfactual.
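The counterfactual test in this comment can be illustrated with a deliberately crude model: V's response policy is common knowledge, B only moves away from the default if doing so pays, and a refusal leads straight to the "refuse" outcome (so a threatening B is assumed to be committed). This is a hypothetical sketch, not a decision-theoretic treatment (the comment itself notes that we lack one); `victim_payoff`, `classify` and all payoffs are illustrative.

```python
# Hypothetical sketch: V's policy is common knowledge, B best-responds to it,
# and refusing leads directly to the "refuse" outcome (B is committed).
B, V = 0, 1
Outcome = tuple[float, float]  # (B's utility, V's utility)


def victim_payoff(default: Outcome, accept: Outcome, refuse: Outcome,
                  known_to_accept: bool) -> float:
    # What B's proposal/threat would lead to, given V's known policy.
    outcome = accept if known_to_accept else refuse
    # B only departs from the default if that is strictly better for B.
    realised = outcome if outcome[B] > default[B] else default
    return realised[V]


def classify(default: Outcome, accept: Outcome, refuse: Outcome) -> str:
    as_refuser = victim_payoff(default, accept, refuse, known_to_accept=False)
    as_accepter = victim_payoff(default, accept, refuse, known_to_accept=True)
    if as_refuser > as_accepter:
        return "extortion-like"  # V gains from being known not to play ball
    if as_accepter > as_refuser:
        return "trade-like"      # V gains from being known to play ball
    return "undetermined"


# Threat: comply and lose 5, or resist and have the threat carried out.
print(classify(default=(0, 0), accept=(5, -5), refuse=(-1, -10)))  # extortion-like
# Offer: accept a deal that benefits both, or decline and B has wasted effort.
print(classify(default=(0, 0), accept=(2, 1), refuse=(-0.1, 0)))   # trade-like
```

In the threat case V does better by being known to refuse, since B then never bothers threatening; in the offer case V does better by being known to accept. Note that the default still appears as an input, which is the point of contention in the replies below.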

It makes sense that an extorter would like to convince the target of extortion that things would have been much worse for the target if they weren't expected to play ball, in exactly the same way that the extorter would like to convince the target that things will be much worse for the target if they don't actually play ball, or that a trader who wants to sell X would like to convince people that X is more valuable.

This kind of deception seems pretty straightforward compared to the more sticky issue of trying to make logically prior commitments, and the general fact that we don't understand very well how decision theory should work.

↑ comment by Stuart_Armstrong · 2016-12-18T11:07:26.457Z

I'd like people not to threaten to hit me to get my stuff. I'd like people to trade with me. I'd like people who trade with me not to precommit to taking 90% of the gains from trade. I'd also like people who trade with me not to precommit to taking 10% of the gains from trade.

Hell, if someone is going to hit me anyway, I'd like the option of paying them a little to make them hit less hard.

It seems to me that counterfactual is just another word for default - ie the alternative that would have happened if they'd not decided to extort/trade.

↑ comment by paulfchristiano · 2016-12-19T17:41:28.373Z

It seems to me that counterfactual is just another word for default - ie the alternative that would have happened if they'd not decided to extort/trade.

Sure, we can use default as another name for a particular counterfactual. Note that many people around here are already asking "how should we compute logical counterfactuals?" Thinking about defaults suggests an emphasis on different considerations, like norms, which seem like the wrong place to start.

(Note also that "what would have happened if they'd not decided to extort/trade" isn't the right counterfactual, so if that's what "default" means then I don't think that defaults are the important question. We care about counterfactuals over our behavior, or over other people's beliefs about our behavior.)

↑ comment by The_Jaded_One · 2016-12-18T10:08:18.678Z

"what would happen if the trader/extorter didn't think that trade/extortion was possible."

This is a good way of defining 'default', though honestly evaluating that counterfactual could prove problematic.

↑ comment by Stuart_Armstrong · 2016-12-18T11:11:24.976Z

Do you expect that decision theory will reach a single conclusion here, or that it'll be more of a scissors-paper-stone situation (hence the ideal outcome is to minimise the loss to extortion and maximise the gain from trade, while accepting they're in tension)?

comment by bogus · 2016-12-18T13:37:18.673Z

Take the purest example of blackmail: a photographer snaps shots of an adulterous couple, and sells the photos back to them for much money. Blackmail! But what if they just happened to be in a shot that the photographer was taking of other people? Then the photographer is suppressing the photos to help the couple, and charging a reasonable fee for that (what if the fee is unreasonable? does it make a difference?). But what if the photographer deliberately hangs around areas where trysts often happen, to get some extra cash? Or what if they don't do that deliberately, but photographers who don't hang around these areas can't turn a profit and change jobs, so that only these ones are left?

Right. I think the best definition of extortion, if there's one, has to do with expending costly resources in order to gain a better bargaining position in a zero-sum way. There's also a general notion that agreements should be "free and non-coercive" if we are to avoid extortion, which then calls for some notion of property rights (quite possibly a better name than "defaults"). But what default property assignments should we choose?

Generally, the most efficient assignment will attempt to simply replicate the post-trade outcome - here, we're either going to say that a photographer can be found liable for snapping shots of adulterous couples, or that couples should expect to take precautions in order to avoid being photographed, depending on what's most sensible. This of course involves a "least cost principle", so in some way we're back to the earlier notion that avoiding zero-sum resource waste is what's key to this distinction. But of course there are other considerations, including equity and preserving good incentives under limited information - all of these issues are quite familiar to social scientists.

↑ comment by Stuart_Armstrong · 2016-12-19T11:32:45.534Z

I like this, and I think I know what you mean, but does "simply replicate the post-trade outcome" have a precise meaning here?

↑ comment by bogus · 2016-12-19T11:43:21.030Z

The idea is that an assignment of property rights should be such that agents do not need to engage in trade negotiations thereafter simply to fix a bad assignment. This is because negotiation is itself costly, and sometimes even impossible (as in the case of asymmetric information). Thus, property assignments should try to anticipate the outcome of these "fixup" negotiations. For instance, if the Soviet Union has the right to station nukes in Cuba, then we need to negotiate in order to fix this, when it's quite clear ex-ante that the negotiations will result in no nukes being allowed in Cuba.

↑ comment by Lumifer · 2016-12-20T16:36:44.163Z

Thus, property assignments should try to anticipate the outcome of these "fixup" negotiations.

Generally speaking, yes, but the whole point of relying on the market as opposed to central planning is that quite often you do not know (or are mistaken about) the outcome of the negotiations.

For instance, it's not obvious to me at all that ex ante it was clear that the Soviet nukes would not be allowed in Cuba.

↑ comment by Stuart_Armstrong · 2016-12-20T13:04:37.898Z

when it's quite clear ex-ante that the negotiations will result in no nukes being allowed in Cuba.

That is not clear, especially given the precedent of Turkey.

But thanks for clarifying that definition!

comment by Academian · 2016-12-20T12:31:18.997Z

I've been trying to get MIRI to stop calling this blackmail (extortion via information) and start calling it extortion (because it's the definition of extortion). Can we use this opportunity to just make the switch?

↑ comment by Stuart_Armstrong · 2016-12-20T13:01:21.196Z

Did so, changed titles and terminology.

comment by Dagon · 2016-12-19T03:05:06.230Z

Why is it a problem? Taboo "blackmail" and figure out what distinction you're really trying to identify. I suspect it's just violation of norms, likely with the added spice of confusion that there is some moral difference between action and inaction.

↑ comment by fubarobfusco · 2016-12-19T05:34:47.249Z

I think it goes beyond violation of norms. It has to do with the sum over the entire interaction between the two parties, as opposed to a single tree-branch of that interaction. In one case, you are in a bad situation, and then someone comes along, and offers to relieve it for a price. In the other case, you are in an okay situation and then someone comes along and puts you into a bad situation, then offers to relieve it.

This can also be expressed in terms of your regret of the other party's presence in your life. Would you regret having ever met the trader who sells you something you greatly need? No. You're better off for that person having been around. Would you regret having ever met the extortionist who puts you into a bad situation and then sells you relief? Yes. You'd be better off if they had never been there.

It matters that the same person designed the situation, causing the disutility in order to be able to offer to relieve it. Why? Because an extortionist has to optimize for creating disutility. They have to create problems that otherwise wouldn't be there. They have to make the world worse; otherwise they wouldn't be able to offer their own restraint as a "service".

Tolerance of extortion allows the survival of agents who go around dumping negative utility on people.

Contrast the insurer with the protection racket. The insurer doesn't create the threat of fire. They may warn you about it, vividly describe to you how much better off you'd be with insurance if your house burns down. But the protection racket has to actually set some people's stuff on fire, or at least develop the credible ability to do so, in order to be effective at extracting protection money.

↑ comment by Stuart_Armstrong · 2016-12-19T11:39:21.201Z

Confused as well by insurers who are protection rackets. The Mafia did provide some pluses as well as costs, and governments with emergency services will lock you up if you fail to pay the taxes that support them.

↑ comment by Dagon · 2016-12-19T15:10:12.430Z

Tolerance of extortion allows the survival of agents who go around dumping negative utility on people.

This is backwards. Tolerance of dumping negative utility allows extortion. The problem is the ability/willingness to cause harm, not the monetization.

↑ comment by Stuart_Armstrong · 2016-12-19T11:31:15.393Z

Taboo "blackmail" and figure out what distinction you're really trying to identify.

I'm trying to identify the distinction between trade and blackmail ^_^

↑ comment by Dagon · 2016-12-19T15:04:08.719Z

Unpack further, please. Are you trying to understand legal or colloquial implications of the words in the English language, or are you more concerned with clusterings of activities for some additional shared attributes?

↑ comment by kokotajlod · 2016-12-19T16:31:22.779Z

As I understand it, the idea is that we want to design an AI that is difficult or impossible to blackmail, but which makes a good trading partner.

In other words there are a cluster of behaviors that we do NOT want our AI to have, which seem blackmailish to us, and a cluster of behaviors that we DO want it to have, which seem tradeish to us. So we are now trying to draw a line in conceptual space between them so that we can figure out how to program an AI appropriately.

↑ comment by Dagon · 2016-12-20T00:15:03.674Z

As I understand it, the idea is that we want to design an AI that is difficult or impossible to blackmail, but which makes a good trading partner.

You and Stuart seem to have different goals. You want to understand and prevent some behaviors (in which case, start by tabooing culturally-dense words like "blackmail"). He wants to understand linguistic or legal definitions (so tabooing the word is counterproductive).

↑ comment by Stuart_Armstrong · 2016-12-20T13:03:15.622Z

? No, I have the same goals as kokotajlod.

↑ comment by kokotajlod · 2016-12-20T14:43:57.090Z

"You want to understand and prevent some behaviors (in which case, start by tabooing culturally-dense words like "blackmail")"

In a sense, that's exactly what Stuart was doing all along. The whole point of this post was to come up with a rigorous definition of blackmail, i.e. to find a way to say what we wanted to say without using the word.

↑ comment by Dagon · 2016-12-20T18:06:21.276Z

Now I'm really confused. Are you trying to define words, or trying to understand (and manipulate) behaviors? I'm hearing you say something like "I don't know what blackmail is, but I want to make sure an AI doesn't do it". This must be a misunderstanding on my part.

I guess you might be trying to understand WHY some people don't like blackmail, so you can decide whether you want to guard against it, but even that seems pretty backward.

↑ comment by kokotajlod · 2016-12-21T15:23:51.225Z

You make it sound like those two things are mutually exclusive. They aren't. We are trying to define words so that we can understand and manipulate behavior.

"I don't know what blackmail is, but I want to make sure an AI doesn't do it." Yes, exactly, as long as you interpret it in the way I explained it above.* What's wrong with that? Isn't that exactly what the AI safety project is, in general? "I don't know what bad behaviors are, but I want to make sure the AI doesn't do them."

*"In other words there are a cluster of behaviors that we do NOT want our AI to have, which seem blackmailish to us, and a cluster of behaviors that we DO want it to have, which seem tradeish to us. So we are now trying to draw a line in conceptual space between them so that we can figure out how to program an AI appropriately."

↑ comment by Lumifer · 2016-12-21T15:59:07.132Z

What's wrong with that?

It's a badly formulated question, likely to lead to confusion.

there are a cluster of behaviors that we do NOT want our AI to have, which seem blackmailish to us

So, can you specify what this cluster is? Can you list the criteria by which a behaviour would be included in or excluded from this cluster? If you do this, you have defined blackmail.

↑ comment by kokotajlod · 2016-12-23T18:45:00.179Z

"It's a badly formulated question, likely to lead to confusion." Why? That's precisely what I'm denying.

"So, can you specify what this cluster is? Can you list the criteria by which a behaviour would be included in or excluded from this cluster? If you do this, you have defined blackmail."

That's precisely what I (Stuart really) am trying to do! I said so, you even quoted me saying so, and as I interpret him, Stuart said so too in the OP. I don't care about the word blackmail except as a means to an end; I'm trying to come up with criteria by which to separate the bad behaviors from the good.

I'm honestly baffled at this whole conversation. What Stuart is doing seems the opposite of confused to me.

↑ comment by Lumifer · 2016-12-20T16:14:40.440Z

come up with a rigorous definition of blackmail

To avoid reinventing the wheel, I suggest looking at legal definitions of blackmail, as well as reading a couple of law research articles on the topic. Lawyers and judges have had to deal with this issue for a very long time, and they need criteria that produce a definite answer.