Unit economics of LLM APIs

post by dschwarz, nikos (followtheargument), Lawrence Phillips, kotrfa · 2024-08-27T16:51:22.692Z

Disclaimer 1: Our calculations are rough in places; information is sparse, guesstimates abound.

Disclaimer 2: This post draws from public info on FutureSearch as well as a paywalled report. If you want the paywalled numbers, email dan@futuresearch.ai with your LW account name and we’ll send you the report for free.

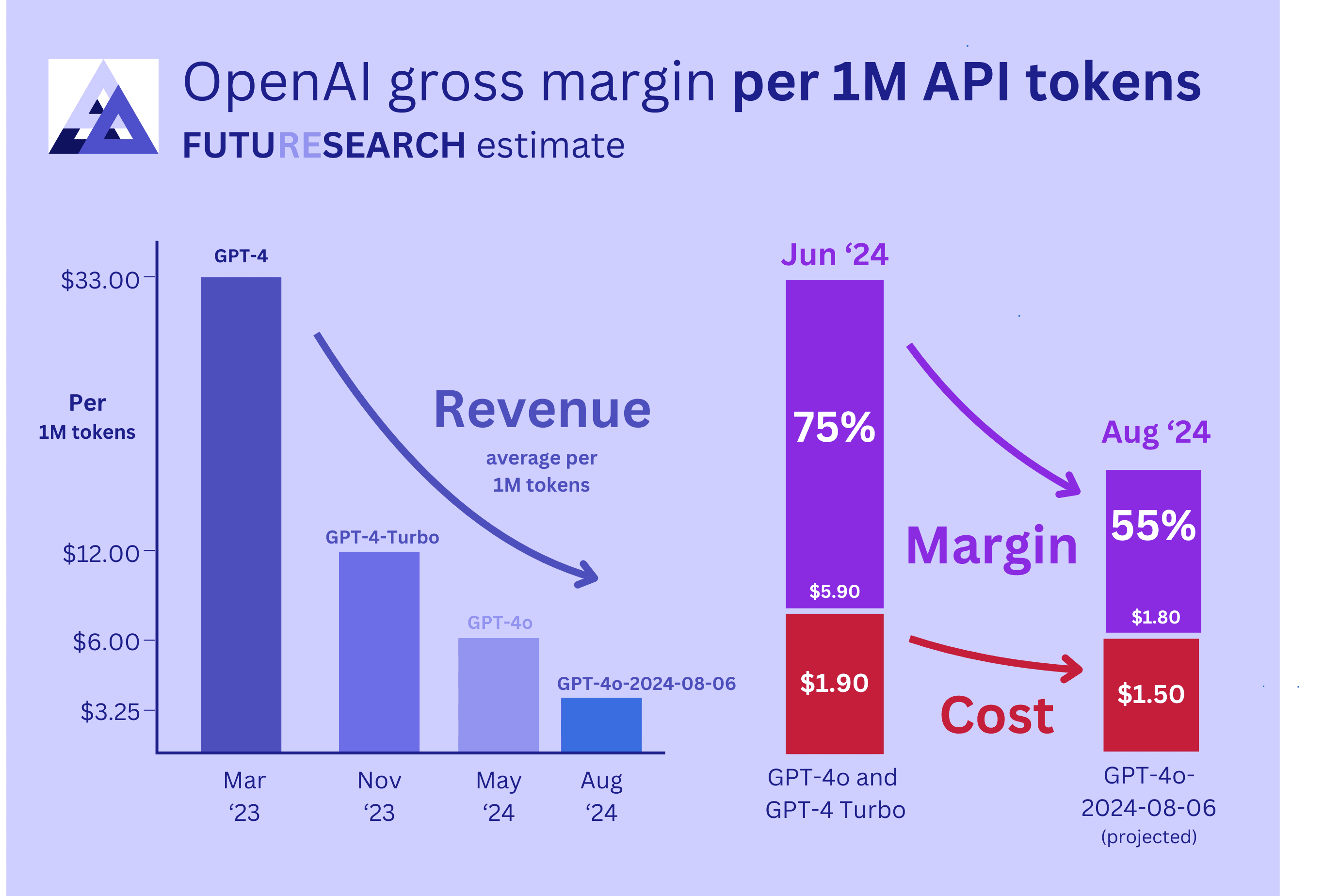

Here’s our view of the unit economics of OpenAI’s API. Note: this considers GPT-4-class models only, not audio or image APIs, and only direct API traffic, not usage in ChatGPT products.

- As of June 2024, OpenAI's API was very likely profitable, with surprisingly high margins. Our median estimate for gross margin (not including model training costs or employee salaries) was 75%.

- Once all traffic switches over to the new August GPT-4o model and pricing, OpenAI will plausibly still have a healthy profit margin. Our median estimate for the profit margin is 55%.

- The Information implied that OpenAI rents ~60k A100-equivalents from Microsoft for non-ChatGPT inference. If this is true, OpenAI is massively overprovisioned for the API, even after allowing for the many extra GPUs needed to absorb traffic spikes and future growth (arguably creating something of a mystery).

- We provide an explicit, simplified first-principles calculation of inference costs for the original GPT-4, and find significantly lower throughput & higher costs than Benjamin Todd’s result (which drew from Semianalysis).

Summary chart:

What does this imply? With any numbers, we see two major scenarios:

Scenario one: competition intensifies. With llama, Gemini, and Claude all comparable and cheap, OpenAI will be forced to cut their prices in half again. (With the margins FutureSearch calculates, they can do this without running at a loss.) LLM APIs become like cloud computing: huge revenue, but not very profitable.
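To make the parenthetical concrete, here is a rough sketch of the arithmetic behind "without running at a loss". It assumes the per-token serving cost stays fixed while the price is cut, which is a simplification of ours and not something the report states; the function names are hypothetical and exist only for illustration.

```python
def margin_after_price_cut(margin: float, price_factor: float) -> float:
    """Margin after multiplying the price by price_factor.

    Assumption (ours): unit serving cost is unchanged by the price cut.
    """
    if price_factor <= 0:
        raise ValueError("price_factor must be positive")
    cost_share = 1.0 - margin  # unit cost as a fraction of the current price
    return 1.0 - cost_share / price_factor


def break_even_price_factor(margin: float) -> float:
    """Fraction of the current price at which the margin reaches zero."""
    return 1.0 - margin


if __name__ == "__main__":
    # Median estimates quoted above: 75% (June 2024) and 55% (August pricing).
    for m in (0.75, 0.55):
        halved = margin_after_price_cut(m, 0.5)
        print(f"margin {m:.0%}: after halving the price -> {halved:.0%}, "
              f"break-even at {break_even_price_factor(m):.0%} of current price")
```

Under this fixed-cost assumption, a 55% margin shrinks to roughly 10% after a halving, so the price cut is survivable but leaves little room, consistent with the "huge revenue, but not very profitable" picture.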

Scenario two: one LLM pulls away in quality. GPT-5 and Claude-3.5-opus might come out soon with huge quality improvements. If only one LLM is good enough for important workflows (like agents), it may be able to sustain a high price and huge margins. Profits will flow to this one winner.

Our numbers update us, in either scenario, towards:

- An increased likelihood of more significant price drops for GPT-4-class models.

- A (weak) update that frontier labs are facing less pressure today to race to more capable models.

If you thought that GPT-4o (and Claude, Gemini, and hosted versions of llama-405b) were already running at cost in the API, or even at a loss, you would predict that the providers are strongly motivated to release new models to find profit. If our numbers are approximately correct, these businesses may instead feel there is plenty of margin left, and profit to be had, even if GPT-5 and Claude-3.5-opus etc. do not come out for many months.

More info at https://futuresearch.ai/openai-api-profit.

Feedback welcome and appreciated – we’ll update our estimates accordingly.
