[link, 2019] AI paradigm: interactive learning from unlabeled instructions

post by the gears to ascension (lahwran) · 2022-12-20T06:45:30.035Z · LW · GW

This is a link post for https://jgrizou.github.io/website/projects/thesis

A friend sent me this, and I thought it was an interesting reference that folks on LessWrong should know about. I have not attempted to grok this paper. If anyone thinks I should, please reply and let's schedule a time to read and discuss it, so we can explain it to each other and catch mistakes. I have a very hard time doing anything without someone else agreeing with me that it's important enough to socialize about; if I could, I would join a full-time study group network. My agency is quite weak.

Provided you know neither the game of chess nor the French language, could you learn the rules of chess from a person speaking French? In machine learning, this problem is usually avoided by freezing one of the unknowns (e.g. chess or French) during a calibration phase. During my PhD, I tackled the full problem and proposed an innovative solution based on a measure of consistency of the interaction. We applied our method to human-robot and brain-computer interaction and studied how humans solve this problem. This work was awarded a PhD prize presented by Cédric Villani (2010 Fields Medal).

Approach

Can an agent learn a task from human instruction signals without knowing the meaning of the communicative signals? Can we learn from unlabeled instructions? [1]

The problem resembles a chicken-and-egg scenario: to learn the task you need to know the meaning of the instructions (interactive learning), and to learn the meaning of the instructions you need to know the task (supervised learning).

However, a common assumption is made when tackling each of the above problems independently: the user providing the instructions or labels is acting consistently with respect to the task and to its own signal-to-meaning mapping. In short, the user is not acting randomly but is trying to guide the machine towards one goal and using the same signal to mean the same things. The user is consistent.

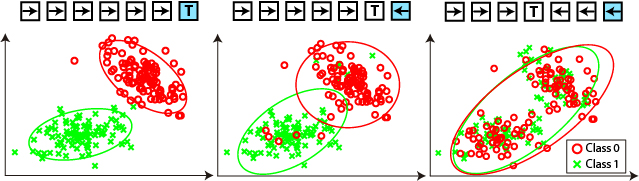

Illustration of consistency. The same dataset is labelled according to 3 different tasks. The left labelling is the most consistent with the structure of the user signals, indicating the user was probably teaching that task.

Hence, by measuring the consistency of the user signal-to-meaning mapping with respect to different tasks, we are able to recover both the task and the signal-to-meaning mapping, solving the chicken-and-egg problem without the need for an explicit calibration phase. And we do so while avoiding the combinatorial explosion that would occur if we tried to generate hypotheses over the joint [task, signal-to-meaning] space, which is most prevalent when the signals are continuous.
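To make the consistency idea a bit more concrete, here is a minimal Python sketch. It is not the thesis's actual method: the 1-D world, the candidate targets, the Gaussian signal model, and the Fisher-style separation score are all assumptions chosen for illustration, and every function name is hypothetical. Each candidate task implies a different labelling of the same unlabeled signals, and the task whose labelling separates the signals most cleanly is taken as the one the user was probably teaching.

```python
import random


def expected_labels(pairs, target):
    """Labels a consistent teacher would intend if `target` were the task.

    Assumed rule: an action is "correct" (True) when it moves the agent
    strictly closer to the target position.
    """
    return [abs(s + a - target) < abs(s - target) for s, a in pairs]


def consistency(signals, labels):
    """Illustrative consistency score (a stand-in, not the thesis's measure).

    Fisher-style ratio: squared distance between the two class means,
    divided by the average within-class variance. A labelling that matches
    the structure of the signals yields a large value.
    """
    a = [x for x, lab in zip(signals, labels) if lab]
    b = [x for x, lab in zip(signals, labels) if not lab]
    if len(a) < 2 or len(b) < 2:
        return 0.0
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    var_a = sum((x - mean_a) ** 2 for x in a) / (len(a) - 1)
    var_b = sum((x - mean_b) ** 2 for x in b) / (len(b) - 1)
    return (mean_a - mean_b) ** 2 / ((var_a + var_b) / 2 + 1e-12)


def infer_task(pairs, signals, candidate_targets):
    """Score every candidate task and return the most consistent one."""
    scores = {
        t: consistency(signals, expected_labels(pairs, t))
        for t in candidate_targets
    }
    return max(scores, key=scores.get), scores


# Simulated interaction: the agent tries (state, action) pairs on positions 0..6.
rng = random.Random(0)
true_target = 3  # known only to the simulated user
pairs = [(rng.randrange(7), rng.choice((-1, 1))) for _ in range(200)]

# Hidden signal-to-meaning mapping (assumed): "correct" signals cluster
# around +1 and "incorrect" ones around -1. The learner never sees this.
signals = [
    rng.gauss(1.0 if lab else -1.0, 0.5)
    for lab in expected_labels(pairs, true_target)
]

best, scores = infer_task(pairs, signals, candidate_targets=[1, 3, 5])
print(best, scores)
```

In this toy setup, the labelling implied by the true target should score far higher than the others, and the class means under that labelling give an estimate of the signal-to-meaning mapping. Note that a score like this is blind to label inversion, so two tasks that imply exactly opposite labellings would tie; the candidate targets above were chosen to avoid that case.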

Our contribution is a variety of methods to measure the consistency of an interaction, as well as a planning algorithm based on the uncertainty of that measure. We further proposed methods to scale this work to continuous state domains, an infinite number of tasks, and multiple interaction frame hypotheses. We also applied these methods to a human-robot learning task using speech as the modality of interaction, and to a brain-computer interface with real subjects.

Some thoughts:

- this doesn't seem like it carries alignment all on its own by any means, but it does seem like this objective has some nice properties.

- this can still be critiqued along the lines of this recent humor post [LW · GW]: idk, aren't you just kinda using a simple ruler on a complex model? And yeah, it seems like ensuring the model doesn't try to have more information than it can plausibly have is in fact pretty important for making the thing work; there's no particular reason to believe this approach is the best one for ensuring that's normalized well.

- see also natural abstractions, which more or less just plugs into this

https://www.youtube.com/watch?v=w62IF3qj8-E
