AI as a computing platform: what to expect

post by Jonasb (denominations) · 2024-06-22T19:55:42.774Z

This is a link post for https://www.denominations.io/ai-as-a-computing-platform/

Contents

- The mirage of internet data
- Data locus / data loca
- A coin for your operator
- A different kind of hardware play
- Even the best of us process some amount of information
- Good algorithm, bad algorithm

Let's just assume, for the sake of argument, that advances in AI continue to stack up.

Then at some point, AI will become our default computing platform.

The way of interacting with digital information. Our main interface with the world.

Does this change everything about our lives? Or nothing at all?

As a machine learning engineer, I've seen "AI" mean different things to different people.

Recent investments in LLMs (Large Language Models) and adjacent technologies are bringing all kinds of conversational interfaces to software products.

Is this conversational interface "an AI" (whatever that means)?

Or does "an AI" need to be agentic to transform the way the economy works? To change our human OS? To complete the "AI revolution"?

Either way, I couldn't help but notice that a lot of the hype around AI is about AI applications–not about AI as a computing platform.*

So I wanted to see where the AI hype would lead us when taken at face value.

And given that my AI and genAI-powered searches didn't yield meaningful results, I decided to come up with some conjectures of my own.

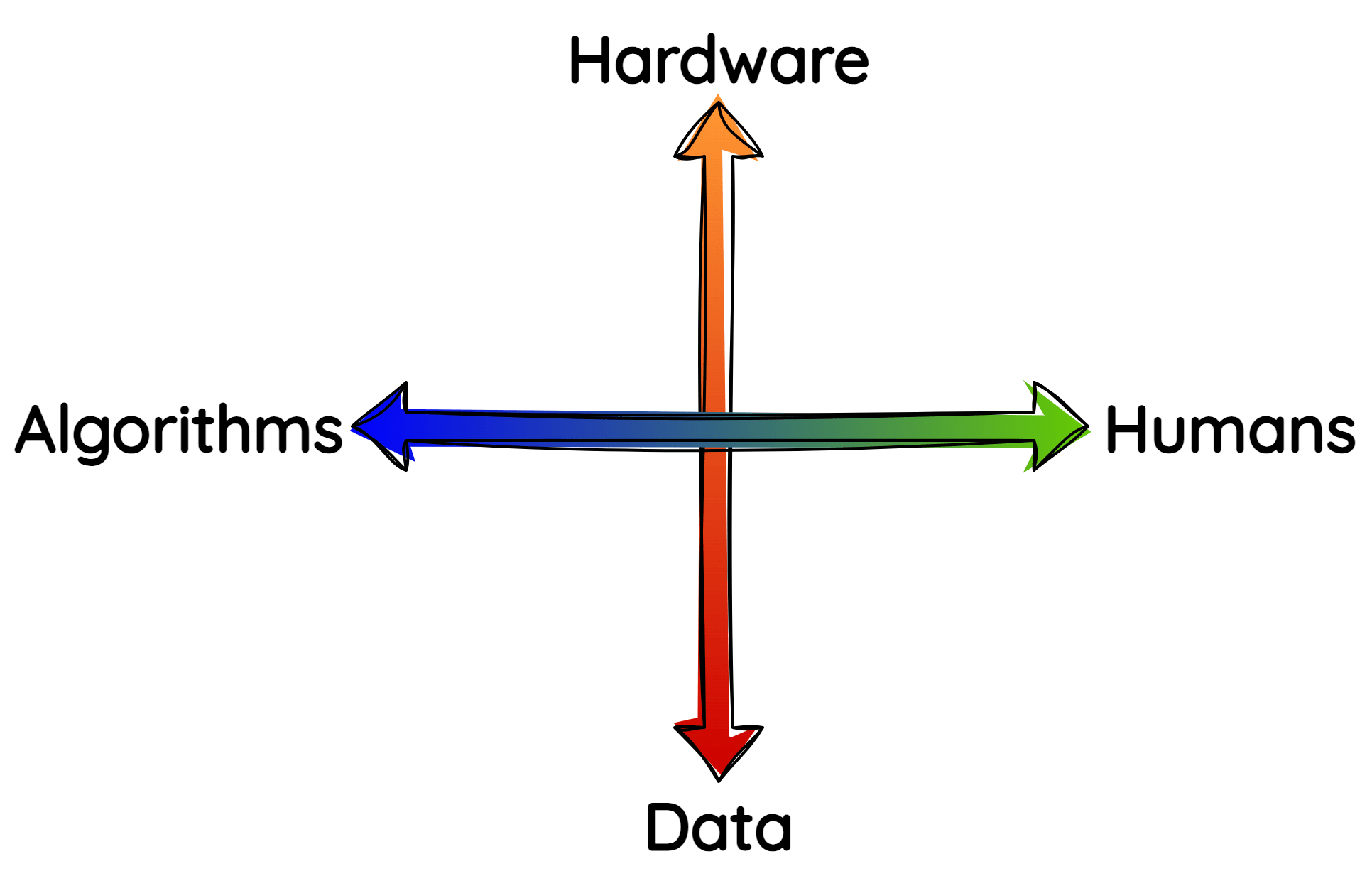

I've decided to align these conjectures with the sun, the moon, and the holy quadrity of A.I.

So let's see what happens if we do decide to hand over the keys to "an / the AI".

The mirage of internet data

For (the human-developed and adopted technology of) AI to deliver broad-strokes, sweeping societal changes, it needs data to control our world.

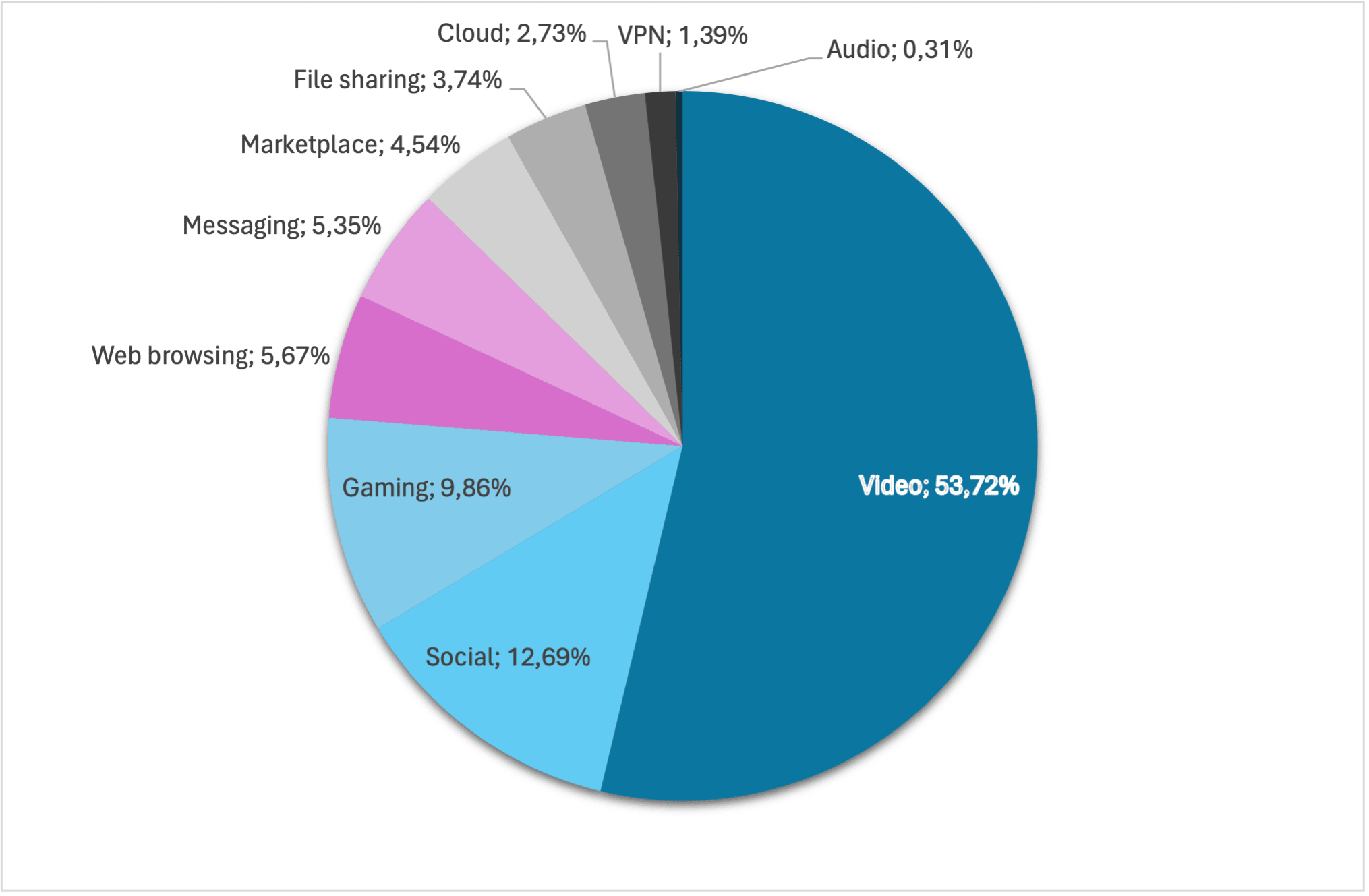

And in terms of volume, most of the available data we have is internet data.

As can be seen in the diagram above, the vast majority of that internet data is video. Which, let's be honest, is mostly generated for entertainment purposes.

Let's oversimplify things a bit and classify this treasure trove of data:

- Leisure: non-work digital human activity (blue+purple tones in the diagram).

- Business: data generated for commercial purposes (grey+purple tones).

- Public: governments, science, NGOs and other data (too small / NA).

We can maybe generously claim that legitimate business use makes up 10-15% of all internet data, and public use less than 1%.

The overwhelming majority (>75%) is dedicated to leisure activities.

That is all great, you might think, but we still have tons of data.

The problem is that most of this data is useless for improving human lives.

It's not even a problem of finding the right insights with big data analytics.

It's what this data represents – what it can tell us.

Internet data is data that is collected on human behaviour in the digital domain.

And our digital behaviour has an inherently distorted relation with the real-world problems we face as a species. It's a very poor proxy for the things that matter to our social, mental and biological wellbeing.

In fact, it is as good as useless for anything except churning out cultural artefacts like LLMs (large language models) and VGMs (video generation models).

And even there, some AI researchers are questioning the value of all the text and video data on the internet when it comes to lifting these models to a higher plane.

They argue that LLMs got their reasoning capabilities not from reading Reddit, but when LLM developers started adding computer code to LLM training data.

Data locus / data loca

Then there is the issue of where all this internet data is being stored and processed.

Most of it is stored across the systems, devices and databases of different vendors.

This makes it a lot harder to train comprehensive AI systems for a human OS.

As a result, data and AI plays naturally tend towards monopolies.

And these monopolies, right now, are maintained by commercial parties.

That is not a bad thing in and of itself, incentives and all that, but it will lead to all sorts of dystopian scenarios in the future if AI actually ends up running our world.

Either way, I hope you understand that contrary to the "data is everywhere, you just need to know how to apply it" shtick a lot of software and AI vendors push, good quality data for solving real-world problems is actually incredibly rare.

As it is, the only thing we can hope to achieve by applying AI to internet data is to reduce some of the friction in online transactions and interactions.

That is hardly the world-changing impact of AI we're being promised.

A coin for your operator

Which means that for AI to take over, we need to become more intentional about data collection.

Right now, a lot of the data used to train AI systems and applications is a byproduct of human use of digital devices.

In a sense it is a "free" or emergent property of our current technologies.

And I know from experience that building AI applications on data that is only loosely connected to the intended use case will result in poor performance.

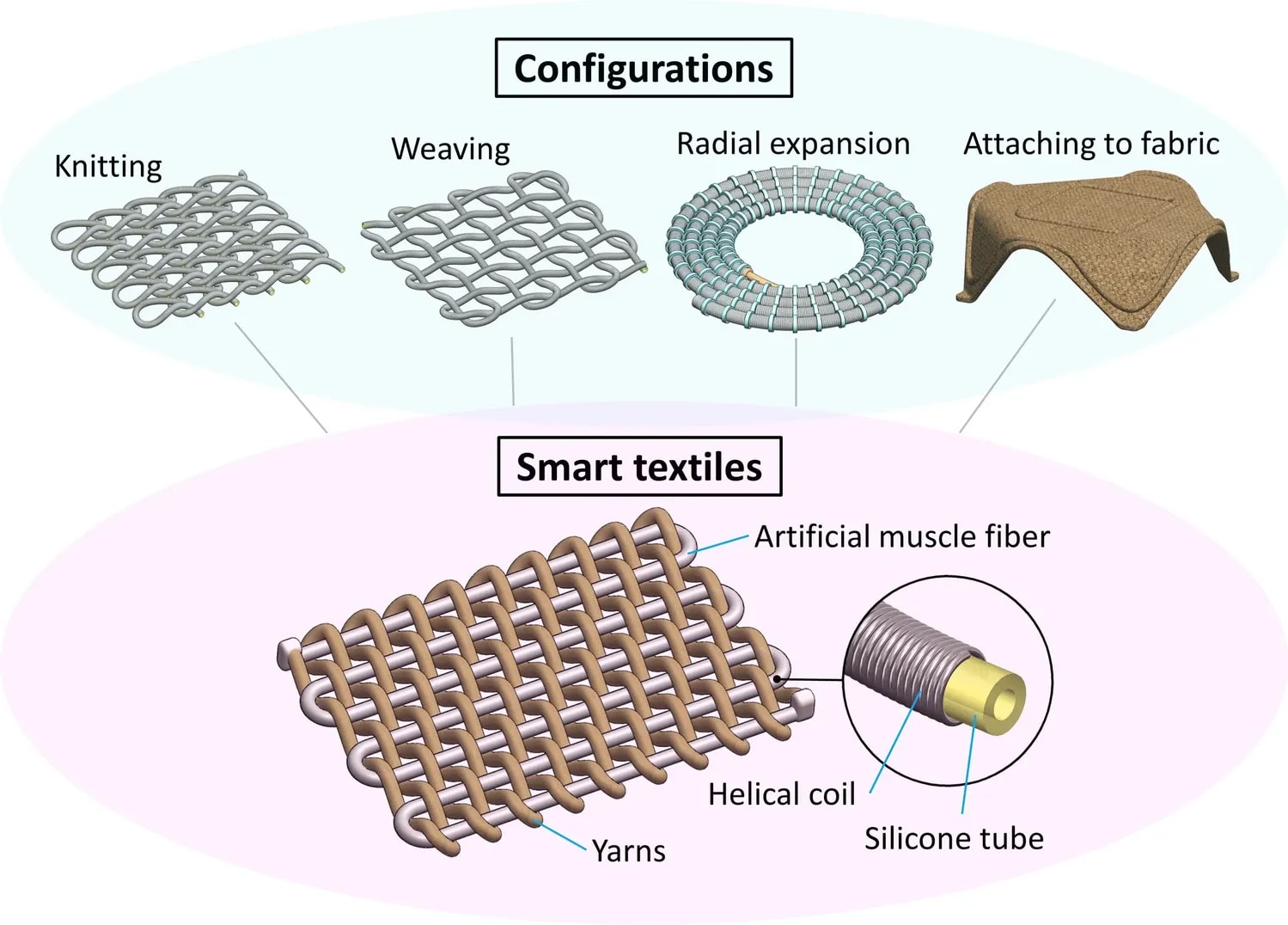

So what kind of data collection technologies do we need?

For the human experience, the obvious candidates are implants and wearables.

Brain implants would allow us to force-feed Instagram reels at higher bandwidths than ever before, but would this really make our lives better?

Given the lack of progress in neuroscience, I'm sceptical about the ability of neurotechnology to positively impact human health and wellbeing.

Of course, better brain implants will probably be a big boost for scientific advances in this field.

Personally I'm also less interested in sticking technology in my brain and more excited about the possibilities of smart wearables.

Wearables that go beyond the current state of the art of devices like the Rabbit R1 and the Humane AI pin, that are screenless, non-intrusive and safe.

That allow AI to enrich the human experience without subtracting from it with the interference of digital devices.

Whatever your personal preference, the debate between nativists–who'd want to limit AI computations to specific solutions–and empiricists–including the AGI & transhumanist club, who believe that data is everything–is one that will probably define the reach of future AI computing platforms.

But if we are talking about civilisation-scale changes, what we do in our free time maybe matters less than aggregate-level efficiencies we could gain.

By deploying AI to optimise things like power grids, factories, traffic etc.

By allowing AI to operate systems with data from less sexy and more maintenance-heavy data collection stations on vital infrastructure.

A different kind of hardware play

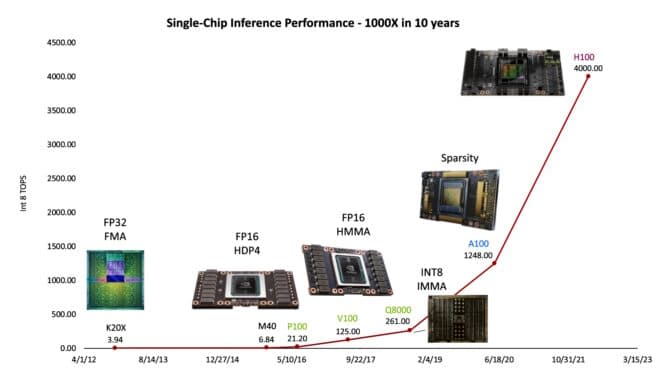

These AI deployments would require a different hardware play from the one we are seeing right now, with the skyrocketing value of Nvidia's share price.

With everybody and their grandmother harking in silicon.

That is no doubt a smart play, but even exponentially smaller and faster GPUs won't solve the "AI everywhere" premise of a society running on an AI OS.

Innovations like Groq (chips dedicated to LLM inference, which Groq calls LPUs rather than GPUs) will give us more talking computers by bringing down the cost-to-performance ratio of inference hardware.

But do we actually need more talking computers?

Do you want to talk to your fridge? Your shoes?

Having one personal AI assistant will probably be enough for most of us.

The question then becomes, what should this AI assistant be able to do?

Should it be the one talking to your fridge and ordering your groceries based on input from your AI nutrition coach?

Is that AI nutrition coach running on the same device as your AI assistant?

And while it is ordering groceries, is it communicating with real persons in the grocery store on the other end, or with their store AI?

Another way of putting this question is–if LLMs and their descendants are the "brain" of an AI computing platform, what is its body?

There is a purely technical answer: this "body" will be a hybrid mesh of sensors and semi-smart appliances feeding it with information to take actions in its "world"–a world of APIs with commercial and government AI systems.
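To make that technical answer a bit more concrete, here is a minimal Python sketch of the fridge-and-groceries example. Everything in it is hypothetical and for illustration only: the `Reading` and `Assistant` classes, the `reorder_below` threshold and the `store_order_api` stub are my own assumptions, not an existing product or API. Sensors feed readings to the assistant, and the assistant acts by calling into a "world" of external APIs.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Reading:
    source: str   # which sensor or appliance produced the reading
    item: str     # what the reading is about
    level: float  # remaining fraction, from 0.0 (empty) to 1.0 (full)


@dataclass
class Assistant:
    # Hypothetical "world" of external APIs: action name -> callable.
    apis: dict[str, Callable[[str], str]]
    reorder_below: float = 0.2  # assumed threshold, purely illustrative
    log: list[str] = field(default_factory=list)

    def observe(self, reading: Reading) -> None:
        """Act on a single sensor reading by calling an external API."""
        if reading.level < self.reorder_below:
            self.log.append(self.apis["order"](reading.item))


def store_order_api(item: str) -> str:
    # Stand-in for the store's AI or API endpoint; no network call is made.
    return f"ordered {item}"


assistant = Assistant(apis={"order": store_order_api})
for reading in [Reading("fridge", "milk", 0.1), Reading("fridge", "eggs", 0.8)]:
    assistant.observe(reading)

print(assistant.log)
```

Note that nothing in this loop requires a human: the only open question is who sits behind `store_order_api`.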

Then there is the human answer: it's you. You will be its body, its agent.

Even the best of us process some amount of information

Which, if you are okay with that, hey, great for you.

Ultimately this is a personal choice. But it is a choice that in the aggregate will have society-wide effects.

The most important effect will be on interpersonal relations–on the fabric of our societies.

The more we let AI operating systems define our lives, the less human we will become.

We will start anthropomorphising our AI assistants**, and lose more of our humanity as these interactions with AI systems start redefining what is normal.

We did the same thing with social media, and look where it got us (that's a rhetorical question–wading knee-deep in baseless anxiety, mostly).

And AI will have a much more profound impact on us than social media, since our AI technologies have advanced to the point where we can build systems that can look more human than humans do, to us.

We've long since crossed over the uncanny valley, at least digitally. In fact, we've been jumping over it in droves these last two years.

And by letting our interactions with AI redefine what is normal, we are at risk of numbing and dumbing down millions of years of evolutionary progress. Progress that has refined our visual, emotional and social information processing skills.

We might paradoxically end up collectively stupider for it–with all the information of the human race at our fingertips.

And the thing is, these AI assistants are not built by humans but by corporations.

Just let that sink in. One non-human entity (a corporation) produces another non-human entity. This technological artefact (your AI assistant) then controls your life and tells you what to do.

Not only does it do that, but you are paying its owner money to tell you what to do.

It sounds like a pretty weird fetish to me, to be honest. But you do you.

Even if these corporations are properly regulated to only serve your (consumer / their customers') best interest–which let's face it, will probably not happen–it would still be a strange place to be in.

Good algorithm, bad algorithm

Anyway, if throughout this blog I've given you the impression that I'm anti-AI, anti-innovation and anti-technology, I can assure you that I am not.

What I am is anti-bandwagon.

And the current AI hype train is as bad of a bandwagon as I've ever seen.

With not enough people looking at the aggregate impact of these technologies.

In a business context, defining metrics for AI systems to optimise is a relatively straightforward process–at least if you know what you are doing.

And there are a lot of horrible, boring tasks out there that are being performed by human agents just because they carry some sort of economic value.

It would be amazing to see more of these tasks automated by AI systems, freeing up humans to work on more interesting and fulfilling things.

Yet in life, over-optimising on existing data–over-indexing on the present–can potentially leave us less resilient, less open to change, and less successful in the long term (this also holds for businesses by the way, or any kind of data-driven decision-making).
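A toy illustration of that point, in plain Python. The data is made up for the example (a hypothetical true relation y = 2x observed with an alternating error of +3 / -3), and the two "models" are deliberately simple: one memorises the existing data perfectly, the other only captures the overall trend.

```python
# Hypothetical toy data: the true relation is y = 2x, but the observations
# we happened to collect carry an alternating error of +3 / -3.
train = [(float(x), 2.0 * x + (3.0 if x % 2 == 0 else -3.0)) for x in range(10)]
# New situations: nearby inputs, scored against the true relation.
test = [(x + 0.4, 2.0 * (x + 0.4)) for x in range(10)]


def memoriser(x):
    """Return the y of the closest training point (fits existing data exactly)."""
    return min(train, key=lambda p: abs(p[0] - x))[1]


def fit_line(points):
    """Ordinary least-squares line through the points."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points)
    sxx = sum((x - mx) ** 2 for x, _ in points)
    slope = sxy / sxx
    return lambda x: my + slope * (x - mx)


def mse(model, points):
    """Mean squared error of a model over a list of (x, y) points."""
    return sum((model(x) - y) ** 2 for x, y in points) / len(points)


line = fit_line(train)
memo_train, memo_test = mse(memoriser, train), mse(memoriser, test)
line_train, line_test = mse(line, train), mse(line, test)
print(f"memoriser: existing data {memo_train:.2f}, new data {memo_test:.2f}")
print(f"line:      existing data {line_train:.2f}, new data {line_test:.2f}")
```

The memoriser scores a perfect zero on the data it has already seen and does clearly worse than the straight line on the nearby new inputs. The model that looks best on the present is the one least prepared for change.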

That doesn't mean I think there isn't a place for AI in our personal, private and social lives.

But I do think we should stop believing the narrative that AI will be somehow better than us.

Or that "AI" should take over the world as an AI operating system.

AI systems will perform better on individual tasks, yes, because these systems are trained on more data than a human could process in a thousand lifetimes.

Our data processing capabilities are fuelling that amazing progress.

But it doesn't make these AI systems better than or superior to us***.

Chess players still play chess. Go players still play go. Humans will be human.

So let's not make AI into some kind of new-age religion.

*) Ironically, less than 24 hours before publishing this post a team at Rutgers University open-sourced "AIOS": https://github.com/agiresearch/AIOS. The AI hype train moving at full speed 😅

**) Although Ethan Mollick makes a good case why treating your conversational AI assistants as people will get you better results. If you're interested in exploring this line of thought further, I've written a deep dive about human-AI relations not that long ago.

***) Except as a value proposition to organisations–cheap labour that doesn't go on sick leave, doesn't complain and never stops working, what kind of Shareholder Value Maximising Executive (SVME™) would say no to that? So definitely look at how much AI exposure your current role has, which tasks in your job you could automate or improve by using AI tools, and how you can re-skill to stay relevant.
