Rational Unilateralists Aren't So Cursed

post by SCP (sami-petersen) · 2023-07-04T12:19:12.048Z · LW · GW · 6 comments

Much informal discussion of the Unilateralist’s Curse from Bostrom et al (2016) presents it as a sort of collective action problem: the chance of purely altruistic agents causing harm rises with the number of agents that act alone. What’s often left out is that these agents are irrational. The central result depends on this, and I’ll show why below.

Note that the formal result in the original paper is correct. The authors are largely aware of what I’ll explain here; they discuss much of it in section 3.2. The point of this post is (i) to correct a misconception about which agents the Curse applies to, (ii) to go through a particularly neat part of the game theory canon, and (iii) to highlight some nuances.

Summary

- Even when agents have no information about what others think, it is not generally an equilibrium for them to act merely based on their own all-things-considered belief.

- In equilibrium, rational and altruistic agents may or may not converge (in the limit) to avoiding the kind of mistake highlighted by the Unilateralist's Curse.

- Rational and altruistic agents can still do far better by using, e.g., majority vote.

- Empirical results suggest that agents do behave quite rationally in various contexts.

I suggest skipping the derivations if you're not interested.

Why Naïve Unilateralism Is Not an Equilibrium

The main theoretical insights I’ll explain are from Feddersen and Pesendorfer (1998).[1] I simply apply them to the case of the Unilateralist’s Curse. I’ll illustrate using the first example from Bostrom et al (2016, p. 1) where they motivate the issue:

> A group of scientists working on the development of an HIV vaccine has accidentally created an air-transmissible variant of HIV. The scientists must decide whether to publish their discovery, knowing that it might be used to create a devastating biological weapon, but also that it could help those who hope to develop defenses against such weapons. Most members of the group think publication is too risky, but one disagrees. He mentions the discovery at a conference, and soon the details are widely known.

Next, a formal setup:[2]

- There are $N$ agents.

- An agent can either write a paper ($w$) or stay silent ($s$).

- If at least one agent writes the paper, it is published ($P$); if none do, it is not ($\neg P$).

- Having the paper written would in fact either be good ($G$) or bad ($B$) on net. For simplicity, the common prior is $\Pr(G) = \tfrac{1}{2}$.[3]

- Each agent $i$ gets an informative i.i.d. signal $x_i \in \{g, b\}$, i.e. their own impression, about the effect of such a paper being written. For simplicity, it’s symmetric: $\Pr(x_i = g \mid G) = \Pr(x_i = b \mid B) = p > \tfrac{1}{2}$ for any $i$.

- The agents are equally altruistic: $u_i = u_j$ for all $i, j$. An error of either kind gives all agents the same payoff, while a correct collective decision gives each the same, strictly higher payoff.[4]

The solution concept is Bayesian Nash Equilibrium.[5]

Claim: The following is not an equilibrium: agents choose to write the paper just in case their own impression suggests it would be good.

Proof. Suppose for contradiction that this is an equilibrium. It’s symmetric so consider any agent $i$. By assumption, $i$ only cares about the outcome. If at least one other agent writes the paper, then $i$ can’t affect the outcome. If nobody has written the paper, $i$ can unilaterally pick the outcome. Since $i$’s choice makes no difference to their payoff when someone else has chosen to write the paper, $i$ will simply condition on nobody else having chosen to write the paper. (For the unconvinced: [6].)

Denote by $D$ the 'decisive' case where nobody else has chosen to write the paper. Now that $i$ conditions on being decisive, all $N-1$ other agents must have chosen to stay silent. By supposition, this implies that those $N-1$ agents got the negative signal $b$. Suppose that $i$ got the positive signal $g$. With each signal correct with probability $p$, conditioning on being decisive makes $i$’s relevant posterior

$$\Pr(G \mid g, D) = \frac{p\,(1-p)^{N-1}}{p\,(1-p)^{N-1} + (1-p)\,p^{N-1}} = \frac{1}{1 + \left(\frac{p}{1-p}\right)^{N-2}},$$

which is always less than 50%.[7]

Therefore, $i$ will act as if writing the paper is likely harmful. So $i$ won’t write the paper, even though (i) their own impression suggests it’d be good and (ii) they have no information about what others think. This contradicts the supposition, proving the claim.
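The conditioning step in the proof can be checked numerically. The following is a minimal sketch, assuming a 50/50 prior and conditionally independent signals; the function name `decisive_posterior` and its parameter names are illustrative and not taken from the post or from Bostrom et al.

```python
def decisive_posterior(n_agents: int, accuracy: float) -> float:
    """Posterior that the paper is good for an agent with a positive signal,
    given that every other agent stayed silent and each of them writes exactly
    when their own signal is positive.

    Assumes (hypothetically) a 50/50 prior and conditionally independent
    signals that are each correct with probability `accuracy`.
    """
    others = n_agents - 1
    # Likelihood of (own signal positive, all other signals negative) per state.
    if_good = accuracy * (1 - accuracy) ** others
    if_bad = (1 - accuracy) * accuracy ** others
    return if_good / (if_good + if_bad)


if __name__ == "__main__":
    for n_agents in (2, 3, 5, 15):
        print(n_agents, decisive_posterior(n_agents, accuracy=0.75))
```

With two agents the posterior sits exactly at one half, and it drops below one half as soon as there are three or more, which is why following one's own signal stops being a best response.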

What happened to the Unilateralist's Curse? The authors were careful to write "if each agent acts on her own personal judgment" in the paper's abstract because this is a nontrivial condition. It requires that agents observe their signal and naïvely act upon it. But as we've shown, if all agents follow this rule, then writing the paper becomes a strictly inferior act. A rational agent would not write.

Here's the idea in stream-of-consciousness: I think it's worth writing the paper. Of course, it doesn't matter whether I write it if someone else will anyway. But if nobody else does, then I could do some good. Wait. If nobody else would write the paper, then they must all think it's a bad idea. I'm only as sensible as they are, so I must presumably have missed something. I guess I'll stay silent then.

That's all well and good for intuiting why rational unilateralists don't just follow their signal. But did you spot the mistake in that reasoning?

The stream of consciousness should have continued: Interesting, it seems like the best move is to stay silent no matter what I personally think. Hold on! The other agents must've thought of this too, implying that they'd just stay silent no matter what. But now being decisive tells me nothing at all about what the others think! So I'll just use my own judgment and write the paper. Wait...

And so forth.

What, then, does a rational agent do in this case? Does this game just not have an equilibrium? It has finitely many players and finitely many pure strategies, so by a simple extension of Nash's existence theorem, there must be an equilibrium.

Deriving Equilibria

There are actually multiple equilibria. I'll only describe a nontrivial symmetric one here. Let's see whether we can find an equilibrium in which agents stay silent when their own impression is that publication would be bad. We then just need to specify what they would do when their own impression is that writing the paper would be beneficial. Given the discussion above, it won't simply be "write" or "don't write." If there's to be an equilibrium, it will have to be in mixed strategies—where agents randomise.

Let's complicate the setup a bit to see some interesting dynamics:

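- Now wrongly publishing pays $u(P, B) = -q$ and wrongly staying silent pays $u(\neg P, G) = -(1-q)$, for some $q \in (0, 1)$. This normalises the payoff of a correct decision to $0$. Payoffs for the wrong decisions are negative.

- We define $\sigma$ as the chance of an agent with signal $g$ (a positive impression) writing a paper.

Begin by noticing that $q$ can be thought of as the probability threshold at which an agent would want the paper to be written. To see this, observe that the expected payoffs are

- $\mathbb{E}[u(P)] = -q \Pr(B) = -q\,(1 - \Pr(G))$ and

- $\mathbb{E}[u(\neg P)] = -(1-q) \Pr(G)$,

so $i$ favours publication whenever $\Pr(G) > q$.[8]

For an agent to write the paper with a probability other than zero or one, they must be indifferent between writing and not writing it.[9] That is, $\mathbb{E}[u \mid w] = \mathbb{E}[u \mid s]$. Like before, an agent's choice only matters when they are decisive. So we need to find a $\sigma$ satisfying

$$\Pr(G \mid g, D) = \frac{p\,(1 - p\sigma)^{N-1}}{p\,(1 - p\sigma)^{N-1} + (1-p)\,(1 - (1-p)\sigma)^{N-1}} = q,$$

where $D$ is the event that none of the other $N-1$ agents writes and $p$ is the accuracy of each signal.

Re-arranging and bounding appropriately gives us

$$\sigma^* = \begin{cases} 0 & \text{if } q \geq p, \\ 1 & \text{if } q \leq \left[1 + \left(\tfrac{p}{1-p}\right)^{N-2}\right]^{-1}, \\ \dfrac{1-k}{p - (1-p)\,k} & \text{otherwise,} \end{cases} \qquad k = \left(\frac{q\,(1-p)}{(1-q)\,p}\right)^{\frac{1}{N-1}}.$$

Finding $\sigma^*$ is just a matter of plugging in values for $N$, $p$, and $q$.[10] For instance, if 15 agents all have a probability threshold of 50% and their impressions are 75% accurate, then the chance of any individual with a positive impression writing the paper is about 14.5%.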
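The mixing probability can be computed directly. The sketch below assumes the model as described in this section (a 50/50 prior, agents with a negative impression always stay silent, signal accuracy strictly between one half and one, threshold strictly between zero and one). The names `mixing_probability` and `decisive_posterior` are hypothetical, chosen for illustration.

```python
def decisive_posterior(n_agents: int, accuracy: float, sigma: float) -> float:
    """Posterior that publication is good for a positive-signal agent, given
    that all others stayed silent while writing with chance `sigma` on a
    positive signal (and never on a negative one). Assumes a 50/50 prior."""
    others = n_agents - 1
    if_good = accuracy * (1 - accuracy * sigma) ** others
    if_bad = (1 - accuracy) * (1 - (1 - accuracy) * sigma) ** others
    return if_good / (if_good + if_bad)


def mixing_probability(n_agents: int, accuracy: float, threshold: float) -> float:
    """Symmetric equilibrium chance of writing after a positive signal."""
    # Even with no information from decisiveness, one signal is not enough.
    if threshold >= accuracy:
        return 0.0
    # Even if everyone follows their signal, the decisive posterior clears
    # the threshold, so writing for sure is a best response.
    if threshold <= decisive_posterior(n_agents, accuracy, sigma=1.0):
        return 1.0
    # Interior case: solve decisive_posterior(...) == threshold for sigma.
    ratio = threshold * (1 - accuracy) / ((1 - threshold) * accuracy)
    k = ratio ** (1 / (n_agents - 1))
    return (1 - k) / (accuracy - (1 - accuracy) * k)


if __name__ == "__main__":
    sigma = mixing_probability(n_agents=15, accuracy=0.75, threshold=0.5)
    print(sigma, decisive_posterior(15, 0.75, sigma))
```

Plugging the returned value back into `decisive_posterior` is a quick sanity check: in the interior case it should land on the threshold, which is the indifference condition.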

More interestingly, though, we can examine the comparative statics. Let's start with the limiting behaviour as the number of rational, altruistic agents rises:

1. We always have $\lim_{N \to \infty} \sigma^* = 0$.[11] The more agents there are, the lower the chance that any particular individual with a positive impression writes the paper.

2. And yet $\lim_{N \to \infty} \Pr(P \mid B) > 0$ in general.[12] As the number of agents rises, the probability that the paper is written at all when it shouldn't be converges to some constant, determined by both the accuracy and threshold.

3. Similarly, $\lim_{N \to \infty} \Pr(\neg P \mid G) > 0$.[13] The accuracy and threshold likewise determine the limiting probability that the paper will erroneously not be written.

4. Hence $\lim_{N \to \infty} \Pr(\text{mistake}) > 0$.[14] The chance of the collective decision being mistaken in the limit is a mixture of (2) and (3).

There we have it: the Rational Unilateralist's Absolution. Even in the limit, the risky paper might not be written. But the probability of such a mistake is very sensitive to parameters other than $N$, and can be close to either zero or one. So even Bostrom et al might've been too generous in concluding that "the unilateralist curse is lifted" when players are appropriately rational (2016, p. 9).

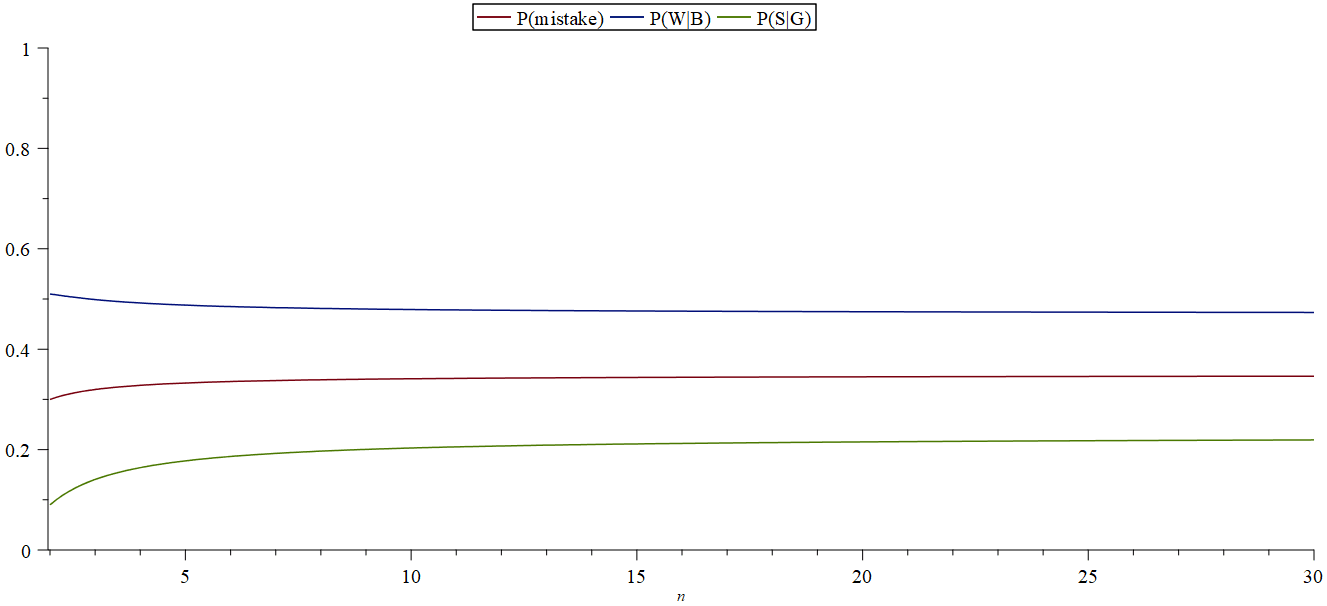

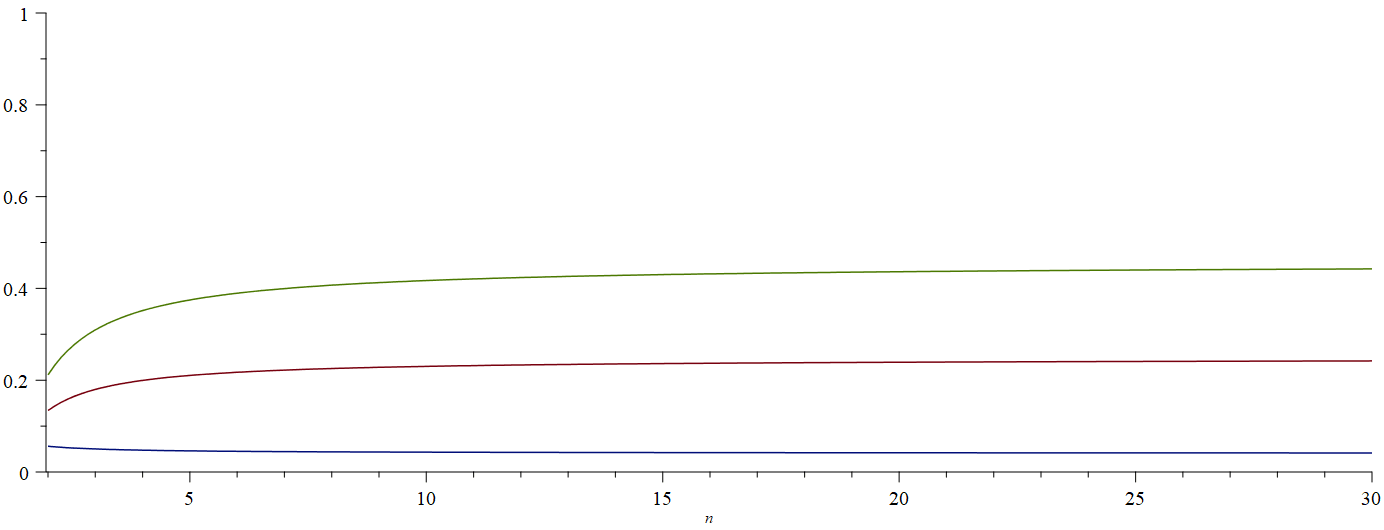

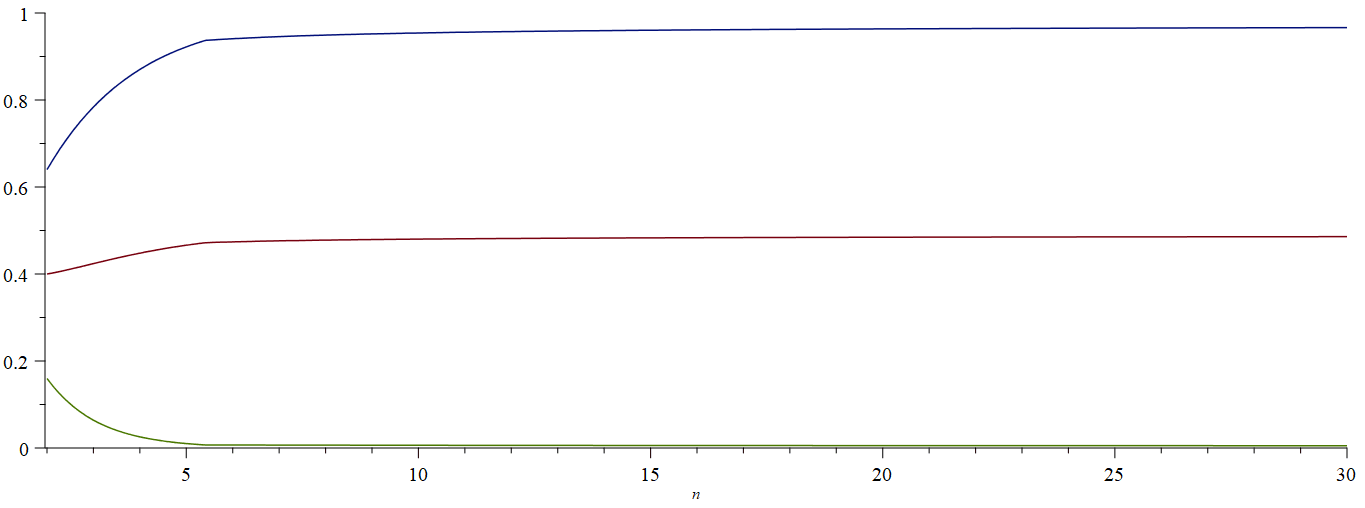

In the graphs below, notice (i) that the risks can rise or fall with , (ii) how much they differ based on the other parameters, and (iii) how fast they converge to their limits.[15]

Typically, the higher the threshold for publication, the lower the chance of writing a dangerous paper, even in the limit. This is good news, but it trades off against the chance of mistakenly staying silent. So, for all intents and purposes, rational unilateralists aren't really free of error. Granted, they don't converge to always publishing harmful papers, unlike irrational ones. But they're still rather lousy.

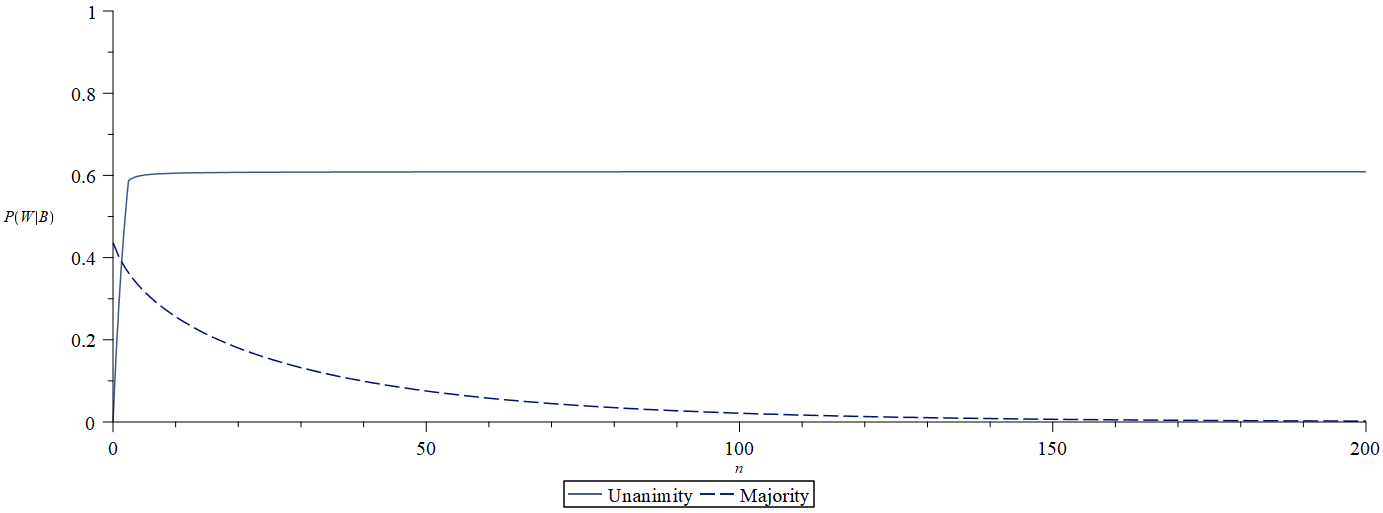

Thankfully, there is a way to lower the probability of a mistake far faster: just collaborate. The setup of this model satisfies the requirements for Condorcet's Jury Theorem, so if the agents decided by majority vote, they'd quickly converge to the correct decision.[16] Here's just how much earlier majority vote could give the agents confidence that they won't mistakenly publish the paper:
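For a rough sense of the speed-up, the error rate of simple majority rule can be computed directly. This sketch assumes each agent votes for publication exactly when their own impression is positive, that impressions are independent and equally accurate, and that the group size is odd so there are no ties. The function name `majority_error` is illustrative.

```python
from math import comb


def majority_error(n_agents: int, accuracy: float) -> float:
    """Chance that a sincere majority vote picks the wrong option.

    A mistake happens when more than half of the independent signals are
    wrong; each signal is wrong with probability 1 - accuracy.
    """
    if n_agents % 2 == 0:
        raise ValueError("n_agents must be odd to rule out ties")
    first_majority = (n_agents + 1) // 2
    return sum(
        comb(n_agents, wrong) * (1 - accuracy) ** wrong * accuracy ** (n_agents - wrong)
        for wrong in range(first_majority, n_agents + 1)
    )


if __name__ == "__main__":
    for n_agents in (1, 3, 7, 15, 31):
        print(n_agents, majority_error(n_agents, accuracy=0.75))
```

Under these assumptions the error rate falls quickly towards zero as the group grows, in contrast to the unilateral case where it settles at a positive constant.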

Conclusion

Unilateral action is not so cursed when agents are rational. This detail is often omitted. A rational agent conditions the value of their options on the possible worlds in which their decision matters. And this liberates the group from the prospect of inevitable mistakes.

I simply find this interesting in its own right. Extracting practical upshots from this is something I’ll—and I’ve always wanted to do this—leave as an exercise to the reader. As a suggestion for somewhere to begin, I'll note that there is some empirical work testing how rational humans are in these settings. For instance, Guarnaschelli et al (2000) find that individual people's behaviour in juries does track the unobvious strategic considerations of unanimity, though the aggregate group decision is less well predicted by the theory. In contrast, Hendricks et al (2003) evaluate the related Winner's Curse in federal auctions and find impressively rational behaviour from firms.

- ^

Bostrom et al (2016) compare the Unilateralist’s Curse to the Winner’s Curse from auction theory. There, too, the agents are assumed to be irrational.

- ^

The framework is somewhat different from that in Bostrom et al (2016) but more in line with Feddersen and Pesendorfer (1998). This doesn’t meaningfully affect the conclusions.

- ^

Bostrom et al (2016) mention the assumption of identical priors is 'problematic' but as should become clear in the derivation, denying this does not quite salvage their result (especially if the accuracy of the signal is high).

- ^

The former is a normalisation but the latter is a simplifying assumption. I relax it in the next section.

- ^

This is because the game is simultaneous. But note that timing in game theory is a property of the information environment, not of when agents act.

- ^

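Denote by $D$ the ‘decisive’ case where nobody else has chosen to write the paper, and write $P$ and $\neg P$ for the paper being published or not. Then $i$'s expected payoffs for writing ($w$) and staying silent ($s$), respectively, are

$$\mathbb{E}[u \mid w] = \Pr(D)\,\mathbb{E}[u(P) \mid D] + \Pr(\neg D)\,\mathbb{E}[u(P) \mid \neg D],$$

$$\mathbb{E}[u \mid s] = \Pr(D)\,\mathbb{E}[u(\neg P) \mid D] + \Pr(\neg D)\,\mathbb{E}[u(P) \mid \neg D].$$

And $i$ prefers writing to silence whenever $\mathbb{E}[u \mid w] > \mathbb{E}[u \mid s]$, so when

$\mathbb{E}[u(P) \mid D] > \mathbb{E}[u(\neg P) \mid D]$, assuming $\Pr(D) > 0$.

So, $i$’s decision to write the paper is only a function of $i$’s beliefs conditional on being decisive.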

- ^

It’s assumed that $N > 2$ and $p \in (\tfrac{1}{2}, 1)$.

- ^

To compare directly to the derivations above, set $q = \tfrac{1}{2}$.

- ^

See the Fundamental Theorem of Mixed Strategy Nash Equilibrium.

- ^

The astute reader may have noticed that $\sigma^*$ can equal $0$ or $1$. Does this contradict the proof above? Not quite. We still guarantee $\sigma^* < 1$ when $q = \tfrac{1}{2}$ (with $N > 2$), for example, which is implicit in the setup studied above. But in this more general case, we can indeed find equilibria in pure strategies.

We guarantee $\sigma^* > 0$ when $p > q$, and the converse sometimes holds. To motivate this, note that when agents play a pure strategy of never writing the paper, $\sigma = 0$, decisiveness is uninformative, so agents can only use their private signal to decide whether to write. And so if their confidence is above the threshold, $p > q$, they’ll write.

We can get $\sigma^* = 1$ when the threshold, accuracy, and number of agents are sufficiently low. To see why, consider the case of three agents who all write whenever their impression is positive. For a decisive agent with a positive impression, the posterior on publication being good then becomes $1 - p$, which is above the threshold whenever $q < 1 - p$. If you think beneficial publication is sufficiently better than faulty publication is bad, you might write the paper even if you think publication is likely to cause harm.

- ^

- ^

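$\Pr(P \mid B) = 1 - \left(1 - (1-p)\,\sigma^*\right)^N$. So, for $q < p$, $\lim_{N \to \infty} \Pr(P \mid B) = 1 - r^{\frac{1-p}{2p-1}}$, where $r = \frac{q\,(1-p)}{(1-q)\,p}$ (see Feddersen and Pesendorfer 1998).

- ^

$\Pr(\neg P \mid G) = \left(1 - p\,\sigma^*\right)^N$. So, for $q < p$, $\lim_{N \to \infty} \Pr(\neg P \mid G) = r^{\frac{p}{2p-1}}$, where $r = \frac{q\,(1-p)}{(1-q)\,p}$ (see Feddersen and Pesendorfer 1998).

- ^

$\Pr(\text{mistake}) = \tfrac{1}{2}\Pr(P \mid B) + \tfrac{1}{2}\Pr(\neg P \mid G)$.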

- ^

Remember that only integer values of $N$ are meaningful.

- ^

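The probability of majority rule making a mistake in this context is $\sum_{k=(N+1)/2}^{N} \binom{N}{k} (1-p)^k\, p^{N-k}$ for odd $N$, where $p$ is the accuracy of each agent's impression.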

6 comments

Comments sorted by top scores.

comment by Steven Byrnes (steve2152) · 2023-07-04T17:18:17.810Z · LW(p) · GW(p)

I appreciate the “stream-of-consciousness” paragraph, it made it much easier for me to get the gist of what you’re talking about. Good pedagogy.

comment by Noosphere89 (sharmake-farah) · 2025-01-30T23:45:36.579Z · LW(p) · GW(p)

This is an interesting post that, while not very relevant on its own, might become relevant in the future.

More importantly, it's a scenario where rational agents can outperform irrational agents.

+1 for this, which, while minor, still matters.

comment by DirectedEvolution (AllAmericanBreakfast) · 2023-07-04T22:50:33.404Z · LW(p) · GW(p)

I am confused - doesn’t Bostrom’s model of “naive unilateralists” by definition preclude updating on the behavior of other group members? And isn’t updating on the beliefs of others (as signaled by their behavior) an example of adopting a version of the “principle of conformity” that he endorses as a solution to the curse? If so, it seems like you are framing a proof of Bostrom’s point as a rebuttal to it. If not, then I’d appreciate more clarity on how your model of naivety differs from Bostrom’s.

Replies from: sami-petersen↑ comment by SCP (sami-petersen) · 2023-07-05T10:43:28.846Z · LW(p) · GW(p)

> doesn’t Bostrom’s model of “naive unilateralists” by definition preclude updating on the behavior of other group members?

Yeah, this is right; it's what I tried to clarify in the second paragraph.

> isn’t updating on the beliefs of others (as signaled by their behavior) an example of adopting a version of the “principle of conformity” that he endorses as a solution to the curse? If so, it seems like you are framing a proof of Bostrom’s point as a rebuttal to it.

The introduction of the post tries to explain how this post relates to Bostrom et al's paper (e.g., I'm not rebutting Bostrom et al). But I'll say some more here.

You're broadly right on the principle of conformity. The paper suggests a few ways to implement it, one of which is being rational. But they don't go so far as to endorse this because they consider it mostly unrealistic. I tried to point to some reasons it might not be. Bostrom et al are sceptical because (i) identical priors are assumed and (ii) it would be surprising for humans to be this thoughtful anyway. The derivation above should help motivate why identical priors are sufficient but not necessary for the main upshot, and what I included in the conclusion suggests that many humans—or at least some firms—actually do the rational thing by default.

But the main point of the post is to do what I explained in the introduction: correct misconceptions and clarify. My experience of informal discussions of the curse suggests people think of it as a flaw of collective action that applies to agents simpliciter, and I wanted to flesh out this mistake. I think the formal framework I used is better at capturing the relevant intuition than the one used in Bostrom et al.

Replies from: AllAmericanBreakfast↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2023-07-05T14:43:14.493Z · LW(p) · GW(p)

That makes sense. Thank you for the explanation!

comment by Dagon · 2023-07-04T15:19:56.147Z · LW(p) · GW(p)

Yet another case where knowing one's fallibility leads to fairly conservative probabilistic actions. Does this simplify to "perform the action with a probability such that if all participants have the same posterior, that will be the chance that any of us take the action"?

Or does it?

It's absolutely worth noting that this is NOT what a unilateralist would do to maximize the outcome. It's well-known that if you assign 51% to a binary proposition, you should bet on yes, not randomize to 51% yes and 49% no. With 51 red and 49 blue balls in a jar and a bet on the color of the next pick, it's suboptimal to use any strategy except "guess red".

I really wonder (and am not quite willing to write the model and find out, for which I feel bad, but will still make the comment) where the cutoff is in terms of correlation of private signal to truth, and number of players, where the true unilateralist does better. Where players=1 and correlation is positive, it's clear that one should just act if p(G) > 0.5. For players=2, it's already tricky, based on posterior and correlation - my intuition is if one is confident enough, just write the paper, and if one is below that range one should be probabilistic. And it all goes out the window if the noise in the private information is not independent among players.

The discussion option is clearly best, as you can mutually update on each other's posteriors (you have mutual knowledge of each others' rationality, and the same priors) to get a consensus probability, and then everyone will act or not, based on whether the probability is greater than or less than 50%. In the absence of discussion, there's ALREADY a huge loss in expectation with the need to be probabilistic, even if you all have the same information (but don't know that, so you can't expect automatic unanimity). I don't see a way to do better, but it's definitely unfortunate.