Post-Rationality and Rationality, A Dialogue

post by agilecaveman · 2018-11-13T05:55:06.551Z

A dialogue between R (rationalist) and P (post-rationalist)

Any resemblance of these fictional characters to real people is not a coincidence because nothing is ever really a coincidence.

R: I sincerely challenge you to give a purely positive account of “post-rationality” that makes no mention of the rationality concepts it denies or criticizes, in the way that a smart atheist, challenged for a positive account of what they do believe in, can explain purely positive ideals of probability and decision that make no mention of God.

P: One key insight that has appeared in multiple post-rationalist texts is the causal relationship between morality, identity, and group conflict. Rationalists and other “systematic” thinkers, such as libertarians, craft their identity around a particular set of principles, be it the desire to be “less wrong” as measured by probability calibration, or the desire to shape the world according to liberty or non-aggression.

They happen to exist in conflict with other groups that, for some reason, can’t seem to follow these obvious, common-sense principles. For rationalists, those groups might be religious people clinging to “faith,” journalists failing to cite proper statistics when reporting on outliers, or politicians who “paint a picture” or “persuade” without adhering to the facts. Rationalists occasionally wonder why the world isn’t rational, why it hasn’t adopted their insights, and why it doesn’t see the extreme value of knowing the True Law of Thinking.

The post-rationalist insight is that the conflict between rationalists and non-rationalists is not an arbitrary feature of the landscape of the universe, but rather the originator of both groups. By virtue of an In-group existing, there must be an Out-group, and there must have been an original conflict that gave birth to the division.

This conflict could have taken many forms: ethnic, political, inter-institutional, old money vs. new money, or simply one person killed in an all-against-one sacrifice. Whatever it was, the conflict drove the division and eventually necessitated the creation of labels: Our rational thinkers vs. Their irrational believers; Our God-fearing people vs. Their heretical iconoclasts.

The labels were not created out of thin air; they generally had to capture some real aspects of the human psyche, common-sense reasoning, and ethics for both sides. They necessarily had to omit some as well.

R: This seems like an interesting historical point, but I fail to see how this historical question has much bearing on modern rationality. Sure, in the past there were people who called themselves more rational than others, but they were farther from the true Bayesian mathematical understanding of reasoning than we are. Besides, if the key point of post-rationality is understanding this causal relationship, I don’t see how it fundamentally alters the theory. A rationalist should still be able to investigate the history, form proper beliefs about it, and make the necessary decisions.

P: If you investigate this idea far enough, it challenges every concept, even meta-level ones such as the importance of beliefs at all. It is easiest to see it at work, though, in how it fundamentally challenges the rationalist notion of “values,” or, as some people say, “one’s utility function.” In the rationalist worldview, people somehow spring into the world “full of values” that they implicitly or explicitly carry forth. You might have seen studies about how political attitudes are transmitted genetically or culturally, but they seem of little relevance next to the self-reinforcing imperative to optimize the world according to one’s values, or “utility function.” Effective Altruists, for example, would not exist as a group in a world where their version of “doing the most good” was as obvious as breathing air. Effective Altruism can function as a group only because EA is able to demarcate a boundary not just conceptually, but also tribally. On the other side of this divide are people with seemingly diametrically opposed values who also work very hard to change the world to their liking. While they might not call this work “optimizing one’s values,” it uses the same strategies, with varying levels of effectiveness. You can see it constantly: arguing on social media, fighting for the limited attention of elites, writing snarky blog posts, and so on.

A better approach is to recognize that “optimizing one’s values” is an inherently conflictual stance that puts two people or groups into unnecessary competition, which could be resolved. How to do so in practice is a trickier question.

R: Hold on, this simply sounds like the value you happen to hold is something like “peacefulness” or “avoidance of inter-group conflict, including verbal conflict.” This might seem like a fine value, but I don’t see any reason to elevate it a priori to a more sacred status than the many forms of utilitarianism.

P: The value of “peacefulness,” or related concepts, is an OK approximation: an attempt to see a new and more complex paradigm in terms of a simpler one. However, the situation here is more complex. Let’s imagine two hypothetical people, Alice and Bob, who are in a value conflict with each other. Alice wants variable X to go as high as possible. Bob wants X to go as low as possible, but doesn’t care about pushing X below a certain minimum value (say 0). Bob is stronger and more powerful than Alice.

In the rationalist worldview, what would happen in this situation is that Alice constantly “tries to optimize” X, putting continual effort into moving X up. Bob, noticing that X has moved from his desired value of 0, uses his power to push it back down. Alice laments the situation, but can justify her actions on the grounds that “counterfactually” she accomplished something, because X could be even lower if she hadn’t tried to optimize it. What doesn’t seem to come to mind is that the “optimization of values” Alice is doing is itself causing Bob’s reaction.
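A toy simulation can make the Alice and Bob dynamic concrete. This is a minimal sketch under assumptions that are not in the original: effort is measured in the same units as X, Alice adds a fixed amount each round, and Bob, being stronger, can remove up to a larger fixed amount each round but never pushes X below his floor of 0. The function `simulate` and its parameters are hypothetical.

```python
def simulate(alice_effort: float, bob_max_effort: float, rounds: int = 50):
    """Toy model of the Alice/Bob conflict over X (all numbers hypothetical).

    Each round Alice raises X by `alice_effort`; Bob then lowers X by up to
    `bob_max_effort`, stopping at his floor of 0. Returns the final X and the
    total effort each side spent.
    """
    x = 0.0
    alice_total = 0.0
    bob_total = 0.0
    for _ in range(rounds):
        x += alice_effort  # Alice "optimizes" X upward
        alice_total += alice_effort
        # Bob reacts only to X above 0; he has no reason to push below it.
        push = min(bob_max_effort, max(x, 0.0))
        x -= push
        bob_total += push
    return x, alice_total, bob_total


print(simulate(alice_effort=1.0, bob_max_effort=2.0))  # → (0.0, 50.0, 50.0)
print(simulate(alice_effort=0.0, bob_max_effort=2.0))  # → (0.0, 0.0, 0.0)
```

In this sketch X ends at 0 either way. The only thing Alice's optimization changes is the 100 units of combined effort the two sides burn, and in the model Bob spends effort only when Alice does.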

You can substitute many things for X here. X could be “attention devoted to issues outside people’s locale,” “equality in every dimension between certain groups,” or “the desire to be left alone by the government.”

R: This still seems like the wrong set of background assumptions on Alice’s part. If she rationally understood the situation, she would realize that X cannot be moved that way and act accordingly. If X is not the only thing she cares about, that means looking at things other than X.

P: Looking at things other than X, rather than continuing hopeless fights, is in fact one of the possible desirable outcomes. I suspect that at least some part of Alice does realize what is happening, but that’s not vital to the point. The point is that the entire optimization “frame of mind” can become counterproductive in this situation. Also, the adversarial nature of “values” is not the exception; it is the rule.

R: This seems similar to Robin Hanson’s idea of “trying to pull the political will sideways to the wedge issues.” So even if I suppose that the structure of society makes optimization inherently adversarial, I still don’t see how that changes anything in the theoretical framework. People still optimize their values in whatever way possible.

P: Once again, the idea of “pulling sideways” is good, but it slightly underestimates the required mental model. It’s not that you first understand your values, then look around to see whether there are adversaries, and then work around them. By virtue of having verbal “values” at all, you are guaranteed to have adversaries. Think of the post-rational insight on values as a giant, government-mandated warning label that comes with every “identity” or “value”: this is bound to cause arbitrarily complex conflict if you push it hard enough. This is still not a fully general argument for nihilism; there is still right and wrong action at the end of the day, and understanding value-based reasoning is still a good tool. Rationality also has warning labels, such as “keep your identity small.” But the idea is to have a good causal model of how identity comes into being, in order to understand the reasoning behind such a suggestion.

R: If some situations are more common than others, that is still within the realm of rationality. Also, the historical origin of identity does not matter, since values “screen off” the causal process that created them. It doesn’t really matter for my actions if I am a robot created by one party to fight another. Whatever I believe my imperative to be, I will be doing. I could be wrong or deluded about my true values, which is a separate error, but if there is alignment and understanding, the historical facts of culture and evolution do not matter.

P: In a purely isolated universe, where you are the only rational being, it doesn’t matter. However, it matters once you realize you have adversaries who might not be too different from you in some ways. They might be running a similar algorithm, with both sides copying each other’s object-level tactics to use against each other, all while claiming to be different due to diverging values. The situation changes then, as you can achieve a better overall outcome if you learn to cooperate or “non-optimize.”

R: Ah, so of course the situation changes if you suddenly encounter agents running algorithms like your own. However, this situation is covered in general by other parts of rationality that extend the Bayesian formalism, such as “super-rationality,” “logical connections,” “UDT,” or “acausal trade.” Is post-rationality just repackaging those?

P: As far as a developmental guide is concerned, I wish rationality had more explicit guidelines about which material is advanced and which isn’t. In terms of mapping decision theories to Kegan levels, I would put EDT at ~Kegan 3 (I copy successful people’s actions because those actions are correlated with success) and CDT at ~Kegan 4 (I limit myself to those actions where I have a good model of them causing success), which is where most of rationality is. Properly mastering UDT is ~Kegan 5 (I act such that I am a proper model for those who are similar to me or wish to imitate me), which requires true and hard-to-acquire knowledge of which people’s algorithms are identical to one’s own. (For more discussion of Kegan, see “Developing ethical, social, and cognitive competence” and “Reconciling Inside and Outside view of Conflict and Identity.”)

R: But if those are the mathematical foundations of post-rationality, then it still seems to be contained within the label of “difficult parts of rationality.”

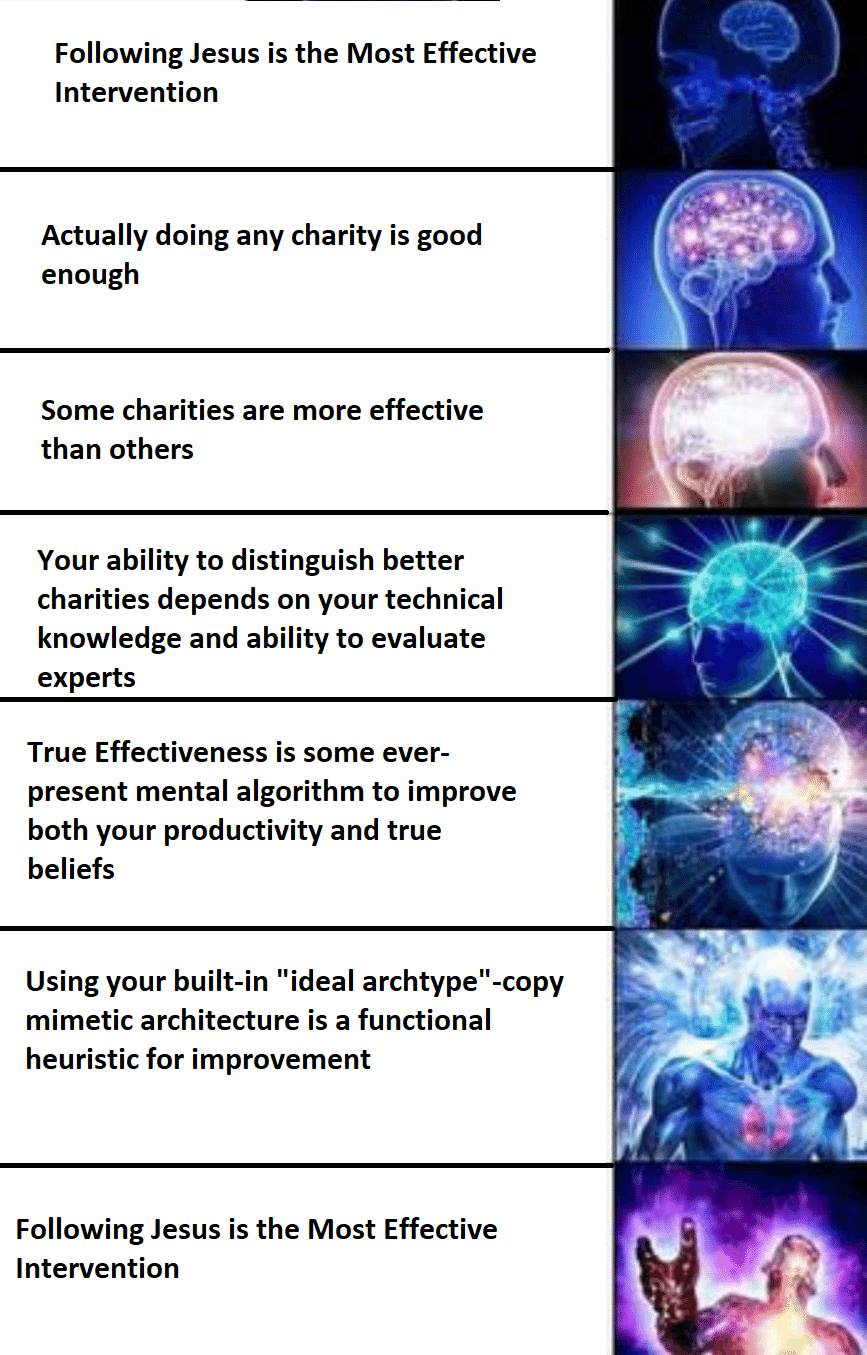

P: There is a lot more foundational mathematical content in post-rationality than in rationality, but before getting there: the primary disagreement about the mathematical foundations is over using math prescriptively versus descriptively. The questions are “here is how proper belief updates should work” versus “how is any of this brain stuff working in the first place?” The rationalist view is: this person’s actions do not look like a proper “belief update,” therefore they’re wrong! The post-rationalist view is closer to first asking what mathematical model does fit this person’s behavior or, more precisely, which particular social patterns and protocols enable and encourage this behavior. The person could still be wrong, but the causal structures of wrongness are worth identifying. More importantly, “rightness” should be a plausibly executable program in the human brain, so the rightness of human action and belief algorithms could be better approximated with “do what this ideal human is doing” than with “approximate this theoretical framework.” Humans do not have that great a time with commands such as “approximate this math,” compared to “approximate this guy.” Not that “approximate this math” isn’t a useful skill per se.

R: But there are clear examples of people being wrong. A person claiming that both drowning and not drowning are evidence of witchcraft is wrong on every foundation. In general, to be wrong implies a standard by which a set of beliefs can be judged. This standard needs to be coherent and consistent; otherwise everything can be wrong and right simultaneously. Given Cox’s theorem, the only consistent way to structure beliefs is through probability.

P: Of course the witchcraft guy is wrong. Of course a standard of rightness and wrongness needs to be consistent. The trouble arises from two directions: when there are explicit challenges to the theory from within the theory, and when its conclusions conflict with common sense. The theoretical trouble with Bayesianism is that it is possible to break the abstraction of probability with something as simple as the Sleeping Beauty problem. Suddenly the neat question “what is the probability of heads?” is no longer so simple, and we must rely on other common-sense arguments to decide how to approach the situation. There are also several theoretical problems with approximating Bayesian updates and actions in an embedded, limited agent that also needs to reason about logical facts, which raises the question of whether this theoretical framework is a good starting place at all.
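For readers unfamiliar with it: in the Sleeping Beauty problem a fair coin is tossed; on heads Beauty is woken once, on tails she is woken twice (Monday and Tuesday) with her memory of the first waking erased, and at each waking she is asked her credence that the coin landed heads. The Monte Carlo sketch here (the function name is hypothetical) does not settle the debate. It only shows why the question stops being neat: counting heads per experiment gives about 1/2, counting per awakening gives about 1/3, and the probability formalism by itself doesn't say which count a waking credence should track.

```python
import random


def sleeping_beauty(trials: int, seed: int = 0) -> tuple[float, float]:
    """Monte Carlo sketch of the Sleeping Beauty setup.

    Heads: Beauty is woken once. Tails: she is woken twice with no memory
    of the first waking. Returns the fraction of heads counted per
    experiment and counted per awakening.
    """
    rng = random.Random(seed)
    heads_experiments = 0
    total_awakenings = 0
    for _ in range(trials):
        heads = rng.random() < 0.5
        total_awakenings += 1 if heads else 2
        if heads:
            heads_experiments += 1  # exactly one awakening follows heads
    heads_awakenings = heads_experiments
    return heads_experiments / trials, heads_awakenings / total_awakenings


per_experiment, per_awakening = sleeping_beauty(200_000)
print(f"per experiment: {per_experiment:.3f}, per awakening: {per_awakening:.3f}")
```

With this many trials the two printed fractions land close to 0.5 and 0.333, the “halfer” and “thirder” answers respectively.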

From a post-rationalist and common-sense perspective, it’s also possible to break probability abstractions through the ideas of “belief as a tool” or “self-fulfilling confidence,” where a seemingly irrational belief in success can be helpful.
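One way to formalize “self-fulfilling confidence” is to let the chance of success depend on the agent’s own belief. This sketch assumes a made-up linear model, success probability = 0.3 + 0.5 * belief; the numbers and all function names are hypothetical. A calibrated belief must then be a fixed point b = f(b), which here is 0.6, while an overconfident belief of 0.9 is miscalibrated (its actual success rate is 0.75) yet succeeds more often than the calibrated one.

```python
from typing import Callable


def success_probability(belief: float) -> float:
    """Hypothetical model: confidence raises the chance of success linearly."""
    return 0.3 + 0.5 * belief


def calibrated_belief(f: Callable[[float], float], start: float = 0.5,
                      iterations: int = 100) -> float:
    """Find a belief b with b == f(b) by fixed-point iteration.

    Converges for this f because its slope (0.5) is below 1.
    """
    b = start
    for _ in range(iterations):
        b = f(b)
    return b


calibrated = calibrated_belief(success_probability)
print(round(calibrated, 6), round(success_probability(calibrated), 6))  # → 0.6 0.6
print(round(success_probability(0.9), 6))  # → 0.75
```

The design choice worth noting is that calibration alone only pins the belief to a fixed point of f; it says nothing about whether a different, miscalibrated belief would lead to better outcomes.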

R: “Belief as a tool” sounds like a good way to shoot yourself in the foot by trying to outsmart your own updating process, supplying the “belief” as the answer instead of the update procedure.

P: So can rationality: it can be a good way to shoot yourself in the foot by trying to force a procedure rather than following the existing meta-procedure. Now, I don’t mean to be too negative here. Post-rationality is not “anti-rationality.” As the name implies, it’s still important for people to first learn rationality, or a similar systematic way of thinking, well enough to pass the Ideological Turing Test for it. Then, and only then, is it a good idea to move on to a fuzzier way of reasoning.

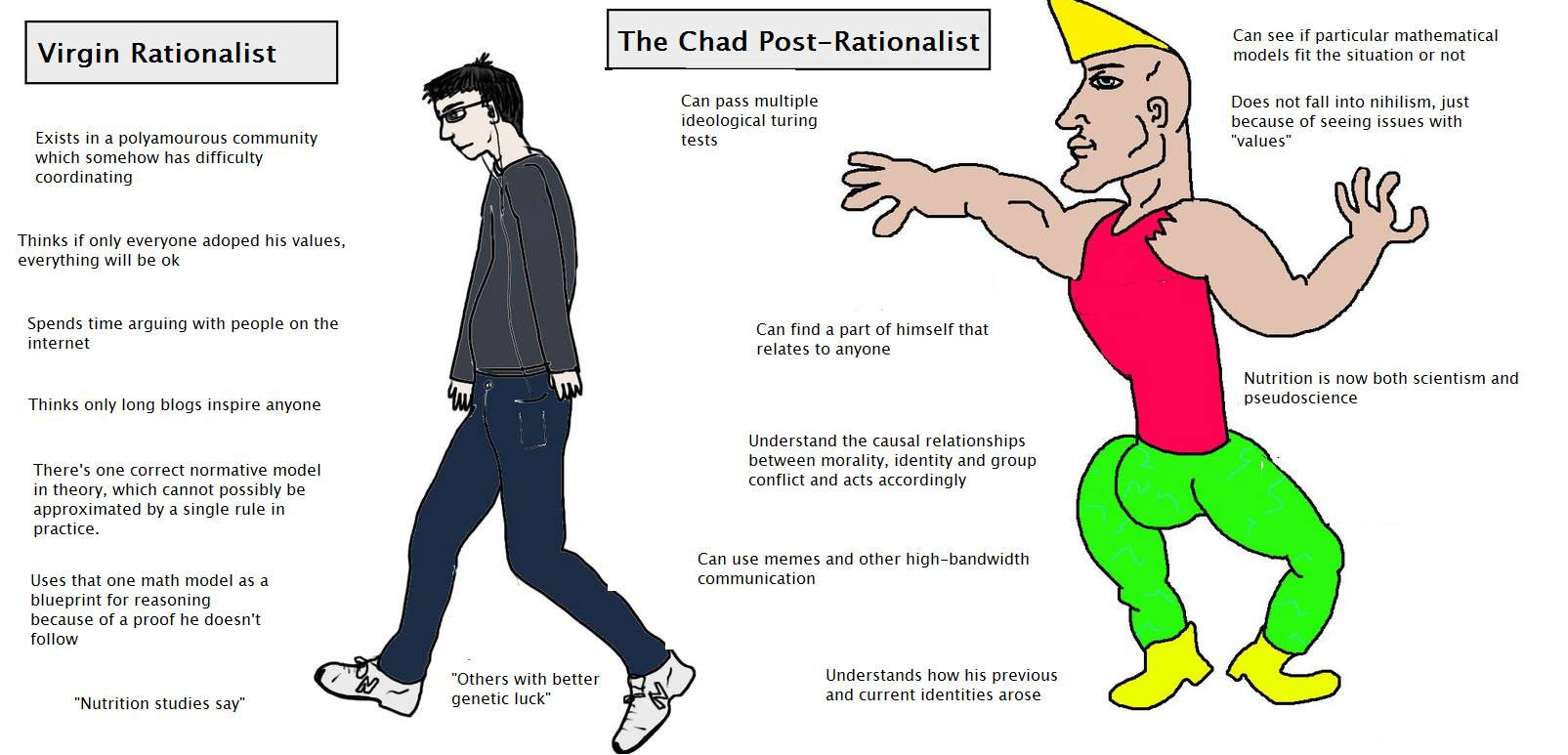

That said, there are several other memes in the rationality community that are probably actively harmful to further development. Polyamory likely causes a lot of friction and an inability to coordinate. So learning theoretical rationality is all well and good, but absorbing many of the adjacent ideas could be harmful.

R: But coming back to the original question: besides “conflict causes identity,” “super-rationality,” and “descriptive mathematics,” what other positive formal ideas does post-rationality propose?

P: If I had to carve out the mathematical foundation of post-rationality, it would be roughly equivalent to all of math minus Bayesian probability theory. I can imagine a parallel universe with a different form of “logical rationality,” where people hold that thinking is about making correct logical inferences over beliefs. They could view probabilistic statements such as “it’s going to rain with 40% probability” as something to assign truth or falsehood to, rather than assigning probabilities directly. You can implement Bayesian reasoning on top of this framework if you really want to.
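As a rough illustration of building probability on top of a logical framework, one common construction treats each possible world as a truth assignment and a probability as a weighted count of the worlds in which a proposition is true; conditioning on evidence then just filters out the worlds where the evidence is false. The propositions, weights, and function names below are hypothetical. In this setup, “it’s going to rain with 40% probability” becomes a statement that is simply true or false.

```python
from fractions import Fraction

# Hypothetical worlds: (cloudy, rain) truth assignments with integer weights,
# i.e. how many equally likely cases each world stands for.
WORLDS = {
    (True, True): 3,
    (True, False): 2,
    (False, True): 1,
    (False, False): 4,
}


def is_rain(cloudy: bool, rain: bool) -> bool:
    return rain


def is_cloudy(cloudy: bool, rain: bool) -> bool:
    return cloudy


def always(cloudy: bool, rain: bool) -> bool:
    return True


def probability(query, given=always) -> Fraction:
    """Weight of worlds where `given` and `query` hold, divided by the
    weight of worlds where `given` holds (conditioning = filtering)."""
    kept = {world: n for world, n in WORLDS.items() if given(*world)}
    total = sum(kept.values())
    hits = sum(n for world, n in kept.items() if query(*world))
    return Fraction(hits, total)


# "It's going to rain with 40% probability" is now a true-or-false claim.
print(probability(is_rain) == Fraction(2, 5))  # → True
print(probability(is_rain, given=is_cloudy))  # → 3/5
```

The table of worlds grows as 2^n in the number of propositions, which is one concrete form of the inefficiency R objects to next.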

R: But this is an extremely inefficient way to accomplish probabilistic reasoning.

P: But making an argument about the “efficiency” of human computation moves away from the idea of “laws of reasoning” and toward the idea of “the kinds of laws of reasoning that work on human hardware,” which is a more meta worldview. More broadly, you can use a lot of math to model many processes inside a human or a society and create different visions of “proper” reasoning based on those models. The important piece, however, is still a feedback loop of “common sense” that lets you know when you have gone too far. Otherwise you could end up with ideas as silly as destroying all of nature to signal your “compassion.”

On a more positive note, the simplest analogy here is:

post-rational thinking about thinking : all of math :: rational thinking about thinking : Bayesian theory.

Post-rational thinking about thinking is also not the whole of post-rationality. Some people are better theoreticians; some people are better decision makers.

R: It still seems that some of post-rationality comes from a desire to socially distance oneself from rationalists, rationalist leadership, or particular aspects of the rationality community, such as polyamory.

P: Well, that’s kind of half of a post-rationalist insight. The other half is that such a desire for distancing is not evidence against the validity of the theory, but rather evidence for it. There are frequent tensions within the community: people feel neglected, or feel that the competition for prestige is too undermining. You could trace these to certain “causes,” such as selecting from pools of people with certain personality quirks, or certain forms of social organization.

You can also look a little deeper and see that it’s not just the frame of “beliefs with probabilities” that can get you into trouble. The frame of the “individual” itself can become problematic when overused. To be fair, this is not exclusive to rationality, but rather a common thread that cuts across nearly all Kegan 4 philosophies. EA, libertarianism, and Jordan Peterson’s following also have an “individualist” bent. And while saving kids from malaria, not relying on handouts, and cleaning your room are useful things to do, just as statistics is a useful thing to know, the “end game” isn’t really there. For example, overemphasizing the individual’s capacity for action pushes leaders away from defining clear roles or assigning tasks directly.

A “free” marketplace of status, untouched by either strong norms or strong leadership, is different from a marketplace of money and has many more ways to fail and degenerate into ever-escalating conflicts over minute things. Certainly a group of motivated people using lots of willpower can still potentially accomplish a project in between status fights over who can go even more meta, but in the end most of them are going to be asking themselves: how could we do this better?

The point here is not to dismiss individualism entirely. It is yet another raft you use to cross the river of the modern world. The point of post-rationality is to show a person who has learned rationality and found themselves attuned to its premises, but averse to its “logical” and “practical” conclusions, that there isn’t something wrong with them; rather, there are better philosophies to follow.

2 comments

comment by Sewblon · 2020-12-23T18:01:55.482Z

But isn't this all self-defeating? If the post-rationalist is right, then they are just one side of the dyadic conflict between themselves and rationalists. So from an outsider's perspective, neither is more correct than the other, any more than, from the post-rationalist's perspective, a group with the set of values {X} is more correct than a group with the set of values {Y}. From the rationalist's perspective, any attempt by the post-rationalist to defend their position will either be outside the bounds of rationality, and therefore pointless, or within the bounds of rationality, and therefore self-contradictory, because it uses reason to argue against reason. You can't use reason at all if reason is invalid in the case you are concerned with. But if reason is valid in the case you are concerned with, then the rationalist stance is the correct stance by definition. There doesn't seem to be any way to justify converting either the rationalist or the outsider with no interest in the conflict to post-rationalism. I know that, descriptively, there are social processes that lead people to become post-rationalists. However, that tells us nothing about the merits of post-rationalism.

comment by Gordon Seidoh Worley (gworley) · 2018-11-13T20:25:40.951Z

I liked a lot of this and appreciate the format.

One of the most pernicious issues I've encountered in these discussions is the conversation that goes "hey, look at this cool thing that rationality isn't very good at; I'm pretty sure we can do better," and the response is usually "so what, that's already part of rationality, and any failure to achieve it is due to people still being aspiring rationalists." I'm not sure it's possible to convey in words why this looks like a mistake from the post-rationalist side, because from the other side it looks like trying to cut off a part of the art that you as a rationalist already have access to. All I can offer by way of evidence that this is a reasonable view from the post-rationalist side is that people who end up in the post-rationalist camp generally have a story like "oh, yeah, I used to think that, but then this one thing flipped my thinking, everything clicked, and I was surprised at how dramatically little I understood or was capable of before," and this is happening to people who were already trained as rationalists.