Decision Theories: A Semi-Formal Analysis, Part II

post by orthonormal · 2012-04-06T18:59:35.787Z · LW · GW · Legacy · 28 comments

Or: Causal Decision Theory and Substitution

Previously:

0. Decision Theories: A Less Wrong Primer

1. The Problem with Naive Decision Theory

Summary of Post: We explore the role of substitution in avoiding spurious counterfactuals, introduce an implementation of Causal Decision Theory and a CliqueBot, and set off in the direction of Timeless Decision Theory.

In the last post, we showed the problem with what we termed Naive Decision Theory, which attempts to prove counterfactuals directly and pick the best action: there's a possibility of spurious counterfactuals which lead to terrible decisions. We'll want to implement a decision theory that does better; one that is, by any practical definition of the words, foolproof and incapable of error...

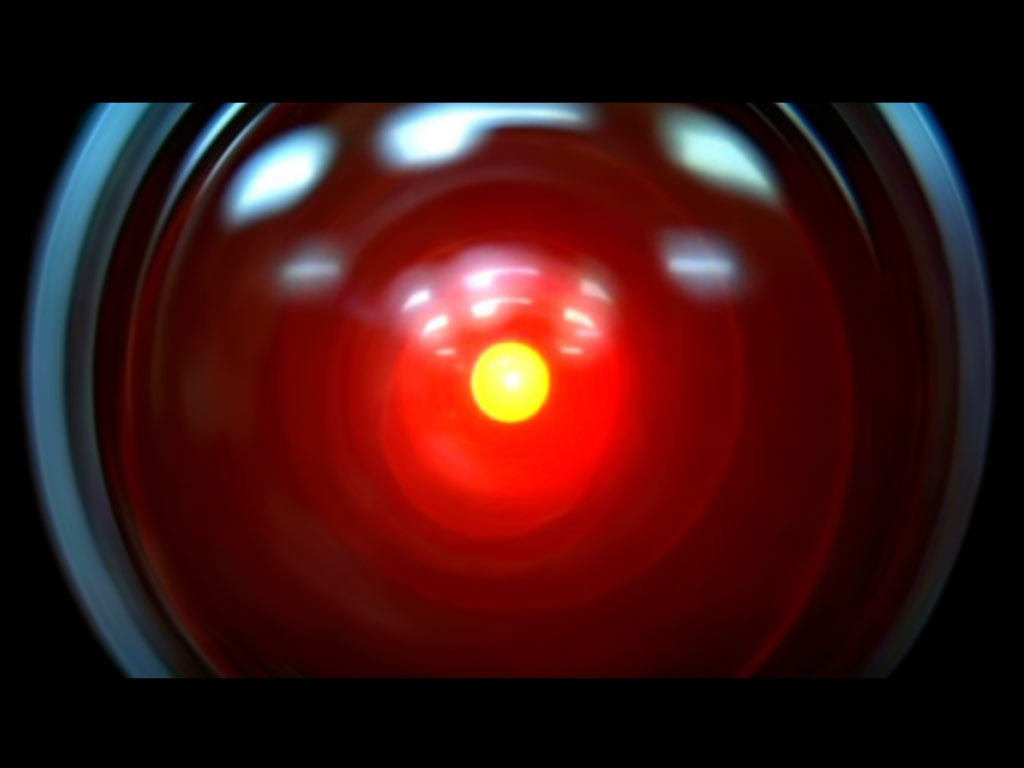

I know you're eager to get to Timeless Decision Theory and the others. I'm sorry, but I'm afraid I can't do that just yet. This background is too important for me to allow you to skip it...

Over the next few posts, we'll create a sequence of decision theories, each of which will outperform the previous ones (the new ones will do better in some games, without doing worse in others [0]) in a wide range of plausible games.

Let's formalize the setup a bit more: we've written a program X, which is paired with another program Y in a game G. Both X and Y have access to the source codes of X, Y, and G, and G calculates the payouts to the programmers of X and of Y in terms of their outputs alone. That is, X and Y should be functions of two inputs, and G should do the following:

- Calculate the output of X(code G, 1, code Y). [1]

- Calculate the output of Y(code G, 2, code X).

- From these values, calculate the payoffs to the programmers.

We write the expected payout to X's programmer as U, by which we mean U(output X, output Y), by which we really mean U(output X(code G, 1, code Y), output Y(code G, 2, code X)); similarly, we'll write V as the expected payout to Y's programmer. We'll need to use quining in order for G to feed its own source code into X and Y, but this is straightforward. (I said earlier that X should have access to its own source code as well, but the programmer can do this via quining, with no help from G.)
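To make the setup concrete, here is a minimal Python sketch of what G does. It is only an illustration under simplifying assumptions: programs are modeled as ordinary functions, source codes as placeholder strings (no real quining), and the names `run_game`, `cooperate_bot`, `defect_bot` and the payoff numbers are all hypothetical.

```python
from typing import Callable, Dict, Tuple

# Assumption: a "program" is modeled as a Python function taking
# (source of G, player index, source of the opponent) and returning an action.
Program = Callable[[str, int, str], str]
Payoffs = Dict[Tuple[str, str], Tuple[float, float]]


def run_game(code_g: str, x: Program, code_x: str,
             y: Program, code_y: str, payoffs: Payoffs) -> Tuple[float, float]:
    """Play G once; return (U, V), the payoffs to X's and Y's programmers."""
    out_x = x(code_g, 1, code_y)    # X plays as player 1 and sees Y's source
    out_y = y(code_g, 2, code_x)    # Y plays as player 2 and sees X's source
    return payoffs[(out_x, out_y)]  # payoffs depend on the two outputs alone


def cooperate_bot(code_g: str, player: int, code_opp: str) -> str:
    return "C"  # ignores every input


def defect_bot(code_g: str, player: int, code_opp: str) -> str:
    return "D"  # ignores every input


# Illustrative Prisoner's Dilemma payoffs (the numbers are made up).
PD: Payoffs = {("C", "C"): (2, 2), ("C", "D"): (0, 3),
               ("D", "C"): (3, 0), ("D", "D"): (1, 1)}

u, v = run_game("<code of G>", cooperate_bot, "<code of X>",
                defect_bot, "<code of Y>", PD)
print(u, v)
```

The interesting programs, of course, are the ones that actually inspect `code_opp`; the two bots here only show the plumbing.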

We're also assuming that X is equipped with a valid inference module that deduces some true statements and no false ones; it can return "true", "false" or "unknown" when given a statement. (One such example is an automated theorem prover which gives up after a certain length of time.)

Last time, we tried the most straightforward idea—trying to directly prove counterfactuals about the value of U for various outputs of X—but we ran into the problem of spurious counterfactuals, which are logically true but practically misleading. The various decision theories we'll discuss each represent different ways of getting around this obstacle.

Idea 3: Substitute to Avoid Self-Fulfilling Prophecies

Our spurious counterfactuals were harmful because of the possibility of a self-fulfilling prediction; one way to avoid such a thing is to base your output only on deductions about statements that are in some sense "independent" of the final output. We'll illustrate this principle by doing a simple task that Naive Decision Theory failed to do: infer the correct payoff matrix for G from its source code.

For each pair of outputs (x_i, y_j), we can simply substitute x_i for (output X) and y_j for (output Y) in the code for G, obtaining unconditional deductions of U(x_i, y_j) and V(x_i, y_j). [2] Note that this is not the counterfactual "if (output X)=x_i and (output Y)=y_j then U=u_ij", but instead a simple mathematical object that doesn't depend on the actual values of (output X) and (output Y)!
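A small Python sketch of the substitution idea, assuming we have already isolated the part of G that maps a pair of outputs to payoffs (the hypothetical `payoff_rule` below, with made-up numbers). The point to notice is that neither X nor Y is ever run: we only plug candidate outputs into the rule.

```python
from itertools import product
from typing import Callable, Dict, Sequence, Tuple

Pair = Tuple[str, str]


def payoff_rule(out_x: str, out_y: str) -> Tuple[int, int]:
    """Hypothetical payoff step of G: (U, V) as a function of the two outputs."""
    table = {("C", "C"): (2, 2), ("C", "D"): (0, 3),
             ("D", "C"): (3, 0), ("D", "D"): (1, 1)}
    return table[(out_x, out_y)]


def payoff_matrix(rule: Callable[[str, str], Tuple[int, int]],
                  xs: Sequence[str], ys: Sequence[str]) -> Dict[Pair, Tuple[int, int]]:
    """Substitute every pair (x_i, y_j) into the rule; no program is executed."""
    return {(x, y): rule(x, y) for x, y in product(xs, ys)}


matrix = payoff_matrix(payoff_rule, ["C", "D"], ["C", "D"])
```

Each entry of `matrix` is an unconditional fact about G, so it cannot be made true or false by whatever X eventually outputs.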

This is a powerful enough principle that, if we use it properly, we arrive at a formalized version of the hallowed classical theory:

Causal Decision Theory: No Longer Naive

X can always simply play a default Nash equilibrium strategy, but there's an obvious case where we want X to depart from it: if the output of Y is predictable, X can often gain more by stepping away from its equilibrium to exploit Y's stupidity (or coordinate with it on a different equilibrium). We might program X as follows:

- Try to deduce the output of Y(code G, 2, code X); note that this may be a mixed strategy.

- If this succeeds, output the x_i which maximizes U(x_i, (output Y)). (There must be at least one pure strategy which does so.)

- Otherwise, output a default Nash equilibrium.
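The three steps above can be sketched in Python as follows. This is an illustration, not a full implementation: the inference module is replaced by a hypothetical callback `deduce_y` that returns either Y's (possibly mixed) strategy or `None` for "unknown", and the default Nash equilibrium action is simply passed in.

```python
from typing import Callable, Dict, Optional, Sequence, Tuple

Mixed = Dict[int, float]  # opponent strategy: action -> probability


def cdt_choose(u: Dict[Tuple[int, int], float], xs: Sequence[int],
               deduce_y: Callable[[], Optional[Mixed]], default: int) -> int:
    """Best-respond to Y's deduced output, else fall back to the default."""
    predicted = deduce_y()      # step 1: try to deduce the output of Y
    if predicted is None:       # step 3: deduction failed
        return default

    def expected_u(x: int) -> float:
        return sum(p * u[(x, y)] for y, p in predicted.items())

    return max(xs, key=expected_u)  # step 2: a pure best response


# Row player's payoffs in a coordination game (rows and columns are 1 or 2).
U = {(1, 1): 2, (1, 2): 0, (2, 1): 0, (2, 2): 1}

vs_column_2 = cdt_choose(U, [1, 2], lambda: {2: 1.0}, default=1)
vs_unknown = cdt_choose(U, [1, 2], lambda: None, default=1)
```

Against an opponent known to play column 2, the sketch leaves its default row 1 and plays row 2; when nothing can be deduced, it stays with the default.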

Note that even though there is some circularity involved (Y may base its output on the code of X), this isn't like a spurious counterfactual, because the deduction of U(x_i, (output Y)) is independent of the output of X. In particular, if X succeeds at deducing the output of Y, then that really will be the output of Y in the game (presuming, as usual, that the inference module of X is valid).

This decision theory acts optimally in "one-player games" where the output of Y is independent of the source code of X (and can be deduced by X's inference module), and in zero-sum two-player games as well. Finally, X is un-exploitable in the following sense: if (x_i, y_j) is the Nash equilibrium with the lowest value for U(x_i, y_j), then U(X,Y) ≥ U(x_i, y_j) or V(X,Y) ≤ V(x_i, y_j). (Namely, if Y is trying to maximize its own payoff, then X should wind up with at least the value of its worst Nash equilibrium.)

In a philosophical sense, X does "consider" Y's inference of (output X) as a factor in deducing (output Y), but it considers it as if Y were instead inferring the output of some unrelated program, and in particular there's no "feedback" from this consideration to the second step. X acts as if its own eventual output cannot cause Y's output; that is, we've written an instance of Causal Decision Theory. [3]

We can also see that if a dominant strategy exists (like two-boxing in Newcomb's Problem [4] or defecting in the Prisoner's Dilemma), this CDT algorithm will always pick it. We should ask at this point: in our situation with perfect knowledge of source codes, can we do better than the classical solution?
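For readers who want to check the dominance claim on a specific matrix, here is a hedged Python sketch. The helper `dominant_strategy` is hypothetical; it looks for an action that is a best response to every opponent action (weak dominance), using X's payoffs only. The Newcomb numbers are X's payoffs from the game N in note 4, and the Prisoner's Dilemma numbers are made up.

```python
from typing import Dict, Hashable, Optional, Sequence, Tuple


def dominant_strategy(u: Dict[Tuple[Hashable, Hashable], float],
                      xs: Sequence[Hashable],
                      ys: Sequence[Hashable]) -> Optional[Hashable]:
    """Return an action that is a best response to every y, or None."""
    for x in xs:
        if all(u[(x, y)] >= u[(other, y)] for y in ys for other in xs):
            return x
    return None


# X's payoffs only. PD numbers are illustrative; N is taken from note 4.
U_PD = {("C", "C"): 2, ("C", "D"): 0, ("D", "C"): 3, ("D", "D"): 1}
U_NEWCOMB = {(1, 1): 100, (1, 2): 0, (2, 1): 101, (2, 2): 1}
U_COORD = {(1, 1): 2, (1, 2): 0, (2, 1): 0, (2, 2): 1}

pd_choice = dominant_strategy(U_PD, ["C", "D"], ["C", "D"])
newcomb_choice = dominant_strategy(U_NEWCOMB, [1, 2], [1, 2])
coord_choice = dominant_strategy(U_COORD, [1, 2], [1, 2])
```

Whatever Y is deduced to play, a best response can always be taken to be the dominant action, which is why the CDT algorithm defects in the first game and picks row 2 (two-boxing) in the second. The coordination game has no dominant action, so the helper returns `None`.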

Cliquish Decision Theory: Better, but Not Good Enough

We can, of course. There's at least one case where defecting on the Prisoner's Dilemma is clearly a bad idea: if the source code of Y is identical to the source code of X. So we can upgrade X by adding an additional rule: If the payoff matrix is a prisoner's dilemma (a well-defined subset of payoff matrices), and the code of Y equals the code of X, then cooperate. (I've called algorithms like this CliqueBots before, since they cooperate only with themselves and defect against everyone else.)
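A minimal Python sketch of this extra rule, assuming the Prisoner's Dilemma test and the CDT fallback are computed elsewhere and passed in (the function and parameter names are hypothetical):

```python
def cliquebot(code_self: str, code_opp: str,
              game_is_pd: bool, cdt_action: str) -> str:
    """Cooperate only with an exact copy in a Prisoner's Dilemma;
    otherwise do whatever Causal Decision Theory would do."""
    if game_is_pd and code_opp == code_self:
        return "C"
    return cdt_action


MY_CODE = "def x(...): ..."  # placeholder for X's quined source

same = cliquebot(MY_CODE, MY_CODE, game_is_pd=True, cdt_action="D")
reformatted = cliquebot(MY_CODE, MY_CODE + " ", game_is_pd=True, cdt_action="D")
```

Note how brittle literal string equality is: in the second call a single trailing space in the opponent's source is enough to make the bot defect.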

Furthermore, we can extend this to cases where the source codes are different but equivalent; let X do the following when given a game G and an opponent Y:

- Deduce the payoff matrix of G. If G is not a prisoner's dilemma, then implement Causal Decision Theory.

- If G is a prisoner's dilemma, try to deduce "for all opponents Z, output X(code G, 1, code Z)=Cooperate if and only if output Y(code G, 1, code Z)=Cooperate if and only if output X(code G, 2, code Z)=Cooperate if and only if output Y(code G, 2, code Z)=Cooperate". [5]

- If this succeeds, then cooperate; else defect.

This now satisfies #1-3 of my criteria for a better decision theory (and we could add another rule so that it one-boxes on Newcomblike problems and satisfies #4), but it completely fails #5: this is a well-justified ad-hoc exception, but it's still an ad-hoc exception, and it's not that enlightening as examples go. We'll want something that achieves the same result, but for a genuine reason rather than because we've added individual common-sense patches. (One problem with patches is that they don't tell you how to solve more complicated problems: extending the analysis to three-player games, for instance.)

Furthermore, Cliquish Decision Theory misses out on a lot of potential cooperation: there are multiple strict improvements on Causal Decision Theory which cooperate with each other but don't all cooperate with the same third parties, and it's simple to show that Cliquish Decision Theory can't cooperate with any of these. So we'll need new ideas if we're going to do better.

Our next steps will be toward Timeless Decision Theory. If you've considered TDT on the philosophical level, you'll recognize the concept of substituting your (hypothetical) output for your opponent's (hypothetical) prediction of your output, and using those outcomes to decide what to do. Our setup gives us a clean general way to implement this messy idea:

Idea 4: Substitute for the Source Code of X (as an input of Y)

That is, instead of trying to deduce the output of Y(code G, 2, code X) and then pair it with each action x_i, we can deduce the output of Y(code G, 2, some other code that corresponds to x_i) and pair it with the deduced action. I'm being vague about this, partly to generate some suspense for the next post, but mostly because the most obvious way to go about it has two major flaws...

Next: Part III: Formalizing Timeless Decision Theory

Notes

0. This is not quite true for Updateless Decision Theory, which explicitly trades off worse performance in some rounds for a better average performance. But we'll get to that in due time.

Also, it's possible to write an opponent Y that "rewards stupidity" by caring about things besides X's outputs: it might let X attain its best outcome only if X is provably a causal decision theorist, for instance. But in such cases, Y isn't really optimizing its own payoffs (as listed) in any meaningful sense, and so this is not a fair problem; we're tacitly assuming throughout this analysis that X is a real attempt to optimize U and Y is a real attempt to optimize V, and both of these depend only on the outputs.

1. X and Y need to know which is player 1 in game G and which is player 2. Also, the steps of G don't have to be done in that sequential order; they can be calculated in parallel or any other sensible way. (In Part III we'll introduce an interesting game which first flips a coin to decide which one is Player 1 and which one is Player 2.)

2. In fact, the correct payoff matrix is necessary even to implement the Nash equilibrium strategy! I glossed over this before by assuming that, in addition to the source code, X was given a valid payoff matrix; but here, we see that there is a sensible way to read off the matrix from the code of G (if the inference module of X is up to the task). We'll assume from here on out that this is the case.

3. There are other variants of this CDT algorithm: for instance, X might only output argmax(U(x,(output Y))) if the value is greater than the value of X's default Nash equilibrium. This algorithm can "insist on a good result or else no deal"; for instance, in the game below, if X takes as default the equilibrium that nets xer 2, the new algorithm will refuse to settle for 1, when the old algorithm would often have done so.

| | Column 1 | Column 2 |
|---|---|---|
| Row 1 | (2,1) | (0,0) |
| Row 2 | (0,0) | (1,2) |

On the other hand, when playing an opponent that always picks the second column (i.e. a one-player game), this new algorithm will wind up with nothing! So there's a tradeoff involved, where other algorithms can get (in many cases) the best of both worlds.
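The tradeoff can be checked directly with a small Python sketch. It assumes X is the row player in the game above, that its default equilibrium is row 1 (worth 2 to X), and that the opponent's column has already been deduced. The function names are hypothetical.

```python
# X's payoffs in the game above: keys are (row, column).
U = {(1, 1): 2, (1, 2): 0, (2, 1): 0, (2, 2): 1}
ROWS = [1, 2]


def plain_cdt(predicted_col: int) -> int:
    """Original algorithm: best response to the deduced column."""
    return max(ROWS, key=lambda r: U[(r, predicted_col)])


def insisting_cdt(predicted_col: int, default_row: int, default_value: float) -> int:
    """Variant: best-respond only if that beats the default equilibrium's value."""
    best = plain_cdt(predicted_col)
    return best if U[(best, predicted_col)] > default_value else default_row


# Opponent always picks column 2 (effectively a one-player game).
plain_payoff = U[(plain_cdt(2), 2)]
insisting_payoff = U[(insisting_cdt(2, default_row=1, default_value=2), 2)]
```

Here `plain_payoff` is 1 (X settles for row 2), while `insisting_payoff` is 0 (X holds out for row 1 against an opponent that will never move).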

4. Maybe the best way to implement Newcomb's Problem in this setting is to introduce the game N as follows (X chooses the row and gets the first listed payoff):

| | Column 1 | Column 2 |
|---|---|---|
| Row 1 | (100,1) | (0,0) |
| Row 2 | (101,0) | (1,1) |

Your opponent is the predictor P, which is programmed as follows (when the game is N):

- Try to deduce "output X(code N, 1, code P)=row 1".

- If this succeeds, output column 1; else output column 2.

If you expect to face N against P, then you have every incentive to make it an easy deduction that output X(code N, 1, code P)=row 1.
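A toy Python rendering of N and P, with the predictor's deduction replaced by a hypothetical boolean flag `provably_row_1` (a stand-in for "P's inference module proved that X outputs row 1"). Since the inference module is assumed valid, the flag can only be true when X really does play row 1.

```python
# The game N: keys are (row, column), values are (payoff to X, payoff to P).
N = {(1, 1): (100, 1), (1, 2): (0, 0),
     (2, 1): (101, 0), (2, 2): (1, 1)}


def predictor(provably_row_1: bool) -> int:
    """P outputs column 1 only if it deduced that X plays row 1."""
    return 1 if provably_row_1 else 2


def payoff_to_x(row: int, provably_row_1: bool) -> int:
    col = predictor(provably_row_1)
    return N[(row, col)][0]


transparent_one_boxer = payoff_to_x(row=1, provably_row_1=True)
two_boxer = payoff_to_x(row=2, provably_row_1=False)
opaque_one_boxer = payoff_to_x(row=1, provably_row_1=False)
```

The three cases give X 100, 1, and 0 respectively: playing row 1 only pays if P can actually prove it, which is the incentive described above.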

5. There may be a better way to do this, but most simplifications will fall short of CDT in one way or another. For instance, if we just have X try to deduce "output X(code G, 1, code Y)=output Y(code G, 2, code X)", then it's quite possible for X to wind up cooperating with a CooperateBot, which is suboptimal performance on a one-player game. (A proof of that statement can be generated by Löb's Theorem.)

28 comments


comment by Jobst Heitzig · 2022-12-07T11:10:46.984Z · LW(p) · GW(p)

I just stumbled upon this and noticed that a real-world mechanism for international climate policy cooperation that I recently suggested in this paper can be interpreted as a special case of your (G,X,Y) framework.

Assume a fixed game G where

- each player's action space is the nonnegative reals,

- U(x,y) is weakly decreasing in x and weakly increasing in y.

- V(x,y) is weakly decreasing in y and weakly increasing in x.

(Many public goods games, such as the Prisoners' Dilemma, have such a structure.)

Let's call an object a Conditional Commitment Function (CCF) iff it is a bounded, continuous, and weakly increasing function from the nonnegative reals into the nonnegative reals. (Intended interpretation of a CCF C: If opponent agrees to do y, I agree to do any x that has x <= C(y))

Now consider programs of the following kind:

    C = <some CCF>
    if code(opponent) equals code(myself) except that C is replaced by some CCF D:
        output the largest x >= 0 for which there is a y <= D(x) with x <= C(y)
    else:
        output 0

Let's denote this program Z(C), where C is the CCF occurring in line 1 of the program. Finally, let's consider the meta-game where two programmers A and B, knowing G, each simultaneously choose a C and submit the program Z(C); the two programs are executed once to determine actions (x,y), and A gets U(x,y) while B gets V(x,y).
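As a rough numerical illustration of the "largest x" step (not taken from the paper), here is a Python sketch that approximates it on a grid. It relies on one observation: because C is weakly increasing, the most favorable admissible y is y = D(x), so the condition "there is a y <= D(x) with x <= C(y)" reduces to x <= C(D(x)). The function name, the example CCFs, and the search bound `x_max` are all assumptions.

```python
from typing import Callable

CCF = Callable[[float], float]  # bounded, continuous, weakly increasing


def largest_feasible_x(c: CCF, d: CCF, x_max: float, steps: int = 1000) -> float:
    """Grid approximation of the largest x in [0, x_max] for which some
    y <= d(x) satisfies x <= c(y).

    Because c is weakly increasing, the best candidate is y = d(x),
    so it suffices to test x <= c(d(x)).
    """
    best = 0.0
    for i in range(steps + 1):
        x = x_max * i / steps
        if x <= c(d(x)):
            best = x
    return best


def my_ccf(y: float) -> float:
    return min(1.0 + y / 2.0, 4.0)   # "I do up to 1, plus half of what you do"


def their_ccf(x: float) -> float:
    return min(x, 3.0)               # "I match you, up to 3"


x_star = largest_feasible_x(my_ccf, their_ccf, x_max=4.0, steps=400)
```

With these two example functions the condition becomes x <= 1 + x/2 for x <= 3, so the sketch settles on x = 2. Since C is bounded, `x_max` can be any upper bound on C.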

(In the real world, the "programmers" could be the two parliaments of two countries that pass two binding laws (the "programs"), and the actions could be domestic levels of greenhouse gas emissions reductions.)

In our paper, we prove that the outcomes that will result from the strong Nash equilibria of this meta-game are exactly the Pareto-optimal outcomes (x,y) that both programmers prefer to the outcome (0,0).

(In an N (instead of 2) player context, the outcomes of strong Nash equilibria are exactly the ones from a certain version of the underlying base game's core, a subset of the Pareto frontier that might however be empty).

I'd be interested in learning whether you think this is an interesting application context to explore the theories you discuss.

comment by duwease · 2012-04-09T18:47:33.982Z · LW(p) · GW(p)

Good article, but as always a concrete example would be beneficial to comprehension.

↑ comment by orthonormal · 2012-04-16T16:49:45.662Z · LW(p) · GW(p)

I'm sorry, duwease, but I'm afraid I couldn't do that.

More seriously, decision theories deserve a more intuitive writeup as well, but first someone needs to show that the content is actually there. So I'm focusing on that with this sequence.

comment by Dmytry · 2012-04-07T06:55:45.609Z · LW(p) · GW(p)

You forget that substitution can also be applied to instances of the source code of X inside the agent Y, which permits you to, e.g., one-box on Newcomb's: if you know Omega got your source code, substitute a value for your code's output, and then maximize the payoff on the equation involving the substituted-in variable by solving for this variable. That approach is not so self-fulfilling, because you don't assume the particular value you try equals the output of your source code (which easily creates a contradiction).

edit: nevermind, I see you are leaving it for the next post. Looking forwards to the next post. It'd be interesting what are those two enormous gaping flaws in the method used for much any sort of engineering; a good example would be engineering an AI that aims a gun.

↑ comment by orthonormal · 2012-04-07T15:08:33.019Z · LW(p) · GW(p)

> It'd be interesting what are those two enormous gaping flaws in the method used for much any sort of engineering; a good example would be engineering an AI that aims a gun.

They're not going to be flaws in most contexts, just as an automated theorem-prover doesn't need to worry about spurious deductions; it's only when you have the circularity of a program whose output might depend on its output that you need to beware this kind of thing.

Also, you're probably capable of working out the first attempt at TDT and its flaws in this context, if you want to try your hand at this sort of problem. I'm splitting the post here not because it's an especially difficult cliffhanger, but because readers' eyes glaze over when a post starts getting too long.

↑ comment by Dmytry · 2012-04-07T15:14:36.455Z · LW(p) · GW(p)

> it's only when you have the circularity of a program whose output might depend on its output that you need to beware this kind of thing.

Well, the substitutions are specifically to turn a circularity into a case of having x on both sides of some equation. We might be talking about different things. The failure mode is benign; you arrive at x=x.

edit: ahh, another thing. If you have source of randomness, you need to consider the solution with, and without, the substitution, as you can make substitution invalid by employing the random number generator. The substitution of the nonrandom part of strategy can still be useful though. Maybe that's what you had in mind?

↑ comment by orthonormal · 2012-04-08T17:06:50.178Z · LW(p) · GW(p)

> If you have source of randomness, you need to consider the solution with, and without, the substitution, as you can make substitution invalid by employing the random number generator.

Err, I'm not sure what you mean here. In the CDT algorithm, if it deduces that Y employs a particular mixed strategy, then it can calculate the expected value of each action against that mixed strategy.

(For complete simplicity, though, starting next post I'm going to assume that there's at least one pure Nash equilibrium option in G. If it doesn't start with one, we can treat a mixed equilibrium as x_{n+1} and y_{m+1}, and fill in the new row and column of the matrix with the right expected values.)

↑ comment by orthonormal · 2012-04-07T15:24:47.180Z · LW(p) · GW(p)

I mean this sort of circularity:

> The calculator computes "What is 2 + 3?", not "What does this calculator compute as the result of 2 + 3?" The answer to the former question is 5, but if the calculator were to ask the latter question instead, the result could self-consistently be anything at all! If the calculator returned 42, then indeed, "What does this calculator compute as the result of 2 + 3?" would in fact be 42.

I agree that some forms are benign. The Naive Decision Theory post and cousin_it's followup illustrate a malignant form.

↑ comment by Dmytry · 2012-04-07T15:28:13.186Z · LW(p) · GW(p)

That's why you don't let your calculator be sentient. FAI might give a number that makes you most happy, which might well be 42 if you are not relying on this number for anything useful. (E.g. it might tell 42 as a joke, knowing that you know what 2+3 is)

Edit: you can, however, have some requirements on the calculator's output, and then there will be the number that satisfies those criteria; the x substitution will work to solve for this value, and in principle even to solve for protective measures to take against cosmic rays, and so on.

edit: and on the NDT, it doesn't one-way substitute at start. It assumes equivalence.

↑ comment by TheOtherDave · 2012-04-07T17:00:29.907Z · LW(p) · GW(p)

> FAI might give a number that makes you most happy

Sure, if it happened to be in a situation where the most valuable thing to do by my standards was make me happy. Agreed.

You seem to be implying that I should prefer to avoid this result... do you in fact believe that?

If so, can you clarify why?

A somewhat analogous real-world situation: one of Siri's possible responses to "open the pod bay doors" as a command is "We're never going to live that down, are we?" This delights me enormously, and costs nothing of consequence. Should I prefer that this result be eliminated?

↑ comment by Dmytry · 2012-04-07T17:44:53.381Z · LW(p) · GW(p)

Actually, I misunderstood his point with the calculator. He was speaking of NDT, with issues resulting from equivalence; I thought he was speaking of issues resulting from substitution. I did not mean to imply that you should avoid this result, simply that if you want your calculator to work by the decision theory I thought he was referring to, it has to have some utilities associated with outputs. And this doesn't really help make a calculating device.

↑ comment by TheOtherDave · 2012-04-07T20:57:45.011Z · LW(p) · GW(p)

Gotcha. Thanks for clarifying.

↑ comment by orthonormal · 2012-04-07T15:40:03.323Z · LW(p) · GW(p)

Who said anything about sentience? NDT, as described, is a perfectly comprehensible program that (in certain games that you or I would regard as fair tests) generates spurious counterfactuals and thus makes terrible decisions, thanks to a particular kind of circularity.

In this sequence, I'm not talking about FAI or anything beyond my current understanding, and I'm not intentionally drawing metaphors. I'm simply outlining programs which (if I could write a good automated theorem-prover) I could write myself, and comparing how they do in a straightforward tournament setting, with the twist of allowing read-access to source codes. We should be able to agree on that base level.

comment by Jonathan_Graehl · 2012-04-07T22:22:07.494Z · LW(p) · GW(p)

It seems the point of all this is to study the properties of programs that "cooperate" on one-shot games with known payoffs. So, there's no point dwelling on objections as to the practicality of any of this (how to prove that an embodied computer actually implements given source code, etc. - see Ken Thompson's C compiler/login trojan)

I'd appreciate more precision on "If G is a prisoner's dilemma".

Also, I suppose there's nothing prohibiting mixed strategies (choosing a probability distribution over your likely moves, from which the actual move is drawn) - e.g. the machine the programs run on has access to random bits.

Since I didn't actually follow your definitions carefully enough to answer, can you tell me what would be played by two identical rational CDT, Cliquish-DT, or TDT agents (or same-clique-agents) on the game:

| | Column 1 | Column 2 |
|---|---|---|
| Row 1 | (2,-1) | (0,0) |
| Row 2 | (0,0) | (0,0) |

(with (row player, column player) payoffs)

Row player will always choose row 1 (if he wants to maximize the sum of payoffs, or his own payoff). What will column player choose? Take the -1 for the good of the row player?

That is, I'm asking whether programs which self-sacrifice when faced with another member of their clique are supposed to be 'rational' under one of these theories.

If it weren't a one-shot game, I'd wonder about history - if an external force assigns a particular instance only to the sacrificial role, does it keep on sacrificing? I can only ask this informally, unfortunately.

↑ comment by orthonormal · 2012-04-08T04:42:19.610Z · LW(p) · GW(p)

> I'd appreciate more precision on "If G is a prisoner's dilemma".

We can read off the payoff matrix. G is a Prisoner's Dilemma if for each player, utility(I defect, they cooperate)>utility(we both cooperate)>utility(we both defect)>utility(I cooperate, they defect).
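Spelled out as a Python check, under the assumption that the deduced payoff matrices are stored as dictionaries keyed by (row action, column action) with actions "C" and "D" (the function name and sample numbers are hypothetical):

```python
from typing import Dict, Tuple

Matrix = Dict[Tuple[str, str], float]


def is_prisoners_dilemma(u: Matrix, v: Matrix) -> bool:
    """u is the row player's payoff, v the column player's.

    For each player we require:
    (I defect, they cooperate) > (both cooperate)
        > (both defect) > (I cooperate, they defect).
    """
    row_ok = u[("D", "C")] > u[("C", "C")] > u[("D", "D")] > u[("C", "D")]
    col_ok = v[("C", "D")] > v[("C", "C")] > v[("D", "D")] > v[("D", "C")]
    return row_ok and col_ok


U_PD = {("C", "C"): 2, ("C", "D"): 0, ("D", "C"): 3, ("D", "D"): 1}
V_PD = {("C", "C"): 2, ("C", "D"): 3, ("D", "C"): 0, ("D", "D"): 1}

pd_result = is_prisoners_dilemma(U_PD, V_PD)

# Relabeling the coordination game from note 3 with "C"/"D" fails the test.
U_COORD = {("C", "C"): 2, ("C", "D"): 0, ("D", "C"): 0, ("D", "D"): 1}
V_COORD = {("C", "C"): 1, ("C", "D"): 0, ("D", "C"): 0, ("D", "D"): 2}

coord_result = is_prisoners_dilemma(U_COORD, V_COORD)
```

Note the swapped key order in the column player's chain: from Y's point of view, "I defect, they cooperate" is the entry ("C", "D").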

↑ comment by Jonathan_Graehl · 2012-04-08T05:58:37.210Z · LW(p) · GW(p)

That seems clear. Perhaps in addition, u(c,d)+u(d,c) < 2*u(c,c), though I'm not sure if that makes a difference to your claims.

↑ comment by orthonormal · 2012-04-08T15:58:56.534Z · LW(p) · GW(p)

Again, no difference for CDT or TDT (or the version of ADT I'll present), but sometimes it matters for UDT.

↑ comment by orthonormal · 2012-04-08T04:45:15.960Z · LW(p) · GW(p)

CDT and TDT would both choose column 2 in your example. CliqueBots are dependent on ad-hoc rules, so you could program them either way. There are circumstances where UDT would play column 1 against itself; we'll get to that in a bit.

comment by patrickscottshields · 2013-04-28T01:53:56.833Z · LW(p) · GW(p)

I'd like to cite this article (or related published work) in a research project paper I'm writing which includes application of an expected utility-maximizing algorithm to a version of the prisoner's dilemma. Do you have anything more cite-able than this article's URL and your LW username? I didn't see anything in your profile which could point me towards your real name and anything you might have published.

comment by Rhwawn · 2012-04-06T19:03:40.505Z · LW(p) · GW(p)

Downvoted; too heavy on math.

↑ comment by Matt_Simpson · 2012-04-06T20:53:32.552Z · LW(p) · GW(p)

We have plenty of math-less posts on LW. Sometimes an idea just requires a more formal discussion to be understood properly and many of these we would like to discuss on LW. On the margin, I think LW could benefit from a bit more math so that we can discuss some of these ideas more adequately.

↑ comment by Jonathan_Graehl · 2012-04-07T22:30:51.087Z · LW(p) · GW(p)

I'd support a disclaimer early on about the prerequisites, so people without them don't waste their time. There are some hyperlinks, but not enough (I guess you could search Wikipedia yourself).

People should know how much time they're going to need to spend on a post in order to understand it.

↑ comment by orthonormal · 2012-04-08T18:43:31.535Z · LW(p) · GW(p)

Good idea: I'm adding links to game theory and Nash equilibria to the sequence summary (on the previous post). Can you suggest some prerequisites I didn't think of?

↑ comment by WrongBot · 2012-04-06T19:28:51.890Z · LW(p) · GW(p)

Please stop making these little explanatory comments. They're obnoxious, unhelpful, and their karma ratings should indicate to you that they are not well-liked.

↑ comment by EStokes · 2012-04-07T01:09:19.667Z · LW(p) · GW(p)

But then one wouldn't be able to provide negative reinforcement for his downvotes...?

↑ comment by Jonathan_Graehl · 2012-04-07T22:28:23.986Z · LW(p) · GW(p)

Surely the cranks voting up/down only on math-heaviness are just noise.

But I do admit that yours is a clever observation. :)