SBF, Pascal's Mugging, and a Proposed Solution

post by Cole Killian (cole-killian) · 2022-11-18T18:39:48.823Z · LW · GW · 5 comments

This is a link post for https://colekillian.com/posts/sbf-and-pascals-mugging/

Contents

- Overview

- Pascal's Mugging

- Dissolving the Question

- An Alternative Function: Log

- What Property Are We Missing?

- A Proposed Solution: The Truncated Bounded Risk Aversion Function

- Notes

- Back To SBF

- Concepts

- Links

- 5 comments

There are some uncommon concepts in this post. See the links at the bottom if you want to familiarize yourself with them. Also, thanks to matt and simon for inspiration and feedback.

Overview

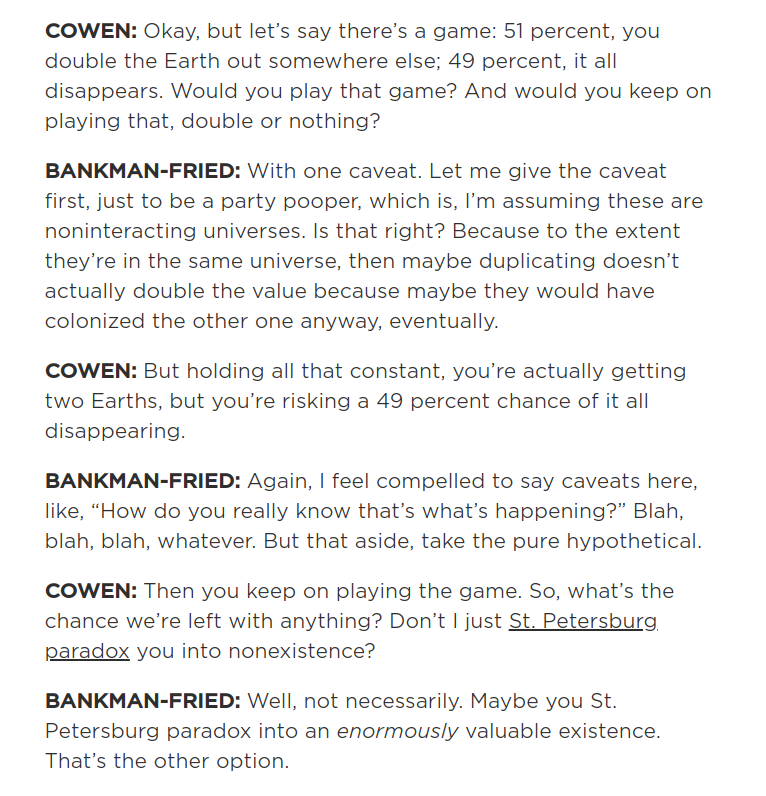

Recently there's been discussion over how SBF answered Tyler Cowen's question about the St. Petersburg paradox:

The main idea behind the question is to see how SBF responds to the following apparent paradox:

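- The game offers a positive expected value return of $0.51 \times 2 + 0.49 \times 0 - 1 = 0.02$ per round (a 51% chance of doubling, a 49% chance of everything disappearing).

- It's clear that if you play this game long enough, you will wind up with nothing. After 10 rounds, there is just a $0.51^{10} \approx 0.12\%$ chance that you haven't blown up.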

How is it that a game with a positive EV return can be so obviously "bad"?
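A quick numerical check of the tension, as a minimal sketch. It assumes the game as it is usually quoted (each round is a 51% chance of doubling and a 49% chance of losing everything); the function name is made up for illustration.

```python
def play_repeatedly(rounds, p_win=0.51, multiplier=2.0):
    """Return (expected multiple of starting value, chance of not being wiped out).

    Assumes every round stakes everything: win -> value * multiplier, lose -> 0.
    """
    survival = p_win ** rounds                        # must win every single round
    expected_multiple = survival * multiplier ** rounds
    return expected_multiple, survival


for n in (1, 10, 100):
    ev, alive = play_repeatedly(n)
    print(f"{n:>3} rounds: EV multiple = {ev:.3f}, P(not wiped out) = {alive:.3g}")
```

The expected multiple keeps growing with the number of rounds while the chance of still having anything shrinks toward zero, which is exactly the conflict described above.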

Pascal's Mugging

A related question is that of Pascal's mugging. You can think of Pascal's mugging as similar to playing Tyler Cowen's game 100 times in a row. This results in a game with the following terms:

- A $0.51^{100} \approx 5.7 \times 10^{-30}$ chance of multiplying our current utility by $2^{100} \approx 1.27 \times 10^{30}$.

- A $1 - 0.51^{100}$ chance of everything disappearing.

Should you play this game? Most would respond "no", but the arithmetic EV is still in your favor. What gives?
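To see why the arithmetic EV still favors playing, here is the calculation spelled out, assuming each round is a 51% chance of doubling and a 49% chance of losing everything. The only nonzero outcome is winning all 100 rounds:

$$
\mathbb{E}[\text{multiplier}] = 0.51^{100} \times 2^{100} + \left(1 - 0.51^{100}\right) \times 0 = 1.02^{100} \approx 7.24
$$

So the expected value is roughly seven times your starting utility, even though the chance of ending up with anything at all is about $5.7 \times 10^{-30}$.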

Dissolving the Question

The question underlying most discussions of these types of paradoxes is what utility function you should be maximizing.

If your goal is to maximize the increase in expected value, then you should play the game. There's no way around the math.

Even though the chance of winning is tiny, the unimaginable riches you would acquire if you got lucky and won push the EV to be positive.

That being said, the formula associated with expected value, the arithmetic mean, is mostly arbitrary. There's no fundamental reason why the arithmetic mean is what we should aim to maximize. We get to choose what we want to maximize.

An Alternative Function: Log

A common alternative when faced with this issue is to maximize the log of some value. This is a popular choice when maximizing wealth because it incorporates the diminishing marginal utility of money. Some consequences of a log utility function include:

- Valuing an increase in wealth from $100,000 to $1M just as much as an increase from $1M to $10M

- Valuing a potential increase in wealth of $100 as not worth a potential loss of $100 at even odds.

- Valuing a loss leaving you with $0 dollars as completely unacceptable no matter the odds.
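The three consequences above can be checked directly. This is a small sketch; the starting wealth of $1,000 and the 0.1% ruin probability are arbitrary numbers chosen for illustration.

```python
import math


def log_utility(wealth):
    """Log utility of wealth; zero wealth is treated as infinitely bad."""
    return math.log(wealth) if wealth > 0 else float("-inf")


# 1. Equal ratios are valued equally: $100k -> $1M versus $1M -> $10M.
gain_a = log_utility(1_000_000) - log_utility(100_000)
gain_b = log_utility(10_000_000) - log_utility(1_000_000)
print("10x gains:", gain_a, gain_b)

# 2. An even-odds bet of +/- $100 from an assumed $1,000 is worth less than standing pat.
start = 1_000
even_bet = 0.5 * log_utility(start + 100) + 0.5 * log_utility(start - 100)
print("even bet vs. no bet:", even_bet, log_utility(start))

# 3. Any chance of ending at $0 dominates, no matter how good the upside.
ruin_bet = 0.999 * log_utility(10**9) + 0.001 * log_utility(0)
print("bet with a 0.1% chance of $0:", ruin_bet)
```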

While a log function is helpful and can give us a reason to turn down some Pascal's mugging scenarios, it's not the perfect solution because it won't turn down games with exponentially good odds. For example:

- Let your wealth be $w$.

- Let there be a game with a small chance of returning an astronomically large multiple of $w$, and a large chance of returning only a small fraction of $w$.

A log utility function says you should play this game over and over again, but you will most definitely lose all of your money by playing it (with the slim potential for wonderful returns).

What Property Are We Missing?

To find which property we are missing, it's helpful to build a better intuition for what we are really doing when we maximize these functions.

By maximizing the arithmetic mean, we are:

- Maximizing what you would get if you played the game many times (infinitely many) at once with independent outcomes

- Maximizing the weighted sum of the resulting probability distribution (this is another way of saying the arithmetic mean)

By maximizing the geometric mean, we are doing the same as the arithmetic mean but on the log of the corresponding distribution.
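To make the difference concrete, here is a minimal sketch that computes both means for a bet described as (multiplier, probability) pairs. The example bet (50% chance of ×1.5, 50% chance of ×0.6) is hypothetical and chosen only because the two means disagree about it.

```python
import math


def arithmetic_mean(outcomes):
    """Probability-weighted sum of the outcomes."""
    return sum(p * x for x, p in outcomes)


def geometric_mean(outcomes):
    """exp of the probability-weighted sum of log outcomes (0 if ruin is possible)."""
    if any(x <= 0 and p > 0 for x, p in outcomes):
        return 0.0
    return math.exp(sum(p * math.log(x) for x, p in outcomes))


bet = [(1.5, 0.5), (0.6, 0.5)]          # hypothetical even-odds bet
print(arithmetic_mean(bet))             # above 1: looks attractive on average
print(geometric_mean(bet))              # below 1: shrinks wealth in the typical case

doubling_game = [(2.0, 0.51), (0.0, 0.49)]
print(arithmetic_mean(doubling_game), geometric_mean(doubling_game))
```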

We are taught to always maximize the arithmetic mean, but looking at what these formulas actually represent, the question that comes to mind is: why? Does that really make sense in every situation?

The problem is that these formulas average over tiny probabilities without a sweat, while we as humans only have one life to live. What we are missing is accounting for how:

- We don't care about outcomes with tiny probabilities because of how unlikely we are to actually experience them.

  - We ignore bad outcomes which have a less than 0.5% (you can change this number to fit your preferences) left tail lifetime probability of occurring and take our chances. Not doing so could mean freezing up with fear and never leaving the house. One way to think about this is that our lives follow Taleb distributions, where we ignore a certain amount of risk in order to improve everyday life. An example of this is the potential for fatal car accidents. Some of us get lucky, some of us get unlucky, and those who don't take these risks are crippled in everyday life.

  - We ignore good outcomes which have a less than 0.5% (you can change this number to fit your preferences) right tail lifetime probability of occurring to focus on things we are likely to experience. Not doing so could mean fanaticism towards some unlikely outcome, ruining our everyday life in the process.

- We are risk averse and prefer the security of a guaranteed baseline situation to the potential for a much better or much worse outcome at even odds.

  - This is similar to the case of ignoring good outcomes which have a less than 0.5% right tail lifetime probability of occurring, but is done in a continuous way rather than by truncation. This can be modeled via a log function.

- We become indifferent to changes in utility above or below a certain point.

  - There's a point at which all things greater than or equal to some scenario are all the same to us.

  - There's a point at which all things less than or equal to some scenario are all the same to us.

These capture our grudges with Pascal's mugging! With these ideas written down we can try to construct a function which incorporates these preferences.

A Proposed Solution: The Truncated Bounded Risk Aversion Function

In order to align our function with our desires, we can choose to maximize a function where we:

- Truncate the tails and focus on the middle 99% of our lifetime distribution (you can adjust the 99% to your preferred comfort level). Then we can ignore the worst and best outcomes, which together we are only 1% likely to experience.

- Use a log or sub-log function to account for diminishing returns and risk aversion

- Set lower and upper bounds on our lifetime utility function to account for indifference in utility beyond a certain point

There we go! This function represents our desires more closely than that of an unbounded linear curve, and is not susceptible to Pascal's mugging.
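The post does not pin down an exact formula, so the following is only one possible sketch of the three ingredients above. It assumes a lifetime distribution given as discrete (value, probability) pairs, a 0.5% cut on each tail, a plain log for risk aversion, and arbitrary bounds of 1 and 10^9. The function name, the bounds, and the example numbers are all illustrative assumptions.

```python
import math


def truncated_bounded_log_utility(outcomes, tail=0.005, lower=1.0, upper=1e9):
    """Score a lifetime distribution of (value, probability) pairs.

    1. Drop `tail` probability mass from each end of the sorted distribution.
    2. Clamp each remaining value to [lower, upper] (lower must be > 0).
    3. Average the log of the clamped values over the mass that was kept.
    """
    lo_cut, hi_cut = tail, 1.0 - tail
    cumulative = 0.0
    total = 0.0
    for value, prob in sorted(outcomes):
        start, end = cumulative, cumulative + prob
        cumulative = end
        # Portion of this outcome's probability that lies inside the middle band.
        kept = max(0.0, min(end, hi_cut) - max(start, lo_cut))
        if kept > 0.0:
            bounded = min(max(value, lower), upper)
            total += kept * math.log(bounded)
    return total / (hi_cut - lo_cut)


status_quo = [(100_000.0, 1.0)]                       # keep what you have
mugging = [(0.0, 1 - 1e-6), (1e40, 1e-6)]             # tiny chance of a huge payoff
print(truncated_bounded_log_utility(status_quo))
print(truncated_bounded_log_utility(mugging))
```

Under these assumptions the mugging scores far below the status quo: the one-in-a-million jackpot falls entirely inside the discarded right tail, and what remains is the near-certain loss, clamped to the lower bound.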

Notes

This function works pretty well, but there are some notes to be aware of:

- This does not mean maximizing the middle 99% of the distribution on each and every decision. Doing so would mean winding up with the same problems as before by repeating a decision with a 0.1% chance of going horribly wrong over and over again, eventually building up into a large percentage. Instead, you maximize the middle 99% over whatever period of time you feel comfortable with. A reasonable choice is the duration of your life.

- If you were to have many humans acting in this way, you would need to watch out for resonant externalities. If 10,000 people individually ignore an independent 0.5% chance of a bad thing happening to a single third party, it becomes >99% likely that the bad thing happens to that third party. A way to capture this in the formula is to truncate the middle 99% of the distribution of utility across all people, and factor the decisions of other people into this distribution.

- There is a difference between diminishing returns and risk aversion.

  - Diminishing returns refers to the idea that linear gains in something become less valuable as you gain more of it. Billionaires don't value $100 as much as ten-year-olds do, for example.

  - Risk aversion refers to the idea that we exhibit strong preferences for getting to experience utility. We don't like the idea of an even odds gamble between halving or doubling our happiness for the rest of our lives. This can be thought of like maximizing the first percentile of a distribution. Log functions cause us to prioritize the well-being of lower percentiles over higher percentiles and thus line up with this preference.

- A log utility function over the course of your life does not necessarily mean that you approach independent non-compounding decisions in a logarithmic way. It's sometimes the case that maximizing non-compounding linear returns will maximize aggregate log returns.
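The first two notes both rest on the same arithmetic: a small independent risk, repeated often enough, stops being small. A short sketch (the repetition counts for the 0.1% case are arbitrary examples):

```python
def chance_at_least_once(per_event_probability, repetitions):
    """Probability that an independent event happens at least once."""
    return 1.0 - (1.0 - per_event_probability) ** repetitions


# A decision with a 0.1% chance of going horribly wrong, repeated many times.
for n in (1, 100, 1_000, 5_000):
    print(f"0.1% risk x {n:>5}: {chance_at_least_once(0.001, n):.3f}")

# 10,000 people each ignoring an independent 0.5% risk to the same third party.
print(f"0.5% risk x 10000: {chance_at_least_once(0.005, 10_000):.6f}")
```

This is why the truncation has to be applied to the lifetime (or population-wide) distribution rather than to each decision in isolation.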

Back To SBF

So back to SBF. How does this affect our response to the St. Petersburg paradox? If your goal is to maximize arithmetic utility in a utilitarian fashion, then the answer is still that you should play the game. On the other hand, if your goal is to maximize utility in a way that factors in indifference to highly unlikely outcomes, risk aversion, and bounded utility, you have a good reason to turn it down.

Concepts

- Shannon's Demon

- Arithmetic Mean

- Geometric Mean

- Pascal's Mugging

- Dissolving the Question

- Risk Aversion

- Kelly Criterion

Links

- Sam Bankman-Fried on Arbitrage and Altruism

- The Arithmetic Return Doesn't Exist

- An Ode To Cooperation

- Gwern on Pascal's Mugging

- Nintil on Pascal's Mugging

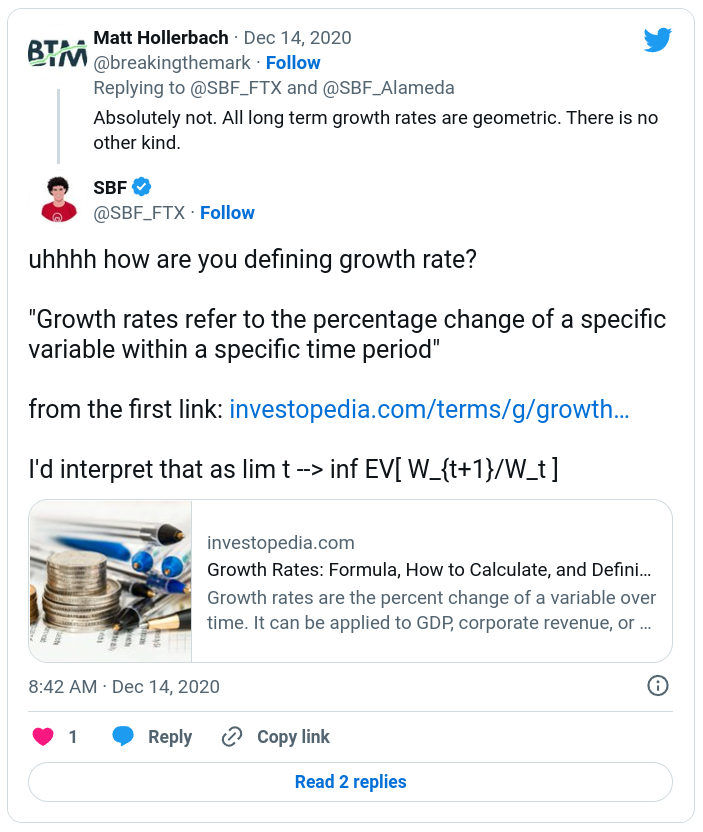

- https://twitter.com/breakingthemark/status/1339570230662717441

- https://twitter.com/breakingthemark/status/1591114381508558849

- https://twitter.com/SBF_FTX/status/1337250686870831107

5 comments

Comments sorted by top scores.

comment by JBlack · 2022-11-19T01:28:04.884Z · LW(p) · GW(p)

A log utility function says you should play this game over and over again, but you will most definitely lose all of your money by playing it (with the slim potential for wonderful returns).

No, one thing that absolutely will not happen is losing all your money. You literally can't.

Though yes, the conclusion that utility is not logarithmic in money without restriction does hold. If on the second gamble you "win" 10^2174 dollars (as the formula implies), what value does that have? At best you're now going to jail for violating some very serious currency laws, at worst you get it in gold or something and destroy the universe with its gravitational pull. Somewhere in the middle, you destroy the world economy and make a lot of people very unhappy, and probably murderously angry at you.

In no circumstance are you actually going to be able to benefit from "winning" 10^2174 dollars. Even if you somehow just won complete control over the total economic activity of Earth, that's probably not worth more than 10^14 dollars and so you should reject this bet.

But since this is a ridiculous hypothetical in the first place, what if it's actually some God-based currency that just happens to coincide with Earth currency for small amounts, and larger quantities do in fact somehow continue with unbounded utility? To the extent where a win on the second bet gives you some extrapolation of the benefits of 10^2160 planets worth of economic output, and wins on the later bets are indescribably better still? Absolutely take those bets!

comment by Bernhard · 2022-11-20T18:56:30.266Z · LW(p) · GW(p)

Are you familiar with ergodicity economics?

https://twitter.com/ole_b_peters/status/1591447953381756935?cxt=HHwWjsC8vere-pUsAAAA

I recommend Ole Peters' papers on the topic. That way you won't have to construct your epicycles upon the epicycles commonly known as utility calculus.

We are taught to always maximize the arithmetic mean

By whom?

The probable answer is: By economists.

Quite simply: they are wrong. Why?

That's what ergodicity economics tries to explain.

In brief, economics typically wrongly assumes that the average over time can be substituted with the average over an ensemble.

Ergodicity economics shows that there are some +EV bets that do not pay off for the individual.

For example, you playing a bet of the above type 100 times is assumed to be the same as 100 people each betting once.

This is simply wrong in the general case. For a trivial example, if there is a minimum bet, then you can simply go bankrupt before playing 100 games.

Interestingly however, if 100 people each bet once, and then afterwards redistribute their wealth, then their group as a whole is better off than before. Which is why insurance works.

And importantly, which is exactly why cooperation among humans exists. Cooperation that, according to economists, is irrational, and shouldn't even exist.

Anyway I'm butchering it. I can only recommend Ole Peters' papers

comment by Dagon · 2022-11-18T19:14:16.562Z · LW(p) · GW(p)

expected value return of $0.51 \times 2 + 0.49 \times 0 - 1 = 0.02$. It's clear that if you play this game long enough, you will wind up with nothing.

That's only clear if you define "long enough" in a perverse way. For any finite sequence of bets, this is positive value. Read SBF's response more closely - maybe you have an ENORMOUSLY valuable existence.

tl;dr: it depends on whether utility is linear or sublinear in aggregation. Either way, you have to accept some odd conclusions.

For most resources and human-scale gambling, the units are generally assumed to have declining marginal value, most often modeled as logarithmic in utility. In that case, you shouldn't take the bet, as log(2) * .51 + log(0) * .49 is negative infinity. But if you're talking about multi-universe quantities of lives, it's not obvious whether their value aggregates linearly or logarithmically. Is a net new happy person worth less than or exactly the same as an existing identically-happy person? Things get weird when you take utility as an aggregatable quantity.

Personally, I bite the bullet and claim that human/sentient lives decline in marginal value. This is contrary to what most utilitarians claim, and I do recognize that it implies I prefer fewer lives over more in many cases. I additionally give some value to variety of lived experience, so a pure duplicate is less utils in my calculations than a variant.

But that doesn't seem to be what you're proposing. You're truncating at low probabilities, but without much justification. And you're mixing in risk-aversion as if it were a real thing, rather than a bias/heuristic that humans use when things are hard to calculate or monitor (for instance, any real decision has to account for the likelihood that your payout matrix is wrong, and you won't actually receive the value you're counting on).

Replies from: cole-killian

↑ comment by Cole Killian (cole-killian) · 2022-11-18T19:34:14.536Z · LW(p) · GW(p)

I think we mostly agree.

That's only clear if you define "long enough" in a perverse way. For any finite sequence of bets, this is positive value. Read SBF's response more closely - maybe you have an ENORMOUSLY valuable existence.

I agree that it's positive expected value calculated as the arithmetic mean. Even so, I think most humans would be reluctant to play the game even a single time.

tl;dr: it depends on whether utility is linear or sublinear in aggregation. Either way, you have to accept some odd conclusions.

I agree it's mostly a question of "what is utility". This post is more about building a utility function which follows most human behavior and showing how if you model utility in a linear unbounded way, you have to accept some weird conclusions.

The main conflict is between measuring utility as some cosmic value that is impartial to you personally, and a desire to prioritize your own life over cosmic utility. Thought experiments like Pascal's mugging force this into the light.

Personally, I bite the bullet and claim that human/sentient lives decline in marginal value. This is contrary to what most utilitarians claim, and I do recognize that it implies I prefer fewer lives over more in many cases. I additionally give some value to variety of lived experience, so a pure duplicate is less utils in my calculations than a variant.

I don't think this fully "protects" you. In the post I constructed a game which maximizes log utility and still leaves you with nothing in 99% of cases. This is why I also truncate low probabilities and bound the utility function. What do you think?

But that doesn't seem to be what you're proposing. You're truncating at low probabilities, but without much justification. And you're mixing in risk-aversion as if it were a real thing, rather than a bias/heuristic that humans use when things are hard to calculate or monitor (for instance, any real decision has to account for the likelihood that your payout matrix is wrong, and you won't actually receive the value you're counting on).

My main justification is that you need to do it if you want your function to model common human behavior. I should have made that more clear.

Replies from: Dagon

↑ comment by Dagon · 2022-11-18T21:45:47.247Z · LW(p) · GW(p)

I think we mostly agree.

Probably, but precision matters. Mixing up mean vs sum when talking about different quantities of lives is confusing. We do agree that it's all about how to convert to utilities. I'm not sure we agree on whether 2x the number of equal-value lives is 2x the utility. I say no, many Utilitarians say yes (one of the reasons I don't consider myself Utilitarian).

game which maximizes log utility and still leaves you with nothing in 99% of cases.

Again, precision in description matters - that game maximizes log wealth, presumed to be close to linear utility. And it's not clear that it shows what you think - it never leaves you nothing, just very often a small fraction of your current wealth, and sometimes astronomical wealth. I think I'd play that game quite a bit, at least until my utility curve for money flattened even more than simple log, due to the fact that I'm at least in part a satisficer rather than an optimizer on that dimension. Oh, and only if I could trust the randomizer and counterparty to actually pay out, which becomes impossible in the real world pretty quickly.

But that only shows that other factors in the calculation interfere at extreme values, not that the underlying optimization (maximize utility, and convert resources to utility according to your goals/preferences/beliefs) is wrong.