Meta releases Llama-4 herd of models

post by winstonBosan · 2025-04-05T19:51:06.688Z · LW · GW · 4 commentsContents

4 comments

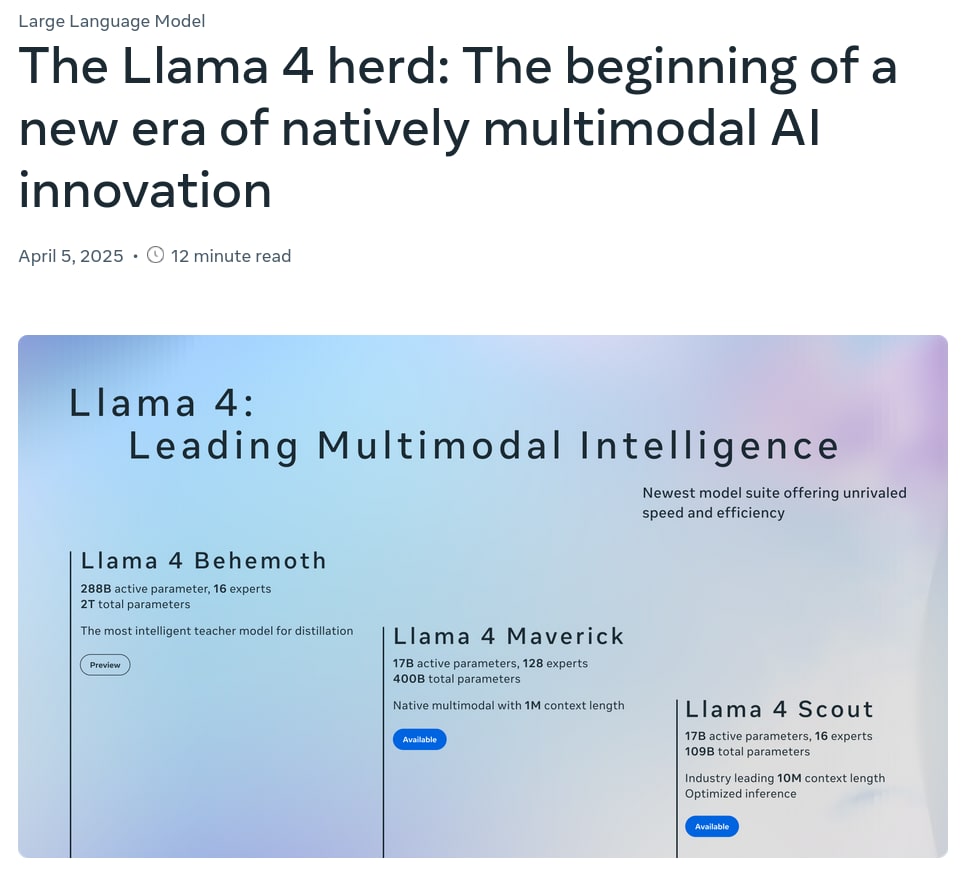

Meta released 3 models from the Llama 4 family herd today.

- The 109B (small) and 400B (mid) models are MoE models with 17B active param each. The large model is 2T MoE model with 288B active param instead.

- The small model is called Scout. The middle model is called Maverick. The big one is called Behemoth.

- Natively multimodal and much longer context length. 10M (!!) for the small one and 1M for the mid sized one.

- Maverick is currently number 2 on LLM arena. (Number 5 if style controlled)

- We don't have the 2T big model available yet. It is used as a distillation model for Maverick and Scout.

- Behemoth beats Claude 3.7 (not thinking), GPT 4,5 and Gemini 2.0 Pro in various benchmarks (LiveCodeBench, Math-500, GPQA Diamond, etc)

- Scout is meant to work on a single H100.

https://ai.meta.com/blog/llama-4-multimodal-intelligence/

4 comments

Comments sorted by top scores.

comment by Vladimir_Nesov · 2025-04-05T21:44:20.634Z · LW(p) · GW(p)

The announcement post says the following on the scale of Behemoth:

we focus on efficient model training by using FP8 precision, without sacrificing quality and ensuring high model FLOPs utilization—while pre-training our Llama 4 Behemoth model using FP8 and 32K GPUs, we achieved 390 TFLOPs/GPU. The overall data mixture for training consisted of more than 30 trillion tokens

This puts Llama 4 Behemoth at 5e25 FLOPs (30% more than Llama-3-405B), trained on 32K H100s (only 2x more than Llama-3-405B) instead of the 128K H100s (or in any case, 100K+) they should have. They are training in FP8 (which gets 2x more FLOP/s per chip than the easier-to-work-with BF16), but with 20% compute utilization (2x lower than in dense Llama-3-405B; training MoE is harder).

At 1:8 sparsity (2T total parameters, ~250B in active experts), it should have 3x lower data efficiency than a dense model (and 3x as much effective compute, so it has 4x effective compute of Llama-3-405B even at merely 1.3x raw compute). Anchoring to Llama-3-405B, which is dense and has 38 tokens per parameter compute optimal with their dataset, we get about 120 tokens per active parameter optimal for a model with Behemoth's shape, which for 288B active parameters gives 35T tokens. This fits their 30T tokens very well, so it's indeed a compute optimal model (and not a middle-sized overtrained model that inherited the title of "Behemoth" from a failed 128K H100s run).

In any case, for some reason they didn't do a large training run their hardware in principle enables, and even then their training run was only about 2 months (1.5 months from total compute and utilization, plus a bit longer at the start to increase critical batch size enough to start training on the whole training system). (Running out of data shouldn't be a reason to give up on 128K H100s, as a compute optimal 1:8 sparsity model would've needed only 90T tokens at 750B active parameters, if trained in FP8 with 20% compute utilization for 3 months. Which could just be the same 30T tokens repeated 3 times.)

Replies from: igor-2↑ comment by Petropolitan (igor-2) · 2025-04-06T22:34:17.786Z · LW(p) · GW(p)

Muennighoff et al. (2023) studied data-constrained scaling on C4 up to 178B tokens while Meta presumably included all the public Facebook and Instagram posts and comments. Even ignoring the two OOM difference and the architectural dissimilarity (e. g., some experts might overfit earlier than the research on dense models suggests, perhaps routing should take that into account), common sense strongly suggests that training twice on, say, a Wikipedia paragraph must be much more useful than training twice on posts by Instagram models and especially comments under those (which are often as like as two peas in a pod).

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2025-04-06T23:01:38.269Z · LW(p) · GW(p)

The loss goes down; whether that helps in some more legible way that also happens to be impactful is much harder to figure out. The experiments in the May 2023 paper show that training on some dataset and training on a random quarter of that dataset repeated 4 times result in approximately the same loss (Figure 4). Even 15 repetitions remain useful, though at that point somewhat less useful than 15 times more unique data. There is also some sort of double descent where loss starts getting better again after hundreds of repetitions (Figure 9 in Appendix D).

This strongly suggests that repeating merely 3 times will robustly be about as useful as having 3 times more data from the same distribution. I don't know of comparably strong clues that would change this expectation.

Replies from: igor-2↑ comment by Petropolitan (igor-2) · 2025-04-07T09:42:46.673Z · LW(p) · GW(p)

I'm afraid you might have missed the core thesis of my comment, let me reword. I'm arguing one should not extrapolate findings from that paper on what's Meta training now.

The Llama 4 model card says the herd was trained on "[a] mix of publicly available, licensed data and information from Meta’s products and services. This includes publicly shared posts from Instagram and Facebook and people’s interactions with Meta AI": https://github.com/meta-llama/llama-models/blob/main/models/llama4/MODEL_CARD.md To use a term from information theory, these posts probably have much lower factual density than curated web text in C4. There's no public information how fast the loss goes down even on the first epoch of this kind of data let alone several ones.

I generated a slightly more structured write-up of my argument and edited it manually, hope it will be useful

Let's break down the extrapolation challenge:

- Scale Difference:

- Muennighoff et al.: Studied unique data budgets up to 178 billion tokens and total processed tokens up to 900 billion. Their models were up to 9 billion parameters.

- Llama 4 Behemoth: Reportedly trained on >30 trillion tokens (>30,000 billion). The model has 2 trillion total parameters (~288B active).

- The Gap: We're talking about extrapolating findings from a regime with ~170x fewer unique tokens (comparing 178B to 30T) and models ~30x smaller (active params). While scaling laws can be powerful, extrapolating across 2 orders of magnitude in data scale carries inherent risk. New phenomena or different decay rates for repeated data could emerge.

- Data Composition and Quality:

- Muennighoff et al.: Used C4 (filtered web crawl) and OSCAR (less filtered web crawl), plus Python code. They found filtering was more beneficial for the noisier OSCAR.

- Llama 4 Behemoth: The >30T tokens includes a vast amount of web data, code, books, etc., but is also likely to contain a massive proportion of public Facebook and Instagram data.

- The Issue: Social media data has different characteristics: shorter texts, different conversational styles, potentially more repetition/near-duplicates, different types of noise, and potentially lower factual density compared to curated web text or books. How the "value decay" of repeating this specific type of data behaves at the 30T scale is not something the 2023 paper could have directly measured.

- Model Architecture:

- Muennighoff et al.: Used dense Transformer models (GPT-2 architecture).

- Llama 4 Behemoth: Is a Mixture-of-Experts (MoE) model.

- The Issue: While MoE models are still Transformers, the way data interacts with specialized experts might differ from dense models when it comes to repetition. Does repeating data lead to faster overfitting within specific experts, or does the routing mechanism mitigate this differently? This interaction wasn't studied in the 2023 paper.

Conclusion: directly applying the quantitative findings (e.g., "up to 4 epochs is fine", RD* ≈ 15) to the Llama 4 Behemoth scale and potential data mix is highly speculative.

- The massive scale difference is a big concern.

- The potentially different nature and quality of the data (social media) could significantly alter the decay rate of repeated tokens.

- MoE architecture adds another layer of uncertainty.

The "Data Wall" Concern: even if Meta could have repeated data based on the 2023 paper's principles, they either chose not to (perhaps due to internal experiments showing it wasn't effective at their scale/data mix) or they are hitting a wall where even 30T unique tokens isn't enough for the performance leap expected from a 2T parameter compute-optimal model, and repeating isn't closing the gap effectively enough.

P. S.

Also, check out https://www.reddit.com/r/LocalLLaMA, they are very disappointed how bad the released models turned out to be (yeah I know that's not directly indicative of Behemoth performance)