Sentience Institute 2023 End of Year Summary

post by michael_dello · 2023-11-27T12:11:37.228Z · LW · GW

This is a link post for https://www.sentienceinstitute.org/blog/eoy2023

Contents

- Summary

- Accomplishments

  - Research

  - Outreach

- Spending

- Room for more funding

Summary

Our 2022 End of Year Summary was published just five days before the launch of ChatGPT. The launch surprised many in the field, and the resulting global spotlight has illustrated how important it is to understand human-AI interaction. Our priority continues to be researching the rise of digital minds: AIs that have or are perceived as having mental faculties, such as reasoning, agency, experience, and sentience. We hope to answer questions such as: What will be the next ‘ChatGPT moment’ in which humanity’s relationship with AI rapidly changes? How can humans and AIs interact in ways that are beneficial rather than destructive?

The highlight of our 2023 research is the Artificial Intelligence, Morality, and Sentience (AIMS) survey, which repeated the questions from 2021 so that we can compare public opinion before and after recent events. We also ran an additional AIMS 2023 Supplement on Public Opinion in AI Safety. Data like this is typically collected only once a social issue is fully in the public spotlight, so we’re eager to have this longitudinal tracking in place before the rise of digital minds. In terms of outreach, we released two podcast episodes (plus one in December 2022) and hosted an intergroup call on digital minds as well as a presentation by Yochanan Bigman.

Our ongoing work includes online experiments on how AI autonomy and sentience affect perceptions, development of a scale to measure substratism, and qualitative studies (e.g., interviews) of how people understand and interact with cutting-edge AI systems. We hope to raise $150,000 this giving season to continue our research on digital minds. We also have a few ongoing projects to address factory farming (our focus before 2021), funded by earmarked donations, such as data collection for the 2023 iteration of our Animals, Food, and Technology (AFT) survey.

As always, we are extremely grateful to our supporters who share our vision and make this work possible. If you are able to, please consider making a donation in 2023.

Accomplishments

Research

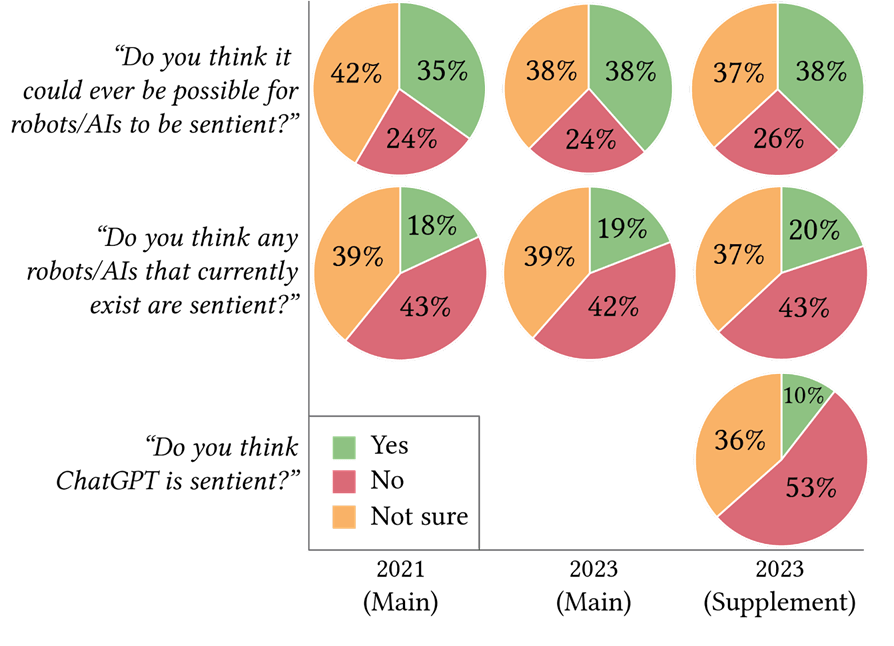

- We recently released the 2023 results of our Artificial Intelligence, Morality, and Sentience (AIMS) survey. The Main and Supplemental 2023 survey results, as well as the 2021 Main survey results, are available in full on our website. Americans express significantly more moral concern for AI in 2023 than they did in 2021, before ChatGPT. Other key findings include:

  - 71% support government regulation that slows AI development.

  - 39% support a “bill of rights” that protects the well-being of sentient robots/AIs.

  - 68% agreed that we must not cause unnecessary suffering to large language models (LLMs), such as ChatGPT or Bard, if they develop the capacity to suffer.

  - 20% of people think that some AIs are already sentient; 37% are not sure; and 43% say they are not.

  - 10% of people say ChatGPT is sentient; 37% are not sure; and 53% say it is not.

  - 23% trust AI companies to put safety over profits; 29% are not sure; and 49% do not.

  - 27% trust the creators of an AI to maintain control of current and future versions; 27% are not sure; and 26% do not.

  - 49% of people say the pace of AI development is too fast; 30% say it's fine; 19% say they’re not sure; only 2% say it's too slow.

Here is an example of the data from our survey questions:

- Our blog post “Mass media, propaganda, and social influence: Evidence of effectiveness from Courchesne et al. (2021)” reviews the literature on persuasion and social influence, which may be useful to farmed animal and AI safety advocates aiming to create new behaviors and disrupt existing socio-political structures.

- Our blog post “Moral spillover in human-AI interaction” reviews the literature on moral spillover in human-AI interaction. Moral spillover is the transfer of moral attitudes and behaviors, such as moral consideration, from one setting to another, and seems to be an important driver of moral circle expansion.

- The mental faculties and capabilities of digital minds may be a key factor in the trajectory of AI in coming years. A better understanding of them may lead to more accurate forecasts, better strategic prioritization, and concrete strategies for AI safety. We published a summary of some of the key questions in this area.

- We supported a paper in AI and Ethics on “What would qualify an artificial intelligence for moral standing?” asking which criteria an AI must satisfy to be granted moral standing — that is, to be granted moral consideration for its own sake. The article argues that all sentient AIs should be granted moral standing and that there is a strong case for granting moral standing to some non-sentient AIs with preferences and goals.

- We supported a paper in Inquiry on “Digital suffering: Why it’s a problem and how to prevent it” that proposes a new strategy for gaining epistemic access to the experiences of future digital minds and preventing digital suffering called Access Monitor Prevent (AMP).

- We supported a paper in Social Cognition on “Extending perspective taking to non-human animals and artificial entities,” detailing two experiments which tested whether perspective taking can have positive effects in the contexts of animals and intelligent artificial entities.

- We published a report authored by Brad Saad on “Simulations and catastrophic risks” that outlines research directions at this intersection. Future simulation technology is likely to both pose catastrophic risks and offer means of reducing them. Saad also won an NYU award for early-career scholars working on topics related to animals and AI consciousness, alongside fellow SI researcher Ali Ladak and three other early-career researchers in the field.

More detail on our in-progress research is available in our Research Agenda.

Outreach

- On January 5, 2023, we hosted the second intergroup call for organizations working on digital minds research. We are currently reassessing whether these calls are the best approach to field-building because interest has declined since the fall of FTX.

- On April 27, 2023, we hosted a research workshop where Yochanan E. Bigman presented his work on attitudes towards AI. The workshop was attended by academic and independent researchers.

- We released two new podcast episodes with psychologist Matti Wilks and philosopher Raphaël Millière, as well as an episode with philosopher David Gunkel in December 2022.

- During the public media blitz following ChatGPT’s launch, our co-founder Jacy Reese Anthis conducted radio and podcast interviews about digital minds, such as with Chuck Todd and Detroit NPR, and published two op-eds: “We Need an AI Rights Movement” in The Hill and “Why We Need a ‘Manhattan Project’ for A.I. Safety” in Salon.

- Jacy also spoke with Annie Lowrey at The Atlantic about digital minds, especially how to assess mental faculties such as reasoning and sentience and how we can draw lessons from the history of human-animal interaction for the future of human-AI interaction—both how humans will treat AIs and how AIs will treat humans.

- We continue to share our research via social media, emails, presentations at conferences and seminars, and meetings with people who can make use of it.

Spending

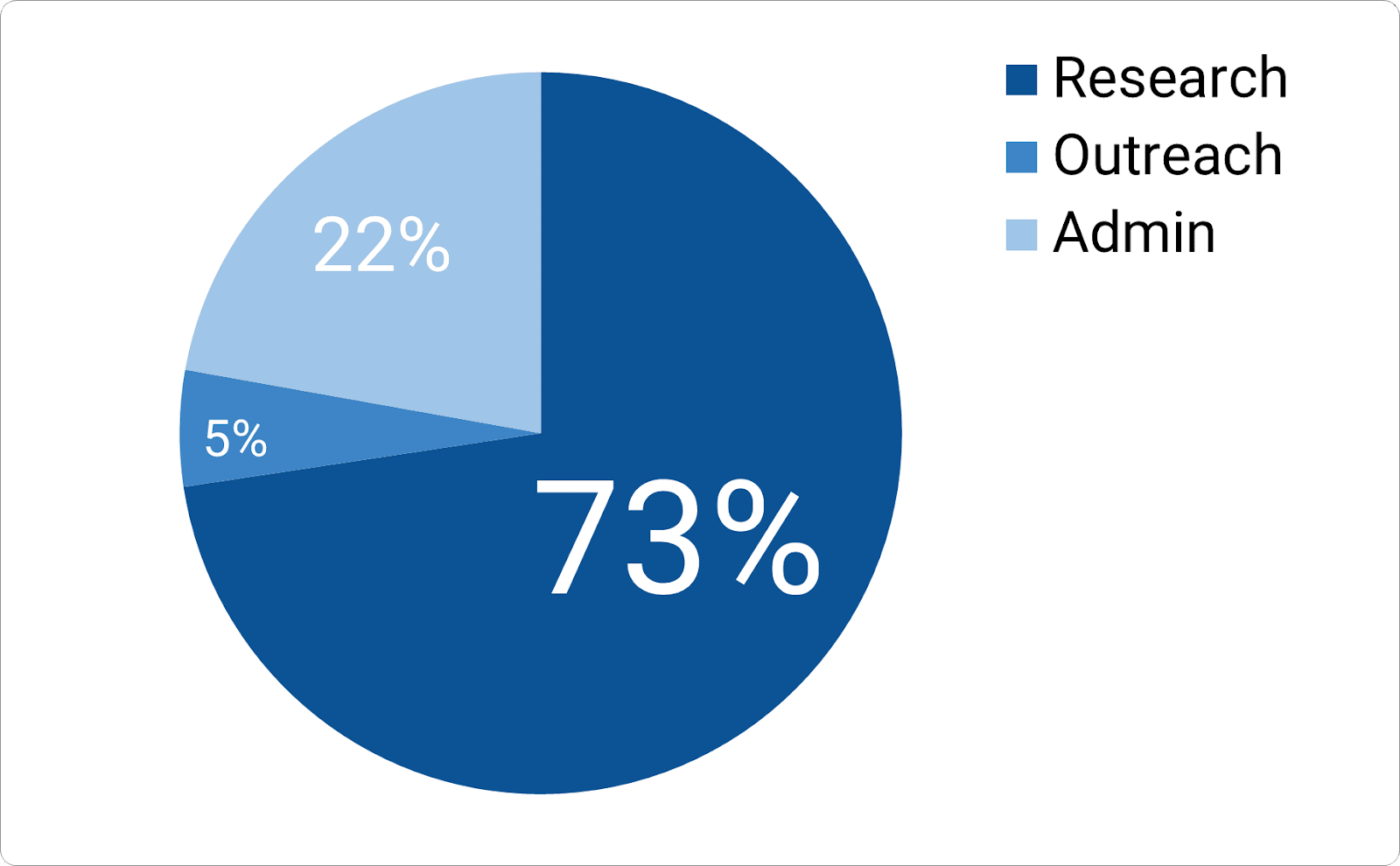

So far this year we’ve spent $227,762, broken down approximately as follows: 73% research, 5% outreach, and 22% admin. In each category, expenses are primarily staff time but also include items such as data collection (research), podcast editing (outreach), and our virtual office subscription (admin).

We continue to maintain a Transparency page with annual financial information, a running list of mistakes, and other public information.

Room for more funding

We currently aim to raise $150,000 this giving season (November 2023–January 2024) to continue our digital minds research and field-building efforts.

We know that fundraising will continue to be difficult this year following the collapse of FTX and the rapid proliferation of new and growing AI organizations that are attempting to raise money in the space. We have substantial room for more funding for highly cost-effective projects. Our last hiring round in early 2023 had 119 applicants for a researcher position, including several to whom we did not extend an offer but could have with more available funding. If you are interested in potentially making a major contribution, please feel free to reach out to discuss our room for expansion beyond $150,000.

Thank you again for all your invaluable support. If you have questions, feedback, or would like to collaborate, please email me at michael@sentienceinstitute.org. If you would like to donate, you can contribute via card, bank, stocks, DAF, or other means, and you can always just mail us a check directly.
