Taming Infinity (Stat Mech Part 3)

post by J Bostock (Jemist) · 2024-05-15T21:43:03.406Z · LW · GW

The canonical example of quantum mechanics in action is the harmonic oscillator, which is something like a mass on a spring. In classical mechanics, it wobbles back and forth periodically when it is given energy. If it's at a position $x$, wobbling about $x = 0$ and moving with velocity $v$, we can say its energy contains a potential term proportional to $x^2$ and a kinetic term proportional to $v^2$, with an overall form:

$$E = \frac{1}{2} k x^2 + \frac{1}{2} m v^2$$

where $k$ is the spring constant and $m$ is the mass.

We could try and find a distribution over $x$ and $v$, but continuous distributions tend not to "play well" with entropy: the entropy you get depends on a choice of characteristic unit. Instead we'll go to the quantum world.
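As a small illustration of the unit problem, here is a sketch using the standard formula for the differential entropy of a Gaussian, $\frac{1}{2}\ln(2\pi e \sigma^2)$. The Gaussian and the specific numbers are assumptions chosen for illustration, not something taken from the oscillator itself.

```python
import math

def gaussian_differential_entropy(sigma):
    """Differential entropy (in nats) of a Gaussian with standard deviation sigma."""
    return 0.5 * math.log(2 * math.pi * math.e * sigma**2)

# The same physical spread in position, written in two different units.
sigma_in_metres = 0.01
sigma_in_millimetres = sigma_in_metres * 1000

h_metres = gaussian_differential_entropy(sigma_in_metres)
h_millimetres = gaussian_differential_entropy(sigma_in_millimetres)

# Nothing physical changed, yet the entropy shifts by ln(1000).
shift = h_millimetres - h_metres
print("entropy in metres:     ", h_metres)
print("entropy in millimetres:", h_millimetres)
print("shift:", shift, "ln(1000):", math.log(1000))
```

The entropy even changes sign between the two unit choices, which is why a discrete set of states is a more comfortable place to start.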

One of the major results of quantum mechanics is that systems like this can only exist in certain energy levels. In the harmonic oscillator these levels are equally-spaced, with a spacing proportional to the frequency associated with the classical oscillator. Since the levels are equally-spaced, we can think about the energy coming in discrete units called "phonons".

Our beliefs about the number of phonons $n$ in our system can be expressed as a probability distribution $P(n)$ over $n \in \{0, 1, 2, \dots\}$:

$$\sum_{n=0}^{\infty} P(n) = 1$$

This is progress: we've reduced an uncountably infinite set of states to a countable one, which is a factor of infinity! But if we do our normal trick and try to find the maximum entropy distribution, we'll still hit a problem: we get $P(n) = 0$ for all $n$, since a uniform distribution has to spread its probability over infinitely many states.

The Trick: Distribution Families

Thinking back to our previous post, an answer presents itself: phonons are a form of energy, which is conserved. Since we're uncertain over $n$, we'll place a restriction on the expected value $\mathbb{E}(n)$ of our distribution. We can solve the specific case here, but it's actually more useful to solve the general case.

Maths, Lots of Maths, Skippable:

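Consider a set of states of a system $\{s_i\}$. To each of these we assign a real numeric value written as $x_i$. We also assign a probability $p_i$ constrained by the usual condition $\sum_i p_i = 1$.

Next, define $\mathbb{E}(x) = \sum_i p_i x_i$ and $\mathrm{H} = -\sum_i p_i \ln p_i$.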

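Imagine we perform a transformation to our distribution, such that the distribution is still valid and $\mathbb{E}(x)$ remains the same. We will consider an arbitrary transformation over elements $i$:

$$p_i \to p_i + dp_i, \qquad \sum_i dp_i = 0, \qquad \sum_i x_i \, dp_i = 0$$

Now let us assume that our original distribution was a maximum of $\mathrm{H}$, which can also be expressed as $d\mathrm{H} = 0$:

$$d\mathrm{H} = -\sum_i (\ln p_i + 1) \, dp_i = 0$$

The solution for this to be equal to zero in all cases is the following relation, for some constants $B$ and $Z$:

$$\ln p_i = -B x_i - \ln Z$$

We can plug this back into our equation to verify that we do in fact get zero:

$$d\mathrm{H} = \sum_i (B x_i + \ln Z - 1) \, dp_i = B \sum_i x_i \, dp_i + (\ln Z - 1) \sum_i dp_i = 0$$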

The choice of a negative coefficient $-B$ is so that our distribution converges when values of $x$ extend up to $\infty$, which is common for things like energy. We will then get a distribution with the following form:

$$p_i = \frac{1}{Z} e^{-B x_i}$$

Where $B$ parameterizes the shape of the distribution and $Z$ normalizes it such that our probabilities sum to $1$. We might want to write down $Z$ in terms of $B$:

$$Z = \sum_i e^{-B x_i}$$

But we will actually get more use out of the following function, $\ln Z$:

$$\ln Z = \ln \sum_i e^{-B x_i}$$

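First consider the derivative $\frac{d \ln Z}{dB}$:

$$\frac{d \ln Z}{dB} = \frac{1}{Z} \frac{dZ}{dB} = -\frac{1}{Z} \sum_i x_i e^{-B x_i} = -\sum_i p_i x_i$$

Which gives us the remarkable result:

$$\frac{d \ln Z}{dB} = -\mathbb{E}(x)$$

We can also expand out the value of $\mathrm{H}$:

$$\mathrm{H} = -\sum_i p_i \ln p_i = \sum_i p_i (B x_i + \ln Z) = B \, \mathbb{E}(x) + \ln Z$$

And get this in terms of $Z$ too! We also get one of the most important results from all of statistical mechanics:

$$\frac{d\mathrm{H}}{dB} = \mathbb{E}(x) + B \frac{d\mathbb{E}(x)}{dB} + \frac{d \ln Z}{dB}$$

Now use the substitution:

$$\frac{d \ln Z}{dB} = -\mathbb{E}(x)$$

To get our final result:

$$\frac{d\mathrm{H}}{dB} = B \frac{d\mathbb{E}(x)}{dB} \quad \Longrightarrow \quad \frac{d\mathrm{H}}{d\mathbb{E}(x)} = B$$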

So $B$ is not "just" a parameter for our distributions, it's actually telling us something about the system. As we saw last time, finding the derivative of entropy with respect to some constraint is absolutely critical to finding the behaviour of that system when it can interface with the environment.
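For readers who skipped the algebra, these relations can be checked numerically. The sketch below assumes the exponential family $p_i = e^{-B x_i} / Z$ with $Z = \sum_i e^{-B x_i}$; the particular values in `xs`, the value of `B`, and the step size `h` are arbitrary choices made for illustration.

```python
import math

def summarise(xs, B):
    """Return (mean, entropy, ln Z) for p_i = exp(-B * x_i) / Z."""
    weights = [math.exp(-B * x) for x in xs]
    Z = sum(weights)
    ps = [w / Z for w in weights]
    mean = sum(p * x for p, x in zip(ps, xs))
    entropy = -sum(p * math.log(p) for p in ps)
    return mean, entropy, math.log(Z)

xs = [0.0, 0.3, 1.0, 2.5, 4.0]  # arbitrary illustrative values of x_i
B = 0.7                          # arbitrary illustrative parameter
h = 1e-5                         # step for the finite differences

mean, entropy, ln_Z = summarise(xs, B)
mean_lo, entropy_lo, ln_Z_lo = summarise(xs, B - h)
mean_hi, entropy_hi, ln_Z_hi = summarise(xs, B + h)

# Central difference for d ln Z / dB, and a ratio of differences for dH / dE(x).
dlnZ_dB = (ln_Z_hi - ln_Z_lo) / (2 * h)
dH_dmean = (entropy_hi - entropy_lo) / (mean_hi - mean_lo)

print("d ln Z / dB =", dlnZ_dB, " vs  -E(x) =", -mean)
print("dH / dE(x)  =", dH_dmean, " vs  B =", B)
print("H =", entropy, " vs  B * E(x) + ln Z =", B * mean + ln_Z)
```

Each printed pair should agree up to finite-difference error, whatever values you put in `xs`.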

<\Maths>

To recap the key findings:

- The probability of a system state with value $x_i$ is proportional to $e^{-B x_i}$

- This parameter $B$ is also the (very important to specify) value of $\frac{d\mathrm{H}}{d\mathbb{E}(x)}$

- We can define a function $Z(B) = \sum_i e^{-B x_i}$

Which we can now apply back to the harmonic oscillator.

Back to the Harmonic Oscillator

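So we want to find a family of distributions over $n$. We can in fact assign a real number to each value of $n$, trivially (the inclusion $\mathbb{N} \hookrightarrow \mathbb{R}$ if you want to be fancy). Now we know that our distribution over $n$ must take the form:

$$P(n) = \frac{1}{Z} e^{-Bn}$$

But we also know that the most important thing about our system is the value of our partition function $Z$:

$$Z = \sum_{n=0}^{\infty} e^{-Bn}$$

Which is just the sum of a geometric series with $a = 1$, $r = e^{-B}$:

$$Z = \frac{1}{1 - e^{-B}}$$

Which gives us $\mathbb{E}(n)$ and $\mathrm{H}$ in terms of $B$:

$$\mathbb{E}(n) = -\frac{d \ln Z}{dB} = \frac{1}{e^{B} - 1}$$

$$\mathrm{H} = B \, \mathbb{E}(n) + \ln Z = \frac{B}{e^{B} - 1} - \ln\left(1 - e^{-B}\right)$$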
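A quick sanity check on the geometric-series step: the sketch below compares a brute-force truncated sum over $n$ with the closed forms $Z = 1/(1 - e^{-B})$, $\mathbb{E}(n) = 1/(e^{B} - 1)$ and $\mathrm{H} = B\,\mathbb{E}(n) + \ln Z$. The cutoff `n_max` and the value of `B` are assumptions picked so that the neglected tail is tiny.

```python
import math

def truncated_sums(B, n_max=400):
    """Brute-force Z, E(n) and H from the first n_max + 1 levels."""
    weights = [math.exp(-B * n) for n in range(n_max + 1)]
    Z = sum(weights)
    ps = [w / Z for w in weights]
    mean = sum(p * n for n, p in enumerate(ps))
    entropy = -sum(p * math.log(p) for p in ps)
    return Z, mean, entropy

def closed_forms(B):
    """Closed-form Z, E(n) and H for the unit-spaced ladder."""
    Z = 1.0 / (-math.expm1(-B))      # 1 / (1 - exp(-B))
    mean = 1.0 / math.expm1(B)       # 1 / (exp(B) - 1)
    entropy = B * mean + math.log(Z)
    return Z, mean, entropy

B = 0.5  # arbitrary illustrative value
Z_sum, mean_sum, H_sum = truncated_sums(B)
Z_closed, mean_closed, H_closed = closed_forms(B)

print("Z:   ", Z_sum, Z_closed)
print("E(n):", mean_sum, mean_closed)
print("H:   ", H_sum, H_closed)
```

Using `math.expm1` rather than `math.exp(B) - 1` keeps the closed forms accurate when $B$ is small.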

T instead of B

Instead of $B$, we usually use a variable $T = 1/B$ for a few reasons. If we want to increase the amount of $x$ in our system (i.e. increase $\mathbb{E}(x)$) we have to decrease the value of $B$, whereas when $B$ gets big, $\mathbb{E}(x)$ just approaches the minimum value of $x$ and our probability distribution just approaches uniform over the corresponding states. Empirically, $T$ is often easier to measure for physical systems, and variations in $T$ tend to feel more "linear" than variations in $B$.

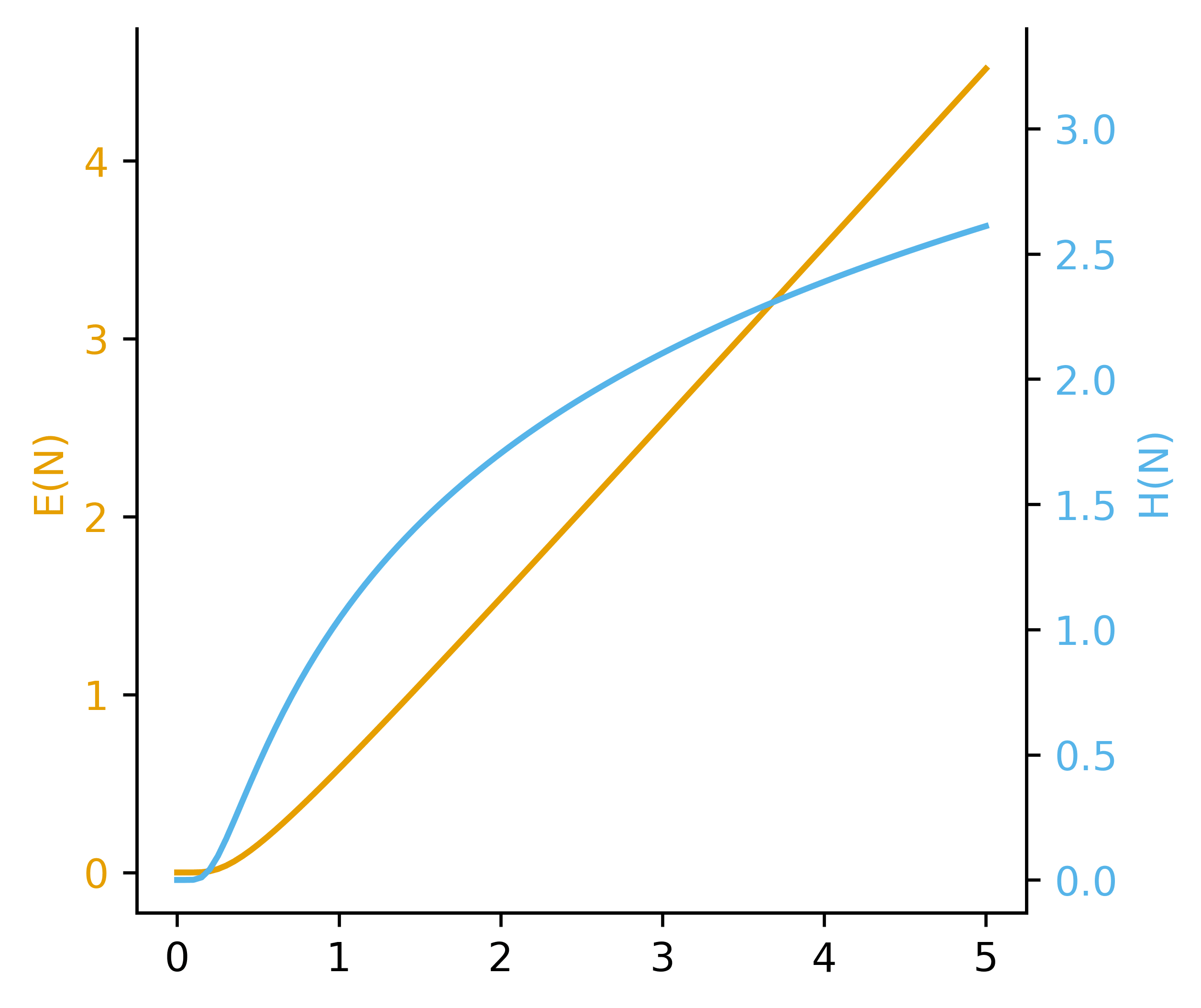

Let's plot both and of our systems as a function of :

$\mathbb{E}(n)$ converges on the line $T - \frac{1}{2}$. Rather pleasingly the energy of a quantum harmonic oscillator is actually proportional to $n + \frac{1}{2}$, not $n$. This little correction is called the "zero point energy" and is another fundamental result of quantum mechanics. If we plot the energy $\mathbb{E}(n) + \frac{1}{2}$ instead of $\mathbb{E}(n)$, it will converge on $T$. $\mathrm{H}$ converges on $\ln T + 1$.
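These limits can be checked directly. The sketch below assumes the unit-spaced closed forms $\mathbb{E}(n) = 1/(e^{1/T} - 1)$ and $\mathrm{H} = \mathbb{E}(n)/T - \ln(1 - e^{-1/T})$, and compares them with $T - \frac{1}{2}$ and $\ln T + 1$ at a few arbitrarily chosen temperatures.

```python
import math

def mean_phonons(T):
    """E(n) for the unit-spaced oscillator, with B = 1 / T."""
    return 1.0 / math.expm1(1.0 / T)

def entropy(T):
    """H = B * E(n) + ln Z for the unit-spaced oscillator."""
    B = 1.0 / T
    return B * mean_phonons(T) - math.log(-math.expm1(-B))

rows = []
for T in [1.0, 5.0, 50.0]:  # illustrative temperatures
    rows.append((T, mean_phonons(T), T - 0.5, entropy(T), math.log(T) + 1.0))

for T, mean, mean_limit, H, H_limit in rows:
    print(f"T={T:5.1f}  E(n)={mean:9.5f}  T-1/2={mean_limit:9.5f}  "
          f"H={H:8.5f}  ln(T)+1={H_limit:8.5f}")
```

The gap between each quantity and its asymptote shrinks as $T$ grows, which is what "converges on" means here.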

These are general rules. At high $T$, $\mathbb{E}(x)$ is in general proportional to $T$, and $\mathrm{H}$ almost always grows like $\ln T$.

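So far we've ignored the fact that our values of $n$ actually correspond to energy, and therefore there must be a spacing involved. What we've been calling $T$ so far should actually be called $T / \epsilon$, where $\epsilon$ is the energy of a single phonon. This is the spacing of the ladder of energy levels.

If we swap $T / \epsilon$ into our equations and also substitute in the energy $E = \epsilon n$ (we will omit the $\frac{1}{2}\epsilon$ when talking about energy) we get the following equations:

$$\mathbb{E}(E) = \frac{\epsilon}{e^{\epsilon / T} - 1}$$

$$\mathrm{H} = \frac{\epsilon / T}{e^{\epsilon / T} - 1} - \ln\left(1 - e^{-\epsilon / T}\right)$$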

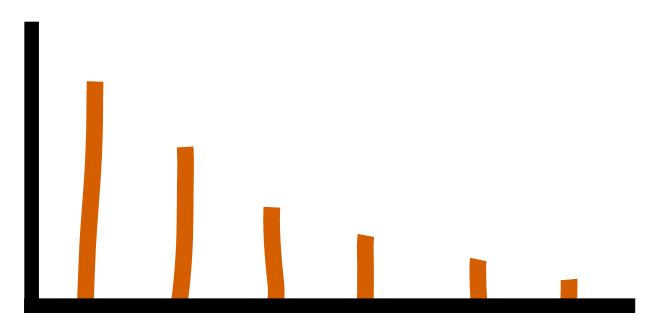

Both functions now have a "burn-in" region around $T = 0$, where the function is flat at zero. This is important. This region is common to almost all quantum thermodynamic systems, and it corresponds to a phenomenon when $T \ll \epsilon$, i.e. when the temperature is much smaller than the energy $\epsilon$ of a single phonon. When this occurs the exponential term $e^{-E/T}$ can be neglected for all states except the lowest energy one:

$$Z = \sum_{n=0}^{\infty} e^{-\epsilon n / T} \approx 1$$

Showing $Z$ doesn't respond to changes in $T$. This is the same as saying that the system has a probability $\approx 1$ of being in the lowest energy state, and therefore of having $E = 0$.
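To see the burn-in region in numbers, here is a sketch that evaluates the ground-state probability, the mean energy (zero-point term omitted) and the entropy at a cold and a warm temperature. The name `eps` for the energy of a single phonon and the two temperatures are assumptions made for this illustration.

```python
import math

def oscillator(T, eps=1.0):
    """Return (ground-state probability, mean energy, entropy) at temperature T.

    eps is the (assumed) energy of a single phonon; the zero-point energy is omitted.
    """
    b = eps / T
    p_ground = -math.expm1(-b)                 # 1 - exp(-b), which equals 1 / Z
    mean_energy = eps * math.exp(-b) / p_ground
    entropy = b * math.exp(-b) / p_ground - math.log(p_ground)
    return p_ground, mean_energy, entropy

p_cold, e_cold, h_cold = oscillator(T=0.05)   # T much smaller than eps
p_warm, e_warm, h_warm = oscillator(T=2.0)    # T comparable to eps

print("cold:", p_cold, e_cold, h_cold)
print("warm:", p_warm, e_warm, h_warm)
```

In the cold case almost all of the probability sits in the lowest level, so both the mean energy and the entropy are pinned near zero and barely move when $T$ changes.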

True Names

$T$ stands for temperature. Yep. The actual regular temperature appears as the inverse of a constant we've used to parameterize our distributions. $B$ is usually called $\beta$ in thermodynamics, and is sometimes called the "inverse temperature".

In thermodynamics, the energy of a system has a few definitions. What we've been calling $\mathbb{E}(E)$ should properly be called $U$, which is the internal energy of a system at constant volume.

Entropy in thermodynamics has the symbol $S$. I've made sure to use a roman $\mathrm{H}$ for our entropy because $H$ (italic) in thermodynamics is a sort of adjusted version of energy called "enthalpy".

In normal usage, temperature has different units to energy, partly because, if written as energy, the temperature would be a very small number, and partly because the two were discovered separately. Temperature is measured in kelvin ($\mathrm{K}$), which are converted to energy's joules ($\mathrm{J}$) with something known as the Boltzmann constant $k_B$. For historical reasons which are absolutely baffling, thermodynamics makes the choice to incorporate this conversion into its units of $S$, so $S = k_B \mathrm{H}$. This makes entropy far, far more confusing than it needs to be.
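To get a feel for the sizes involved, the sketch below applies the conversion with the SI value of the Boltzmann constant, $1.380649 \times 10^{-23}$ joules per kelvin. The function names, the 300 kelvin "room temperature" and the one-bit entropy are illustrative assumptions.

```python
import math

BOLTZMANN_CONSTANT = 1.380649e-23  # joules per kelvin (exact in the 2019 SI)

def temperature_in_joules(kelvin):
    """Express a temperature given in kelvin as an energy in joules."""
    return BOLTZMANN_CONSTANT * kelvin

def thermodynamic_entropy(entropy_nats):
    """Convert a dimensionless entropy in nats to joules per kelvin."""
    return BOLTZMANN_CONSTANT * entropy_nats

room_temperature = temperature_in_joules(300.0)
one_bit = thermodynamic_entropy(math.log(2))

print("300 K as an energy:", room_temperature, "J")
print("one bit of entropy:", one_bit, "J/K")
```

Both numbers come out tiny on human scales, which is the "very small number" problem described above.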

Anyway, there are two reasons why I have avoided the standard names until now:

- I want to avoid cached thoughts. If you already know what energy and entropy are in a normal thermodynamic context, you risk not understanding the system properly in terms of stat mech.

- I want to extend stat mech beyond thermodynamics. I will be introducing a framework for understanding agents in the language of stat mech around the same time this post goes up.

Conclusions

- Maximum-entropy distributions with constrained $\mathbb{E}(x)$ always take the form $p_i \propto e^{-B x_i}$

- This $B$ represents the derivative $\frac{d\mathrm{H}}{d\mathbb{E}(x)}$; if $x$ represents energy, we can write $B$ as $\beta$

- $B$ is inverse to $T$ which, if $x$ is energy, is the familiar old temperature of the system

We have learnt how to apply these to one of the simplest systems available. Next time we will try them on a more complex system.
