What Value Epicycles?

post by Gordon Seidoh Worley (gworley) · 2017-03-27T21:03:54.479Z · LW · GW · Legacy · 2 comments

This is a link post for https://mapandterritory.org/what-value-epicycles-f8358678a23a

A couple months ago Ben Hoffman wrote an article laying out much of his worldview. I responded to him in the comments that it allowed me to see what I had always found “off” about his writing:

To my reading, you seem to prefer in sense-making explanations that are interesting all else equal, and to my mind this matches a pattern I and many others have been guilty of where we end up preferring what is interesting to what is parsimonious and thus less likely to be as broadly useful in explaining and predicting the world.

After some back and forth, it turns out what I was really trying to say is that Ben seems to prefer adding epicycles to make models more complete while I prefer to avoid them.

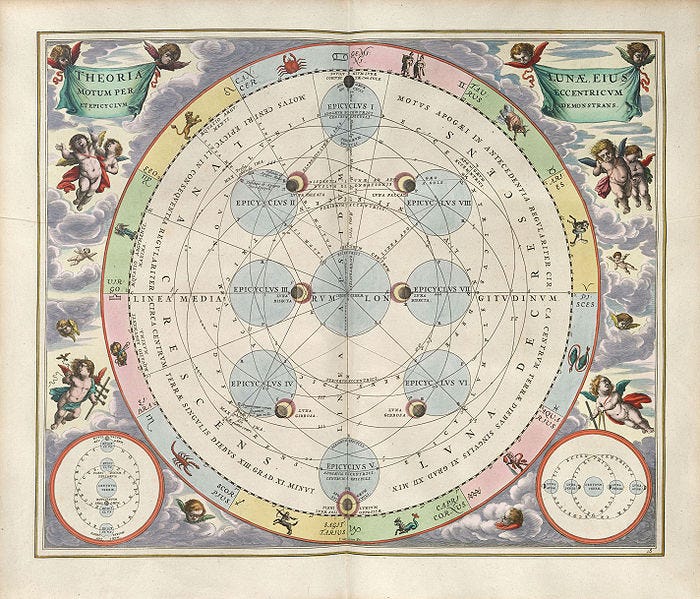

Epicycles come from Ptolemaic astronomy, which puts the Earth at the center of the universe with everything, including the Sun, orbiting around it. Making this geocentric model fit observations of retrograde motion, though, required the introduction of epicycles: small circles on which the planets moved, whose centers were themselves carried around Earth on larger circles. It’s now part of the mythology of science that over time extra epicycles had to be added to correct additional observational anomalies until the model became so complex that epicycles had to be thrown out in favor of the simpler heliocentric model. And although it seems this story is more misunderstanding than truth, “epicycle” has become the metonym for adding parts to a theory to make it work.

Epicycles have a bad rap. After all, they proved to be part of an incorrect model and are now associated with anti-scientific adherence to tradition because they were needed to support the cosmology backed by the Catholic Church. But I mean to use “epicycle” here in a neutral rather than pejorative way to simply mean making a theory more complete by adding complexity to it. It’s not necessarily bad to “add epicycles” to a model, and in fact doing so has its uses.

Epicycles let you immediately make an existing theory more complete. For example, if you have a unified theory of psyche, adopting something like dual process theory instantly gives you additional explanatory power by allowing the model to decompose concepts that may have been confused in a unified psyche. In turn, 3-part and 4-part psyche models add more epicycles in the form of more parts or subagents to give yet more complete theories that can explain the existence of contradictory thoughts. That these models lead away from more limited models that neuroscience has given us strong evidence for is beside the point because these models help people explain, predict, and change thinking and behavior.

But asking models to be useful to a purpose doesn’t necessarily imply adding epicycles. If our purpose is correspondence with reality then the principle of parsimony may serve better. The better known half of Occam’s Razor, the principle of parsimony, is to make things as simple as possible but no simpler. I learned this miserly modeling skill from Donald Symons in his seminal work on evolutionary psychology, The Evolution of Human Sexuality, where he warns against the temptation to construct convenient etiological myths that often implicitly and unnecessarily inject complexity into evolutionary processes.

To wit, it would be presumptuous to say that giraffe necks got longer to reach high leaves, since to do so is to suppose evolution is goal-directed. Instead what we can justifiably say is that giraffes with longer necks had a reproductive advantage that led them to leave more descendants than shorter-necked giraffes and thus longer necks became more common. The latter explanation might sound overly cautious to the point of being convoluted, but it differs importantly from the former by not implying additional complexity where none is needed, specifically by not making evolution into a teleological process. The simple evolutionary explanation is enough, though we may wish to further study why long necks were reproductively advantageous and may conclude it’s because they allowed giraffes to reach higher leaves.

The advantage of parsimonious explanations lies with probability: the fewer the propositions you multiply together the higher their combined likelihood can be, so a theory with less complexity is more likely to be true, all else equal. This is, incidentally, also the case against epicycles: adding them necessarily decreases the likelihood that a theory is true. Yet epicycles are often useful enough for us to posit their existence, so truth may not always be our highest value. It’s in this light that we must consider if simpler explanations are always better.
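The arithmetic behind this can be sketched in a few lines. This is only an illustration under a loud assumption: the propositions are treated as independent, and the 0.9 probabilities are made-up numbers, not estimates of anything. The helper name `joint_probability` is hypothetical.

```python
from math import prod


def joint_probability(probs):
    """Probability that every proposition holds, ASSUMING they are independent."""
    return prod(probs)


# Made-up numbers: each proposition is taken to be 90% likely on its own.
simple_theory = [0.9, 0.9]                    # two propositions
with_epicycles = simple_theory + [0.9, 0.9]   # two extra "epicycles"

p_simple = joint_probability(simple_theory)
p_complex = joint_probability(with_epicycles)

# Each added proposition multiplies in a factor no greater than 1,
# so the combined probability can only stay the same or shrink.
print(f"simple theory:  {p_simple:.4f}")
print(f"with epicycles: {p_complex:.4f}")
```

Even without independence the direction holds, since the probability of "A and B" can never exceed the probability of A alone; independence only makes the multiplication this simple.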

To the extent you want to be right — to have an accurate map of the territory — you by necessity have an equal want to not be wrong. When you learn something new, as much as you want to change your thinking to integrate the new information, you have an opposite want to find the new information already accounted for so that no update is necessary. You don’t want to have metaphorical comets shattering your epicycles all the time: you mostly want them to pass cleanly between the orbits because there is space in your model for them. But if you’re lost at sea and need to navigate by the stars, and positing the existence of epicycles is the only thing that allows you to find your way home, then suppose the epicycles exist with all your heart and worry about comets later when you’re out of existential danger.

And this perhaps explains the evolution of my thinking. Over time I solve more of the problems that used to vex me, and I suffer less; and as I find greater contentment I can more readily discard epicycles in favor of parsimony. That I still have a (relatively) complex theory of mind perhaps suggests I am still out at sea, trying to find my way home.

Update 2017–05–15

Val says something similar but with different emphasis.

2 comments

comment by gathaung · 2017-03-27T21:43:08.236Z

I think a nicer analogy is spectral gaps. Obviously, no reasonable finite model will be both correct and useful, outside of maybe particle physics; so you need to choose some cut-off for your model's complexity. The cheapest analogy is when you try to learn a linear model, e.g. PCA/SVD/LSA (all the same).

A good model is one that hits a nice spectral gap: adding a couple of extra epicycles gives only a modest gain in accuracy. If there are multiple nice spectral gaps, then you should keep in mind a hierarchy of successively more complex and accurate models. If there are no good spectral gaps, then there is no real preferred model (of course model accuracy is only partially ordered in real life). When someone proposes a specific model, you need to ask both "Why not simpler? How much power does the model lose by simplification?", as well as "Why not more complex? Why is any enhancement of the model necessarily very complex?".

However, what constitutes a good spectral gap is mostly a matter of taste.
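One way to make the spectral-gap idea concrete is sketched below. It is a toy under stated assumptions, not a recommended procedure: the singular values are made up, the function name `largest_spectral_gap` is hypothetical, and "gap" is arbitrarily taken to mean the largest ratio between consecutive singular values, which is exactly the kind of taste-dependent choice mentioned above.

```python
def largest_spectral_gap(singular_values):
    """Return (k, ratio): keep the top k components, where the drop
    from the k-th to the (k+1)-th singular value is proportionally largest."""
    s = sorted(singular_values, reverse=True)
    if len(s) < 2:
        raise ValueError("need at least two singular values")
    best_k, best_ratio = 1, 0.0
    for k in range(1, len(s)):
        if s[k] > 0:
            ratio = s[k - 1] / s[k]
        elif s[k - 1] > 0:
            ratio = float("inf")  # a drop to exactly zero counts as an unbounded gap
        else:
            ratio = 1.0           # zero followed by zero: no gap at all
        if ratio > best_ratio:
            best_k, best_ratio = k, ratio
    return best_k, best_ratio


# Made-up spectrum: three strong components, then a tail of weak ones.
spectrum = [10.0, 8.0, 7.5, 0.9, 0.8, 0.7]
k, ratio = largest_spectral_gap(spectrum)
print(f"keep {k} components (gap ratio {ratio:.2f})")
```

With a spectrum that decays smoothly, no ratio stands out and the returned cut-off is close to arbitrary, which corresponds to the case of having no real preferred model.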

comment by Gordon Seidoh Worley (gworley) · 2017-03-27T21:04:43.624Z

Here's the tl;dr money quote for you:

To the extent you want to be right — to have an accurate map of the territory — you by necessity have an equal want to not be wrong. When you learn something new, as much as you want to change your thinking to integrate the new information, you have an opposite want to find the new information already accounted for so that no update is necessary. You don’t want to have metaphorical comets shattering your epicycles all the time: you mostly want them to pass cleanly between the orbits because there is space in your model for them. But if you’re lost at sea and need to navigate by the stars, and positing the existence of epicycles is the only thing that allows you to find your way home, then suppose the epicycles exist with all your heart and worry about comets later when you’re out of existential danger.