LBIT Proofs 8: Propositions 53-58

post by Diffractor · 2020-12-16T03:29:34.423Z · LW · GW · 0 commentsContents

No comments

Theorem 4: exists for all infradistributions over a finite set , and equals

So, as a recap, here's how it works. . The Renyi entropy for is:

Where is taken over functions with (we have finitely many points, we're summing it up over all of those). And our task is to take the limit as tends to 1.

Our first step is to let , and with this reindexing, we can instead take the limit as tends to 0, and get the same answer. So, we're trying to find:

Well, we don't even necessarily know it exists. Looking at this, in the limit, if is such that , then will tend to 1 for all . And so, of that will tend to 1, and ln of that will tend to 0 from below, and hopefully the really tiny negative number cancels out with the fact that we're also dividing by a really tiny negative number. To do our approximation work, we're going to need that is bounded above 0 by something. We can show that the limit exists if we were dealing with a family of that are bounded above 0.

Accordingly, our proof strategy is going to be getting some bounds (for all sufficiently close to 0) on how much the results differ if we're taking the sup over all appropriate , vs if we're looking purely at the that are bounded above 0 by some (small, but not varying with ) number. Then, we can get some upper bounds and lower bounds, which we will be able to show converge in the limit. The problem is that the bounds won't be tight enough to produce the same limit, because we're blowing things up with a division by . So, all we're going to be able to conclude is that the relevant sequence has all of its limit points lying in an interval. However, the lower our "bound above 0" cutoff is, the tighter the interval will be. So, if we take the limit as our "bound above 0" cutoff shrinks to 0, the interval will shrink to a point, and we will have shown that the limit does in fact exist.

Let's get started on our upper bounds and lower bounds, respectively. Fix some which, importantly, won't vary with (yes, this will lead to a bit of weirdness where we start looking at behavior when ). For notation, is maximizing which fulfill the relevant property of . Accordingly, should be less than 1 divided by the number of possible finite outcomes. So, we can start establishing our upper bound as:

And the natural logarithm is monotonic, so

And then we multiply both sides by a negative number, flipping the sign, so:

And bam, we have an upper bound. The lower bound is significantly more tricky. Let's start with our first question. Given a , how can we adjust it to a that fulfills that property? Well, just have:

We should check that A: this still adds up to 1, and B: that when , . For later, we'll also need to check C: what's the critical value where this transformation switches from increasing the value to decreasing the value? Let's hit A first.

Excellent. For B, we can quickly check that this function is still in range by inspection if is 1, because we're dividing a number by a slightly bigger number. For being above , observe that:

And finally, let's check the threshold where this starts moving the value of the function down instead of up. Instead of having to do a bunch of fancy equations, let be the uniform function, with function-mass on each outcome. We're adding an equal amount to everything, and then dividing by some amount, so we get another uniform function. Which, to sum up to 1, must be unchanged. so is the critical value where the value of our start decreasing in value (if they're above it), or increasing (if they're below it).

Armed with our way of translating functions so that they do indeed fulfill our desired bound, let's look at the difference between: and

To begin with, by monotonicity,

then, by the fact that we have a finite Lipschitz constant for infradistributions when the associated function is bounded above 0. Let be small enough that , this makes the Lipschitz constant not depend on .

Now, let's try to evaluate the distance between those two functions, and how it scales with . The distance between functions is the maximum difference in values they assign to input points. Remember, if , has an equal or higher value, so the supremum is gonna mimic there. But for everything else, is actually going to be higher, and the supremum will mimic instead. So, we have:

Now, what we really want to study is how that above quantity varies with . Fortunately, with is an analytic function at , so we can take the Taylor expansion of both terms to see what's happening around 0 in terms of .

And, graphing the function in the sup with Desmos, with as the variable, and and as sliders, it's always maximized at 1. So then we get:

I should note something about the big-O notation. From trying it out on some test values, the coefficient gets larger as decreases. So we don't have to worry about the coefficient in the big-O blowing up unboundedly, the lower bound on our possible tames it. Putting it all together, we get (for sufficiently close to 0),

And our big-O doesn't depend on what q happens to be. Given any q at all, when we rescale it to get our , our analysis always works until the big-O terms start manifesting, but they're biggest for the smallest 's, and the lowest our gets is , so we can pick a sufficiently large constant to make everything work regardless of the . Also, our big-O absorbed the Lipschitz constant. Now, because our big-O doesn't care about what is, we have:

Pretty much, since the last two terms of our bound are uniform in , and all our since we designed it that way, we get the above bound. Now, we can take the to get

and multiply to get:

Thus, our upper bound on the sequence as a whole is:

and our lower bound on the sequence as a whole for small is

Let's show convergence for that top one, as it'll eventually let us crack the bottom one as well. Our task now is to show upper and lower bounds for the sequence (as )

Augmented with our nice bounds on how low can be, let's see if we can find some other function that's close to for near 0, so we can swap out for that.

Well... if we take the Taylor expansion of w.r.t. around 0, then we get . So what if we swapped out for ? The second part of that is negative, so it's below 1. And, since, for all , , once we get to , it's guaranteed to never undershoot 0 and be a legit function. Let's assess the difference between the two functions:

Again, the reason this works is, because we have a uniform lower bound on our , they're all in , which bounds how bad the big-O constants can be, so this difference is uniform across our . The difference between and is . By Lipschitzness of on functions bounded above (which happens for low enough ), we can transfer this difference outside the , and the Lipschitz constant absorbs into the big-O, so:

and then, transferring this to all (the big-O is uniform), we have:

and so, we get a lower bound where

Now, for the upper bound. This is easy, because by graphing, we can see that is always true if and . Thus, by monotonicity,

and, again, transferring this to all , and then taking the and multiplying by our negative constant, we have:

Alright, we've got some bounds. We can keep poking more at reexpressing the bounds, but remember that since we still haven't shown that anything in particular converges, we're gonna keep getting bounds on our bounds on our bounds. At some point we need to ground out and get that some actual limit exists, and use that as a tool to solve all the rest of the bounds.

Let's look at for inspiration. We can notice something interesting. For a particular , we can perfectly reexpress this as , where . Our analogue of summing to 1 is , and our analogue of being in is that . In fact, all of this form correspond to a , and vice-versa, just have . So, using as a shorthand for "we're selecting among that fulfill these properties as that's isomorphic to selecting a ", we would be able to go:

Now, with this reexpression, fixing a particular , are we able to solve the following equation?

We're just slicing off the smallest nontrivial bit of the problem we can to hopefully get one of these damn bounds to converge to something. And it turns out, we actually can solve this one! The teensy little problem is that L'hopital's rule only applies to differentiable functions, and this... isn't exactly differentiable. So we have to dig deep into the guts of the proof behind L'hopital's rule in order to show that applying the L'hopital procedure pretty much solves this, even though our function isn't differentiable (remember, h is Lipschitz, not differentiable). Once we've solved this, we'll be able to start chaining backwards and solve everything.

Our first order of business is to show that , as a function of is differentiable almost everywhere, concave, and monotonically decreasing. First, for concavity of , observe that:

And, as gets bigger, by monotonicity of , gets smaller. So, is monotonically decreasing, and concave. Now, from math stackexchange, the composition of a concave function with a monotonically increasing concave function ( in this case) is concave. Thus, is monotonically decreasing in , and concave. It's 0 when , and slopes down into the negative.

Now, any line from a point on the graph of to another point on it must have a very important property. It slopes down, since this function is monotonically decreasing in . So, given some and , there's a linear function with where, regardless of ,

(this is just the line between two spots on our resulting concave composite function). And then we can go:

The first inequality is by concavity for in . Multiplying by produces:

and, then, I'm gonna explain this following inequality in a bit, where it came from.

So, the equality makes sense, we just use the link between our line and ln of , see above just under "net takeaway". The inequality... what the heck is that about? Well, is a parabola opening down, it's concave. So, plugging in for the produces a bigger value than .

Ok, so our equation as a whole is concave. It's also monotonically decreasing because (ln of of...) starts off at 0 and just heads down from there, and we're multiplying by bigger and bigger positive numbers as increases.

And also, according to math stackexchange, concave functions can only be non-differentiable at countably many points! So,

the above function is concave in , is monotonically decreasing in , and only has countably many non-differentiable points. So as not to rewrite this each time, we'll switch our variable from to , and abbreviate this as , unpacking as needed.

Now that we know our function is nice, we can turn to verifying the important core needed to apply L'hopital's rule to solve the limit. And once that's done, we can start chaining backwards to solve our whole problem.

The key part that works for our function in particular, though it may fail in the general case, is an analogue of Cauchy's mean value theorem. We're about to make a bit of a mathematical leap here. We're going to let the derivative be set-valued at nondifferentiable points. As a toy example, consider the function . if , the derivative is well-defined. If , then, even though we don't have a derivative here, there's a left-derivative and a right-derivative. So, we can consider the "derivative" at 0 for to be any number between -1 and 1.

Now, the particular thing we need to go through the L'hopital proof for our specific case is: For and , there's a , and a possible choice of derivative (remember, we've got the set-valued derivatives now) where:

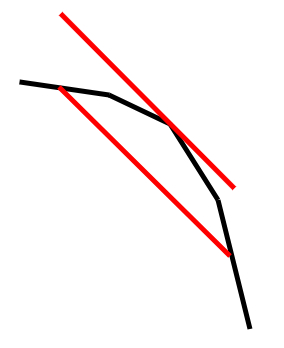

We can make the argument for this more intuitive by drawing the following picture.

Remember, our function \theta is concave, and note that that the left-hand-side of our equation is just going "what's the slope of the line" (with positive sign). From concavity, we can just translate said line up until it becomes a tangent line of our function, and that gets us our value of where there's a possible derivative that induces the above equality (because no matter what, and this also enforces the appropriate sign). So, we do indeed have our analogue of Cauchy's mean value theorem working here. Drawing a line segment between two points on the graph of , there's a point in between them where the possible derivative matches up with the slope of the line segment.

Now, define the following two functions:

The inf and sup is also taken over possible choices of derivative, by the way. And so, regardless of our choice of and , as long as , from our analogue of Cauchy's Mean Value Theorem,

Now that we've established this, lock in place and let limit to 0.

That last equality was because approaches 1, so by normalization for , approaches 1, so approaches 0, and multiplying by dosn't change that, and the bottom doesn't limit to 0.

So, we have our result that, for all our relevant ,

And now we'll use the squeeze theorem. So, what's the limit as heads to 0 for and ?

Well, the former is:

and as gets incredibly close to 0 (forcing to do so as well), the turns into 0, and the turns into 1. So, this produces:

And now, since is concave in and monotonically decreasing... Well, no matter which slope we choose for the nondifferentiable points, the shallowest possible slope is at 0. The slope is gonna be negative. Multiplying it by a negative means that we're trying to minimize a positive number. So, we want the shallowest slope we can possibly get, which would mean plugging in. Bam, no dependence on anymore.

And, consulting the Wikipedia page for the Gateux Derivative, this is the Gateaux Derivative of at 1 in the direction of !

So, we finally have solved one of our limits, it's:. Now, what about ? Well, a similar analysis applies.

and as gets incredibly close to 0 (forcing to do so as well), the turns into 0, and the turns into 1. So, this produces:

And now, since is concave in and monotonically decreasing... Well, the slope is shallowest at 0, and steepest at itself. The slope is gonna be negative. Multiplying it by a negative means that we're trying to maximize a positive number. So, we want the steepest slope we can possibly get, which would mean plugging in. So, we have:

Now, the sup in this case is just sup over derivatives, we know which to put in. In particular, since is concave in and monotonically decreasing, the steepest possible derivative we could have is the derivative where the nearby point approaches from higher .

Now, remember, is a concave function and monotonically decreasing (in ). If we graphed the derivative (in ), it'd be monotonically decreasing and always at 0 or less. There's discontinuities in the graph of the derivative, the graph of the derivative would look like a staircase going down. But remember, there are only countably many discontinuities. We're taking the lowest value of the derivative (read: furthest away from 0), so we can turn it into a (discontinuous) function where, at the "stairstep" discontinuity points, we stay at the bottom of the stairstep. And so, the question is, "if we stick to the bottom of the step at points where there's a step jump, and travel towards 0 ie goes to 0, do we have a limit?" Well, the value of that "staircase" derivative function is monotonically increasing (going from well below 0 to less below 0) as goes to 0, and it's got an upper bound of 0, so yeah, there's a limit.

But, in order to identify the limit as goes to 0 of the lowest possible derivative with just "the derivative at 0", we've got a bit of a problem. There's some free choice in which derivative value to assign at discontinuity points. Given free choice in the matter, do all those choices lead to hitting the same value at 0? Well, let's say we took the upper value of the staircase. Given any where there's a discontinuity, there's a smaller where the derivative is well-defined (because there's only countably many points where there's a discontinuity), which attains a value closer to 0 than the closest-to-0 value on the stairstep, because our derivative function stairstep is monotonically increasing as ticks down. So, even the "take the upper value for each stairstep discontinuity" function can have any particular value exceeded by a sufficiently low value for when we take the lower value for the stairstep discontinuities. So, it has the same limiting value. Which, again, is the derivative at 0. So, we get:

Alright! We got the same value for an upper bound as a lower bound! Awesome! Now we can get started solving some limits, finally! From here on out, we'll do a lot of backing up to stuff we've already shown. First, we've already shown that:

and, in the limit, both the left-hand side and right-hand-side have the same limit. Namely, . The Gateaux derivative of at 1 in the direction of . So,

Alright, we've got our first toehold where we were actually able to solve a damn limit. Let's back up a bit and try somthing more ambitious. Let's attempt to solve

Again, we're going to impose upper and lower bounds. The tricky part in this case is that the previous limit was just for a particular function. Once we start taking the supremum over a bunch of different functions, we can't necessarily guarantee that they all limit at the same rate. But, let's try to reduce it to the case of one particular function.

To begin with, regardless of and , . Why is this the case? Well, the former is a concave function, monotonically decreasing, that starts at 1 where . And the latter is the tangent line to the former function at . So, the tangent line lies above the function. Thus, we can go:

and then, since all the Gateux derivatives are 0 or less, we can move the sup inside (changes nothing), and get:

Ok, cool, we've got a lower bound. Now, what about an upper bound? Well, pick an where is extremely extremely close to . We can get:

And bam, we have an upper bound.

Now, let's take the limits of our lower bound and upper bound. Our upper bound limit is:

Our lower bound limit is:

But fortunately, that supremum doesn't change with ! It's just a constant. So the function inside the is continuous and differentiable, and so is everything, so we can solve this with vanilla L'hopital's rule.

Sadly, these two bounds are different. We only have that

Or, heck, the limit might not even exist! But all limit points must be in that interval. But... this argument worked independently of our choice of . So, we can select it so the derivative is as close as we want to the supremum derivative! No matter how tight the interval is, we can always back up, pick a different , and make the interval smaller! Thus, the limit must exist, and we have:

Bam, that's another limit out of the way. Going back in the proof, we showed earlier that

(it was just a reexpression), so that nets us another limit,

Now... let's back up to showing what

is. From earlier work, our upper bound (for small enough ) was:

and our lower bound was:

Well, we know what the upper bound limits to already. We do need to check that the thing doesn't mess with anything. We're going to Taylor-expand about 1. The interval of convergence is so this Taylor expansion works for a bit away from 1, not just in the limit. Taylor-expanding the ln for the lower bound produces:

Now, converges to 0 as , so we can neglect all terms but the first (because any later term would converge to 0 as or faster, so even after getting blown up by dividing by , they'd still shrink to 0). And the error term in the lower bound, again, even after getting blown up, doesn't affect the limit. So, since our error term is too small to affect the limit, both the upper bound and lower bound limit to the same thing, . And so, by the squeeze theorem,

Ok, cool. That's another limit we were able to show to exist. But what about our original thing, back from the start of the proof, that we wanted to show? We wanted to solve

Well, our upper bound was:

and our lower bound was:

Again, our upper bound limits to , and our lower bound... well, hang on, there's a additional term in there, which is large enough to affect the limit. Again, doing the Taylor-expansion of around 1, and dropping all terms but the first since they decline as or faster and so don't affect the limit, our upper bound turns into:

Ok, so we know what that limit is. But for our lower bound, when we do the Taylor expansion (neglecting higher-order terms), we get:

And then using our result above, and cancelling out some stuff, the lower bound limit is:

So all we have is that lies in

Uuuugh. We weren't able to show that the limit exists, just that all limit points are in that interval. But wait a minute, we've got a finite width interval because we picked a ahead of time. What if we redo the argument with a much smaller ? Can we get this interval down to a single point if we pick smaller and smaller ? Well, yes.

To begin, our upper bound, , as gets bigger, is monotonically decreasing, and bounded below by . So it does have a limit. The argument for this is that, as \delta gets smaller, is getting bigger, so we're picking amongst more functions. Thus, we've got more freedom to make the Gateaux derivative close to 0 (the derivatives are always 0 or negative), and flipping the sign around, we manage to move our positive quantity lower (or it stays the same). So that takes care of the "monotonically decreasing" part. And obviously, no matter the , we have less freedom to pick a function make our derivative close to 0 than maximal freedom in picking said function. But is the limit point as shrinks to 0 actually equal to ? Well, we can pick a concrete function that attains a value arbitrarily close to that, and then get a that includes said function, so we do have:

Now, let's tackle our upper bound.

Ah good, we get the same bound. Therefore, since we can narrow our window width to 0 with decreasing , and regardless of , the limit points of our original sequence of interest are in the window,

Where the supremum is taken over that are in and , or and , related by . We're finally done!

Proposition 53: For cohomogenous infradistributions,

So, we already know from Theorem 4 that

So that just leaves the last inequality to show.

And because the infradistribution is cohomogenous, always equals 1, so we have

And we're done. Well, not quite, we still need that alternate characterization of the form:

The way that this shakes out is that we'll be using Sion's minimax theorem on the characterization of Entropy as:

In order to invoke Sion's minimax theorem to swap the order of the inf and the sup, we have four things to check. so we need to check that is a compact convex subset of a vector space. Yes, this is the case because only has finitely many points in it, that's one down, it's just a subset of . Also, , so we need to check that is a convex subset of a topological vector space. Convexity comes from convexity of infradistributions, and the minimal points of (due to cohomogenity being exactly those with ) must form a convex set, because you can't decrease the value any more and stay in , and the space of signed measures over , as well as the term, is embedded in (for suitably large ).

That just leaves two things. We need to check that for all ,

is continuous and concave (we'll actually show it's linear), and we need to check that for all , the function

is continuous and convex.

Continuity for both of these is obvious, because the cross-entropy is a continuous function. That leaves showing linearity for the first function, and convexity for the second one.

Use for your function

It can be unpacked a bit more as:

Then we can go:

And we're done with linearity there, linear functions are concave. Time for the next one.

Use for your function

It can be unpacked a bit more as:

Now, we can go:

Now, is a concave function, not a convex one. However, the negative sign flips it around to be convex, so we have:

So it's convex. We can now invoke Sion's minimax theorem and go:

And cross-entropy is minimized when , and equals the entropy of so we have:

And that's the last bit we have for this.

Proposition 54: For all infradistributions, , entropy is upper bounded by the logarithm of the number of states.

Looking back at the proof of Proposition 50, we can see that regardless of infradistribution, it holds up to here

And note that, in an infradistribution, always for minimal points, but the inf enforces that by taking the limit, so the entropy for general infradistributions can be reexpressed as:

And going through the proof of Proposition 50 again, the final more general formulation of entropy we get for arbitrary infradistributions is:

Now, cross-entropy is always non-negative, so that means

Further, the entropy is bounded above by , because, letting be the uniform distribution,

Therefore,

And we're done.

Proposition 55: For all infradistributions, entropy is concave.

Proposition 56: For all infradistributions, entropy doesn't increase after deterministic pushforward.

To begin,

The reason for that last inequality is that, regardless of and ,

so

So, by monotonicity,

So then, regardless of ,

so then this property transfers to the limit, and

and then for the supremum,

And then multiplying by a negative flips the sign of the inequality. That's where it came from. So, we're currently up to:

Now, where to go from here? Well... given a that sums to 1 over all points in , it's basically a probability distribution. We can define a that's supported over the image of as , just the pushforward. Or, more prosaically, . Ah, there's what we were looking for. Also, by normalization, .

Hm. Well, how many can we hit? Well, could be any probability distribution over . so, we'd be able to induce any probability distribution as long as it's support is in the image of . So, we can reexpress as:

and, by monotonicity, we can take the sup over more stuff since they'll always produce a lesser result.

Wait a second, these are only defined on the image of . But the values produced by only depends on what functions do on the image of . So, we should just be able to extend in an arbitrary way s.t. they still look like probability distributions over , that doesn't affect the values at all.

And, again, by monotonicity, we can just restrict back to "must sum up to 1".

Alright now, let's abbreviate this as:

And then we notice something interesting. This can be viewed as a function that maps an to a via , and then maps to . This composition form means we can reexpress it as:

And we're done!

Proposition 57: For cohomogenous infradistributions,

First, use our reexpression of what the entropy means for cohomogenous infrdistributions to go:

Now, from what the semidirect product looks like, the points in the semidirect product (or at least, this nabs all the minimal points) looks like selecting a from , and a selection function s that takes each and assigns it a minimal point from . Then just take and add on the term. So, we can rewrite the inf as "pick a from , then pick a from each , which we'll write as "

So, the resulting measure (not probability distribution!!) produced by that has the measure on the point being:

And so the total amount of measure present (our analogue of the term) would be:

And the actual probability distribution on the point , ignoring the term, would be

Thus, we can rewrite our entropy as:

We can cancel out and move the outside to yield:

And now we'll be abusing notation somewhat to economize on space, we'll be writing as and writing as . With these space abbreviations, we have:

and break up the sum to yield:

And since is fixed (from the perspective of the inside), we can go "ah, the optimal to pick doesn't depend at all on what or is, our task is just to make the chunk associated with as negative as possible to make the negative sum as a whole as large as possible" so we can rewrite as:

And then, we can rewrite as to get:

And now, we can start breaking down the accordingly. We can select any probability distribution over , so we could think of our choice of distribution as choosing a distribution over , and a bunch of conditional distributions over for each . With this reexpression, we get:

Now, since is always a probability distribution and that second ln term is a constant and doesn't depend on , we can pull it out and get:

Now we need to be really finicky about that last ln piece. It's negative. Swapping the constant in front of it for a 1 would make the inf chunk more negative, but then there's a negative sign in front, so the sup chunk would get bigger, and so would the inf chunk at the very start. So, we have:

And now that there's no dependence on which point was picked from , we can pull it out of the inf to yield:

Moving the negative sign inwards,

Moving the negative sign further inwards,

And abbreviating the cross-entropy, we have:

And now we can go "y'know, we can optimize the conditional probabilities individually when we see an x. Murphy's distribution and my distribution, once a particular x gets locked in, is disconnected from all the other x, so we can move that inf inwards". So, we can reexpress as:

And now we use our form for the entropy of an infradistribution to yield:

And then, by optimizing both components individually we can get

And then, using our form of entropy for cohomogenous infradistributions, and unpacking a bit, we have

Putting it all together, we have:

And we're done!

Proposition 58: For cohomogenous infradistributions, , with equality when the infradistributions are crisp.

By Proposition 57,

And then, is a special case of the semidirect product where is always , so this turns into:

And then, because there's points with in a cohomogenous infradistribution, we have:

This is only part of the proof however, we need to show that we have equality for crisp infradistributions, so we've gotta dig back through the proof of Proposition 57 and show that the two inequalities we have turn into equalities. Looking back through the proof, the first inequality we had was:

There's two critically important things to note. First is that, in the crisp infradistribution case, all minimal points have . Second is that, for a direct product, the target we're selecting points from on the second stage is always , and there's no dependence on in that selection. So, reexpressing the part immediately before the inequality, what we have is:

And then we can move the out to get:

Which is exactly the reexpression of that second thing that we'd normally have an inequality for. So, the proof of Proposition 57, for crisp infradistributions and the direct product, proceeds with equalities past the first inequality, and stopping right before the second one in the proof of Proposition 57 we had the line:

Reexpressing the first term for the direct product, and noting that we made it past the first inequality, it would be:

But then, the entropy of is just a constant, so you can pull it out and get:

And we're done, we've shown our result for crisp infradistributions!

0 comments

Comments sorted by top scores.