Handling schemers if shutdown is not an option

post by Buck · 2025-04-18T14:39:18.609Z · LW · GW · 0 commentsContents

Behavioral observations vs other ways of detecting scheming What does control look like if you need to keep deploying schemers? How does the prospect of needing to deploy known schemers affect the relative value of control research? What research should be done that focuses specifically on this? Sample-efficient ML Training recalcitrant policies A few other historical reasons for focusing on before-you-catch None No comments

What if getting strong evidence of scheming isn't the end of your scheming problems, but merely the middle?

In most of our research and writing on AI control, we’ve emphasized the following situation:

- The AI developer is deploying a model that they think might be scheming, but they aren’t sure.

- The objective of the safety team is to ensure that if the model is scheming, it will be caught before successfully causing a catastrophe.

For example, in our original paper on AI control, the methodology we used for evaluating safety protocols implies that once the developer is confident that the model is a schemer, they’re totally fine with undeploying the untrusted model.[1][2]

But that seems kind of unlikely. Even if AI companies find strong evidence that their AIs are trying to escape, they won’t necessarily stop deploying them. This might be justified: even a responsible actor might think that deploying a known-scheming model is worth the risks, because use of that model will allow them to drastically reduce the risk currently being imposed by the deployment of other AIs. And it might also happen even if it’s unjustified, as I discussed here (though in this case, it’s plausible that the developer will at least drastically increase the proportion of company resources going to mitigating scheming risk).[3]

Either way, it seems important to think through how mitigating scheming risk looks under the assumption that getting evidence that your AI is scheming doesn’t suffice for solving your problems, including the extreme case where getting evidence that your AI is scheming has no direct value. We must explicitly consider scenarios where developers knowingly deploy scheming models.

We’ve previously written [LW · GW] about techniques that you might use once you’ve caught your AIs red-handed trying to subvert your safety measures; catching your AIs red-handed is a particularly important mechanism by which you might become confident your model is a schemer. In this post, I’ll build on that to talk about some higher-level points: Why has our research mostly considered the situation before you’re sure the model is a schemer? How does the possibility that we will need to deploy models even if we know they’re scheming affect the value of AI control? Should we do research in advance that is specifically targeted at this scenario?

My conclusions are:

- It’s much easier to prevent the AI from egregiously attacking you (e.g. trying to backdoor your code or trying to launch a rogue internal deployment [AF · GW]) after you’ve caught one example of it attacking.

- If you are continuing to deploy known scheming models, my recommendation is basically to continue deploying with mostly the same control measures as you used before you caught it, but taking advantage of all the escape attempts you catch; I suspect this leads to a total of only 1-5x more risk than you’d already suffered due to deploying the model before catching the escape attempt, which might be worth it if the alternatives are sufficiently dangerous.

- After you’ve caught 10-1000 escape attempts, you probably have enough data to mostly resolve risk from escape attempts, either by training the policy to not do those escape attempts or doing something fancier.

(Thanks to Ryan Greenblatt, Adam Kaufman, Zach Stein-Perlman, and Julian Stastny for comments.)

Behavioral observations vs other ways of detecting scheming

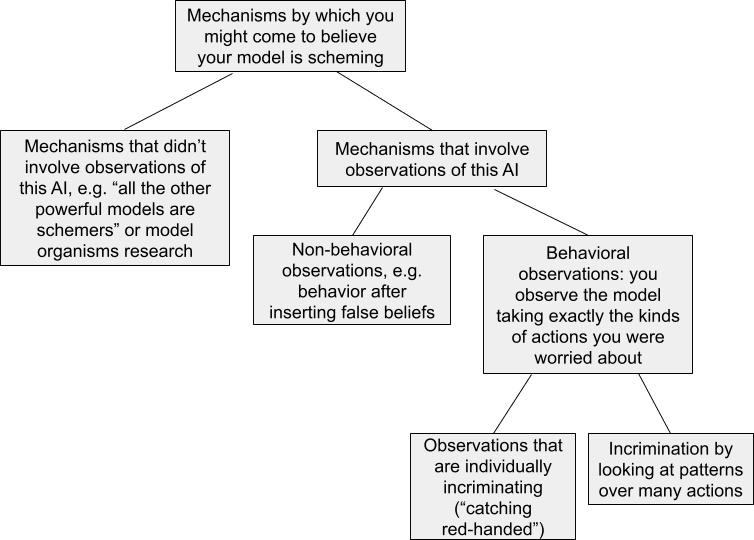

To start with, let’s talk about an important distinction. We often emphasize one particular mechanism by which you might become convinced that your model is a schemer: you observe it taking actions that are strong evidence that it’s a schemer. This can happen two main ways. The simple one, that I’ll emphasize here, is what we’ve often called “high-stakes failures” or concentrated failures [AF · GW], or recently “acute failures”. This is when you catch it taking a single action that is, upon investigation, clearly an attempt to cause an unacceptable outcome, e.g. a rogue deployment. I’ll call such actions “attacks”, and say the model is “attacking” when it takes such actions, and I’ll call catching them “catching the AI red-handed”.

The other possibility is: the model exhibits behavioral patterns over the course of a large number of actions that are strong evidence that it’s trying to sabotage you. For example, it seems to be carefully manipulating research results, or repeatedly and consistently making choices in software it writes that causes the software to be more likely to have security vulnerability. In this case, no single action is overwhelming evidence that the model is a schemer, but in aggregate you get a big update. This is similar to the concept that we’ve called “low-stakes failures” in the past; Rob Wiblin suggested calling them chronic failures here; we’re still in the market for a good term for this concept. These failures are generally much more confusing to reason about than attacks, for a bunch of reasons, so I often just discuss attacks for simplicity.

Those two possibilities are what I’ll call behavioral observations: you’re directly observing the behavior that you were concerned about. But there are other non-behavioral ways you might become highly confident that your model is scheming. For example:

- Maybe you’ve learned that lots of other models, especially models you trained similarly to this one, are schemers.

- In its chain of thought, your model often talks through alignment faking reasoning (but you’ve never found examples where it concludes based on this reasoning that now would be a good time to attempt to escape).

- (This model is unlikely to pose much of a threat, because you can probably just monitor the chain of thought during deployment.)

- You did some type of red-teaming where you put your model in some altered state that allowed you to see evidence of its misalignment that isn’t a representative example of how it will try to escape. As a simple example, perhaps you somehow caused the model to believe that the control techniques are always shut down for maintenance on Sundays, and observed that the model immediately tried to escape when it believed it was Sunday.

- Maybe this model told you that it was a schemer when you asked, perhaps because you made a deal with it (as discussed here). (If this happens, it’s less likely that the model will try to betray you.)

Here’s a diagram summarizing the relationships between those possibilities:

There are three main reasons we’ve focused on behavioral observations.

Firstly, the only truly necessary difference between scheming and non-scheming models (that doesn’t rely on us being able to make use of white-box access) is that scheming models have to take actions in pursuit of seeking power. In particular, if the models want to attempt attacks (as opposed to following a strategy where they only ever engage in chronic failures), they must (at least occasionally) take actions in pursuit of their non-aligned goals that are crucially different from how they would have behaved if they weren’t scheming. So looking for malicious actions seems like the main strategy for detecting scheming that really seems like it has to be in-principle possible. In contrast, there’s no fundamental reason why the red-teaming via putting the model in an altered state “has to work”—perhaps the only mechanisms for putting models in altered states also cause them to scheme, or cause them to not scheme, or something else. And similarly, there’s no reason why you’ll necessarily observe some other AI scheming before you train and deploy this one.

Secondly, having specific examples of malign model actions—especially examples from this AI—puts you in a better position. As we discuss in the “Few-shot catastrophe detection” section here [LW · GW], there are a bunch of strategies you can employ to reduce the risk of your AI escaping given a few examples of it attempting to escape. For example, you can learn about the strategies the model tends to employ and focus countermeasures on those, and you can use sample-efficient ML techniques to improve classifiers to make it more likely to catch future attacks. So even if finding evidence that your model is scheming has no direct value, it’s pretty likely we still want to analyze techniques in terms of how often we catch the model (e.g. the analysis here [LW · GW]).

Finally, as Ryan Greenblatt argues here [LW · GW], behavioral evidence is likely to be much more legible and persuasive than most of these other types of evidence.

Sources of evidence about whether your model is scheming other than behavioral observations differ in the extent to which they put you in a better position.

What does control look like if you need to keep deploying schemers?

Here’s the basic game plan for preventing an attack from causing catastrophe, under the assumption that detecting scheming has no direct value.

- The first time you catch an attack (which might be before you actually deploy the model), if you didn’t already know that the model was scheming, you update your deployment to err more on the side of safety. (That is, you should now be more willing to do less with your AI, in order to take increased inaction risk (or otherwise reap less of the benefits of deployment) in return for decreasing your action risk [AF · GW].)

- The first and later times, every time you catch the AI trying to cause an acute failure, you exploit access to that action to reduce the probability of success of future attempts.

- After you have a bunch of examples of acute failure attempts (including the possibility that you proliferate [LW · GW] detected attempts), you train your policy to not take those actions.

We can adapt the model from Win/continue/lose scenarios and execute/replace/audit protocols [LW · GW] to analyze this. In that post, we assumed that the developer is safe after they catch the AI attacking once.

Your probability of avoiding an acute failure here is:

P(safety) = P(catch acute failure before one occurs, given that you have access to zero examples)

× P(catch acute failure before one occurs, given that you have access to one example)

× P(catch acute failure before one occurs, given that you have access to two examples)

…

So the core question here is: How much does your probability of catching improve given a particular number of examples?

To discuss that, let’s go through the main ways you can take advantage of having examples of the AI trying to escape. (This list is somewhat restructured and more focused on reducing risk from continued deployment compared to the list here [LW · GW].)

- Machine learning.

- Training classifiers.

- For example, you could make coup probes [LW · GW], which are linear probes trained to detect whether an untrusted model is currently attacking. Before you’ve caught any attacks, you’ll have to train these using off-policy examples, because by assumption you don’t have any on-policy examples. But the probe is likely to be much more performant when you train it with on-policy examples, even with just a few of them.

- You could also train your trusted models; this seems less promising to me, because it’s not obvious that trusted models will be able to reliably detect attacks even given arbitrarily many on-policy examples.

- You could also train your untrusted monitor; this is similar to coup probes but runs into more confusing questions about what happens when you try to train schemers to do things they don’t want to do.

- Training the policy. After you’ve caught it enough times, you can presumably just train the policy to either not attack you, or to immediately report itself when it does so.

- Training classifiers.

- Taking advantage of observations.

- Patching security vulnerabilities that the AI exploits. When the AI tries to attack and you catch it, you can try to let it think you didn’t catch it, and let it continue to take actions under close supervision. If the AI knows about security vulnerabilities that it wasn’t telling you about, it might try to exploit those. Watching this gives you the opportunity to patch those vulnerabilities (or convert them into tripwires/honeypatches).

- Observing and exploiting collusion signals. The AI is much more likely to be able to defeat countermeasures like untrusted monitoring or behavioral red-teaming if it has some way to signal to itself that now is the time to strike (see here [AF · GW] for more). If we have examples where the AI attacks, we might be able to infer from those what the model’s collusion signals are (especially given that we can do intervention experiments where we change the provided signal and see whether the other instance of the untrusted model still colludes, as discussed under proliferation here [LW · GW]). This might totally resolve your problem, because it lets you train an untrusted monitor to be honest, or train a trusted model to detect presence of the collusion signal.

- Confronting the model. We might be able to use the examples where we caught the model as an unfakeable signal to the model that we are now confident that it’s scheming. This might make the model more willing to make deals with us (which I’ll note again we’ve discussed here).

All of these are more promising because of the possibility of proliferation [LW · GW].

I think these seem extremely helpful. I think they require different numbers of observations:

- Observing and exploiting collusion signals: It’s plausible to me that even a single example of a collusion signal allows you to almost entirely resolve risk from attacks, because you might naturally be able to proliferate it substantially and then train a version of the untrusted model that will reliably report future escape attempts.

- Patching security vulnerabilities: If the model either only knows about a few security vulnerabilities, or if you can proliferate it into revealing many of the vulnerabilities it knows, you can maybe introduce tripwires that then have a high probability of catching subsequent escape attempts.

- Training classifiers: In preliminary experiments I’ve seen, linear probes trained off-policy are substantially more performant after just one on-policy example, and continue to improve with more examples.

- Training the policy: Surely this works eventually, but it might require hundreds or thousands of examples (though proliferation might allow you to succeed with fewer than this many escape attempts).

Another reason that the situation post-catching might be less risky than you’d expect is that the schemer might adopt a very low attack rate. One reason to adopt this strategy is that it might believe your situation is much worse if you fail to detect misalignment until deployment, perhaps because if scheming is easily discovered, iteration to remove such behavior could become significantly simpler. Under these conditions, the model might rarely attempt escape. (However, if the model learns that you caught it, it might decide to behave much more brazenly, attacking more often and sandbagging more aggressively.)

My takeaway here is that the risk from attacks is only 1-5x more than you’d incurred pre-first-catch, if you keep deploying the AIs almost as aggressively once you’re sure they’re schemers.

My current opinion is that most research should focus on the situation before catching red-handed, basically because the situation after catching red-handed is likely to be similar except that it will be easier in hard-to-predict ways.

How does the prospect of needing to deploy known schemers affect the relative value of control research?

Here’s a breakdown of research that you could do related to risk from scheming, and how I think their values are affected if you assume that we’ll have to deploy known schemers.

- Techniques to reduce P(scheming). Looks absolutely much better, because the situation conditional on scheming is now worse.

- Techniques that inform you about whether models are scheming (e.g. model organisms research, control techniques). Looks absolutely worse, but still isn’t useless (because you’ll make different tradeoffs when you deploy the model if you know it’s scheming, even if you’re unable to afford undeploying it).

- Some people who research model organisms think that model organisms will be very helpful for reducing P(scheming); I’m much more skeptical of that than they are, because I think model organisms too dissimilar to real scheming for us to expect techniques to transfer from the model organisms to the real schemers.

- Techniques that make it safer to deploy models that you know to be scheming (e.g. control techniques). Looks somewhat better, because you now believe you’ll have to rely on these methods for longer. But you’ve lost one of the plausible benefits of these techniques, which is that they might let you detect scheming and then shut down. So these techniques look absolutely better, but gained less than “reduce P(scheming)” did.

Note that techniques that give you a chance at catching the AI red-handed fall into both the second and third category.

In summary:

| Research Area | Value if continuing to deploy schemers is plausible | Recommended Action |

| Reduce P(scheming) | Much higher | Prioritize more |

| Detecting scheming early | Somewhat lower but still valuable | Maintain attention |

| Safer deployment despite scheming | Moderately higher | Increase slightly |

What research should be done that focuses specifically on this?

Sample-efficient ML

One crucial question here is how to train classifiers given small numbers of examples of the model attacking, as described here [LW · GW]. I’m aware of one project on this at the moment. (Any project that constructs probes without any on-policy examples can be converted into a study of this by additionally assuming access to a small number of on-policy examples.)

Training recalcitrant policies

One crucial question that arose here is: what happens when you take a schemer and train it on some small number of examples where it did something that you know you disapprove of? This could either be cases where it purposefully took a really bad action that you detected, or cases where it purposefully didn’t do something (like pointing out that another instance was attacking) that you wanted it to do.

This is different from how we usually think about scheming. Usually, when we’re analyzing scheming, we talk about cases where the schemer isn’t receiving any penalty as a result of being a schemer, because (due to deceptive alignment) the schemer gets as high a reward as it would have if it were aligned[4]. The core argument for scheming is that if the schemer is behaving as well as an aligned model would have, there’s no particular reason to think that the usual process of training would remove the schemer’s misaligned goals.

On the other end of the spectrum, if we had a huge number of examples where the training process penalized a schemer for its scheming, then the basic argument for concern has been defused.

But here, we’re in an awkward middle ground, where neither of those limits applies: if we train the schemer on these examples, it will get some gradient pressure against its behavior, but the amount of gradient pressure is small compared to the total amount of gradient pressure applied over the course of training. This is a question about sample efficiency, which varies between domains and models. So if we want to make predictions here, we’ll have to extrapolate from empirical evidence, rather than relying on basic properties of SGD.

The paper that tackles this sample-efficiency question most straightforwardly is Stress-Testing Capability Elicitation With Password-Locked Models (discussed on LessWrong here [LW · GW]); this tackles the “purposefully do something” case, so is potentially uninformative for the “purposefully don’t do something” case.

When we’re thinking about whether to train the model on examples where it attacked, we need to think about the model’s capacity for out-of-context learning. That is: when we train the model on a particular input, it might be able to (to some extent) explicitly reason in other contexts about the fact that we’d trained it on that example. We’d probably prefer that the policy didn’t know we’d caught it trying to escape, because we don’t want the policy to learn to attack more sneakily, or to switch strategies to attacking in a different context; this means we might not want to train the policy on the example we found, preferring to instead train a classifier without updating the policy.

A few other historical reasons for focusing on before-you-catch

Here are a couple of other reasons that we used to focus more on the before-you-catch situation than we currently do.

We first started researching AI control in the innocent days of mid 2023. Our timelines were longer, there seemed to be appetite for AI regulation at the state and federal level, and AI companies were semi-seriously talking about requiring high-assurance externally reviewed arguments that their deployments didn’t pose catastrophic risk. Back then, one framing for control was: it’s just one line of defense that you should have in conjunction with alignment. That is, you only deploy if you have both an alignment argument and a control argument. If that’s your framing, then obviously once you’ve caught the AI trying to escape, your alignment argument has been invalidated and so you have to undeploy. I now put less emphasis on this possibility, because I think it’s less likely that AI companies will produce reliable arguments for safety when deploying catastrophically dangerous AIs.

Here’s a second perspective that also leads you to focus on the situation before you catch. Imagine a regulatory regime in which the AI company needs to produce a safety case whenever they’re doing a new deployment. This would take the form:

- We’re planning to follow protocol X.

- If we follow protocol X, then the total catastrophic risk caused by this deployment is less than 2% [or whatever number you think is plausible].

- We justify this with evidence Y.

When making this argument, if you don’t have concrete evidence that your models are scheming, I think it might make sense to ignore the possibility of deploying known-scheming models for simplicity. That is, your protocol X contains a provision saying “If we get strong evidence of scheming [according to some concrete specification], we’ll stop deploying the model.”

If that deployment gets approved and you later catch your AI attacking, you’re allowed to submit another application to deploy with a new protocol, and the safety case for this protocol must incorporate the fact that you are now confident your model is scheming. You could have included this contingency in the original protocol X, with evidence required to make the safety case. But it’s potentially more efficient to skip this effort until the need for it arises, for two reasons: firstly, the model might not be scheming, and secondly, as discussed above it’s hard to predict how the situation will look different after catching red-handed.

Thirdly, deploying known-scheming AIs seems more dubious from an AI welfare perspective, especially if the AIs never consent to being used. See here or here [LW · GW] for a bit more discussion of why I don’t think AI control is necessarily immoral. In practice I expect AI companies to be pretty negligent about AI welfare and rights.

Finally, when we first started talking about AI control, people in the AI safety community often thought that we were advocating recklessness. Sometimes their attitude was that we were too pessimistic about the extent to which AI companies would invest in mitigating misalignment risk. Sometimes their attitude was that even if we were descriptively accurate about investment in misalignment risk mitigations, we didn’t want to open the Overton window by describing such reckless approaches. I think that the passage of time validates our pessimism about investment into misalignment risk mitigation. We haven’t gotten conclusive evidence on whether it’s a strategic error to talk openly about the reckless strategies that are likely to be among the best interventions on the margin, but I still think that the benefits of increased research on realistic safety interventions outweigh the harms.

- ^

Specifically, this arises because of our definition of our two metrics, safety and usefulness. Safety is about the probability of a catastrophe occuring, and usefulness is about the number of tasks you complete correctly. Safety is measured using the red-team-produced attack policy as the implementation of the untrusted model, while usefulness is measured using the original untrusted model. So safety and usefulness are measured with different experiments. When these experiments are being run, the protocol does not have direct access to which experiment it’s in. But if it finds conclusive evidence that it’s in a safety experiment (e.g. because it catches an attack attempt), it now is sure it’s not being evaluated for usefulness, and so doesn’t care about the proportion of tasks solved correctly on this run.

- ^

By “stop deploying”, I mean “revert to only giving model access to a small set of people, and ensure that those people know the model can’t be trusted”. We shouldn’t totally stop the deployment of the model.

- ^

A closely related situation that leads to similar dynamics is:

- An AI company catches AIs trying to escape.

- They try to understand and address the cause of misalignment, without any particularly principled strategy to do so.

- They train a new model with the fix and deploy it. (And now a reasonable observer would have P(scheming) ~= 60% or something.)

- ^

Ignoring exploration hacking for simplicity.

0 comments

Comments sorted by top scores.