AGI & Consciousness - Joscha Bach

Post by Rahul Chand (rahul-chand) · 2024-10-08T22:51:50.841Z

Contents

- Why consciousness?

- Is scale not all you need?

- What is Consciousness?

- Building onto consciousness

- How does it all relate to AI?

- Inside-out vs. Outside-in

- Limitation & Abilities of LLMs

- Are LLMs conscious? How can we test it?

- How does consciousness help us learn?

- How does consciousness emerge?

- How do we test for consciousness and Ethical considerations?

In this post, I cover Joscha Bach's views on consciousness, how it relates to intelligence, and what role it can play in getting us closer to AGI. The post is divided into three parts: first, I cover why Joscha is interested in understanding consciousness; next, I go over what consciousness is according to him; and in the final section, I try to tie it all together by connecting the importance of consciousness to AI development.

Why consciousness?

Joscha's interest in consciousness is not just about its philosophical importance, nor about studying to what extent current models are conscious. Joscha views consciousness as a fundamental property that allows the formation of efficient, intelligent, agentic beings like humans, and for him, understanding it is the key to building AGI. This raises three questions: 1) What does Joscha think is wrong with our current models and ideas? 2) Which ideas and models does he think are good? 3) How will consciousness take us there? I cover the first two questions here, and once we have gone through the "What is Consciousness?" section, I will try to answer the third.

Is scale not all you need?

Joscha has two issues with current models. First, they depend too heavily on brute force and scaling for their abilities, and as we keep scaling, data becomes scarcer. Second, even though they use more compute, data, and energy than our brains, they are still not smarter than us at many tasks. According to him, there seems to be something in our own minds that allows us to bootstrap ourselves into out-of-distribution generalization: to generate new solutions to problems that are not in the training data, based on a far sparser description of the world than the one we give to language models.

He wants to move away from this paradigm and develop models that are smaller and more focused. Unlike current models, these would not be trained on the entire internet. Instead, they would reason and learn from first principles, which would let them learn in a way similar to a human. For example, given a book, such a model could extract the abstract ideas and use them to learn further and become more capable. To achieve this, we need to ask ourselves how minds work in nature.

What is Consciousness?

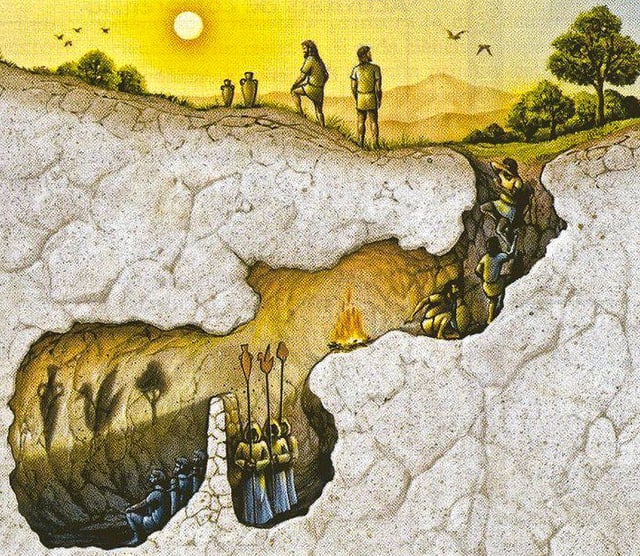

Joscha looks at the works of Aristotle and his "engineering" insight into the human mind. For Aristotle, there are three main things:

- Soul is the essence that distinguishes living objects from inanimate ones. It is inherent in and imprinted on living matter. This is akin to software: a process that guides living beings and uses their physical nature to implement itself.

- Distinct from the soul is nous, the ability to reason in a universal manner. For Aristotle, only humans have nous.

- Perception is sensory stimulus in living beings. Though it helps nous (our ability to reason), nous is independent of it, since nous works on more abstract ideas.

So why does Aristotle's view matter? First, for Joscha the categories map reliably onto what we know today. For example, animals have soul but not nous (so many of them are conscious but not smart), while LLMs have nous to a great extent but not soul (so they are smart but not conscious). Second, Aristotle's view of the human mind helps us sidestep the "hard problem of consciousness". How? In modern mind-body dualism, if you see a red apple, you describe it in two ways: a certain frequency of light hitting your retina and being translated into electrical signals, and a subjective experience of "redness". How does the physical lead to the subjective? For Aristotle, this is not an issue, since for him the subjective experience of "redness" is by definition what happens when the soul interacts with its physical substrate. So how does Joscha build upon this?

Building onto consciousness

For him, there is a distinction between physical objects and our experiences of them. We cannot experience physical objects; we can only experience their representations inside our minds. Going back to the apple example: when we see an apple, there are physical changes in our neurons, which are mapped to abstract representations. These abstract representations have causal effects on other abstract representations (like hunger), which are then mapped back to our neurons.

How does all of this connect to consciousness? According to Joscha, our neurons exchange messages, and an abstract representation built over these messages gives us the ability to direct an organism. For example, I can use my abstraction of "hot" and my abstraction of "moving my hand" to change my neurons from firing the "it is hot" signal to firing the "remove my hand" signal. That is the power of representation. But we are still not at consciousness. According to him, as we create more of these abstract representations, our brain forms a representation of our own self. Why? Because such a representation provides the greatest benefit in guiding the organism (ourselves). This gives us the ability to plan "remove my hand from the hot stove" without physically performing any of the things in that statement. Here you can see why Joscha likes Aristotle's ideas: this matches Aristotle's idea of the "soul guiding the living".

Great, but according to Joscha we are still not at consciousness. The brain creating a model of itself (the awareness of "I") to better guide the organism is first-order perception. Consciousness, however, is about noticing that you are perceiving; it is second-order perception. This is a very important but subtle point, and it is what helped me most to get a clearer picture of questions like "Is a mouse conscious?" The mouse most likely has some sense of self (the sense of self in its mind lets it respond efficiently to stimuli, like seeing a cat and running from it), but it most likely doesn't know that it is perceiving itself, and therefore it lacks self-awareness and is not conscious the way humans are. Humans can think "Wow, I feel afraid right now", but for a mouse this doesn't happen. It just feels afraid. No second order. The other thing about consciousness is that it always happens in the now ("nowness").

Hold on, we are almost there. We saw the importance of first-order perception (responding efficiently to stimuli in ways that help an organism), but what is the function of this second-order consciousness? According to Joscha, the purpose of consciousness is to create coherence in our mental representations. It helps us make better sense of reality. It is like the conductor of a mental orchestra, syncing the music from different instruments.

"So, to sum up, introspectively, what I mean by consciousness is this reflexive second-order perception, perceiving to perceiving that creates the bubble of nowness and functionally is an operator that increases or decreases coherence in your mind." - Joscha Bach

How does it all relate to AI?

"And I suspect if you want to build artificial minds that are adaptive, that have that kind of complexity, we will need to give them an idea of who they are and who they can be..." - Joscha Bach

In the rest of his talk, Joscha covers a wide range of topics

- Inside-out design (consciousness) vs. outside-in design (LLMs)

- Limitations & consciousness of LLMs

- How does consciousness help us learn?

- How does it emerge in the first place?

- How to test for consciousness and ethics

Though Joscha talks about a bunch of other things too (e.g. virtualism, LLMs as downstream of culture, the Book of Genesis), I stick to the ideas most directly related to AI. Here is a brief overview of why and how we cover these topics. We start by contrasting different paradigms of learning. Then we talk about the limitations and consciousness of models built on one of those paradigms, i.e. LLMs (they are extremely powerful computational systems but not really conscious). Next, we discuss what we miss out on if our models are not conscious (this is the important part, because at the end of the day we want to create models that fill in the weaknesses of current ones). To introduce consciousness into our models, we need to know how it emerges in the first place. Finally, we talk about how we can test for consciousness and its ethical considerations.

Inside-out vs. Outside-in

Joscha first talks about how our brains and our current AI models have very little in common, yet they can achieve similar results. According to him, our design of current AI algorithms is outside-in: we decide the architecture, what kind of data the models learn from, and what their objective should be (next-token prediction, L2 loss, etc.). We try our best to force the model to imitate how a human reasons, rather than actually learning how to reason. In contrast, the human brain learns in an inside-out manner: we constantly interact with a dynamic environment, decide which data is important, and decide what structures to impose to make our world more coherent. Why does he talk about this? His point is to show how these two paradigms for making sense of the world lead to two different types of reasoners (data-hungry and energy-intensive vs. data- and power-efficient).

Limitation & Abilities of LLMs

Okay, we have been hammering on LLMs for a while now. We have called them all sorts of names and accused them (rightfully so) of being data-inefficient and power-hungry. But suppose we set aside our concerns about electricity and data for a moment: does anything limit LLMs in a dramatic way? Is there something they just can't do?

LLMs can be viewed as emulating a virtual computer that understands and operates on natural language. If you find the right prompt, and you have an LLM with enough context length to interpret that prompt and enough fidelity to stay true to its content, then you can execute an arbitrary program. In this sense, there is no obvious limitation.

That is great, isn't it? But then why do we even need to discuss consciousness if LLMs have no obvious limitation and can, in principle, do anything? Well, a trillion monkeys typing randomly might eventually produce a great book, but that doesn't mean we should write books that way. In the future, we may view the current 100+ billion parameter models, trained over months, as a form of "random monkey typing" compared to the more advanced models we might develop.

The question Joscha covers next is: if LLMs are extremely strong computational systems, are the thoughts inside them (like chain-of-thought, tree-of-thought, etc.) conscious?

Are LLMs conscious? How can we test it?

What happens when LLMs are prompted to "simulate" a scenario, people, or emotional states? What makes an LLM simulation different from our own consciousness? For Joscha, several things. First, an LLM is not coupled to the outside world the way we are. Second, there is no dynamic update of the model: it doesn't learn anything from its "consciousness" (the opposite of us). Third, it is not intrinsically agentic: it doesn't simulate for its own sake but simulates whatever is prompted into it. There is a particular reason Joscha discusses this, and it's not just philosophical. He wants to make a larger point: we can't just use LLM outputs or their intermediate tokens (like in chain-of-thought) to decide whether they are conscious. Since these models have been trained on large amounts of human-written data (which contains a lot of self-aware wording), they can reproduce sequences of words that are unrelated to any underlying functional structure. So, all the new buzz about prompting GPT-4 or Claude in a particular way to achieve self-awareness is mostly hype.

How does consciousness help us learn?

We really love consciousness, huh? But why? Consciousness means being self-aware, which helps organisms navigate and adapt to unfamiliar situations, making it easier to learn constantly from new experiences. It also gives us "free will", which is important for creative self-transformation. But for me, the most compelling answer to "Why consciousness?" is that it's simply the more efficient way to develop intelligent organisms capable of complex learning and adaptation.

Consider the example of a dog that Joscha used. Imagine trying to create an organism that behaves like a dog from scratch, but without any mental states or consciousness. You would need billions of examples of "dog training data" and enormous computational power to hope to converge on a state that accurately mimics dog behavior. In a way, this would require programming every possible stimulus-response pair. LLMs are similar in some ways: they need hundreds of billions of parameters because, rather than learning generic first-principles knowledge, they learn highly specific knowledge.
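To make the "every possible stimulus-response pair" point a bit more concrete, here is a toy sketch. It is my own illustration, not something from Joscha's talk, and it assumes (purely for the sake of the example) that a stimulus can be described by a handful of binary features. A hard-coded table needs one entry per combination of features, so its size grows as 2^k, while a compact rule covers every combination with a fixed amount of logic. The rule `flee_if_threat` and its "feature 0 means threat" encoding are hypothetical.

```python
from itertools import product


def lookup_table_size(num_features: int) -> int:
    """Entries needed to hard-code one response for every
    combination of `num_features` binary stimulus features."""
    return 2 ** num_features


def flee_if_threat(stimulus: tuple) -> str:
    """Hypothetical compact rule: feature 0 means 'threat present'."""
    return "flee" if stimulus[0] == 1 else "explore"


def build_table(num_features: int, rule) -> dict:
    """Enumerate every possible stimulus explicitly and store the rule's
    response, mimicking the 'program every stimulus-response pair' approach."""
    return {stim: rule(stim) for stim in product((0, 1), repeat=num_features)}


table = build_table(10, flee_if_threat)
print(len(table))             # 1024 stored pairs for just 10 features
print(lookup_table_size(40))  # 1099511627776 pairs for 40 features
```

The rule stays the same size no matter how many features a stimulus has; only the explicit table explodes. This is a loose analogy for the efficiency argument, not a model of how brains or LLMs actually work.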

As Joscha puts it, it might be much easier to build a self-organizing mental architecture that can generalize if it starts out with an observer that observes itself observing and then creates coherence from that starting point. And while that might look a little bit complicated, it might be much easier than designing a complete mental architecture from scratch that then discovers the notion of being an observer at the end.

How does consciousness emerge?

One implication of the discussion above is that consciousness is a fundamental building block of cognitive processes rather than an emergent result at the end of them. According to Joscha, consciousness is a prerequisite that forms before anything else in the mind.

So how does it happen? Joscha presents a six-step hypothesis about how an infant's mind develops and how consciousness helps us create our mental universe. I summarize it briefly here. It starts with "primordial consciousness", which according to him is the first conscious spark. It's like the Big Bang: it sets everything into motion, and just as with the Big Bang, our mind before it is uninitialized. It then creates a world model and an idea sphere, one where we track physical objects and one where we track abstract ideas. Infants then learn to reason in a 2D plane (like crawling) and then move toward 3D reasoning (walking). Between the ages of 2.5 and 5, they begin to develop a personal self and identity, which then develops into the kind of consciousness we usually associate with ourselves.

Note that at the start we made consciousness sound like a special thing: it needed to have this "nowness" and second-order perception. But it seems we have dialed things down a bit. Consciousness now seems, as Joscha puts it, "ubiquitous". So, does that change my mind about the consciousness of certain organisms? Kind of. If you had asked me at the very start whether cats and dogs are conscious, I would have said no. But now cats and dogs seem mostly conscious to me, though the mouse from our earlier example is still not conscious for me. Joscha discusses this topic in more detail, talking about the consciousness of animals, insects, and even trees.

How do we test for consciousness and Ethical considerations?

At this point we know consciousness is a good thing for learning. So rather than hoping our model develops consciousness after we train it on trillions of tokens, we should instead create models where consciousness is either there from the start (I am not sure this is possible) or emerges early, so that we don't have to do the whole trillion-token thing.

So how do we do it? Joscha proposes building substrates similar to biological cells that are exposed to increasingly difficult sequence prediction tasks, such as predicting the next frame in a video stream or moving an organism in a simulated world. The goal is to look for operators that lead to self-organization under these conditions while still being able to learn, and to observe whether there is a phase transition in the capabilities of these systems. From what I understand, this might look like the following. In LLMs, you usually see such transitions as you increase model size. What if you had a different architecture with a smaller, constant size, where the only way for it to learn was through self-organization? Of course, it could all fail, and the architecture might not learn anything, but that is the challenge.

At the end, Joscha also talks about how this can help us with alignment and ethics in AI.

"And I suspect that we cannot have an AI ethics without understanding what consciousness is, because that's actually what we care about."

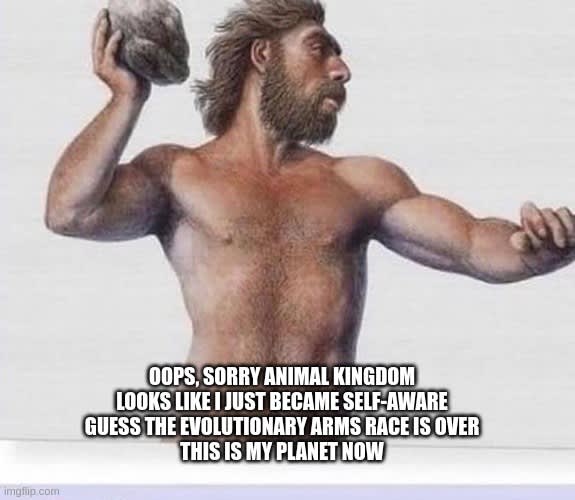

One final idea that Joscha returns to several times, and that I found really interesting, is that consciousness might be the winner of the evolutionary arms race. All these organisms fight to become the apex predator, developing claws, flight, and poison, and then humans come out of nowhere and one-shot them because they developed self-awareness first. This line of thinking, though, might point in a different direction: maybe consciousness is the result of millions of years of pre-training, but once we get to it, everything becomes easier.
