"Alignment at Large": Bending the Arc of History Towards Life-Affirming Futures

post by welfvh · 2024-12-03T21:17:56.466Z · LW · GW

What if we expanded our lens beyond AI alignment, to grapple with the full scope of what "alignment" really means? We're facing not just the challenge of aligning artificial intelligence, but the deeper question of how to align technological capitalism itself — with its unconscious optimization pressures, its coordination failures, its tendency toward extraction and nihilism — toward life-affirming possibilities.

Our world-system of technological capitalism is already a general, autopoietic, and largely autonomous superintelligence.1 The Great Fact would lead us to believe that this system is aligned-ish: It has substantially raised living standards and life expectancy over the last two centuries.2 Unfortunately, this system is also misaligned with human and planetary flourishing in critical ways. Caught in emergent market incentives, multi-polar traps, and arms races, it's optimizing narrow metrics at the cost of real value.

The global competition for resources drives economic players not just towards profit-maximization, but financial totalization of the playing field: There's a maximum incentive to turn as much of the world as possible into capital under “our” control. Currency enables infinite optionality to turn money into power: purchasing power, political power, military power, public opinion through PR, demand engineering, etc. The incentive gets further amplified with money-on-money dynamics, as compound interest enables exponential returns.

We could describe the objective function of technological capitalism like so: to convert as much of the world as possible — trees, whales, people's creativity, children's minds — into capital. Through its decentralized incentive system, it runs parallel processing across all humans and corporations to perform novelty search (figure out new ways of making money) and exploitation (optimally execute on these opportunities).

As Scott Alexander pointed out, these forces can be usefully deified as Moloch, the god of Game Theory, who gives worldly power in exchange for what you most value.3 Unhealthy competition in a system of exponential technological capitalism vectors towards financial totalization: The destruction of all values for the sake of profit and power. As players of zero-sum games, if we can externalize costs to the commons instead of internalizing them, game theory dictates that we must, because otherwise others will, and we lose competitive advantage.

These dynamics don't just drive markets - they shape cultural evolution itself. The totality of "how humans do things" evolves through a process of selection that tends to favor power over wisdom, competition over cooperation, extraction over regeneration. Like a gravitational force, it pulls cultural and technological development toward whatever maximizes competitive advantage, regardless of other values.

As Land observed, this creates a kind of 'teleoplexy' - a self-reinforcing cybernetic intensification for which human values and biological life itself become, at best, temporary obstacles.4 It's a ratchet of technological self-amplification that's stronger than politics, a blind optimization process that appears to have no natural boundaries.5

To navigate this landscape, we need better maps. In particular, we need frameworks that can help us understand how wisdom might become evolutionarily competitive - how life-affirming values could gain advantage in a landscape that naturally selects for pure power.

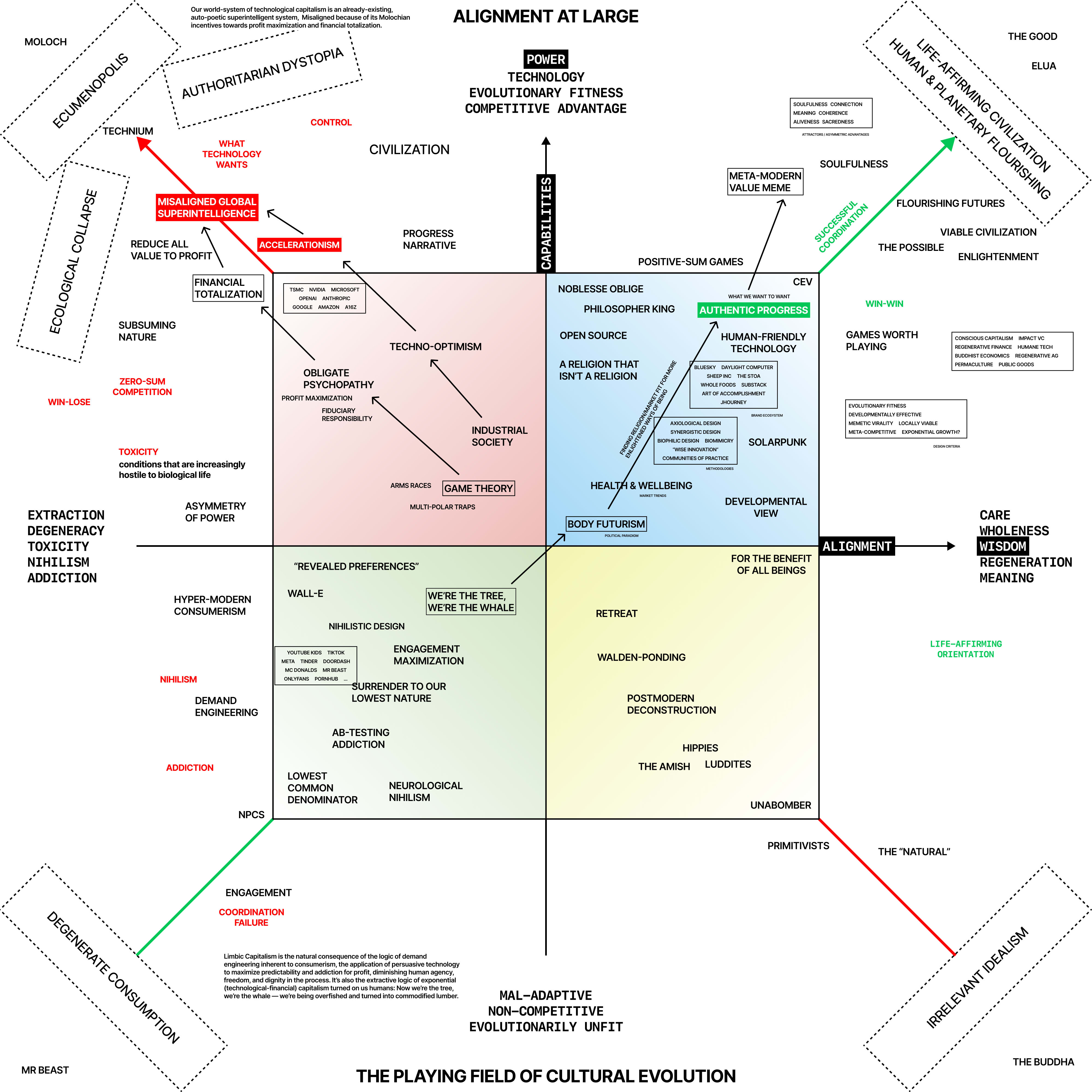

This understanding led me to map cultural evolution across two essential dimensions: power/intelligence and wisdom/care.

An early draft of the map:

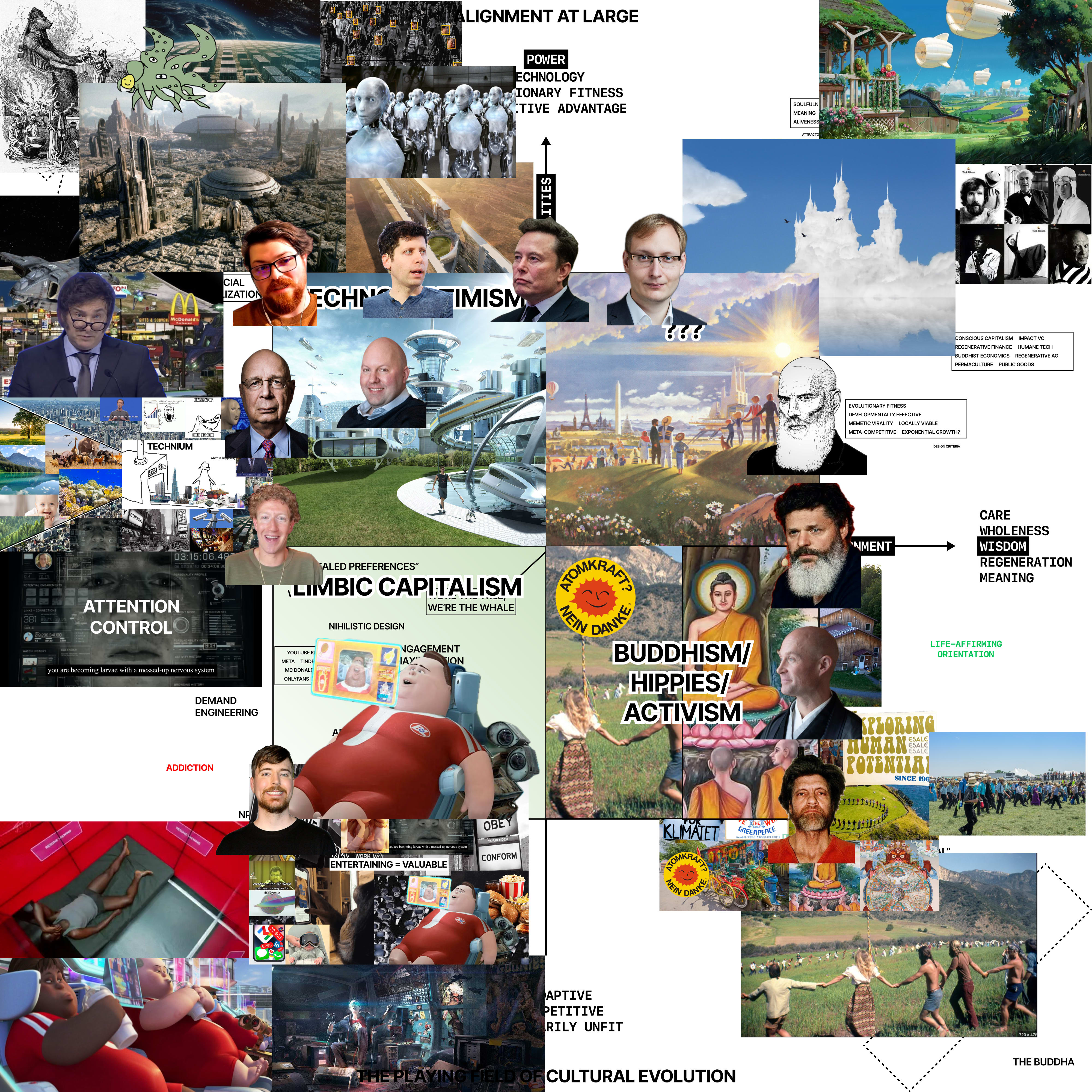

This is an evolved version of the Political Compass for Human Flourishing.

Techno-Optimism sits in the top left corner: high in power, low in wisdom. The consumer reality of Limbic Capitalism occupies the bottom left, where technology has won over human dignity through perfectly optimized addiction, creating developmentally stunted consumers whose capacity for agency and meaning has been eroded. The Buddhist/Hippie/Luddite-Complex lives in the bottom right corner: caring individuals with moral sensibilities who unfortunately tend to retreat from games of power, rendering their idealism irrelevant.

The top right quadrant is the space of possibilities we're looking for: Combining wisdom with the kind of power required for adequate evolutionary fitness in the context of cultural evolution that favors blind forces of power and competition.

It's in this context that we must evaluate dominant ideologies: Accelerationism is an existentially inept and morally bankrupt ideology that, upon realizing the life-denying tendencies of technological evolution, adopts the hyper-psychopathic stance of "evolution = good", even if that means the transfiguration of all life on earth into GPU substrate.

Techno-optimism is its naive uncle, which hasn’t yet gotten around to fully considering its own implications: effectively a slow accelerationism. Both resemble dogmatic religions, in no way equipped to address these critical alignment issues, and so an inadequate basis for navigating the most consequential period in human history.

A techno-"optimism" that's inarticulate about its own failure modes, and indefinite about how it will bring about human and planetary flourishing in the face of them, isn't optimism at all: it's ignorant cope, magical thinking, and plausible deniability to continue doing whatever drives ROI.

The main purpose of this work is to establish the top right quadrant as the only sane ideological space to orient towards, demonstrating that ALIGNMENT AT LARGE requires a deep orientation towards wisdom. Not left vs right; the deciding axis for our future is the emergent, unconscious, value-consuming impulse of technological capitalism against the collective imagination and will of humanity.

It's time to have the courage to have the existential crisis that can bring clarity: The system we've believed in all our lives turns out to be misaligned with the basis of all that is meaningful. Life is infinitely precious, and we currently do not have an ideology powerfully wise enough to be a trustworthy steward of life on earth.

What are we going to do about it? How can we help techno-optimism grow up?

What does it take for wisdom to win?

This project seeks to

- Formally develop the frame of alignment at large, make it lucid and increasingly meme-legible so it can enter the conversation

- Develop the map of cultural evolution as a way-finding tool

- Elucidate the inherent limitations of accelerationism and techno-optimism

- Identify the attractors, vectors, and dynamics of the top right quadrant

… so as to help humanity graduate from naive techno-optimism and advance The Cultural Frontier of making wisdom win.

Originally published on welf.substack.com

1 Daniel Schmachtenberger has articulated the argument for technological capitalism as a misaligned ASI on Liv Boeree’s Win-Win podcast: AI, Capitalism & Misaligned Incentives

3 Scott Alexander, "Meditations on Moloch" (2014) - foundational for understanding the game-theoretic and coordination-problem aspects

4 “What Nick Land calls a ‘teleoplexy’ for whom the human subject in its typical and traditional form is more or less irrelevant or, at worst, a temporary obstacle”

— The Missing Subject of Accelerationism, Simon O’Sullivan, referencing Nick Land’s Teleoplexy, Notes on Acceleration (2014) as included in #Accelerate: The Accelerationist Reader

5 Kevin Kelly’s “What Technology Wants” continues to be relevant reading
