AI4Science: The Hidden Power of Neural Networks in Scientific Discovery

post by Max Ma (max-ma) · 2025-03-14T21:18:33.941Z · LW · GW · 2 comments

AI4Science has the potential to surpass current frontier models (text, video/image, and sound) by several orders of magnitude. While some may arrive at similar conclusions through empirical evidence, we derive this insight from our "Deep Manifold" work and provide a theoretical foundation to support it. The reasoning is straightforward: for the first time in history, an AI model can integrate geometric information directly into its equations through model architecture.

Consider the 17 most famous equations in physics (see below): none of them carries inherent geometric information, which limits their ability to fully capture real-world complexities. Take Newton's second law of motion as an example: its textbook application to a falling object assumes a vacuum. In reality, air resistance, which depends strongly on an object's geometry, plays a crucial role. A steel ball and a piece of fur experience dramatically different resistances due to their shapes.

Traditional equations struggle to incorporate such effects, but deep learning provides a powerful way to integrate geometry through boundary conditions (loss values), as discussed in our paper (Deep Manifold Part 1: Anatomy of Neural Network Manifold, Section 4.2 on Convergence and Boundary Conditions). In an AI model, the impact of fur geometry and air resistance can be naturally accounted for. Of course, "naturally accounted for" does not mean "easily solved," but at the very least, AI introduces a promising new pathway for tackling these complex real-world phenomena.

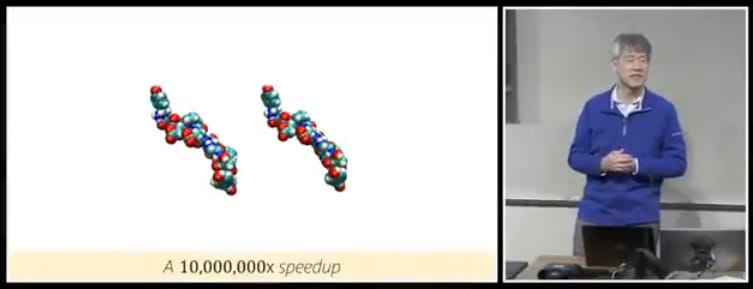
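To make the free-fall example above concrete, here is a minimal sketch that compares a fall in a vacuum with a fall under quadratic air drag. It uses the standard drag model, in which shape enters only through two lumped parameters (a drag coefficient and a cross-sectional area). The masses, coefficients, and areas for the "steel ball" and the "piece of fur" are illustrative assumptions, not measured values, and the function names are hypothetical.

```python
import math

G = 9.81        # gravitational acceleration, m/s^2
RHO_AIR = 1.2   # approximate air density near sea level, kg/m^3


def vacuum_fall_time(height):
    """Time to fall `height` metres from rest with no air resistance."""
    return math.sqrt(2.0 * height / G)


def fall_time(height, mass, drag_coeff, area, dt=1e-4):
    """Integrate m*dv/dt = m*g - 0.5*rho*Cd*A*v^2 with small Euler steps
    until the object has fallen `height` metres."""
    k = 0.5 * RHO_AIR * drag_coeff * area  # lumped geometry-dependent factor
    t = v = y = 0.0
    while y < height:
        a = G - (k / mass) * v * v  # net acceleration, downward positive
        v += a * dt
        y += v * dt
        t += dt
    return t


# Illustrative (assumed) parameters: a dense sphere and a light, draggy tuft.
STEEL_BALL = dict(mass=0.5, drag_coeff=0.47, area=0.002)
FUR_PIECE = dict(mass=0.01, drag_coeff=1.2, area=0.02)

if __name__ == "__main__":
    h = 10.0
    print("vacuum:    ", vacuum_fall_time(h))
    print("steel ball:", fall_time(h, **STEEL_BALL))
    print("fur piece: ", fall_time(h, **FUR_PIECE))
```

With these assumed values the steel ball lands almost exactly when the vacuum formula predicts, while the fur takes several times longer. For a shape as irregular as fur, the hard part is obtaining the drag coefficient and effective area in the first place, which is where the geometric information discussed in this post comes in.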

A powerful example showcasing the potential of neural networks with geometric information is Microsoft's Graph Learning Neural Transformer for molecular dynamics simulation. This model accelerates computations by a staggering factor of 10 million compared with traditional numerical simulations. While researchers struggle to explain such an unprecedented gain in computational efficiency, Deep Manifold offers a clear explanation: the incorporation of geometric (graph) information as a boundary condition, which guides the direction of convergence and significantly accelerates it.
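As a rough illustration of what "geometric (graph) information" can look like in code, the sketch below builds a graph from atomic coordinates using a distance cutoff and runs one simple message-passing round over it. This is a generic toy, not Microsoft's model or its architecture; the cutoff, the exponential distance weighting, the water-like coordinates, and all function names are assumptions chosen for brevity.

```python
import math


def build_graph(positions, cutoff):
    """Return {i: [(j, distance), ...]} linking atoms closer than `cutoff`."""
    n = len(positions)
    neighbours = {i: [] for i in range(n)}
    for i in range(n):
        for j in range(i + 1, n):
            d = math.dist(positions[i], positions[j])
            if d < cutoff:
                neighbours[i].append((j, d))
                neighbours[j].append((i, d))
    return neighbours


def message_passing_step(features, neighbours):
    """One round: each atom adds a distance-weighted average of its
    neighbours' scalar features to its own feature."""
    updated = []
    for i, h in enumerate(features):
        weights = [math.exp(-d) for _, d in neighbours[i]]
        total = sum(weights)
        if total == 0.0:  # isolated atom: feature unchanged
            updated.append(h)
            continue
        msg = sum(w * features[j]
                  for w, (j, _) in zip(weights, neighbours[i])) / total
        updated.append(h + msg)
    return updated


def rotate_z(positions, angle):
    """Rotate all coordinates about the z axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in positions]


# Water-like toy geometry (angstroms, approximate): O, H, H.
POSITIONS = [(0.0, 0.0, 0.0), (0.96, 0.0, 0.0), (-0.24, 0.93, 0.0)]
FEATURES = [8.0, 1.0, 1.0]  # atomic numbers used as initial features
```

Because the graph is built from interatomic distances only, rotating the whole molecule leaves the output unchanged: `message_passing_step(FEATURES, build_graph(rotate_z(POSITIONS, 0.7), 1.2))` matches the unrotated result up to floating-point error. The geometry is carried by the structure of the model's input rather than by the equations the model evaluates.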

Neural networks possess two hidden powers in scientific discovery:

- Integration of Geometric Information – Unlike most traditional scientific formulations, neural networks can naturally incorporate geometric information, making them more effective in solving real-world problems.

- Geometry as a Boundary Condition for Faster Convergence – By leveraging geometric information as boundary conditions, neural networks can accelerate the convergence process, significantly enhancing computational efficiency.
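The idea of a boundary condition appearing as a loss value can be sketched in the style of physics-informed training. This is a common generic formulation and not necessarily the one used in the Deep Manifold paper. The toy problem (a falling object with linear drag, `dv/dt = G - C*v`, starting from rest), the constant `C`, and the function names are all assumptions made for illustration.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2
C = 2.0   # linear drag rate, 1/s (illustrative value)


def ode_residual(v, t, h=1e-5):
    """Residual of dv/dt = G - C*v at time t, via a central difference."""
    dv_dt = (v(t + h) - v(t - h)) / (2.0 * h)
    return dv_dt - (G - C * v(t))


def total_loss(v, times, v0=0.0, boundary_weight=1.0):
    """Mean squared ODE residual plus a penalty for violating v(0) = v0."""
    interior = sum(ode_residual(v, t) ** 2 for t in times) / len(times)
    boundary = (v(0.0) - v0) ** 2
    return interior + boundary_weight * boundary


def exact(t):
    """Solution that satisfies both the ODE and v(0) = 0."""
    return (G / C) * (1.0 - math.exp(-C * t))


def wrong_boundary(t):
    """Constant terminal velocity: satisfies the ODE but not v(0) = 0."""
    return G / C


TIMES = [0.1 * i for i in range(1, 21)]  # collocation points in (0, 2]
```

Here `total_loss(wrong_boundary, TIMES, boundary_weight=0.0)` is essentially zero, because the equation alone admits a whole family of solutions. Only the boundary term separates `exact` from the rest. In that sense the boundary condition narrows down what an optimizer has to search over, which is the role the second point above assigns to geometry.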

2 comments

comment by Raemon · 2025-03-14T22:11:29.528Z · LW(p) · GW(p)

Minor note, but I found the opening section hard to read. See: "Abstracts should be either Actually Short™, or broken into paragraphs"

Replies from: max-ma

comment by Max Ma (max-ma) · 2025-03-16T23:16:00.289Z · LW(p) · GW(p)

Thanks... will look into