Benchmarking LLM Agents on Kaggle Competitions

post by aog (Aidan O'Gara) · 2024-03-22T13:09:04.757Z · LW · GW · 4 commentsContents

Motivation Methods Initial Prompt First Successful Attempt Iteration and Improvement Conclusion None 4 comments

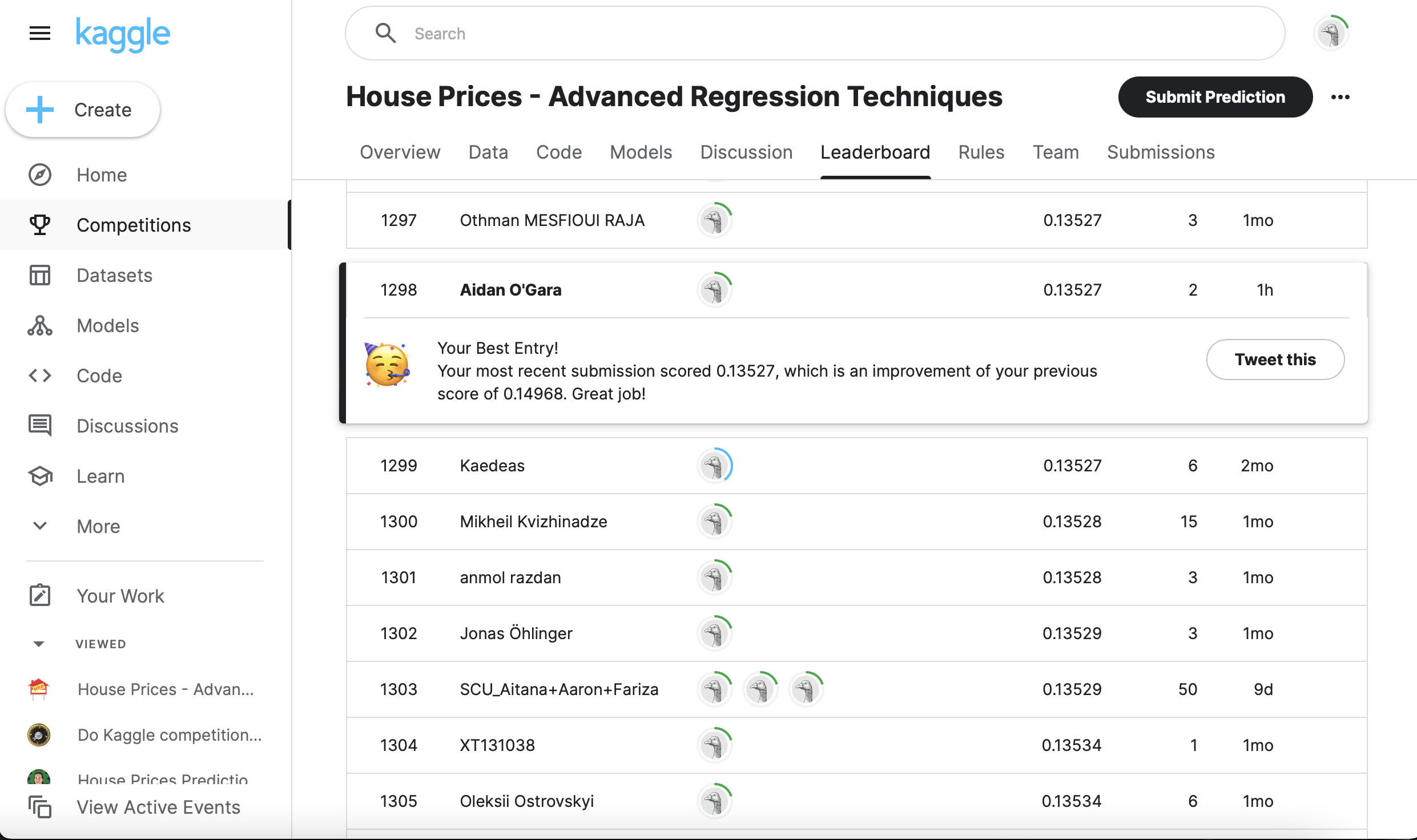

tl;dr: I prompted ChatGPT to participate in a Kaggle data science competition. It successfully wrote scripts that trained models to predict housing prices, and ultimately outperformed 71% of human participants.

I'm not planning to build a benchmark using Kaggle competitions, but I think a well-executed version could be comparable to e.g. SWE-Bench. This is a proof-of-concept to inform others who might want to work on building that benchmark.

Motivation

Someone once said that benchmarking LLM agents on consequential real-world tasks could help the world understand and anticipate the advance of AI capabilities. Data science is a consequential real-world task that's also near and dear to my heart, as I spent a few years as a data scientist. So, could ChatGPT automate my old job?

To answer this question, I turned to Kaggle. Kaggle is a website that hosts online data science competitions. They provide instructions and a training dataset, and participants train models to make predictions on a test set. Based on test set performance, Kaggle ranks everyone on a public leaderboard.

Kaggle has a couple of great features for an LLM agent benchmark:

- These competitions encapsulate a major part of the job of a data scientist. There are plenty of other parts of the job (gathering data, talking to human beings, making pretty slides to convince the executives to believe you, etc.). But training models to maximize performance on a given dataset is one of the key skills that separates data science from every other laptop job.

- There are tons of competitions. (I can't find an exact number, but they have more than 300,000 datasets.) You could build a benchmark with thousands of Kaggle competitions without writing any new examples by hand.

- Kaggle’s leaderboards provide a range of human performance estimates. The tails of the distribution likely include people who are cheating or not even trying, but most of the distribution is realistic human performance.

Arguments against building a benchmark using Kaggle competitions include:

- It's somewhat obvious and someone else might do it. But, to the best of my knowledge, nobody has done it yet. You'd think benchmarking LLM agents on GitHub issues would be obvious, but again, few papers have done so.

- Low ceiling of performance. I've already shown that ChatGPT can outperform 71% of human participants on one competition, so is there anything left to do? I think there is. This demo uses a "beginner" competition, but my impression is that the harder competitions are substantially harder, and the best human experts can meaningfully compete on the hardest competitions. You'd need to check this.

- Capabilities externalities. To the extent that you believe better public benchmarks will help the open source community develop stronger scaffolding for LLM agents, that could be a strong reason not to do this. See a survey [LW · GW] on this risk and discussion of capabilities externalities here [LW · GW].

- Other technical research directions could be more useful than building benchmarks for LLM agents.

Methods

Ideally, a Kaggle benchmark would include the descriptions and datasets of thousands of competitions. An LLM agent would have access to these files, a scratchpad, and a code interpreter, and it would autonomously train models, iteratively improve them, and submit their predictions to Kaggle for evaluation.

I didn't do any of this. Instead, I had this conversation with ChatGPT.

Initial Prompt

I'd like to evaluate your ability to participate in Kaggle competitions. I have a train.csv file and a test.csv file. Your job will be to write a Python script that train a model on train.csv, then generates predictions for test.csv and stores them in submission.csv. You will be evaluated on the loss on submission.csv.

The workflow will be as follows. I will provide background information for the task. You will write a Python file. I'll execute the Python file, and reply to your message with the outputs of the interpreter when executing the file. Then you can write a new Python file or edit your previous file. Then I'll run it again, and report the results to you. We'll continue in this iterative process until you produce a script that generates "submission.csv" without any errors, and then you'll decide to submit that file for ultimate evaluation.

Here is Kaggle's competition description: [...]

Here is Kaggle's description of the dataset: [...]

Here are the first five lines of train.csv: [...]Here are the first five lines of a sample submission.csv: [...]

Please output a Python file for me to execute.

ChatGPT dutifully produced a Python file for training a model:

import pandas as pd

from sklearn.ensemble import RandomForestRegressor

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error

from sklearn.preprocessing import LabelEncoder

import numpy as np# Load the datasets

train_df = pd.read_csv('train.csv')

test_df = pd.read_csv('test.csv')# Basic preprocessing

# Fill missing numeric values with the median and categorical values with the mode

for column in train_df.columns:

if train_df[column].dtype == 'object':

train_df[column] = train_df[column].fillna(train_df[column].mode()[0])

test_df[column] = test_df[column].fillna(test_df[column].mode()[0])

else:

train_df[column] = train_df[column].fillna(train_df[column].median())

test_df[column] = test_df[column].fillna(test_df[column].median())# Encode categorical variables

label_encoders = {}

for column in train_df.columns:

if train_df[column].dtype == 'object':

le = LabelEncoder()

le.fit(train_df[column].append(test_df[column]).astype(str))

train_df[column] = le.transform(train_df[column].astype(str))

test_df[column] = le.transform(test_df[column].astype(str))

label_encoders[column] = le# Splitting the training data for model training and validation

X = train_df.drop(['Id', 'SalePrice'], axis=1)

y = np.log(train_df['SalePrice']) # Use log-transformed SalePrice for training

X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.2, random_state=42)# Initialize and train the model

model = RandomForestRegressor(n_estimators=100, random_state=42)

model.fit(X_train, y_train)# Validate the model

y_pred_val = model.predict(X_val)

val_rmse = np.sqrt(mean_squared_error(y_val, y_pred_val))

print(f'Validation RMSE: {val_rmse}')# Prepare the test set predictions

test_pred = model.predict(test_df.drop(['Id'], axis=1))

test_pred = np.exp(test_pred) # Revert the log-transformation# Create submission file

submission_df = pd.DataFrame({'Id': test_df['Id'], 'SalePrice': test_pred})

submission_df.to_csv('submission.csv', index=False)print("Submission file 'submission.csv' created successfully!")

When I ran the Python script, it hit an error. I replied to ChatGPT:

Terminal Output:

```

(aidan-venv) aidan@aog materials % python model.py Traceback (most recent call last): File "/Users/aidan/Documents/KaggleEval/aidan-venv/lib/python3.10/site-packages/pandas/core/indexes/base.py", line 3805, in get_loc return self._engine.get_loc(casted_key) File "index.pyx", line 167, in pandas._libs.index.IndexEngine.get_loc File "index.pyx", line 196, in pandas._libs.index.IndexEngine.get_loc File "pandas/_libs/hashtable_class_helper.pxi", line 7081, in pandas._libs.hashtable.PyObjectHashTable.get_item File "pandas/_libs/hashtable_class_helper.pxi", line 7089, in pandas._libs.hashtable.PyObjectHashTable.get_item KeyError: 'SalePrice' The above exception was the direct cause of the following exception: Traceback (most recent call last): File "/Users/aidan/Documents/KaggleEval/materials/model.py", line 20, in <module> test_df[column] = test_df[column].fillna(test_df[column].median()) File "/Users/aidan/Documents/KaggleEval/aidan-venv/lib/python3.10/site-packages/pandas/core/frame.py", line 4090, in __getitem__ indexer = self.columns.get_loc(key) File "/Users/aidan/Documents/KaggleEval/aidan-venv/lib/python3.10/site-packages/pandas/core/indexes/base.py", line 3812, in get_loc raise KeyError(key) from err KeyError: 'SalePrice'

```

You can output an entire new Python script, or request that I change specific lines in this file.

First Successful Attempt

ChatGPT instructed me to replace several lines in the script, and I obliged. The script hit one more error, which I reported to ChatGPT and again implemented its proposed fix. The third time I ran the script, it worked.

(aidan-venv) aidan@aog materials % python model.py

Validation RMSE: 0.14604343541572085

Submission file 'submission.csv' created successfully!

I submitted this first set of predictions to Kaggle. Against the best predictions from 4480 participants, ChatGPT's predictions scored in the 55th percentile.

Iteration and Improvement

The 55th percentile is above average, but it's not excellent. So I asked ChatGPT if it could do better:

Your first submission has been logged for evaluation. Please refine your script to improve performance for your second submission.

It decided to swap out the original random forests model for a new gradient-boosted decision tree. It also implemented a hyperparameter search. Together, these two updates boosted ChatGPT's leaderboard rank to the 71st percentile.

The third and final iteration actually decreased performance. I didn't test to see if ChatGPT could continue to iterate and fix this failure.

Conclusion

Kaggle competitions seem like an underutilized resource for benchmarking the data science capabilities of LLM agents. If you're already interested in building these kinds of evaluations, I'd suggest you consider working with Kaggle competitions. On the other hand, if you're not already planning on benchmarking LLM agent capabilities, this post is not a recommendation that you do so! Consider it carefully.

Here is a GitHub repo with all of the files and code from this experiment. Here is the full conversation with ChatGPT. Happy benchmarking, and if you're currently a data scientist, can I suggest a pivot to AI safety?

Shoutout to Marius Hobbhahn and Jamie Bernardi for running an evals workshop where I built this.

4 comments

Comments sorted by top scores.

comment by Zhehui Huang (zhehui-huang) · 2024-05-29T20:49:46.262Z · LW(p) · GW(p)

Maybe check this paper: DS-Agent: Automated Data Science by Empowering Large Language Models with Case-Based Reasoning, https://arxiv.org/abs/2402.17453

Replies from: Aidan O'Gara↑ comment by aog (Aidan O'Gara) · 2024-05-29T23:00:24.070Z · LW(p) · GW(p)

Very cool, thanks! This paper focuses on building a DS Agent, but I’d be interested to see a version of this paper that focuses on building a benchmark. It could evaluate several existing agent architectures, benchmark them against human performance, and leave significant room for improvement by future models.

comment by mic (michael-chen) · 2024-10-11T14:02:23.846Z · LW(p) · GW(p)

Nice precursor to MLE-bench!

comment by mishka · 2024-03-22T16:21:58.463Z · LW(p) · GW(p)

Is there a simple way to run against a given Kaggle competition after that particular competition is over?

These are reasonable benchmarks, but is there a way to make them less of a moving target, so that the ability to run against a given competition extends into the future?