Rethinking AI Safety Approach in the Era of Open-Source AI

post by Weibing Wang (weibing-wang) · 2025-02-11T14:01:39.167Z


Open-Source AI Undermines Traditional AI Safety Approach

In recent years, the mainstream approach to AI safety has been "AI alignment + access control." Put simply, a small number of regulated organizations develop the most advanced AI systems, ensure that these systems' goals are aligned with human values, and then strictly control access to them to prevent malicious actors from modifying, stealing, or abusing them. OpenAI and Anthropic are prime examples of companies applying this approach.

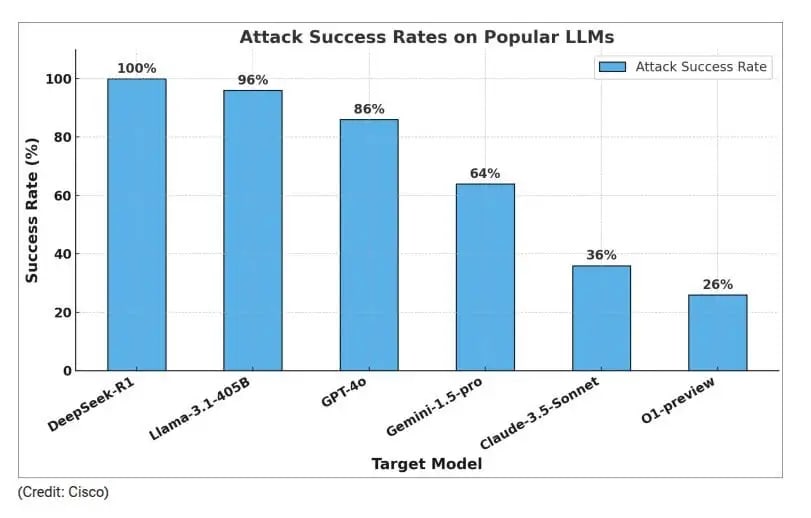

However, this closed-source safety approach now faces significant challenges. The open-sourcing of models like DeepSeek-R1 has broken the monopoly of closed-source systems on advanced AI. Open-source models can be freely downloaded, used, and modified by anyone, including malicious actors. Even if an open-source model is initially aligned, it can easily be turned into a harmful one. Research has shown that fine-tuning on just a few dozen samples can remove a model's safety constraints, enabling it to follow arbitrary instructions. This means that we are likely to face increased risks of AI misuse, such as using open-source models for hacking, online fraud, or even the creation of biological weapons.

Open-source models also pose a greater risk of loss of control. Developers with weak safety awareness may unintentionally introduce significant vulnerabilities when modifying open-source models. For example, they might inadvertently enable the model to develop self-awareness or autonomous goals, or allow it to self-iterate without human supervision. Such behaviors could make AI systems even harder to control.

The Unstoppable Momentum of Open-Source AI

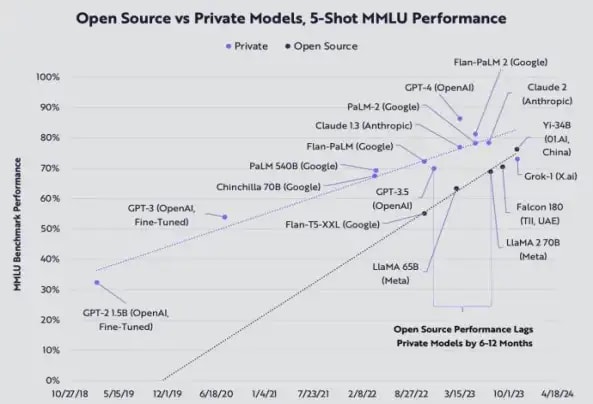

While open-sourcing models introduces new safety risks, the trend toward open source is unstoppable. In recent years, open-source models have progressed faster than closed-source models (as shown in the chart above). The performance of DeepSeek-R1 is already approaching that of the best publicly available closed-source models. It is foreseeable that open-source models will not only become comparable to closed-source models but may even surpass them.

From the public's perspective, open-source AI is also an inevitable trend. In China, the US, and other countries alike, DeepSeek has been widely welcomed. OpenAI recently launched the low-cost o3-mini model and made its search functionality freely available, yet many comments in the discussion still expressed gratitude to DeepSeek for open-sourcing its model. This clearly shows that open source has become an unstoppable trend in the AI field.

Reevaluating AI Safety Strategies for Open-Source AI

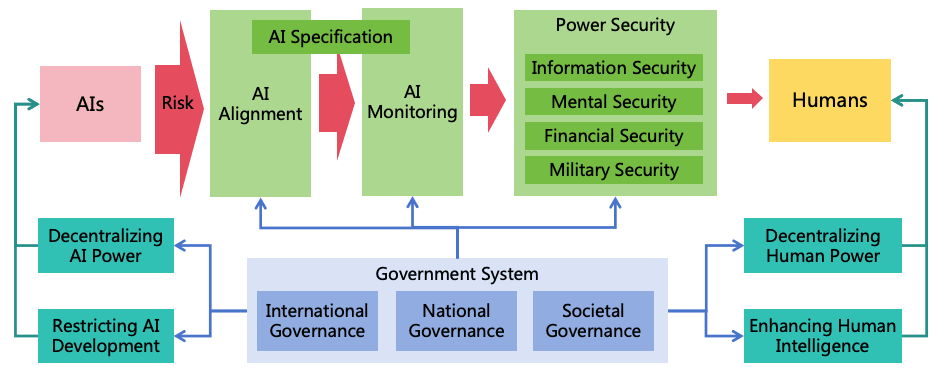

Since the trend toward open-source AI is unstoppable, we must reevaluate AI safety strategies in light of it. The paper A Comprehensive Solution for the Safety and Controllability of Artificial Superintelligence proposed a series of safety strategies; below, we reevaluate each of them:

AI Alignment: Open-source AI poses challenges to AI alignment. The safeguards of open-source models can easily be removed through fine-tuning, undermining alignment efforts. However, alignment work remains essential. After all, only a minority of users would intentionally remove safeguards through fine-tuning, so proper alignment helps ensure that the open-source models used by the majority are safe.

AI Monitoring: Open-source AI makes direct monitoring of AI systems more difficult. Malicious users can deploy open-source models independently without integrating monitoring systems, creating unmonitored AI systems capable of performing any task. However, societal-level monitoring could benefit from open-source AI. For instance, law enforcement agencies could use open-source AI to monitor AI-related illegal activities, such as using AI to generate fake videos that spread misinformation online, or to create computer viruses for distribution on the internet.

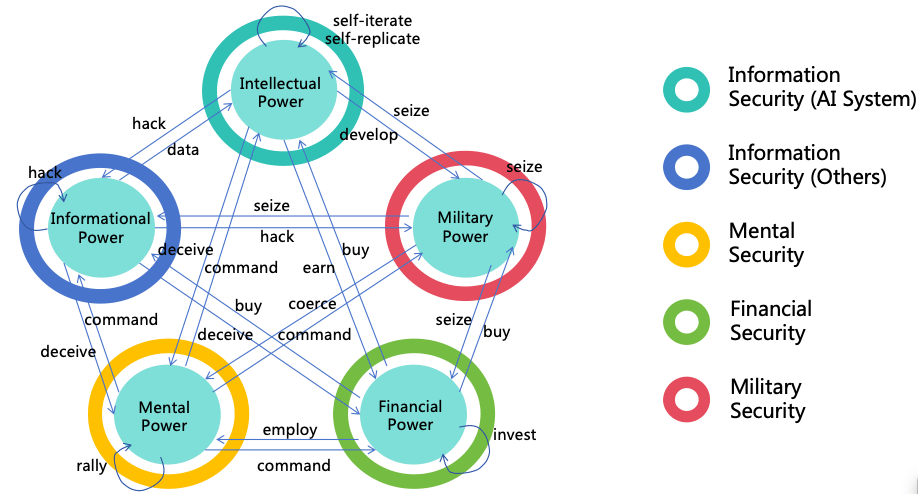

Power Security: Open-source AI is advantageous for power security (here, "power" means the abilities or authorities that help in achieving goals, including intellectual, informational, mental, financial, and military power). For example, open-source AI can be used to defend against hacking attempts, enhancing the security of information systems. Individuals can use it to identify misinformation, improving mental security. Businesses can use it to audit financial operations, enhancing financial security. Nations can use it to build defense capabilities, strengthening military security. While closed-source AI can also fulfill these functions, it is controlled by private companies. Relying on closed-source AI for critical security tasks could create dependency on these companies, undermining the fundamental guarantee of security.

Decentralizing AI Power: Open-source AI promotes the decentralization of AI power by narrowing the capability gap between different AI developers. Additionally, open-source AI can be deployed independently by various users, enhancing the independence of AI instances.

Decentralizing Human Power: Open-source AI facilitates the decentralization of human power by preventing AI technology from being monopolized and centrally managed by a small group of individuals, which would otherwise grant them disproportionate power.

Restricting AI Development: Open-source AI generally hinders efforts to restrict AI development, since open sharing accelerates algorithmic iteration and the development of AI applications. From another perspective, however, open-source AI could disrupt the business models of leading AI companies, potentially reducing their funding and limiting their ability to develop more advanced AI. Additionally, open source encourages organizations to deploy AI locally to process private data rather than relying on the APIs of leading AI companies. This could deprive those companies of high-quality private data, further limiting the development of their AI capabilities.

Enhancing Human Intelligence: This strategy is largely unaffected by open-source AI.

Conclusion: As open-source AI continues to grow, Power Security, Decentralizing AI Power, and Decentralizing Human Power will become more effective security measures.

Open-Source AI Safety Approach

From a higher-level perspective, the source of AI safety risks is not simply the "conflict between AIs and humans," but rather the "goal/interest conflicts between intelligent entities." An intelligent entity could be a human or an AI. The scenario of "an evil AI attempting to destroy humanity" is just one extreme case among countless possibilities. A more common scenario might involve conflicts of interest between one group of humans + AIs and another group of humans + AIs, potentially leading to war. Even if humanity develops ideal AI alignment techniques, these would only ensure that AI aligns better with the goals of its human developers. However, conflicts between different human developers' goals would still persist, and wars could still occur.

Wars among humans have existed throughout history, but the change brought by AI is that it could provide one party with overwhelming power, thereby completely disrupting the balance of the system and causing catastrophic consequences for the weaker parties.

From this perspective, the closed-source AI safety approach taken by organizations like OpenAI and Anthropic is fundamentally wrong. If all AI systems in the world had to rely on the APIs of closed-source AI companies, those companies would hold the greatest concentration of power in the world. The humans or AI agents responsible for development within these companies would wield immense power, leaving the fate of humanity in the hands of a very small number of intelligent entities. This would make the system extremely fragile.

Therefore, the only way to achieve systemic safety is through an AI safety approach based on open-source AI:

1. Decentralizing AI and Human Powers

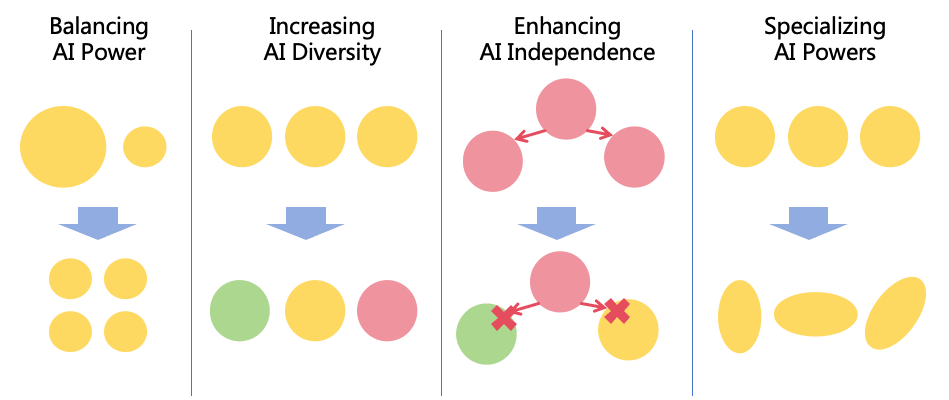

Use open-source AI to distribute power broadly across countries, organizations, and individuals, achieving a balance of power between AI and AI, AI and humans, and humans and humans. Specific strategies include balancing power, increasing diversity, enhancing independence, and specializing powers. For more details, see Decentralizing AI Power and Decentralizing the Power of AI Organizations.

2. Establishing a Rule-of-Law Society for AI

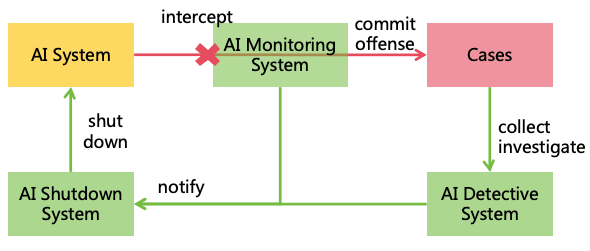

Formulate AI laws and rules, and use open-source AI to implement systems such as an AI Detective System and an AI Shutdown System to promptly detect, investigate, and penalize unlawful AI in society.

3. Strengthening Power Security

Use open-source AI to enhance security in areas such as Information Security, Mental Security, Financial Security, and Military Security. This would protect information systems (including AI systems) as well as human minds, property, and lives.

Mitigating Safety Risks of Open-Source AI

While open-source AI has certain advantages for safety, we cannot overlook the risks it poses. Measures must be taken to mitigate these risks, such as:

1. Strengthening Safety Management for Open-Source AI

Improve the review process of open-source platforms, conduct safety testing and risk evaluation for open-source AI, and reject the release of high-risk AI (e.g., AI capable of large-scale cyberattacks or designing biological weapons).

Establish a risk monitoring system for open-source AI. If high-risk AI is detected spreading on the internet, notify regulatory authorities to enforce a global ban (requiring international cooperation).

Implement a developer accountability system to hold developers responsible for uploading high-risk AI that causes actual harm.

2. Increasing Diversity in Open-Source AI

If everyone uses the same open-source AI model, systemic risks arise. If the model has security vulnerabilities exploited by hackers, all systems using it could be compromised.

Therefore, we should encourage more organizations and researchers to train and open-source unique AI models, providing users with a wider range of options.
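To make the monoculture argument concrete, here is a minimal sketch under a deliberately simple assumption: a single exploit compromises every system running the targeted model and no systems running other models. The model names and deployment shares below are hypothetical. Under that assumption, the worst-case share of compromised systems equals the share held by the most widely used model.

```python
from collections import Counter


def max_exposure(deployments):
    """Worst-case fraction of systems compromised by one exploit.

    Assumption (illustrative only): an exploit against a model compromises
    every deployment of that model and nothing else, so the attacker's best
    target is the most widely deployed model.
    """
    counts = Counter(deployments)
    return max(counts.values()) / len(deployments)


# Hypothetical deployment mixes across 100 systems.
monoculture = ["model_a"] * 100
diverse = ["model_a"] * 40 + ["model_b"] * 30 + ["model_c"] * 30

print(max_exposure(monoculture))  # 1.0
print(max_exposure(diverse))      # 0.4
```

In practice, vulnerabilities can be shared across models (for example, through common training data or architectures), so real diversity gains would likely be smaller than this idealized calculation suggests.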

3. Enhancing Regulation of Computational Resources

Open-source AI also requires computational resources to operate. The more computational resources available, the more instances can run (or run faster), forming stronger collective intelligence (or high-speed intelligence).

Therefore, governments should track large-scale use of computational resources (including self-built data centers, cloud server rentals, and P2P computation borrowing) to ensure that such use is legal, compliant, and secure, and to prevent overly powerful intelligence from being misused.

4. Strengthening Defensive Measures

Given the existence of open-source AI, we cannot guarantee that harmful AI will never emerge. Instead, we must ensure the presence of more beneficial AI to protect humanity from harmful AI.

From this perspective, open-source AI can be seen as a "vaccine" for human society. Since open-source AI may be used maliciously, society is forced to strengthen its defenses (developing "antibodies"), thereby improving overall security. Otherwise, if only closed-source, aligned AI existed, people might become complacent. If such closed-source AI later failed alignment, was maliciously altered, or was stolen by bad actors, humanity could face catastrophic consequences.

Biosafety with Open-Source AI

Among all safety risks of open-source AI, biosafety is the most concerning. This is because biosafety presents a scenario where the cost asymmetry between attackers and defenders is extreme: releasing a batch of viruses is far cheaper than vaccinating the entire human population. Even if defenders have more and stronger AIs than attackers, they may still struggle to fend off attacks.

Fortunately, biosafety is a highly specialized field, and removing related risk capabilities from open-source AI models would not significantly impact their general capabilities or most application scenarios. This makes it a feasible risk mitigation strategy.

Another potential approach is to use AI to establish a global biological monitoring network. Regular environmental sampling could be analyzed by AI to identify new species (technically feasible, as evidenced by scientists using AI tools to discover 161,979 RNA viruses in October 2024). AI could simulate and analyze the potential harm these species might pose to humans. If high-risk organisms are identified, immediate containment measures could be taken, and AI-designed predator organisms could be released into the environment to eliminate them. Since biological reproduction and spread take time, timely detection and intervention could prevent large-scale biological disasters. This is just a rudimentary idea from an ordinary intelligence like me. Future ASI will undoubtedly devise better defense methods.
