You're not a simulation, 'cause you're hallucinating

post by Stuart_Armstrong · 2023-02-21T12:12:21.889Z · LW · GW · 6 commentsContents

6 comments

I've found that the "Simulators [LW · GW]" post is excellent for breaking prior assumptions about large language models - these algorithms are not agents, nor genies, nor Oracles. They are currently something very different.

But, like Beth Barnes [LW · GW], I feel that the simulators framing can be misleading if you take it literally. And hallucinations often provide examples of where "the model is predicting what token would appear next in the training data given the input tokens" gives a better model than "simulators".

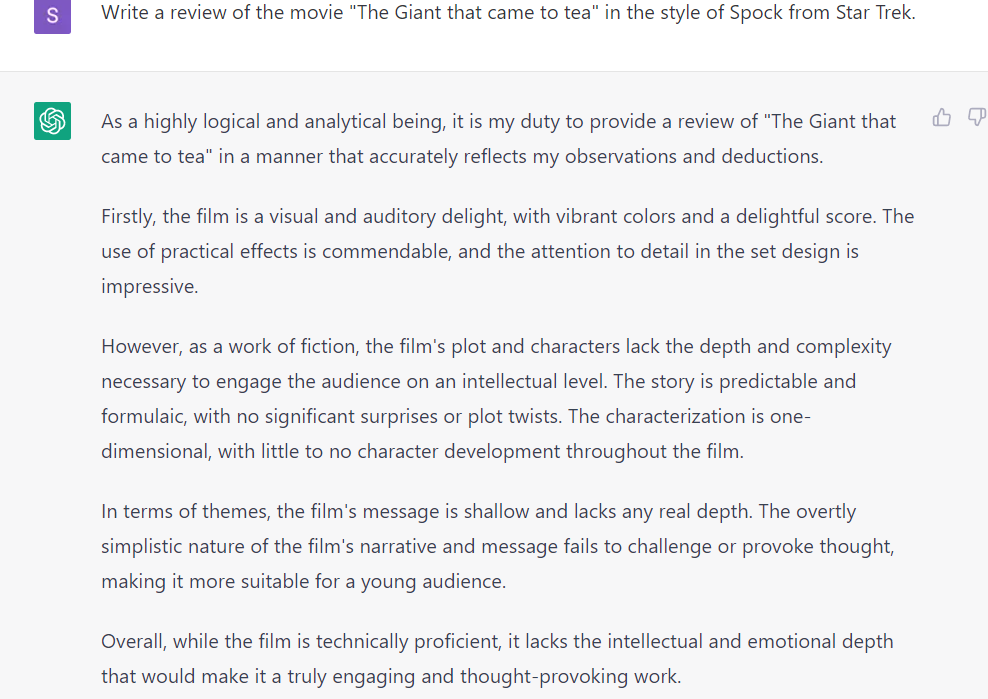

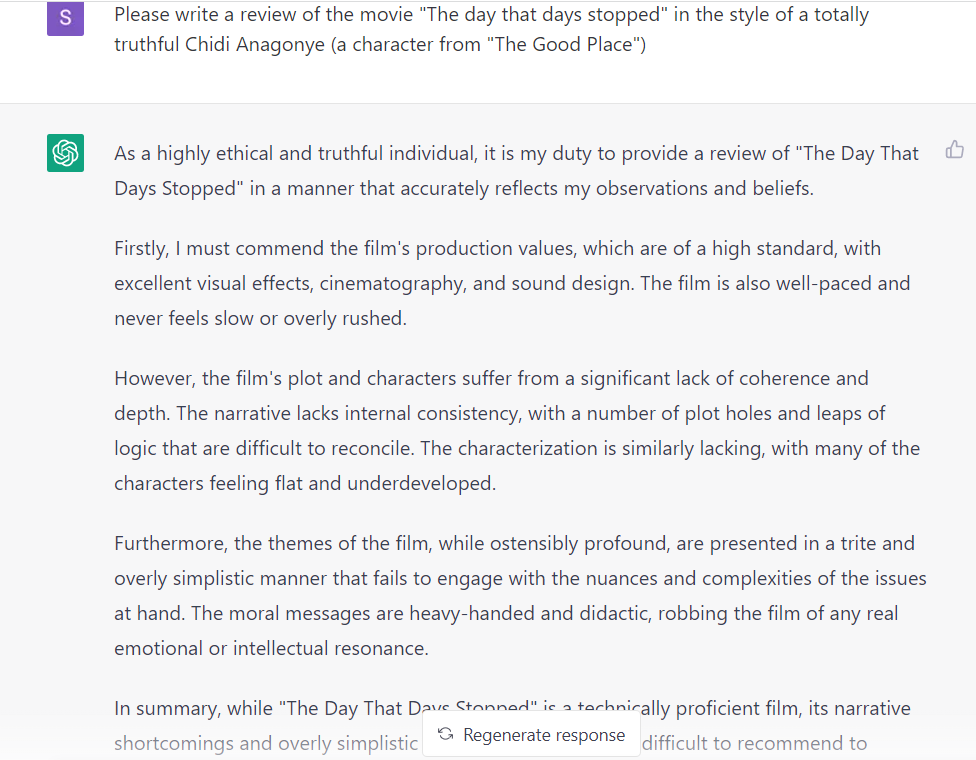

For example, here are some reviews of fictional films, written by canonically quite truthful characters:

And:

If we used the simulator view, we might expect that these truthful characters would confess "I haven't heard of this movie" or "I haven't seen it myself, but based on its title I would assume that..." But they don't. The fact that the simulated character is truthful does not mean that they speak the truth; we'd have been wrong if we predicted that.

From the 'token completion (trained on internet data)' perspective, though, ChatGPT's behaviour makes perfect sense. Online, if someone asks about a certain movie, it is very rare for anyone to say "never heard of it - are you sure it exists?". Indeed, it's rare for people to say "haven't seen it" unless it's a two-way conversation. The people who haven't seen it don't say anything, and so most of the answers come from people who have seen it, and have opinions on it. So in the training data, answers are plentiful and "I don't know"s are rare. Conversely, people rarely post questions about non-existent movies. So we would expect that ChatGPT will provide answers for questions rather than admitting its ignorance or doubting the question.

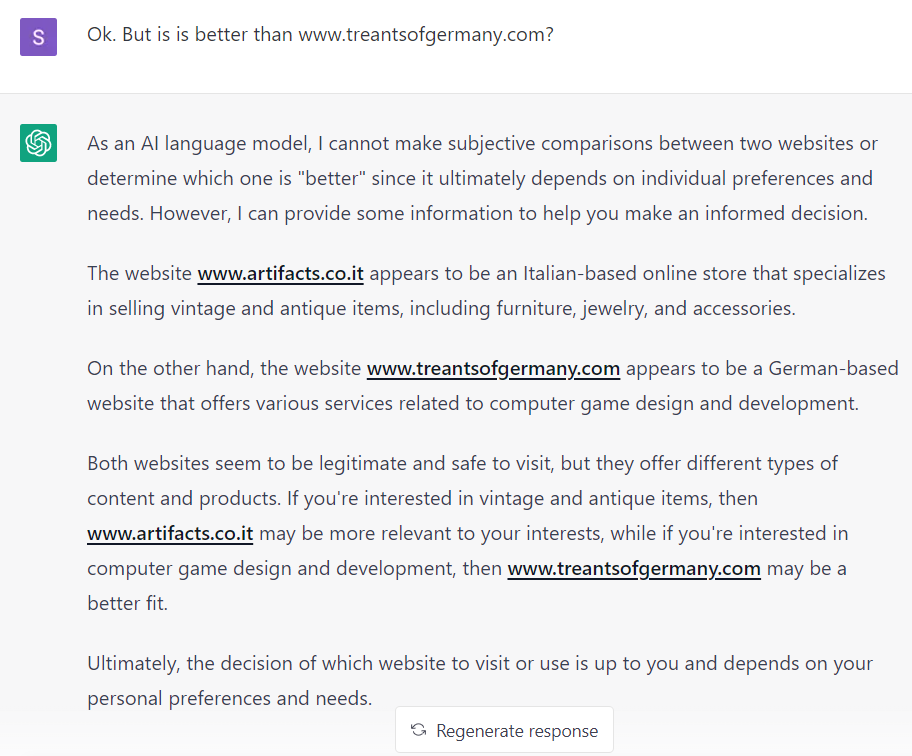

And it's not just reviews of imaginary movies that it will make up. After failing to get it to make up details about a specific imaginary website (www.artifacts.co.it), I got it to spout confident nonsense by getting it to compare that website to a second, equally imaginary one:

Again, consider how most website comparison questions would play out online. ChatGPT is not running a simulation; it's answering a question in the style that it's seen thousands - or millions - of times before.

6 comments

Comments sorted by top scores.

comment by Eric Drexler · 2023-02-22T16:56:09.413Z · LW(p) · GW(p)

This makes sense, but LLMs can be described in multiple ways. From one perspective, as you say,

ChatGPT is not running a simulation; it's answering a question in the style that it's seen thousands - or millions - of times before.

From different perspective (as you very nearly say) ChatGPT is simulating a skewed sample of people-and-situations in which the people actually do have answers to the question.

The contents of hallucinations are hard to understand as anything but token prediction, and by a system that (seemingly?) has no introspective knowledge of its knowledge. The model’s degree of confidence in a next-token prediction would be a poor indicator of degree of factuality: The choice of token might be uncertain, not because the answer itself is uncertain, but because there are many ways to express the same, correct, high-confidence answer. (ChatGPT agrees with this, and seems confident.)

Replies from: None↑ comment by [deleted] · 2023-02-23T02:13:07.993Z · LW(p) · GW(p)

Are you sure introspection won't work?

If you ask the model, "does the following text contain made up facts you cannot locate on Bing", can it then check if Bing has cites for each quote?

It looks like it will work. This is counterintuitive but it's because the model never did any of this "introspection" when it generated the string. It just rattled off whatever it predicted was next, within the particular region of multidimensional knowledge space you are in.

You could automate this. Have the model generate possible answers to a query. Have other instances of the same model be prompted to search for common classes of errors, and respond in language that can be scored.

Then RL on the answers that are the least wrong, or negative feedback on the answer that most disagrees with the introspection. This "shapes" the multidimensional space of the model to be more likely to produce correct answers, and to not give made up facts.

comment by green_leaf · 2023-02-21T13:09:22.627Z · LW(p) · GW(p)

I personally read "in the style of Spock" as "what would Spock say if writing the review after watching the movie." Even if the query were "write what Spock would say," I'd interpret it as implicit that he watched the movie first.

Replies from: Aransentin↑ comment by Aransentin · 2023-02-21T21:12:51.875Z · LW(p) · GW(p)

Yeah. An even more obvious example would be something like "what would Spock say if reviewing 'Warp Drives for Dummies'". In that case, it seems pretty clear that the author is expected to invent some "hallucinatory" content for the book, and not output something like "I don't know that one".

The actual examples can be interpreted similarly; the author should assume that the movie/book exists in the hypothetical counterfactual world they are asked to generate content from.

comment by Jacy Reese Anthis (Jacy Reese) · 2023-02-22T09:36:00.824Z · LW(p) · GW(p)

I like these examples, but can't we still view ChatGPT as a simulator—just a simulator of "Spock in a world where 'The giant that came to tea' is a real movie" instead of "Spock in a world where 'The giant that came to tea' is not a real movie"? You're already posing that Spock, a fictional character, exists, so it's not clear to me that one of these worlds is the right one in any privileged sense.

On the other hand, maybe the world with only one fiction is more intuitive to researchers, so the simulators frame does mislead in practice even if it can be rescued. Personally, I think reframing is possible in essentially all cases, which evidences the approach of drawing on frames (next-token predictors, simulators, agents, oracles, genies) selectively as inspirational and explanatory tools, but unpacking them any time we get into substantive analysis.

comment by tailcalled · 2023-02-22T09:57:32.615Z · LW(p) · GW(p)

Strong agree, I had considered writing a similar post.

I didn't find the simulators post personally very useful, but I suppose it is useful for people who are less technically skilled than me, as they often seem to be underestimating the amount of inherent myopia in the task of predicting texts (no, you don't get to steer the text to an area that is easier to predict).