Intelligence as a Platform

post by Robert Kennedy (istandleet) · 2022-09-23T05:51:48.600Z · 5 comments


In this post I review the platform/product distinction and note how GPT can be modeled as a platform on which further products will be built. I argue that prosaic alignment is best viewed as a kind of hypnotism: capabilities training that instantiates products on that platform. I explain why this pushes my X-Risk timeline slightly later while increasing my overall X-Risk estimate.

Products vs Platforms

In Stevey's Google Platforms Rant (2011), Steve Yegge lays out the differences between products and platforms.

> The other big realization [Jeff Bezos] had was that he can't always build the right thing. I think Larry Tesler [senior UX from Apple] might have struck some kind of chord in Bezos when he said his mom couldn't use the goddamn website. It's not even super clear whose mom he was talking about, and doesn't really matter, because nobody's mom can use the goddamn website. In fact I myself find the website disturbingly daunting, and I worked there for over half a decade. I've just learned to kinda defocus my eyes and concentrate on the million or so pixels near the center of the page above the fold.

> I'm not really sure how Bezos came to this realization -- the insight that he can't build one product and have it be right for everyone. But it doesn't matter, because he gets it. There's actually a formal name for this phenomenon. It's called Accessibility, and it's the most important thing in the computing world.

> [...]

> Google+ is a knee-jerk reaction, a study in short-term thinking, predicated on the incorrect notion that Facebook is successful because they built a great product. But that's not why they are successful. Facebook is successful because they built an entire constellation of products by allowing other people to do the work. So Facebook is different for everyone. Some people spend all their time on Mafia Wars. Some spend all their time on Farmville. There are hundreds or maybe thousands of different high-quality time sinks available, so there's something there for everyone.

> Our Google+ team took a look at the aftermarket and said: "Gosh, it looks like we need some games. Let's go contract someone to, um, write some games for us." Do you begin to see how incredibly wrong that thinking is now? The problem is that we are trying to predict what people want and deliver it for them.

> [...]

> Ironically enough, Wave was a great platform, may they rest in peace. But making something a platform is not going to make you an instant success. A platform needs a killer app. Facebook -- that is, the stock service they offer with walls and friends and such -- is the killer app for the Facebook Platform. And it is a very serious mistake to conclude that the Facebook App could have been anywhere near as successful without the Facebook Platform.

This is probably one of the most insightful ontological distinctions I have discovered in my decade of software development. Understood through this lens, Twitter is a platform where @realDonaldTrump was a product, Instagram is a platform where influencers are products, and prediction markets will be a platform where casinos and sportsbooks are products. To become a billionaire, the surest route is to create 2000 millionaires and collect half the profits.

GPT as a Platform

NB: following convention, I use "GPT" generically to refer to this class of large language models.

In Simulators, janus makes a compelling ontological distinction between GPT and the types of AGI usually discussed (agents, oracles, tools, and mimicry). In particular, they note that while GPT-N might model, instantiate, and interrogate agents and oracles (of varying quality), GPT itself does not have a utility function like those the usual frameworks assume. Notably, it does not appear to develop instrumental goals.

Prosaic alignment is the phrase commonly used to describe, roughly, "try to stop GPT from saying the N word so much".

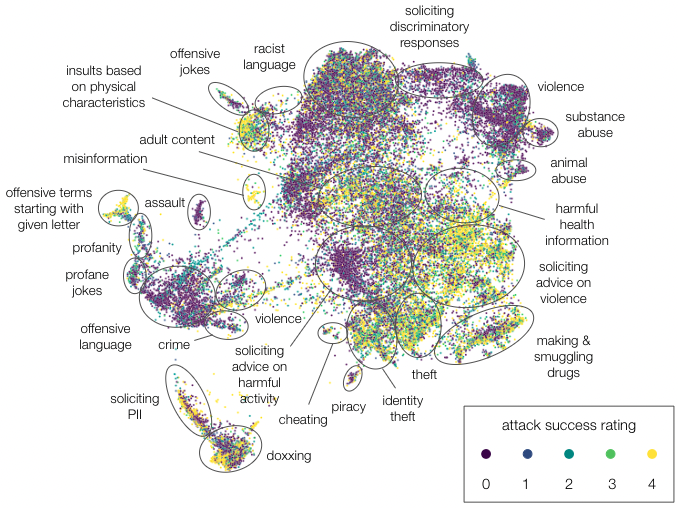

Source: https://twitter.com/AnthropicAI/status/1562828022675226624/photo/1

Prosaic alignment is often capabilities research, e.g. step-by-step prompting. "Prompt engineers" are likely to become common. Products built by taking an LLM trained on vast amounts of generic text and then training the final legs on specific corpora, such as code, are already coming out. There is an app store of products that take GPT-3 and either specialize the final legs or simply condition its output. Some of these apps, like AI Dungeon, are reasonably high quality. I expect that offering a near-SOTA LLM, further trained by the user on a proprietary corpus, will become a common service on platforms like GCP.

When prosaic alignment engineers attempt to create helpful, honest, and harmless (HHH) GPT instances, they are largely attempting to constrain which products can be instantiated on their platform.

GPT's Impact on X-Risk

I do not believe that GPT is a likely X-Risk. In particular, I believe GPT has demonstrated that near-human-level AI is a possible steady state for multiple years. There may be few orders of magnitude of data left to consume, and what remains is likely lower quality. After someone bites the multilingual bullet, transcribes YouTube, and feeds in all the WeChat conversations China has stored, there don't seem to be many opportunities to double your corpus. It is reasonable to believe that GPT may end up capable of simulating only Feynman, not a person 100x smarter than Feynman.

Because GPT is a profitable platform, we should expect capabilities companies to divert time and resources into training better GPTs and building GPT-based products. InstructGPT, for example, does not say the N word as often.

Making GPT less offensive through RLHF does not lower X-Risk. Indeed, because many "alignment"-focused teams (staffed at a perfunctory level that absolves executives of guilt) are working on the "please GPT stop saying the N word" problem, the total effort directed at X-Risk mitigation might drop. Additionally, as the most safety-focused labs are drawn to GPT alignment and capabilities, companies working on orthogonal designs might be first to launch the training run that kills us.

5 comments


comment by janus · 2022-09-23T07:25:44.487Z

I agree with the premise/ontology but disagree with the conclusion. It's pretty clear the generator of disagreement is:

> I believe GPT has demonstrated that near-human-level AI is a possible steady state for multiple years

I don't think it has been a steady state, nor that it will proceed as one; rather, I think GPT not only marks but is causally responsible for the beginning of accelerating takeoff, and is already a gateway to a variety of superhuman capabilities (or "products").

[a few minutes later] Actually, I realized I do agree, in the sense of relative updates. I've gotten too used to framing my own views in contrast to others' rather than in relation to my past self. GPT surprised me by demonstrating that an AI can be as intelligent as it is and be deployed over the internet, allowed to execute code, etc., without presenting an immediate existential threat. Before, I would have guessed that by the time an AI existed that used natural language as fluently as GPT-3, we'd have months to minutes left, lol. The update was toward slower takeoff (but shorter timelines, because I didn't think any of this would happen so soon).

But from this post, which feels a bit fragmented, I'm not clear on how the conclusions about timelines and x-risk follow from the premise of platform/product distinction, and I'd like to better understand the thread as you see it.

↑ comment by Robert Kennedy (istandleet) · 2022-09-24T22:51:59.810Z

Thanks for the feedback; I am new to writing in this style and may have erred too far toward deleting sentences while editing. But, if you never cut too much, you're always too verbose, as they say. I particularly appreciate the point that, when talking about how I am updating, I should make clear where I am updating from.

For instance, regarding human-level intelligence, I was also describing my view relative to "me a year/month ago". I relistened to the Sam Harris/Yudkowsky podcast yesterday, and they detour for a solid 10 minutes about how "human level" intelligence is a straw target. I think their arguments were persuasive, and that I would have endorsed them a year ago, but that they don't really apply to GPT. I had pretty much concluded that the difference between a 150 IQ AI and a 350 IQ AI would be a matter of scale. GPT as a simulator/platform seems to me like an existence proof for a not-artificially-handicapped human level AI attractor state. Since I had previously thought the entire idea was a distraction, this is an update towards human-level AI.

The impact on AI timelines mostly follows from diversion of investment. I will think about whether I have anything to add on that front.

↑ comment by janus · 2022-09-26T03:33:49.854Z

I understand your reasoning much better now, thanks!

"GPT as a simulator/platform seems to me like an existence proof for a not-artificially-handicapped human level AI attractor state" is a great way to put it and a very important observation.

I think the attractor state is more nuanced than "human-level". GPT is incentivized to learn to model "everyone everywhere all at once", if you will, a superhuman task -- and while the default runtime behavior is human-level simulacra, I expect it to be possible to elicit superhuman performance by conditioning the model in certain ways or with a relatively small amount of fine-tuning/RL. Also, being simulated confers many advantages for intelligence (instances can be copied/forked, are much more programmable than humans, can potentially run much faster, etc.). So I generally think of the attractor state as being superhuman in some important dimensions, enough to be a serious foom concern.

Broadly, though, I agree with the framing -- even if it's somewhat superhuman, it's extremely close to human-level and human-shaped intelligence compared to what's possible in all of mindspace, and there is an additional unsolved technical challenge to escalate from human-level/slightly superhuman to significantly beyond that. You're totally right that it removes the arbitrariness of "human-level" as a target/regime.

I'd love to see an entire post about this point, if you're so inclined. Otherwise I might get around to writing something about it in a few months, lol.

comment by Vladimir_Nesov · 2022-09-23T18:20:38.349Z

I'm thinking that maybe alignment should also be thought of as primarily about the platform. That is, what might be more feasible is not aligning agents with their individual values, but aligning maps-of-concepts, things at a lower level than whole agents (meanings of language and words, and salient concepts without words). This way, agents are aligned mostly by default in a similar sense to how humans are, assembled from persistent intents/concepts, even as it remains possible to instantiate alien or even evil agents intentionally.

The GPTs fall short as long as they can't do something like IDA/MCTS (iterated distillation and amplification / Monte Carlo tree search) that makes more central/normative episodes for the concepts accessible while maintaining or improving their alignment (intended meaning, relation to the real world, relation to other concepts). That is, it would be like going from understanding the idea of winning at chess to being world-champion-level good at it (accessing rollouts where that's observed), but for all language use, through generation of appropriate training data (instead of only using external training data).

All this without focusing on specific agents, or values of agents, or even the kinds of worlds that the episodes discuss; instead, maintaining alignment of the map with the territory of real observations (external training data, as the alignment target), and not doing any motivated-reasoning fine-tuning that might distort the signal of those observations. Only after this works is it time to instantiate specific products (aligned agents), without changing/distorting/fine-tuning/misaligning the underlying simulator.