Book Review: Existential Risk and Growth

post by jkim2 · 2021-10-11T01:07:27.320Z · LW · GW · 1 comment


Many questions about existential risk are speculative and hard to answer. Even specific ones, like "How have the odds of nuclear war changed after the United States withdrew from the Iran deal?", are multi-layered and rather chaotic. Because of this, there haven't been many attempts at answering more general questions, even though they could be more significant. One such question is: what's the effect of economic growth on existential risk?

Leopold Aschenbrenner's paper "Existential Risk and Growth" (2020) begins to answer this. Since it seems like it hasn't gotten as much attention as it should, hopefully more people will read the paper after this review. Since there is already a good summary out there, and already good discussion of it [EA · GW], I'll instead summarise that discussion and add some thoughts of my own, to save time for anyone who's interested. Any errors included here are mine.

Summary

TLDR:

Continued economic growth is needed to better mitigate risks of existential catastrophes. Or as Aschenbrenner states:

It is not safe stagnation and risky growth that we must choose between; rather, it is stagnation that is risky and it is growth that leads to safety.

Longer summary:

Of the existential risks that are human-induced, many could be caused by some form of technological progress. As Nick Bostrom imagines, the process of technological development is like "pulling balls out of a giant urn", some of which may turn out to be dangerous.

But because technology can outpace the safety mechanisms that regulate it, economic growth could be our best shot at developing the capacity for these safety mechanisms. Examples of dangerous technologies and their respective safety mechanisms include climate change and carbon capture technology, or artificial intelligence and successful alignment.

The paper argues that if humanity is currently in a "time of perils" [EA · GW], which is when we are advanced enough to have developed the means for our destruction but not advanced enough to care about safety, stagnation doesn't solve the problem because we would just stagnate at the same level of risk. Eventually, a catastrophe such as nuclear war would doom humanity. Instead, faster economic growth could initially increase risk, but would also help us get past the time of perils more quickly.

As economic growth makes people richer, they will invest more in safety, protecting against existential catastrophes. This is because the value of safety increases as people get richer, due to the diminishing marginal returns of consumption. As you grow richer, using an extra dollar to purchase consumption goods gives you less additional utility. However, as your life becomes better and better, you stand to lose more and more if you die. As a result, the richer people are, the greater the fraction of their income they're willing to sacrifice to protect their lives. That is, the fraction of resources that will be allocated to safety depends on how much value people put on their own lives.
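The reasoning above can be made concrete with a small sketch. It assumes an isoelastic utility function with an added constant, u(c) = u_bar + c^(1-gamma)/(1-gamma), which is a common specification in this literature but is an assumption here rather than something quoted from the paper. The values of `GAMMA` and `U_BAR` are purely illustrative. The quantity of interest is u(c)/u'(c), the value of a year of life measured in consumption units, expressed as a multiple of consumption.

```python
def utility(c, gamma, u_bar):
    """Flow utility u(c) = u_bar + c**(1 - gamma) / (1 - gamma) (assumed form)."""
    return u_bar + c ** (1.0 - gamma) / (1.0 - gamma)


def marginal_utility(c, gamma):
    """Derivative of the utility function above: u'(c) = c**(-gamma)."""
    return c ** (-gamma)


def value_of_life_multiple(c, gamma, u_bar):
    """Value of a year of life in consumption units, u(c) / u'(c), divided by c."""
    return utility(c, gamma, u_bar) / marginal_utility(c, gamma) / c


# Illustrative (hypothetical) parameter values, not taken from the paper.
GAMMA = 2.0
U_BAR = 2.0

consumption_levels = (1.0, 2.0, 4.0, 8.0)
multiples = [value_of_life_multiple(c, GAMMA, U_BAR) for c in consumption_levels]

for c, m in zip(consumption_levels, multiples):
    print(f"consumption={c:>4}: a year of life is worth {m:.1f}x annual consumption")
```

With gamma above 1, the extra utility from a marginal dollar shrinks faster than the utility of being alive does, so the multiple rises with consumption. That is the sense in which richer people are willing to give up a larger fraction of income for safety.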

One example given is the 1918 pandemic:

Today, we are putting much of life on hold to minimize deaths. By contrast, in 1918, nonpharmaceutical interventions were milder and went on only for a month on average in the U.S, even though the Spanish Flu was arguably deadlier and claimed younger victims. We are willing to sacrifice much more today than a hundred years ago to prevent deaths because we are richer and thus value life much more.

This means that without economic growth, society remains complacent about these long-term issues. Most people only care about short-term benefits to their wellbeing, and neglect the consequences that their actions will have on generations 100, 200, or 300 years into the future.

As a result, rapid economic growth would temporarily increase existential risk but would later dramatically decrease it. One example of innovation helping humanity overcome a large threat is smallpox.

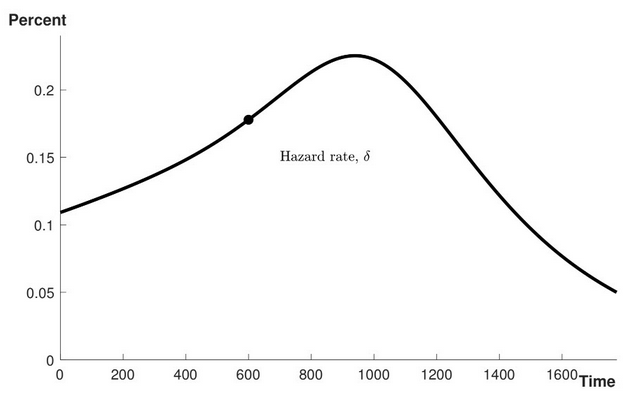

So in the end, the likelihood of existential risk against time follows a Kuznets-style inverted U-shape as below:

Apart from this main argument, there are also several interesting minor points made.

A division based on Ben Snodin's comprehensive post [EA · GW]:

1. Interesting Takeaways:

i) Richer people will care more about safety than consumption, increasing spending on existential risk reduction.

ii) World history will have a 'time of perils' where there is an existential threat that quickly needs to be addressed (this comes from a simple and believable set of parameters presented in the paper). From a longtermist perspective, this is action-relevant because the time of perils can be considered an existential risk version of the environmental Kuznets curve, where environmental degradation rises at first, but eventually falls as society becomes richer and more willing to spend on measures that improve environmental quality.

iii) Spending a constant amount on existential risk reduction, versus optimally allocating spending over time, doesn't make a difference to existential risk.

2. More interesting but less confident takeaway:

i) If you think that economic growth is more or less inevitable and that the sign of its impact on existential risk won't change over time, but you're not sure whether that sign is positive or negative, then you might as well speed up economic growth. The specific reasoning is given by Philip Trammell.

Apart from the value of these takeaways, this work is important for other interesting reasons. The same thinking can be applied to other problems. For example, if you're talking about the consumption of meat, you could argue that since the harm from meat production is static and continues over time, you might want to 'get over the curve' quickly. That is, maximise economic growth, thereby increasing meat consumption in the short term, before reaching solutions such as high-quality plant-based meat or widespread veganism. Additionally, Snodin makes a good point about why having this kind of work is helpful as a signal:

A separate consideration is one of signalling / marketing: it might be the case that creating an academic literature on the impact of economic growth on existential risk will mean that academics, philanthropists and, eventually, policymakers and the general public take existential risk more seriously.

Caveats & Criticisms

Here are potential caveats to the paper's argument, and several criticisms:

1. Technological change can be exogenous

The paper assumes that technological change is endogenous, relying on the Romer model, which holds that technological change is mostly driven by profit incentives. While the majority of technological change may indeed be endogenous, a lot is also driven by governments (through academia or agencies), non-profits, or even accidental discoveries. This should not be underestimated, especially because the impacts of technological change are heavy-tailed: low-probability events can have far greater impacts. Under a model of exogenous technological change, there could be different recommendations. For example, it would not be as permissible to raise existential risk temporarily, because doing so would not decrease it by very much in the long term.

2. Mostly true for static risks, less true for transition risks

The argument goes that while economic growth may somewhat increase existential risk in the short term, it will be worth it because in the long term the risk falls by far more. For example, take the chance of nuclear war occurring in any given year as 0.2%. If safety stagnates, then as time approaches infinity the probability that a nuclear war eventually occurs approaches certainty. Given this, the paper argues we should accept raising the annual risk to 0.8% for a short period of time, because it will then drop to 0.05%, or even 0, through safety measures. Taking the integral of the risk over time, the total is minimised when economic growth occurs and tapers the risk curve.
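To see how the arithmetic in this example plays out, here is a minimal sketch that compares the probability of surviving under the two paths. The annual risks (0.2%, 0.8%, 0.05%) come from the example above; the 50-year length of the high-risk period and the 1000-year horizon are hypothetical choices of mine, as is the simplification that each year's risk is independent.

```python
def survival_probability(annual_risks):
    """Probability of getting through every year, treating years as independent."""
    probability = 1.0
    for risk in annual_risks:
        probability *= 1.0 - risk
    return probability


PERIL_YEARS = 50   # hypothetical length of the temporarily riskier period
HORIZON = 1000     # hypothetical number of years to look ahead

# Stagnation: the annual risk stays at 0.2% forever.
stagnation = [0.002] * HORIZON

# Growth: the annual risk rises to 0.8% for a while, then falls to 0.05%.
growth = [0.008] * PERIL_YEARS + [0.0005] * (HORIZON - PERIL_YEARS)

short_run_stagnation = survival_probability(stagnation[:PERIL_YEARS])
short_run_growth = survival_probability(growth[:PERIL_YEARS])
long_run_stagnation = survival_probability(stagnation)
long_run_growth = survival_probability(growth)

print(f"after {PERIL_YEARS} years:  stagnation {short_run_stagnation:.3f}, growth {short_run_growth:.3f}")
print(f"after {HORIZON} years: stagnation {long_run_stagnation:.3f}, growth {long_run_growth:.3f}")
```

Under these assumptions the growth path looks worse over the first 50 years but clearly better over the full horizon, which is the trade-off the paper describes. The conclusion is sensitive to how long the risky period lasts and how far risk falls afterwards.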

The problem is that this is mostly true for static risks, which are constant over longer periods of time. It is less true for transition risks, or sudden risks that demand immediate action. Significant examples of these are Artificial General Intelligence (AGI), which, if misaligned, could presumably bring about the extinction of the human race, and biotechnology, where regulation would be necessary the moment the technology arrives. Unless AGI is aligned computationally, it may result in true disaster. This is also complicated by the fact that economic growth is what drives AGI development.

3. Some findings only apply for the chosen parameters

The main parameters that the paper chooses to build its model are the following (as Shulman organises) [EA · GW]:

𝜀: controls how strongly total production of consumption goods influences existential risk. The larger 𝜀, the larger the existential risk.

𝛽: controls how strongly the total production of safety goods influences existential risk. The larger 𝛽, the smaller the existential risk.

𝛾: the constant in the agent’s isoelastic utility function. Generally, 𝛾 controls how sharply the utility gain from an extra bit of consumption falls off as consumption increases.
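To make the roles of 𝜀 and 𝛽 more concrete, here is a rough sketch. It assumes a hazard rate of the form delta = delta_bar * C^epsilon * H^(-beta), where C is total consumption goods and H is total safety goods. This functional form is my understanding of the setup rather than a quotation, and `delta_bar`, the growth rate, and the simplification that C and H grow at the same rate are all hypothetical choices for illustration.

```python
import math


def hazard_rate(consumption, safety, epsilon, beta, delta_bar=0.001):
    """Assumed hazard rate: delta_bar * C**epsilon * H**(-beta)."""
    return delta_bar * consumption ** epsilon * safety ** (-beta)


def hazard_path(epsilon, beta, growth=0.02, years=(0, 100, 200)):
    """Hazard rate over time when C and H both start at 1 and grow at the same rate.

    This is a simplification: in a fuller model the split of resources between
    consumption and safety would itself change over time.
    """
    path = []
    for t in years:
        level = math.exp(growth * t)
        path.append(hazard_rate(level, level, epsilon, beta))
    return path


# Consumption's effect dominates: the hazard rate keeps rising with scale.
rising = hazard_path(epsilon=1.0, beta=0.5)

# Safety's effect dominates: the hazard rate falls with scale.
falling = hazard_path(epsilon=0.5, beta=1.0)

print("epsilon > beta:", [f"{h:.5f}" for h in rising])
print("epsilon < beta:", [f"{h:.5f}" for h in falling])
```

Under this assumed form, and for C and H above 1, raising epsilon raises the hazard rate while raising beta lowers it. When both inputs scale up together, whether risk trends up or down depends on the sign of epsilon minus beta.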

The most serious objections are:

- 𝜀 and 𝛽 aren’t really fixed. That is, the determinants of existential risk are actually more complex. In the end, the likely path of the hazard rate will come down to details. Some examples of such details are which technologies get developed in which order, how dangerous they turn out to be, and how stable and robust important institutions are.

- If 𝜀 >> 𝛽, then extinction is certain. Because of this, we might as well assume 𝜀 < 𝛽 and act on that belief, because it could result in an infinitely great future. But if we act on this basis, we will live far unhappier lives, as we'll spend most resources on safety. Alternatively, couldn't we live in a world where there is exclusively "safe" technology? For example, banning any form of AI and only using basic computing abilities.

- The hazard rate equation vastly simplifies how the state of the world could influence existential risk. There's not much empirical evidence to back up the accuracy of this equation.

- The model argues that the scale effect, 𝜀 vs 𝛽, is critical for determining whether we will avert existential catastrophe. But isn’t this just an artefact of the model, which follows from the assumed relationship between existential risk, total consumption, and total safety production?

- Building an unsafe AGI parallelizes better than work on building a safe AGI. Unsafe AGI also benefits more in expectation from having more computing power than safe AGI. Both claims, from an argument by Eliezer Yudkowsky [LW · GW], imply that slower growth is better from an AI x-risk viewpoint.

4. Existential risk policy is not a global collective utility-maximising decision

Shulman makes a convincing criticism [EA · GW] that argues against the assumption that we can coordinate economic growth. He points out that if this assumption were true, the world wouldn't have a lot of these problems to begin with. In his words:

It also would have undertaken adequate investments in surveillance, PPE, research, and other capacities in response to data about previous coronaviruses such as SARS to stop COVID-19 in its tracks. Renewable energy research funding would be vastly higher than it is today, as would AI technical safety. As advanced AI developments brought AI catastrophic risks closer, there would be no competitive pressures to take risks with global externalities in development either by firms or nation-states.

Conflict and bargaining problems, he argues, are entirely responsible for war and military spending, and are central to the failure to address climate change and to the threat of AI catastrophe. He points out that solving these problems, rather than changing the actual speed of economic growth, is how to reduce existential risk even when consumption is high.

Questions & Thoughts

In the end, this work is important because if true, it's a convincing argument for why economic growth is not only ok but is actually necessary. On top of that, it offers a more general and abstract look at existential risk unlike a lot of granular and specific work done about particular technologies or political situations.

Some follow-up questions off the back of this that are worth thinking about:

- How can we compare the value of these two options?

  - Increasing safety research (e.g. a philanthropist investing in AI safety research)

  - Improved international coordination (e.g. moving from a decentralised national allocation of safety spending to a more organised international effort)

- What would happen if you use a different growth model — in particular, one that doesn't depend on population growth?

If this was interesting, I also recommend Radical Markets by Eric Posner and Glen Weyl. This website contains its major ideas - how markets can be made more equal to confront large sociopolitical shifts in coming years.

1 comment


comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2021-10-11T10:46:35.399Z · LW(p) · GW(p)

I find such stylised models quite unhelpful - not so much "wrong" as "missing the point". X-risks aren't a generalised and continuous quantity; they're very specific discrete events - and to make the modelling more fun, don't have any precedents to work from. IMO outside-view economic analysis just isn't useful here, where the notion of a (implicitly iid) hazard rate doesn't match the situation.

X-safety is also not something you can "produce", it's not fungible or a commodity. If you want safety we all have to avoid doing anything unsafe; offsets aren't actually possible in the long run (or IMO short term, but that's an additional argument).

It also would have undertaken adequate investments in surveillance, PPE, research, and other capacities in response to data about previous coronaviruses such as SARS to stop COVID-19 in its tracks.

Trivially yes, but note that Australia and New Zealand both managed national elimination by June 2020 without having particularly good mask policies or any vaccines! China also squashed COVID with only NPIs! Of course doing so on a global scale is more difficult, but it's a coordination problem, not one of inadequate technical capabilities.