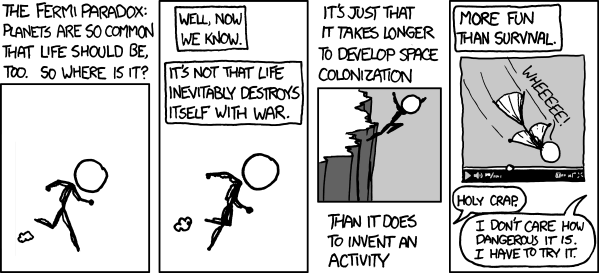

FAI-relevant XKCD

post by Dr_Manhattan · 2011-11-22T13:28:44.070Z · LW · GW · Legacy · 9 comments

9 comments

Comments sorted by top scores.

comment by MichaelHoward · 2011-11-22T14:51:26.105Z · LW(p) · GW(p)

If you link to the page instead of the image, it'll save everyone googling it for the mouseover.

Or, put the mouseover text in quotes after the http bit of the link like I did above.

Replies from: Dr_Manhattan

↑ comment by Dr_Manhattan · 2011-11-22T14:59:48.285Z · LW(p) · GW(p)

Thanks - I did not have the original link.

Replies from: endoself

↑ comment by endoself · 2011-11-22T22:42:36.059Z · LW(p) · GW(p)

The xkcd website has a search function (scroll down just past the comic).

comment by MichaelHoward · 2011-11-23T00:15:28.274Z · LW(p) · GW(p)

I think today's xkcd is more relevant. Has anyone good figures on what's spent on things like cryonics research, or rational attempts to improve humanity's rationality, or maybe existential risk reduction, trying to save the future of the next several billion years and 100 million galaxies, to compare with the stuff on this chart?

comment by JoshuaZ · 2011-11-22T17:05:50.912Z · LW(p) · GW(p)

Sorry, maybe I'm dense. How is this FAI relevant?

Replies from: Dr_Manhattan, dlthomas

↑ comment by Dr_Manhattan · 2011-11-22T17:15:50.167Z · LW(p) · GW(p)

"It takes longer to develop value-preserving AI technologies than to develop stuff that's cool but dangerous ('more fun than survival')"

↑ comment by dlthomas · 2011-11-22T17:12:47.234Z · LW(p) · GW(p)

Via the notion of a Great Filter, through existential risk generally. It's a bit of a stretch, for sure, but the link is there.

Replies from: JoshuaZ