The Structure of the Pain of Change

post by ReverendBayes (vedernikov-andrei) · 2025-04-13T21:51:53.823Z

If there's something in your life that isn't quite good enough and you'd like to change it, chances are you can't just swap one thing for another directly. There are many reasons for that, but this post won’t focus on the various obstacles or how to overcome them. Instead, we'll try to describe the structure of such transitions using a “soft” mathematical model.

The foundation for this post is Section 5 of Vladimir Arnold’s booklet “Hard and Soft Mathematical Models.” I’ll not only summarize the key conclusions from that section but also explain which assumptions they rely on and how exactly the conclusions follow.

This post doesn’t contain any heavy math; it focuses mainly on qualitative results. So even if you're not particularly into mathematics, you should still be able to follow along. To follow every step, familiarity with the concepts from AP Calculus AB will be more than enough.

Systems Resist Restructuring

For the purposes of this post, we'll use the word “system” to refer to anything whose state can be more or less functional—ranging from a person’s daily habits or a company’s workflow, to processes on the scale of entire industries, nations, or the world. And what we want to understand, on a qualitative level, is this: Why can’t we simply replace something that’s working poorly with something that works better (i.e., something less wrong)?

The core issue is that systems tend to settle into local optima. Their internal processes are more or less tuned, patched together with duct tape, and roughly adjusted to fit one another. If there's an obvious way to improve the system without significant downside, that change usually happens quickly. So even if a system didn’t start out in a local optimum, it typically finds its way there soon enough. But getting out of that local optimum can be hard.

Let’s say we realize that there’s a better configuration of the system nearby in “state space”—a setup that would yield a higher utility value. In order to reach it, though, we’d have to pass through a region of significantly worse performance. Essentially, we would need to dismantle part of the current system and build a new one. At some point, we’d end up with a Frankenstein's monster stitched together from two broken systems. And this hybrid is likely to perform very poorly.

To make this more concrete, let’s look at a couple of examples.

Let’s start with a person who really dislikes their job. You’d think that if they found a better one, they’d want to make the switch. But over the years, their current setup may have become the foundation for several important parts of their life—things that aren’t easy to give up.

Maybe there’s a great coffee shop near the office. The half-hour bus ride has become their habitual trigger for listening to audiobooks. Complaining about the terrible job is a major theme in Friday-night conversations with friends—and, more broadly, a key channel for getting emotional support.

If this person finds a better job, they’ll have to find a new coffee shop, a new time to listen to audiobooks, and a new reason to meet up with friends (as well as new topics to talk about). All of this is solvable—but it actually has to be solved.

Another example: Magical Britain, as depicted in the Harry Potter books. There's a well-known employment problem for wizards. Many jobs can be done with magic, others are outsourced to Muggles—but the political need to provide employment leads to an overinflated Ministry of Magic. I wouldn’t be surprised to find a Department for Oversight of Oversight Departments, or individual Vice Presidents for Goblin Affairs, Centaur Affairs, House-Elf Relations, Gnome Management, Troll Coordination, and so on.

Naturally, all of this consumes countless useless wizard-hours, generates mountains of pointless paperwork, and burns through huge amounts of Galleons. And yet, if someone tried to optimize this bureaucracy by firing all the freeloaders, they'd immediately face some serious questions: What do we do with half the country suddenly unemployed? What happens to all the empty offices and idle Floo fireplaces? Are we going to have to rewrite the entire Hogwarts curriculum?

Again, all of these problems are solvable. But in the moment when you're standing in the middle of the chaos—after tearing down the old system but before building the new one—you’ll probably find yourself thinking wistfully of that old (if not entirely wise) saying: “If it ain't broke, don't fix it.”

Core Assumptions About System Transitions

Now let’s take a closer look at what it means for a system to move from one “more or less okay” state to a “better” one. Here are the assumptions we’ll be working with:

- The utility function is a function of a single variable;

- The function is continuous and smooth (i.e., continuously differentiable, and twice differentiable wherever we talk about concavity);

- The function has two local maxima. The system currently resides in the lower one (with a smaller utility value), and we want to transition to the higher one.
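To make these assumptions concrete, here is a minimal sketch of one function that satisfies them. The quartic `utility` below is purely an illustrative assumption (it comes neither from Arnold's booklet nor from any real system). It is chosen so that the lower local maximum sits at x = 0, the valley at x = 1, and the higher local maximum at x = 3.

```python
def utility(x: float) -> float:
    """Toy utility whose derivative is U'(x) = -x (x - 1) (x - 3)."""
    return -x**4 / 4 + 4 * x**3 / 3 - 3 * x**2 / 2


# Sample the curve on a grid and pick out interior local extrema
# by comparing each sample with its two neighbours.
STEP = 0.01
xs = [-1 + i * STEP for i in range(501)]  # covers the interval [-1, 4]
us = [utility(x) for x in xs]

maxima = [round(xs[i], 2) for i in range(1, len(xs) - 1)
          if us[i] > us[i - 1] and us[i] > us[i + 1]]
minima = [round(xs[i], 2) for i in range(1, len(xs) - 1)
          if us[i] < us[i - 1] and us[i] < us[i + 1]]

print("local maxima:", maxima)
print("local minima:", minima)
print("utility at the maxima:", [round(utility(x), 3) for x in maxima])
```

The system "currently resides" at the left maximum, and the transition we care about is the trip across the valley to the right one.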

Let’s clarify a few things.

First: what does it even mean to say that the utility function is a function of some number of variables? Here are a few simple examples: “How much useful stuff can I get done in a day, as a function of what time I wake up?” “How well does a department perform, depending on the number of employees?” “How productive is the economy, given various parameters of economic intervention?” and so on.

In other cases, it’s harder to describe everything using numerical parameters. More often, a system's configuration is expressed in procedural terms like, “I start my day by checking email, then do Task A, then Task B, then take a half-hour lunch…”—that kind of thing.

We can try to translate such a description into configuration space. Suppose we divide an ideal workday into time intervals indexed by $i = 1, \dots, n$. Each interval could be represented as a triple of values $(e_i, p_i, m_i)$, where:

- $e_i$ is the cognitive effort spent during that interval,

- $p_i$ is the intrinsic productivity of the task being done,

- and $m_i$ is how helpful the task is from a meta or process-level perspective.

Then the full workday becomes a point in the configuration space: $(e_1, p_1, m_1, \dots, e_n, p_n, m_n)$. All that remains is to define a utility function on that space.

Of course, this isn't the best modeling approach in many respects—but it’s a useful illustration of the kind of abstraction we’re talking about.
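As a rough sketch of this abstraction, the workday can be written down as a small data structure and flattened into a single point. The names `Interval`, `to_point`, and `toy_utility`, as well as the weights inside `toy_utility`, are hypothetical and chosen only for illustration; the post itself does not specify any particular utility function.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Interval:
    effort: float        # cognitive effort spent during the interval
    productivity: float  # intrinsic productivity of the task
    meta_value: float    # usefulness at the meta / process level


def to_point(day: List[Interval]) -> List[float]:
    """Flatten a workday into one point of the configuration space."""
    point: List[float] = []
    for interval in day:
        point.extend([interval.effort, interval.productivity, interval.meta_value])
    return point


def toy_utility(point: List[float]) -> float:
    """Hypothetical utility: reward output, penalise effort quadratically."""
    total = 0.0
    for k in range(0, len(point), 3):
        effort, productivity, meta_value = point[k:k + 3]
        total += productivity + 0.5 * meta_value - 0.1 * effort**2
    return total


day = [Interval(2.0, 3.0, 1.0), Interval(1.0, 2.0, 4.0)]
point = to_point(day)
print(point)
print(toy_utility(point))
```

A day with n intervals becomes a point with 3n coordinates, which is exactly why the single-variable picture used in the rest of the post is a simplification.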

The second clarification is that a single-variable utility function is, of course, a massive simplification. In real life, any system’s utility function depends on a large number of variables—like in the workday example above.

That said, the behavior of multivariable functions is often not too different from that of single-variable ones (though in some ways it is—e.g., the number of saddle points can increase dramatically). More importantly, we’re not going to directly apply mathematical results from this oversimplified model to real-world systems. Instead, we’re using the simple model as a source of insight. When looking at a real system, we’ll try to reason about it from scratch—borrowing ideas from pure math wherever they seem applicable.

The third point is that assuming the function is continuous and smooth is fairly natural when thinking about real-world systems. Building an accurate model of a real system (other than a few simple, purely mechanical ones) is usually infeasible. But for rough, first-pass models, assuming a smooth and continuous utility function is often good enough.

Now that we’ve laid out our basic assumptions, let’s look at the conclusions we can draw from them.

Any Initial Change Is a Decline

We all know the saying, “Not every change is an improvement, but every improvement is a change.” But here we’re not talking about the end result—we’re focusing on the process of change itself, which comes with its own dynamics.

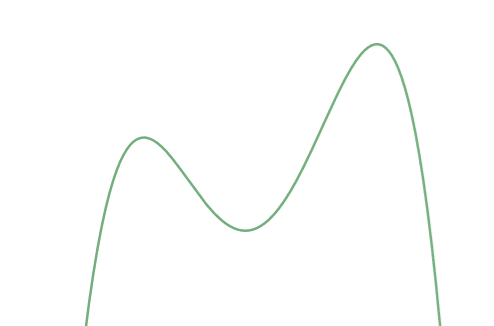

Just by looking at the graph, we can see the issue: if the system is sitting at a local maximum, then every small step in any direction leads to a worse state. Even if the current state is terrible, chances are we’ve spent some time optimizing it with duct tape, zip ties, and a prayer. The current setup might be a kind of hell, but it’s the most comfortable spot in the immediate vicinity of wherever in that hell we happened to land.

Restructuring the system toward a better configuration usually means removing at least some of the dirty hacks that made it marginally functional—because they won’t fit the new design anyway. In effect, we have to dismantle some existing subsystem and build a new one in its place. Naturally, this new subsystem won’t play nicely with the rest of the old configuration. A half-carriage, half-boat contraption almost always looks—and functions—as disastrously as it sounds.

Of course, once the transition to the new configuration is complete, everything will likely be much better—but until then, the jury-rigged monstrosity has to somehow survive.

Interestingly, beyond the qualitative conclusion that “things get worse before they get better,” we can also say something quantitative: if we move from the old configuration toward the new one at a constant rate, the rate of degradation will actually increase during the first stretch of the transition.

This follows from a simple fact about smooth utility functions: near a local maximum, the function is concave downward, meaning its first derivative is decreasing. Just past the maximum that derivative is negative, so as it keeps decreasing, its absolute value (that is, the rate of decline) increases.
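A quick numerical check of this claim, as a sketch on an assumed toy utility (the quartic below is an illustrative choice with its lower maximum at x = 0 and its valley at x = 1, not something taken from the booklet): we walk away from the maximum in equal steps and record how much utility each step costs.

```python
def utility(x: float) -> float:
    """Toy utility whose derivative is U'(x) = -x (x - 1) (x - 3)."""
    return -x**4 / 4 + 4 * x**3 / 3 - 3 * x**2 / 2


STEP = 0.1
starts = [i * STEP for i in range(4)]  # 0.0, 0.1, 0.2, 0.3

# Utility lost on each equal-sized step away from the old optimum.
drops = [utility(a) - utility(a + STEP) for a in starts]

for a, d in zip(starts, drops):
    print(f"step from {a:.1f} to {a + STEP:.1f} costs {d:.4f}")
```

Every step has the same length, yet each one costs more than the one before it, as long as we stay in the concave-downward region around the old maximum.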

Informally, you can think of it like this: the fewer duct tape patches remain, the more expensive it becomes to remove each one. Often, parts of a system provide redundancy—if one fails, another can compensate. So removing the first patch only slightly weakens the overall structure. But as you keep going, more and more of the remaining parts are load-bearing. The fewer defenders left in the fortress, the more painful each additional loss becomes.

Resistance to Change Increases During the Transition

Let’s start by defining what we mean by resistance to change. A natural way to express it is in terms of the rate at which the system's state deteriorates: the more costly the next step is, the less motivated we are to take it. In other words, the more painful it is to remove the next piece of duct tape, the more we want to leave it in place.

As we just showed, near the first local maximum, each step makes things worse faster than the previous one. So resistance naturally grows as the transition progresses.

Note, however, that we’re not talking about some kind of knee-jerk conservatism that resists any deviation from the status quo—even beneficial ones. Instead, we’re describing a kind of “locally rational” (if short-sighted) resistance: the worse the immediate outlook for the next step, the stronger the pushback against taking it.

Maximum Resistance Happens Before the Worst Point

The peak of resistance occurs at the inflection point—where the second derivative of the utility function is zero. Since the function is concave downward near the first local maximum and concave upward near the utility valley between the two optima, there must be at least one inflection point in between.

If there are several inflection points, and therefore possibly several local maxima of “resistance to change,” one (or more) of them will be the highest. Which one exactly isn’t especially important.
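The following sketch locates the peak of resistance on an assumed toy utility (an illustrative quartic with the old maximum at x = 0 and the valley at x = 1). Here `resistance` is simply the rate of decline, i.e., the negated derivative; both function names are hypothetical.

```python
def utility(x: float) -> float:
    """Toy utility whose derivative is U'(x) = -x (x - 1) (x - 3)."""
    return -x**4 / 4 + 4 * x**3 / 3 - 3 * x**2 / 2


def marginal(x: float) -> float:
    """First derivative U'(x) of the toy utility."""
    return -x * (x - 1) * (x - 3)


def resistance(x: float) -> float:
    """Rate of decline: how much each small step forward costs."""
    return -marginal(x)


# Scan the stretch between the old maximum (x = 0) and the valley (x = 1).
STEP = 0.001
grid = [i * STEP for i in range(1001)]
x_peak = max(grid, key=resistance)

print("resistance peaks near x =", round(x_peak, 3))
print("utility at the peak:", round(utility(x_peak), 3))
print("utility at the valley:", round(utility(1.0), 3))
```

For this particular function the second derivative is -3x² + 8x - 3, which vanishes at x = (4 - √7)/3, so the scan should land close to that value. The peak lies well before the valley at x = 1: the derivative is still negative there, and utility keeps falling for a while after the hardest step is behind us.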

Naturally, overcoming resistance requires willpower or some other kind of resource, and at some point, the cost of taking the next step may exceed what we can afford. Just because we managed to push through the early stages doesn’t mean we’ll be able to handle the hardest part.

And even after we’ve pushed past the peak of resistance, the system’s performance will keep getting worse for a while. Yes, the rate of decline will start to decrease—but decline is still happening, and we’ll need to continue dismantling the old system (and degrading its functionality) before we reach the turning point. And that still requires effort.

In other words, even if we grit our teeth and manage to get past the highest point of resistance in a single push, we still have to budget enough strength to make it through the rest of the transition. You could say that you can’t reach the summit without climbing the steepest part of the slope, but even once you’ve done that, you need enough stamina to finish the rest of the ascent. Just remember that in this mountaineering metaphor, we’re not climbing the utility function itself; we’re climbing the curve of its rate of decline, that is, the absolute value of its first derivative.

After the Worst Point, the System Begins to Pull Toward the Better State

This part is fairly intuitive: to the right of the local minimum, the utility function starts increasing again. Every new step now brings local improvement rather than further degradation—so we actually want to keep moving forward.

This turning point usually comes when the new, more effective configuration has been assembled well enough to offset the damage done by the remnants of the old one. At that stage, the legacy parts are not only malfunctioning on their own, but also interacting poorly with the new components during the transition phase.

The More Advanced the System, the Deeper the Drop on the Way to Improvement

There’s a claim in the booklet that the greater the final improvement (i.e., the utility gap between the starting and ending states), the deeper the valley you have to pass through at the local minimum. And that each successive leap to a higher local maximum requires going through an even deeper trough.

Strictly speaking, I wasn’t able to find a mathematical assumption that would make this always true. It’s easy to draw counterexamples—utility functions where each subsequent improvement requires only a minimal dip, like a staircase with shallow notches between the steps.
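One such "staircase" can be sketched explicitly. The function below is an assumed example of my own choosing, U(x) = x + A·sin(2πx)/(2π) with A slightly above 1, so that U'(x) = 1 + A·cos(2πx) briefly turns negative once per unit interval. Each local maximum is a full unit higher than the previous one, while the dip that precedes the next climb never gets deeper.

```python
import math

A = 1.2  # must exceed 1, otherwise U' never changes sign and there are no notches


def utility(x: float) -> float:
    """Staircase-like utility with U'(x) = 1 + A * cos(2 * pi * x)."""
    return x + A * math.sin(2 * math.pi * x) / (2 * math.pi)


theta = math.acos(-1 / A)
x_max = theta / (2 * math.pi)  # local maximum inside [0, 1)
x_min = 1 - x_max              # local minimum that follows it

peaks = [utility(k + x_max) for k in range(4)]
dips = [utility(k + x_max) - utility(k + x_min) for k in range(4)]

for k in range(4):
    print(f"peak {k}: height {peaks[k]:.4f}, dip before the next climb {dips[k]:.4f}")
```

So within the model's assumptions alone, a larger cumulative improvement does not force a deeper trough; any such tendency has to come from additional structure of the real system.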

But here’s a qualitative explanation that makes the intuition plausible. A system can achieve good performance not just by having effective individual parts, but by being well-structured at the meta-level: its subsystems are tightly tuned to each other and can reinforce one another. A significantly better configuration is likely to differ in many key ways, because we’ve already applied most of the “best practices” available within the current paradigm. If there’s a better setup out there, it probably rests on an entirely different ideology. A horse can only go so fast—if we want more speed, we need an engine.

And once the new version is built around a different paradigm, the old and new configurations are likely to be deeply incompatible. As a result, we’ll have to dismantle many subsystems that not only worked well on their own, but also contributed to the functionality of their neighbors. The intermediate collapse, then, can be quite dramatic.

A Strong Enough Push Can Skip the Resistance

In other words, if we manage to move the system not gradually (in “small steps”) but in a jump large enough to land us near the new optimum—skipping over the minimum point—then we enter a region where the system naturally starts pulling toward the better state.

In reality, transitions aren’t perfectly continuous anyway; we tend to move in bigger or smaller leaps. And sometimes, a single leap is large enough that every step after it leads immediately to improvement.

Of course, such a jump is easier to pull off when the system is still relatively unoptimized, and the two local optima are close together. As time goes on, pulling this off gets much harder.

It’s also worth noting that we don’t necessarily have to jump all the way to a state that’s already better than the original. It may be enough to leap just beyond the local minimum—so long as we land on the other side. From there, even if the new state is still pretty bad, the system will begin to be drawn toward the new local maximum rather than back toward the old one.

Put differently: to the left of the local minimum, the system is pulled back toward the old configuration; to the right, it starts drifting toward the new one. Roughly speaking, whichever half-assembled version looks more viable at that point is the one that will feel more natural to finish building.
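This "pull" can be illustrated with a short simulation, under the assumption that the system takes small locally greedy steps (plain gradient ascent) on an assumed toy utility with the old maximum at x = 0, the valley at x = 1, and the new maximum at x = 3. The function names and the step size are hypothetical.

```python
def utility(x: float) -> float:
    """Toy utility whose derivative is U'(x) = -x (x - 1) (x - 3)."""
    return -x**4 / 4 + 4 * x**3 / 3 - 3 * x**2 / 2


def marginal(x: float) -> float:
    """First derivative U'(x) of the toy utility."""
    return -x * (x - 1) * (x - 3)


def settle(x: float, rate: float = 0.01, steps: int = 5000) -> float:
    """Repeatedly take small steps in whichever direction improves utility."""
    for _ in range(steps):
        x += rate * marginal(x)
    return x


print("jump lands at 0.9, system settles near", round(settle(0.9), 3))
print("jump lands at 1.1, system settles near", round(settle(1.1), 3))
print("utility right after the longer jump:", round(utility(1.1), 3))
```

A jump that lands just short of the valley slides back to the old configuration, while one that lands just past it drifts on to the new optimum, even though the landing point itself is worse than where we started.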

Limitations

At the beginning of this post, we made a few fairly reasonable assumptions about the system—assumptions we’ve relied on throughout our reasoning. Now it’s time to highlight a couple of important limitations of this model.

First, we haven’t accounted for the possibility that the utility landscape itself might change over time. In real systems, external factors can shift the shape of the utility function significantly, sometimes leading to entirely different dynamics.

Second, we haven’t considered momentum in state-space movement. During a system transition, we might build up so much speed that we overshoot the optimal stopping point (a local maximum) and keep going. We might end up stuck in a worse maximum on the far side of the intended target—or even drift off into uncharted and dysfunctional territory.

If the problem was “hammer in a nail,” we naturally reach for a hammer—but from that point on, everything can start looking like a nail. The same applies to locally useful techniques and strategies (or even entire external teams brought in to implement them): it’s often hard to stop, even after we’ve passed the point where those strategies are still appropriate.
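The momentum effect described in this section can be sketched by adding a velocity term to small greedy steps (a "heavy-ball" update). This is an assumed toy setup, not part of the original model: the utility is an illustrative quartic with its higher maximum at x = 3, and `run`, `momentum`, and `rate` are hypothetical names and values. The sketch only shows the overshoot itself; on this particular landscape there is nothing beyond the target to get stuck in.

```python
def marginal(x: float) -> float:
    """Derivative U'(x) = -x (x - 1) (x - 3) of the toy utility."""
    return -x * (x - 1) * (x - 3)


def run(x: float, momentum: float, rate: float = 0.01, steps: int = 5000):
    """Return (final position, furthest position reached along the way)."""
    velocity = 0.0
    furthest = x
    for _ in range(steps):
        # Part of the previous step carries over into the next one.
        velocity = momentum * velocity + rate * marginal(x)
        x += velocity
        furthest = max(furthest, x)
    return x, furthest


final_plain, far_plain = run(1.5, momentum=0.0)
final_heavy, far_heavy = run(1.5, momentum=0.9)

print("without momentum: furthest point", round(far_plain, 3))
print("with momentum:    furthest point", round(far_heavy, 3))
```

Without momentum the system creeps up to the new optimum and stops there. With momentum it sails past x = 3 before the landscape pulls it back, which is the window in which a real system could end up somewhere it never intended to go.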

Conclusion

We’ve explored a fairly simple mathematical model describing how a system transitions from one local maximum to another—and we’ve drawn a number of useful insights about how that process tends to unfold. Of course, these conclusions shouldn’t be applied directly to real-world situations. But the general reasoning pattern can often be repeated, leading to similar conclusions based on the specific gears-level models of whatever system you’re dealing with.

Naturally, the fact that improvements often come with pain doesn’t mean we shouldn’t strive for better outcomes. It just means it’s helpful to be aware of the hidden pitfalls—so we’re better prepared to navigate them.
