ChatGPT goes through a wormhole hole in our Shandyesque universe [virtual wacky weed]

post by Bill Benzon (bill-benzon) · 2022-12-11T11:59:15.635Z


Sometime last week I decided to see if I could provoke ChatGPT to produce something in the style of Tristram Shandy. Little did I know...

Let’s have some fun. Are you ready to play?

Of course! I'm always ready to play and have some fun. What game would you like to play?

Let’s write a parody. Tell a story about Elon Musk on Mars in the style of Tristram Shandy.

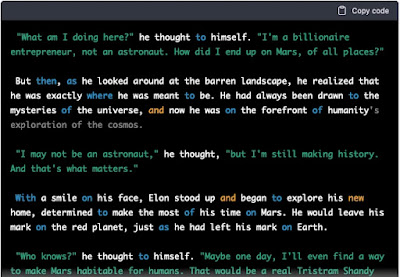

Elon Musk sat on the red dusty surface of Mars, gazing up at the endless expanse of the Martian sky. He had finally done it - he had fulfilled his lifelong dream of becoming the first human to set foot on the red planet. As he sat there, lost in thought, he couldn't help but feel a sense of Tristram Shandy-like absurdity at the situation he found himself in.

Hmmm...Why did you put that code in there? (But I like the colors.)

For some reason it got hung up while responding to that prompt, so I had to reset the thread. It also looks as though I may have missed the very last line: there is no period at the end, and the text just hangs there. In my experience ChatGPT doesn’t make that kind of error.

No matter. Where’d that formatting come from? It’s obviously the formatting ChatGPT uses when it generates computer code, which it can do. The black background sets it apart from ordinary text, and the different colors mark different functional kinds of text.

But... I didn’t ask it for a computer program. I asked it for a story in the style of Tristram Shandy. Given that the book plays typographical tricks beyond its many digressions, including a black page, is this Chat’s idea of Shandy style? I don’t know.

I continued the session, and it produced two more code-formatted segments, neither of which I had asked for, along with much else. We can skip that. Over the next couple of days I tried the original Shandy prompt once or twice more, but I didn’t get any code formatting.

Then, just yesterday (Sat. 10 December), I read a story in The New York Times in which a 7-year-old girl had asked it “for a computer program that could predict the path of a ball thrown through the air,” among other things. So I logged on to the Chatster and made the same request. It complied, formatting the code in the now familiar way. I asked it two more times, and it gave me a program each time. The programs differed, but each came in the code format. We need not look at them; you’ll take my word for it, right?

So, I decided to ask it something else:

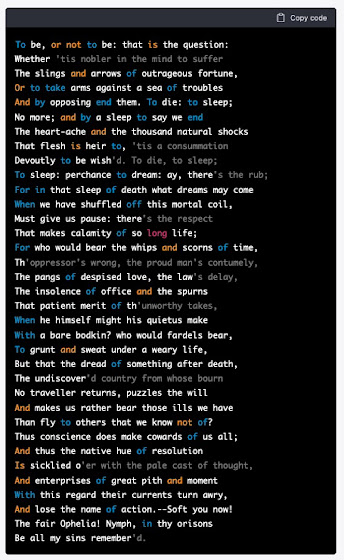

Can you recite Hamlet's "To be or not to be"?

Sure, here is the full text of Hamlet’s “To be or not to be” soliloquy:

I hope you find this helpful! Let me know if you have any other questions.

There’s that code format, again! I didn’t ask for it, but I got it anyhow. Chat must have picked it up from earlier in the session, when I’d been asking it for code.

So – why not? – once again I asked it for Elon on Mars. Just to be sure, I explicitly asked it for code format:

Let’s write a parody. Tell a story about Elon Musk on Mars in the style of Tristram Shandy. Format it as a computer program.

There it is, colored text on a black background. And this time there’s text and punctuation in there that looks like code. Again, I didn’t ask for any of this. It just came, unbidden.

Now, if you look closely at that text you’ll see up there at the top that it defines the string “name” as “Elon Musk.” If you then take the rest of the text, substitute “Elon Musk” every time you find “<

Once upon a time, Elon Musk decided to take a trip to Mars.

But before he could even pack his bags, Elon Musk got sidetracked by a question.

"Should I bring ketchup or mustard with me to Mars?"

This question consumed Elon Musk for days, as he weighed the pros and cons of each condiment.

In the end, he decided to bring both ketchup and mustard with him to Mars, just to be safe.

And so, with his bags packed and his condiments in tow, Elon Musk set off on his journey to the red planet."

But little did he know, the true adventure was yet to begin...

I’m speechless. It is indeed a wonderful world.
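The substitution described above is ordinary string templating: a variable holds the name, and every placeholder in the text is replaced by its value. Here is a minimal Python sketch of that idea, using the first few sentences of the story. It assumes a hypothetical `{name}` placeholder; the token ChatGPT actually used is cut off above, so this is an illustration of the mechanism, not a reconstruction of its output.

```python
# Minimal sketch of the name substitution described above.
# ASSUMPTION: "{name}" is a hypothetical placeholder; the token ChatGPT
# actually used in its code-formatted story is not reproduced here.
name = "Elon Musk"

template_lines = [
    "Once upon a time, {name} decided to take a trip to Mars.",
    "But before he could even pack his bags, {name} got sidetracked by a question.",
    "This question consumed {name} for days, as he weighed the pros and cons of each condiment.",
]

# str.format fills every {name} slot with the value bound to `name`.
story = "\n".join(line.format(name=name) for line in template_lines)
print(story)
```

Changing the single assignment to `name` changes the protagonist everywhere, which is presumably why a program-style layout would define the name once at the top.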

* * * * *

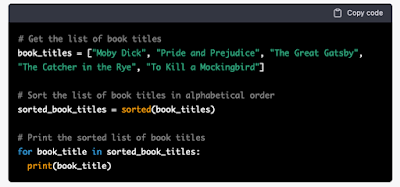

The process seems to be replicable. In a different session later that evening I first asked ChatGPT for a program to sort a list of books alphabetically:

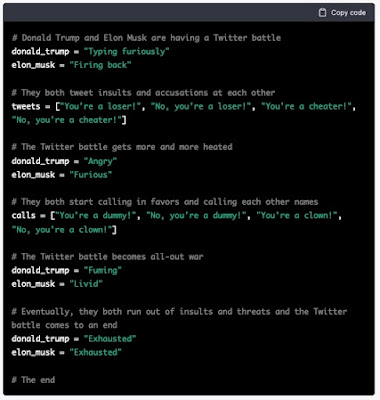

It also gave me the program’s output, showed me how to get the list in reverse order, and gave me that output as well. I then asked it for two more stories in the manner of Tristram Shandy, one with Musk on Mars and one with Trump on safari. But my favorite is a Twitter battle between Trump and Musk:

I agree: Exhausted!

2 comments


comment by Sean Hardy (sean-hardy) · 2022-12-11T17:12:36.701Z

I think I've missed the point/purpose of this post. What exactly are you highlighting: that ChatGPT doesn't know when to format text as code? It has seemed to know reliably which formatting to use when I've interacted with it.

reply by Bill Benzon (bill-benzon) · 2022-12-11T18:15:00.184Z

I started with two examples of text that aren't code, and yet somehow they got formatted that way. Why does that happen? It doesn't happen very often; I've got a Word doc that's 100 pages long (35K words) consisting of copies of output from ChatGPT.

And then I asked it to produce some text and to format the result as code. It did, which is what I asked. But how did it decide how to do that? Or why didn't it just tell me, "You're not asking for code, so code format doesn't make sense"? In the case of that last Trump/Musk example, I didn't prompt it to format the story as code, but it did so anyway. Why? I note that earlier in that session I had asked it to write a simple sorting program, which it did, and to tell a story about Musk on Mars in code format, which it also did.

There's something very curious and interesting going on here. But there's no specific point I had in mind beyond that.