How I got so excited about HowTruthful

post by Bruce Lewis (bruce-lewis) · 2023-11-09T18:49:06.556Z · LW · GW · 3 comments

This is the script for a video I made about my current full-time project. I think the LW community will understand its value better than the average person I talk to does.

Hi, I'm Bruce Lewis. I'm a computer programmer. For a long time, I've been fascinated by how computers can help people process information. Lately I've been thinking about and experimenting with ways that computers help people process lines of reasoning. This video will catch you up on the series of thoughts and experiments that led me to HowTruthful, and tell you why I'm excited about it. This is going to be a long video, but if you're interested in how people arrive at truth, it will be worth it.

Ten or fifteen years ago I noticed how online discussion forums really don't work well for persuading people of your arguments. Instead of focusing on facts, people get sidetracked, make personal attacks, and go in circles. Very rarely do you see anyone change their mind based on new evidence.

This got me thinking about what might be a better format than posts, comments and replies for arguments. I thought about each statement being its own thing, and statements would be linked by whether one statement argues for or against another statement. If you repeat a statement it would use the existing thing rather than making a new one, making it easier to avoid going in circles. And it would encourage staying focused on the topic at hand and not getting sidetracked.

I kept this idea in the back of my head, but didn't do anything with it.

A few years later, I was baffled by the success of Twitter. Its only distinguishing feature at that time was that you were limited to 140 characters. Everybody complained about this. But I started to think the limit was the secret to its success. Yes, it's a pain that you're limited to 140 characters. You have to work hard to make what you want to say concise enough to fit. But the great thing was that everyone else also had to work hard to be concise.

So the idea of forced conciseness stirred around in the back of my mind and started to mix with my other idea about a format for arguments that would help people stay focused. And then a third idea joined them, for making it quick and easy for people when they're ready to change their mind.

In 2018, when political discussion in my country was getting very polarized, these three ideas came to the front of my mind and I started working seriously on them. Or, I should say, working on them as seriously as I could in my spare time while holding an unrelated day job. I did get a working version onto a domain I bought, howtruthful.com, but it didn't get traction.

Then this year, in January, I got an email from my employer saying that they were reducing their workforce, and my role was impacted. My employment was to end 9 months later on October 27. I went through a sequence of responses to this. First I had a sinking feeling, and it seemed unreal. Then later, after I looked at the severance package and bonus for staying the whole 9 months to the exit date, I thought it was all great. I would have enough money to work full time for months on projects that I think are valuable, like HowTruthful. Then, a few months later, I had nagging doubts. Maybe I should find a transfer position and not leave my employer.

There were a lot of considerations in this big career decision, and I set up appointments with 3 different volunteer career coaches who had experience with entrepreneurship. I met with the first one and explained my dilemma, but didn't say anything about HowTruthful. He listened intently, then said, "This is not something I can decide for you. Here's what I suggest you do. Get a piece of paper. Write down all the pros and all the cons of staying here, and see if the decision becomes obvious."

I couldn't help laughing out loud. Then I told him that the project I was considering working on full-time was one for organizing pros and cons. But I took his advice, and the results are right here.

This is an opinion page on HowTruthful. An opinion has three parts: the main statement up at the top, a truthfulness rating, which is the colored circles numbered one through five, and then evidence, both pro and con. For those who haven't seen HowTruthful before I'll explain each of the three parts.

First, the main statement. It's not a question. It's a sentence stated with certainty. As you change your mind about how truthful the main statement is, you don't change the sentence. You only change the truthfulness rating. This is how formal debates work. And even for an issue that you're deciding by yourself, changing the truthfulness rating is faster than editing a sentence to reflect how truthful you think the fact is.

And that brings us to the truthfulness rating. This is your opinion, not the computer's. Like the statement and the evidence, this is by humans, for humans. It's a simple five-point scale. One is false, three is debatable, five is true, and there are just two in-between ratings.

You might be asking, where do you draw the line between each rating? My suggestion, not in any way enforced, is to use this scale in a way that's practical to act upon, not according to any percent probability. For example, the two statements "If I go out today with no umbrella I won't get wet" and "If I drive today with no seatbelt I won't get hurt" could have the same percent probability, but you'd rate one false and the other true based on their practical applications.
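One way to make the umbrella and seatbelt comparison concrete is a small expected-cost sketch. Everything in it is an assumption made for illustration: the function name `practical_rating`, the cost numbers, and the rule itself (treat a statement as true when the expected harm of acting on it is smaller than the cost of the precaution). HowTruthful does not compute or enforce anything like this; the rating is still chosen by a person.

```python
def practical_rating(p_true: float, harm_if_wrong: float, precaution_cost: float) -> int:
    """Return 5 (true) or 1 (false) on the five-point scale.

    Hypothetical rule: act as if the statement is true when the expected
    harm of being wrong is smaller than the cost of taking the precaution.
    """
    expected_harm = (1.0 - p_true) * harm_if_wrong
    return 5 if expected_harm < precaution_cost else 1


# Same assumed probability for both statements; costs are made-up units.
umbrella = practical_rating(p_true=0.999, harm_if_wrong=5, precaution_cost=1)
seatbelt = practical_rating(p_true=0.999, harm_if_wrong=100_000, precaution_cost=0.1)
print(umbrella, seatbelt)
```

With these made-up numbers the umbrella statement comes out true and the seatbelt statement comes out false, even though both were given the same probability. Only the stakes differ.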

OK, so finally there's the evidence. Anything one might say to argue for the main statement goes under Pro. Anything one might say to argue against it goes under Con. Just like the main statement, these each have their own truthfulness rating that can be changed quickly without having to edit the sentence. For example, this first argument for staying at my employer wasn't always rated false. If instead of changing it to false I had to edit it to say "there's no transfer position..." that would have made it an argument against the main statement and I would have had to move it to the other section.

Now when I say "just like the main statement" I really mean it. Because just like with the main statement, there can be sub-arguments for each of the pros and cons. That's what the numbers in parentheses mean. For example, there's one con argument for this first one. If we click through, now we're treating the thing we clicked on as the main statement. And you can keep going down as deep as the argument calls for.
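For readers who think in code, the three parts of an opinion and the way they nest can be sketched as a small recursive data structure. This is only an illustration, not HowTruthful's actual implementation: the class name `Opinion`, its fields, the `label` helper, and the example statements are all invented here, and the sketch assumes the number in parentheses is the total count of pro and con sub-arguments.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Opinion:
    statement: str                # a sentence stated with certainty; never a question
    rating: Optional[int] = None  # 1 = false, 3 = debatable, 5 = true; None = unrated
    pro: List["Opinion"] = field(default_factory=list)  # arguments for the statement
    con: List["Opinion"] = field(default_factory=list)  # arguments against it

    def set_rating(self, rating: int) -> None:
        """Change your mind without editing the sentence."""
        if rating not in (1, 2, 3, 4, 5):
            raise ValueError("rating must be an integer from 1 to 5")
        self.rating = rating

    def label(self) -> str:
        """Statement text plus the sub-argument count in parentheses (assumed format)."""
        count = len(self.pro) + len(self.con)
        return f"{self.statement} ({count})" if count else self.statement


# Hypothetical statements, loosely modeled on the career decision in the video.
main = Opinion("I should stay at my current employer", rating=2)
transfer = Opinion("A suitable transfer position is available", rating=3)
transfer.con.append(Opinion("No open position matches my skills", rating=4))
main.pro.append(transfer)

# Later evidence changes the rating, not the sentence or its section.
transfer.set_rating(1)
print(transfer.label())
```

Because every pro or con is itself an `Opinion`, clicking through to a sub-argument corresponds to treating that object as the new main statement.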

I realize this is very different from other web sites. It's unfamiliar and takes getting used to. But look at the clarity and focus that results once you put it all together. There are so many considerations underneath this decision, but now they're organized under 5 main arguments. I can get the big picture a lot faster.

Everything I've shown you so far is available to use now, and is free. Of course, to be sustainable this has to make money. And I don't think advertising really fits on a site where people are trying to ascertain truth.

I'll show you the paid part. I'm going to click the pencil up here to edit my opinion, then expand the editing options here, and change this from private to public. So keeping your opinions to yourself is free, sharing them with the world is the paid version. By charging 10 dollars per year (that's right, year, not month), about the cost of a paperback book, I can make it costly for people to create accounts they know will be shut down for violating the terms of service, while keeping it cheap for people who want to sincerely seek truth.

I'm looking for five people in the next week who are also excited about this idea and are willing to invest $10 in addition to their time to help me figure out where to take it from here. But even if you're not one of those, give it a spin in the free functionality and let me know what you think. Just visit howtruthful.com and click the "+ Opinion" button in the lower left. You don't need to log in.

3 comments

comment by DPiepgrass · 2024-05-20T20:52:56.532Z · LW(p) · GW(p)

I like that HowTruthful uses the idea of (independent) hierarchical subarguments, since I had the same idea. Have you been able to persuade very many to pay for it?

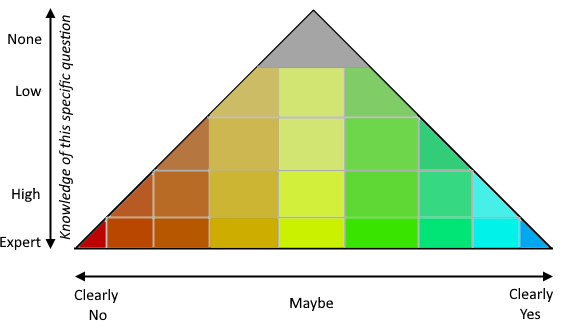

My first thought about it was that the true/false scale should have two dimensions, knowledge & probability:

One of the many things I wanted to do on my site was to gather user opinions, and this does that. ✔ I think of opinions as valuable evidence, just not always valuable evidence about the question under discussion (though to the extent people with "high knowledge" really have high knowledge rather than pretending to, it actually is evidence). Incidentally I think it would be interesting to show it as a pyramid but let people choose points outside the pyramid, so users can express their complete certainty about matters they have little knowledge of...

Here are some additional thoughts about how I might enhance HowTruthful if it were my project:

(1) I take it that the statements form a tree? I propose that statements should form a DAG (directed acyclic graph ― a cyclic graph could possibly be useful, but would open a big can of worms). The answer to one question can be relevant to many others.

(2) Especially on political matters, a question/statement can be contested in various ways:

- "ambiguous/depends/conflated/confused": especially if the answer depends on the meaning of the question; this contestation can be divided into just registering a complaint, on the one hand, and proposing a rewording or that it be split into clearer subquestions, on the other hand. Note that if a question is split, the original question can reasonably keep its own page so that when someone finds the question again, they are asked which clarified question they are interested in. If a question is reworded, potentially the same thing can be done, with the original question kept with the possibility of splitting off other meanings in the future.

- "mu": reject the premise of the question (e.g. "has he stopped beating his wife?"), with optional explanation. Also "biased" (the question proposes its own answer), "inflammatory" (unnecessarily politicized language choice), "dog whistle" (special case of politicized language choice). Again one can imagine a UI to propose rewordings.

- sensitive (violence/nudity): especially if pic/vid support is added, some content can be simultaneously informative/useful and censored by default

- spam (irrelevant and inappropriate => deleted), or copyright claim (deleted by law)

(3) The relationship between parent and child statements can be contested, or vary in strength:

- "irrelevant/tangential": the statement has no bearing on its parent. This is like a third category apart from pro/evidence in favor and con/evidence against.

- "miscategorized": this claims that "pro" information should be considered "con" and vice versa. Theoretically, Bayes' rule is useful for deciding which is which.

This could be refined into a second pyramid representing the relationship between the parent and child statements. The Y axis of the pyramid is how relevant the child statement is to the parent, i.e. how correlated the answers to the two questions ought to be. The X axis is pro/con (how the answer to the question affects the answer to the parent question.) Note that this relationship itself could reasonably be a separate subject of debate, displayable on its own page.

(4) Multiple substatements can be combined, possibly with help from an LLM. e.g. user pastes three sources that all make similar points, then the user can select the three statements and click "combine" to LLM-generate a new statement that summarizes whatever the three statements have in common, so that now the three statements are children of the newly generated statement.

(5) I'd like to see automatic calculation of the "truthiness" of parent statements. This offers a lot of value in the form of recursion: if a child statement is disproven, that affects its parent statement, which affects the parent's parent, etc., so that users can get an idea of the likelihood of the parent statement based on how the debate around the great-grandchild statements turned out. Related to that, the answers to two subquestions can be highly correlated with each other, which can decrease the strength of the two points together. For example, suppose I cite two sources that say basically the same thing, but it turns out they're both based on the same study. Then the two sources and the study itself are nearly 100% correlated and can be treated altogether as a single piece of evidence. What UI should be used in relation to this? I have no idea.

(6) Highlighting one-sentence bullet points seems attractive, but I also think Fine Print will be necessary in real-life cases. Users could indicate the beginning of fine print by typing one or two newlines; also the top statement should probably be cut off (expandable) if it is more than four lines or so.

(7) I propose distinguishing evidentiary statements, which are usually but not always leaf nodes in the graph. Whenever you want to link to a source, its associated statement must be a relevant summary, which means that it summarizes information from that source relevant to the current question. Potentially, people can just paste links to make an LLM generate a proposed summary. Example: if the parent statement proposes "Crime rose in Canada in 2022", and in a blank child statement box the user pastes a link to "The root cause': Canada outlines national action plan to fight auto theft", an LLM generates a summary by quoting the article: "According to 2022 industry estimates [...], rates of auto theft had spiked in several provinces compared to the year before. [Fine print] In Quebec, thefts rose by 50 per cent. In Ontario, they were up 34.5 per cent."

(8) Other valuable features would include images, charts, related questions, broader/parent topics, reputation systems, alternative epistemic algorithms...

(9) Some questions need numerical (bounded or unbounded) or qualitative answers; I haven't thought much about those. Edit: wait, I just remembered my idea of "paradigms", i.e. if there are appropriate answers besides "yes/true" and "false/no", these can be expressed as a statement called a "paradigm", and each substatement or piece of available evidence can (and should) be evaluated separately against each paradigm. Example: "What explains the result of the Michelson–Morley experiment of 1887?" Answer: "The theory of Special Relativity". Example: "What is the shape of the Earth?" => "Earth is a sphere", "Earth is an irregularly shaped ellipsoid that is nearly a sphere", "Earth is a flat disc with the North Pole in the center and Antarctica along the edges". In this case users might first place substatements under the paradigm that they match best, but then somehow a process is needed to consider each piece of evidence in the context of each subparadigm. It could also be the case that a substatement doesn't fit any of the current paradigms well. I was thinking that denials are not paradigms, e.g. "What is the main cause of modern global warming?" can be answered with "Volcanoes are..." or "Natural internal variability and increasing solar activity are..." but "Humans are not the cause" doesn't work as an answer ("Nonhumans are..." sounds like it works, but allows that maybe elephants did it). "It is unknown" seems like a special case where, if available evidence is a poor fit to all paradigms, maybe the algorithm can detect that and bring it to users' attention automatically?

Another thing I thought a little bit about was negative/universal statements, e.g. "no country has ever achieved X without doing Y first" (e.g. as evidence that we should do Y to help achieve X). Statements like this are not provable, only disprovable, but it seems like the more people who visit and agree with a statement, without it being disproven, the more likely it is that the statement is true... this may impact epistemic algorithms somehow. I note that when a negative statement is disproven, a replacement can often be offered that is still true, e.g. "only one country has ever achieved X without doing Y first".

(10) LLMs can do various other tasks, like help detect suspicious statements (spam, inflammatory language, etc.), propose child statements, etc. Also there could be a button for sending statements to (AI and conventional) search engines...
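Suggestions (1) and (5) above, statements forming a DAG and ratings propagating upward from child to parent, can be sketched in a few lines. This is a toy illustration under loud assumptions, not a feature of HowTruthful and not a worked-out epistemic algorithm: the function name `derived_score`, the edge format, and the averaging rule are all invented here, and the sketch ignores the correlated-evidence problem raised in (5). Scores run from -1 (false) through 0 (debatable) to +1 (true). A child counts toward its parent in proportion to how true it is, so a disproven pro argument contributes nothing rather than counting against the parent.

```python
from typing import Dict, List, Optional, Set, Tuple

# parent statement -> list of (child statement, stance); stance is +1 for pro, -1 for con
Edges = Dict[str, List[Tuple[str, int]]]


def derived_score(node: str, edges: Edges, leaf_ratings: Dict[str, int],
                  cache: Optional[Dict[str, float]] = None,
                  visiting: Optional[Set[str]] = None) -> float:
    """Derive a score in [-1, 1] for `node` from its descendants (toy rule)."""
    cache = {} if cache is None else cache
    visiting = set() if visiting is None else visiting
    if node in cache:                 # a shared statement is scored only once
        return cache[node]
    if node in visiting:              # the graph must stay acyclic
        raise ValueError(f"cycle detected at {node!r}")
    children = edges.get(node, [])
    if not children:
        # Leaf: map the human 1..5 rating onto -1..+1.
        score = (leaf_ratings[node] - 3) / 2
    else:
        visiting.add(node)
        total = 0.0
        for child, stance in children:
            child_score = derived_score(child, edges, leaf_ratings, cache, visiting)
            total += stance * (child_score + 1) / 2  # weight by how true the child is
        visiting.discard(node)
        score = total / len(children)
    cache[node] = score
    return score


# Hypothetical graph: D supports both B and E, so it has two parents (a DAG, not a tree).
edges = {"A": [("B", +1), ("C", -1)], "B": [("D", +1)], "E": [("D", +1)]}
before = derived_score("A", edges, {"C": 1, "D": 5})
after = derived_score("A", edges, {"C": 1, "D": 1})  # D gets disproven
print(before, after)
```

In this example, disproving the grandchild D lowers the derived score of A from 0.5 to 0.25 without anyone touching A or B directly, which is the recursive effect described in (5).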

comment by Perhaps · 2023-11-22T16:09:33.765Z · LW(p) · GW(p)

I like the idea, but as a form of social media it doesn't seem very engaging, and as a single source of truth it seems strictly worse than, say, a wiki. Maybe look at Arbital; they seem to have been doing something similar. I also feel that dealing with complex sentences with lots of implications would be tough, since there are many different premises that lead to a statement.

Personally I'd find it more interesting if each statement was decomposed into the premises and facts that make it up. This would allow tracing an opinion back to find the crux between your beliefs and someone else's. I feel like that's a use case that could live alongside conventional wikis, maybe even as an extension powered by LLMs that works on any highlighted text.

Love to see more work into truth-seeking though, good luck on the project!

Replies from: bruce-lewis

↑ comment by Bruce Lewis (bruce-lewis) · 2023-11-24T04:20:36.339Z · LW(p) · GW(p)

Agreed there's a lot of work ahead in making it engaging.

I define "pro" as anything one might say in defense of a statement, and that includes decomposing it. It can also include disambiguating it. Or citing a source.

Thanks for the well-wishes. Only two paid users so far, but I'm getting very useful feedback and will have a second iteration with key improvements.