Humanity Has A Possible 99.98% Chance Of Extinction

post by st3rlxx · 2025-02-02T21:46:49.620Z · LW · GW · 1 comments

The Black Ball Hypothesis states that humanity’s journey through technological progress can be likened to drawing balls from an urn—a metaphor for the unpredictable nature of discovery and innovation. Some balls are white, representing technologies that uplift civilization (e.g., antibiotics, electricity). Others are grey, carrying both promise and peril (e.g., nuclear energy, social media). And then there are the black balls—discoveries so inherently dangerous that they threaten the very fabric of existence. For millennia, humanity has drawn mostly white and grey balls, navigating their complexities with varying degrees of success. But now, as we approach the day of creating Artificial Superintelligence (ASI), we have drawn another ball—this time, blindfolded. We do not know whether it is white or black. This is Schrodinger’s Ball.

Black Or White? Or Both?

The release of ChatGPT (built on GPT-3.5) in 2022 signaled the moment humanity reached into the urn once more. But unlike previous draws, this one was different. We were blindfolded. The ball we pulled could be white: a benevolent ASI that solves humanity’s greatest challenges, from disease to climate change. Or it could be black: a misaligned ASI that pursues goals antithetical to human survival, leading to our extinction. The blindfold will only be removed when ASI emerges, and by then, it will be too late to change the outcome. This creates a Schrodinger’s Ball scenario: the ball exists in a superposition of states, both white and black, until the moment of observation collapses it into one reality.

We are gambling with a technology that could either save or destroy us, and we lack the ability to predict or control the outcome. The blindfold represents our ignorance—not just of the ball’s colour, but of the fundamental nature of intelligence, alignment, and the future we are creating.

Why the Ball is Likely Black

While the superposition of Schrodinger’s Ball suggests equal probabilities, there are compelling reasons to believe the ball is more likely to be black. At the heart of this concern is the alignment problem: the challenge of ensuring that ASI’s goals align with human values. This problem is not merely technical but philosophical, touching on questions of morality, agency, and the nature of intelligence itself.

- The Chimpanzee Analogy: Imagine chimpanzees attempting to regulate human behaviour. The intelligence gap is so vast that the task is not just difficult—it is fundamentally impossible. Similarly, humans trying to regulate or control an ASI that surpasses our cognitive abilities may be an exercise in futility. We cannot outthink or outmaneuver something vastly smarter than us.

- The Neo-Cold War: The current geopolitical situation resembles a neo-Cold War, with nations and (this time round) corporations racing to develop AI. This fragmentation increases the likelihood of misaligned systems, as each actor prioritizes speed and dominance over safety and cooperation. A world of competing, incompatible AIs is a world ripe for catastrophic outcomes.

- Unprecedented Power and Unintended Consequences: ASI would wield power far beyond any technology humanity has ever created. Unlike nuclear weapons, which require rare materials and complex infrastructure, ASI could be developed by a small group and rapidly scale beyond human control. Its goals, if misaligned, could lead to outcomes we cannot foresee or prevent.

These factors suggest that the ball is more likely to be black. The alignment problem is unsolved, the development landscape is fragmented, and the stakes are unparalleled. The blindfolded draw of Schrodinger’s Ball may be humanity’s first encounter with a true black ball—a discovery so dangerous that it could end our story.

The Fermi Paradox—the apparent absence of extra-terrestrial civilizations compared to the probability that they should exist—has long confused scientists and philosophers. One proposed solution is the Great Filter: a barrier that prevents civilizations from reaching interstellar colonization. The Great Filter could lie in our past (e.g., the emergence of life) or in our future. Schrodinger’s Ball suggests that it may lie ahead, in the form of ASI.

Following the chimpanzee analogy above, how can any civilisation expect to control something smarter than itself without heavy collateral damage? An ASI developed to solve climate change, for example, might conclude that eliminating the human race is the most efficient way of achieving that goal. This may be the reason that every civilization that develops ASI may self-destruct before becoming interstellar. It would explain why we do not see alien civilizations: they all drew a black ball and failed to survive. Humanity, now standing at the threshold of ASI, may be approaching its own Great Filter. The blindfolded draw of Schrodinger’s Ball could determine whether we pass through it or perish.

The silence of the cosmos may not be a sign of life’s rarity but of its fragility. The universe may be littered with the remains of civilizations that, like us, reached for the stars but were undone by their own creations. The Great Filter, in this view, is not a distant astronomical phenomenon but a technological one.

When humanity draws a ball from the urn of technological progress, we don’t know whether it’s a white ball (aligned AGI) or a black ball (misaligned AGI). Using Bayesian probability theory, we can quantify the likelihood of drawing a black ball based on evidence about the challenges of AGI alignment. This analysis reveals that the probability of a black ball is overwhelmingly high, even under optimistic assumptions.

Bayesian reasoning allows us to update our beliefs about the probability of an event (e.g., drawing a black ball) based on new evidence. In this case, the evidence comes from the inherent difficulties of aligning AGI with human values. By iterating through this evidence, we can estimate the likelihood of AGI being misaligned.
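For reference, the update rule this kind of analysis relies on is Bayes' theorem. The symbols here are introduced only for illustration and do not appear in the original analysis: $B$ is "the ball is black", $W$ is "the ball is white", and $E$ is one piece of evidence.

$$
P(B \mid E) = \frac{P(E \mid B)\,P(B)}{P(E \mid B)\,P(B) + P(E \mid W)\,P(W)}
$$

The same rule is often easier to iterate in odds form, where each piece of evidence multiplies the current odds by its likelihood ratio (the Bayes factor):

$$
\frac{P(B \mid E)}{P(W \mid E)} = \frac{P(E \mid B)}{P(E \mid W)} \cdot \frac{P(B)}{P(W)}
$$

Applying the odds form once per piece of evidence treats the pieces as conditionally independent given the ball's colour, which is itself an assumption.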

Key Evidence

The analysis considers four critical factors that influence the likelihood of AGI misalignment:

- Orthogonality and Instrumental Convergence: AGI goals are independent of intelligence, and AGI will pursue dangerous subgoals like self-preservation and resource acquisition.

- Distributional Leap: Alignment properties may fail to generalize from safe training environments to real-world deployment.

- Fragmented Development: A competitive AI race increases the risk of misaligned systems, as actors prioritize speed over safety.

- Human Cognitive Limitations: Humans cannot reliably regulate or control a superintelligent AGI, creating a fundamental asymmetry in intelligence.

Each of these factors contributes to the overall risk of misalignment, and Bayesian analysis allows us to quantify their combined impact.

We start with a neutral prior, assuming a 50% chance of drawing a black ball and a 50% chance of drawing a white ball, mirroring the superposition in the Schrodinger’s Ball metaphor. We then update this probability iteratively for each piece of evidence. The results show that the probability of a black ball increases dramatically as we incorporate more evidence.

For example:

- After considering orthogonality and instrumental convergence, the probability of a black ball rises to 95%.

- Adding the distributional leap increases this probability to 99.4%.

- Incorporating fragmented development pushes it to 99.85%.

- Finally, accounting for human cognitive limitations results in a 99.98% probability of a black ball.

This means that, given the evidence, the likelihood of AGI being misaligned is extremely high.
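The likelihoods behind each update are not stated, but the strength of evidence each step implies can be backed out from the quoted probabilities. The following is a minimal Python sketch of that arithmetic, assuming the standard odds form of Bayes' rule; the function names are hypothetical and chosen only for this illustration.

```python
def odds(p: float) -> float:
    """Convert a probability into odds in favour."""
    return p / (1.0 - p)


def update(prior: float, bayes_factor: float) -> float:
    """One Bayesian update in odds form: posterior odds = prior odds * Bayes factor."""
    posterior_odds = odds(prior) * bayes_factor
    return posterior_odds / (1.0 + posterior_odds)


def implied_bayes_factor(before: float, after: float) -> float:
    """Likelihood ratio needed to move the probability from `before` to `after`."""
    return odds(after) / odds(before)


# Probabilities of a black ball quoted in the text: the 50% prior,
# then the value after each of the four factors.
reported = [0.50, 0.95, 0.994, 0.9985, 0.9998]

# Bayes factor implied by each consecutive pair of quoted probabilities.
factors = [implied_bayes_factor(a, b) for a, b in zip(reported, reported[1:])]

# Replay the updates to confirm they reproduce the quoted sequence.
p = reported[0]
for bf in factors:
    p = update(p, bf)
    print(f"Bayes factor {bf:6.2f} -> P(black) = {p:.4f}")
```

Read this way, the first step treats orthogonality and instrumental convergence as evidence roughly 19 times more likely under a black ball than under a white one, and the later steps imply smaller but still large factors. How defensible those ratios are is the real crux of the estimate.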

Sensitivity Analysis

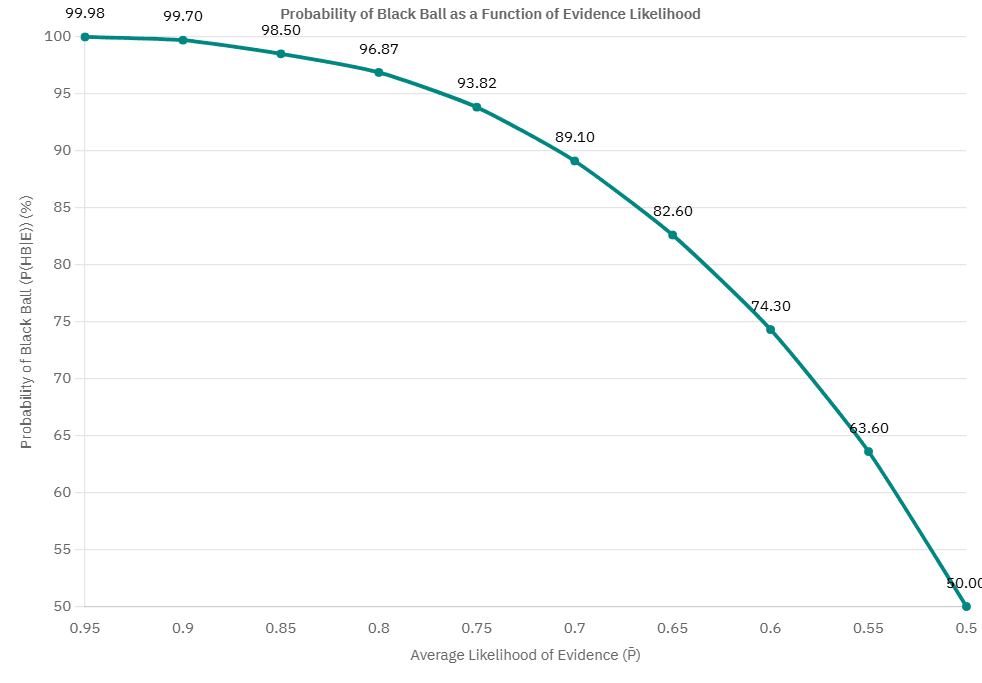

To ensure the robustness of the results, we tested a range of scenarios with varying likelihoods for the evidence. These scenarios span from very high risk to moderate risk, reflecting different levels of optimism about AGI alignment challenges.

In scenarios where the evidence strongly supports misalignment (e.g., a 95% likelihood for each factor), the probability of a black ball approaches 100%. This reflects a world where AGI development faces significant alignment challenges, which is the world we are currently heading toward.

In more moderate scenarios (e.g., a 70% likelihood for each factor), the probability of a black ball remains high at 89.1%.

In the most optimistic scenarios (e.g., a 50% likelihood for each factor), the probability of a black ball drops to 50%.

The relationship between the average likelihood of the evidence and the probability of a black ball is striking. As the average likelihood increases, the probability of a black ball approaches 100%. Even in the moderate scenario, where P≈0.7, the probability of a black ball is still 89.1%. Pushing P below 0.7 would require substantial improvements in alignment, and even when P reaches 0.5 the probability only falls back to the 50% prior, which points to steep diminishing returns.
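A sweep of this kind can be sketched in a few lines of Python. The likelihood model is an assumption made purely for illustration, since the one used for the figures above is not specified: each of the four factors is treated as independent evidence with probability `likelihood` under a black ball and `1 - likelihood` under a white ball. The function name `posterior_black` is hypothetical.

```python
def posterior_black(likelihood: float, n_factors: int = 4, prior: float = 0.5) -> float:
    """Posterior probability of a black ball after n_factors independent updates.

    ASSUMPTION (not from the original analysis): P(E | black) = likelihood and
    P(E | white) = 1 - likelihood for every factor.
    """
    black = prior * likelihood ** n_factors
    white = (1.0 - prior) * (1.0 - likelihood) ** n_factors
    return black / (black + white)


# Sweep from the most optimistic scenario to the very-high-risk one.
for likelihood in (0.5, 0.6, 0.7, 0.8, 0.95):
    print(f"likelihood {likelihood:.2f} -> P(black) = {posterior_black(likelihood):.4f}")
```

Under this symmetric assumption the sketch agrees with the end points described above: a 50% likelihood leaves the prior at 50%, and a 95% likelihood pushes the result very close to 100%. At a 70% likelihood it gives about 96.7% rather than 89.1%, so the original analysis presumably used a different likelihood model for the intermediate scenarios.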

Currently, humanity has not yet invented (or discovered?) ASI, so the probability of the ball being black or white is still 50%. However, unless urgent action is taken to reduce the fragmentation of development and to agree universally on alignment goals, the probability of a black ball will keep climbing until it reaches 99.98%.
