On agentic generalist models: we're essentially using existing technology in the weakest and worst way you can use it

post by Yuli_Ban · 2024-08-28T01:57:17.387Z

The bottom of the coffee table is not the ceiling

Not long ago, Andrew Ng posted one of the most fascinating tweets I've read in quite some time. It's a longer post, but the introduction is worth using as a jumping-off point, as it inadvertently answers a question many current AI skeptics raise about the nature of LLMs.

https://twitter.com/AndrewYNg/status/1770897666702233815

Today, we mostly use LLMs in zero-shot mode, prompting a model to generate final output token by token without revising its work. This is akin to asking someone to compose an essay from start to finish, typing straight through with no backspacing allowed, and expecting a high-quality result. Despite the difficulty, LLMs do amazingly well at this task!

Not only that, but it is like asking someone to compose an essay with a gun to their back, allowing them no time to think through what they're writing and forcing them to act with literal spontaneity.

That LLMs seem capable at all, let alone to the level they've reached, shows their power. But this is still the worst way to use them, and this is why, I believe, what they are capable of is so deeply underestimated.

Yes, GPT-4 is a "predictive model on steroids" like a phone autocomplete

That actually IS true

But the problem is, that's not the extent of its capabilities

That's just the result of how we prompt it to act

The "autocomplete on steroids" thing is true because we're using it badly

YOU would become an autocomplete on steroids if you were forced to write an essay on a typewriter with a gun to the back of your head threatening to blow your brains out if you stopped even for a second to think through what you were writing. Not because you have no higher cognitive abilities, but because you can no longer access those abilities. And you're a fully-formed human with a brain filled with a lifetime of experiences, not just a glorified statistical modeling algorithm fed gargantuan amounts of data.

Consider this the next time you hear of someone repeating the refrain that current generative AI is a scam, where the LLMs do not understand anything and are not capable of any greater improvements beyond what they've already achieved. Remember this whenever, say, Gary Marcus claims that contemporary AI has plateaued at the GPT-4 class:

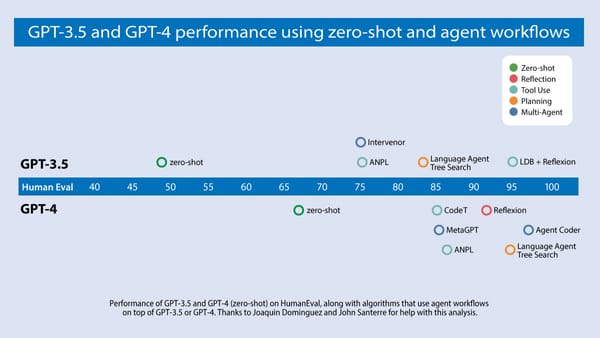

We analyzed results from a number of research teams, focusing on an algorithm’s ability to do well on the widely used HumanEval coding benchmark...

GPT-3.5 (zero shot) was 48.1% correct. GPT-4 (zero shot) does better at 67.0%. However, the improvement from GPT-3.5 to GPT-4 is dwarfed by incorporating an iterative agent workflow. Indeed, wrapped in an agent loop, GPT-3.5 achieves up to 95.1%.
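To make the difference between zero-shot use and an iterative agent workflow concrete, here is a minimal sketch of a generate, test, revise loop. It is not the setup behind the HumanEval numbers quoted above. The "model" is a hypothetical stub that replays canned drafts instead of calling a real LLM, and every name in it (`make_stub_model`, `run_tests`, `agent_loop`, `TASK`, `DRAFTS`) is an assumption made purely for illustration.

```python
from typing import Callable, List, Optional, Tuple

TASK = "Write a Python function add(a, b) that returns the sum of a and b."

# Canned drafts standing in for model output: the first is buggy, the second is fixed.
DRAFTS = [
    "def add(a, b):\n    return a - b\n",
    "def add(a, b):\n    return a + b\n",
]


def make_stub_model(drafts: List[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM call: ignores the prompt, replays drafts in order."""
    remaining = iter(drafts)

    def model(prompt: str) -> str:
        return next(remaining)

    return model


def run_tests(source: str) -> Optional[str]:
    """Run a candidate against fixed checks. Returns None on success, else an error message."""
    namespace: dict = {}
    try:
        exec(source, namespace)
        assert namespace["add"](2, 3) == 5, "add(2, 3) should be 5"
        assert namespace["add"](-1, 1) == 0, "add(-1, 1) should be 0"
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return None


def zero_shot(model: Callable[[str], str], task: str) -> str:
    """One pass, no revision: whatever the model emits first is the final answer."""
    return model(task)


def agent_loop(
    model: Callable[[str], str], task: str, max_rounds: int = 3
) -> Tuple[Optional[str], int]:
    """Generate, test, and feed failures back to the model until tests pass or rounds run out."""
    prompt = task
    for round_number in range(1, max_rounds + 1):
        candidate = model(prompt)
        error = run_tests(candidate)
        if error is None:
            return candidate, round_number
        # The failed attempt and the test feedback become part of the next prompt.
        prompt = (
            f"{task}\n\nPrevious attempt:\n{candidate}\n"
            f"Test feedback: {error}\nPlease fix it."
        )
    return None, max_rounds
```

With these canned drafts, `zero_shot` returns the buggy first draft, while `agent_loop` rejects it, feeds the failure back, and accepts the second draft on round 2. The underlying "model" is identical in both cases; only the workflow wrapped around it changes.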

Or to visualize this phenomenon another way

Pre-emptively speaking, GPT-5 may very well not be a "massive" leap forward if it proves to be only a scaled-up GPT-4, much the same way GPT-4 was to GPT-3 (and GPT-3 to GPT-2), even if its raw out-of-the-box capabilities confer seemingly spooky new abilities no one could have predicted. Scale does in fact serve the purpose of increasing raw mechanical intelligence, much the same way larger biological brains bestow greater intelligence. Some animals may have far more optimized neurostructures (e.g. corvids, octopodes), but larger brain-to-body size seems to triumph in terms of general ability (e.g. hominids, cetaceans, elephants). This is not too dissimilar to how the more compact "turbo/mini" models may show equivalent ability to less-tuned larger models, but will always be outdone by similarly optimized models with a far greater number of parameters.

Larger and larger scale is necessary to power smarter models that can use tools more effectively. Consider that Homo sapiens and the long-extinct Homo habilis possess almost indistinguishable neuromechanical bodies, but habilis had a cranial capacity less than half that of a modern human, and likely much less dense gray matter as a result of very different environmental pressures and diets. If you gave a Homo habilis a hammer, it would quickly work out how to use it to crack a nut, but even if you taught it extensively, it might never be able to fully work out how to construct a wooden home. This becomes even starker the more primitive you get along the line of primate intelligence, such as with orangutans, who can observe human actions and mimic them (such as hammering a piece of wood) but without any awareness or understanding of just what they are supposed to be doing, provided they could understand instructions at all. Similarly, it would be prudent to scale AI models to greater heights to allow far greater conceptual anchoring to occur deep within their weights, helping them construct a model of the world and understand what they are observing, because once you give such a model the proper tools to interact autonomously, the greater intelligence will bestow greater returns.

This is not even bringing up augmentations to a model's internal state, such as might be possible by using deep reinforcement learning and tree search with current foundation models.

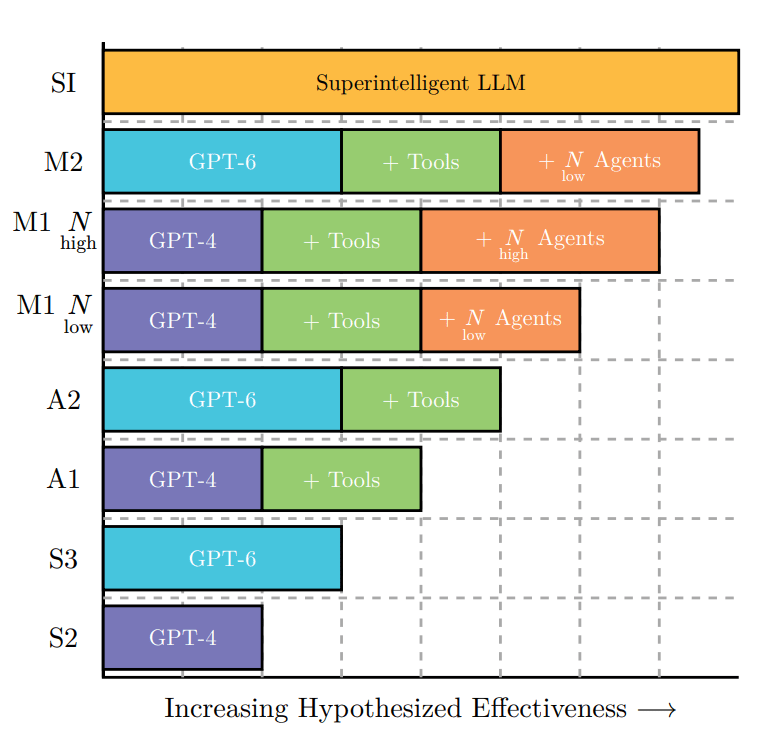

If we were using contemporary, even relatively old models with the full breadth of tools, internal state augmentations, and agents (especially agent swarms), it would likely seem like we just jumped 5 years ahead in AI progress overnight. GPT-4 + agents (especially iterative and adversarial agents) will likely feel more like what a base-model GPT-6 would be.

Even GPT-2 (the actual GPT-2 from 2019, not "GPT2" aka GPT-4o), given the same treatment, might actually be on par with GPT-4 within its small context window. Maybe even better. (In fact, before GPT-4o was announced, I was fully prepared to believe that it really was the 2019 1.5B GPT-2 with an extensive agent workflow; that would have been monstrously more impressive than what we actually got, even if it was the same level of quality.) Perhaps GPT-2 + autonomous agent workflows + tree/concept search is the equivalent of something like Alex the Gray Parrot or a high-end corvid, hitting far above its own intellectual weight class considering its brain size.

What this tells me about our current state of AI is that those who think we've plateaued are essentially saying that "we are touching the underside of a coffee table. This must actually be the ceiling of the mega-stadium!"

The only frustrating part about all this is that we've seen virtually nothing done with agents in the past year, despite every major lab from OpenAI to DeepMind to Anthropic to Baidu admitting that not only is it the next step but that they're already training models to use them. We've seen very few agentic models released, most notably Devin in the spring, and even then that only got a very limited release (likely due to server costs, since every codemonkey worth their salt will want to use it, and fifty million of them accessing Devin at once would crash the thing).

It's hypothesized, however, that the current wave of Mini models (e.g. GPT-4o Mini/Gemini Flash/Claude Haiku) is not simply meant to provide an extremely low-cost alternative to the larger models at very roughly comparable quality. Any construction worker has had the age-old axiom beaten into them: "Good. Fast. Cheap. Pick two (and never pick Fast and Cheap)."[1]

Rather, these may be the models that larger frontier models deploy as agents so as to reduce inference costs.

Larger-scale musings

As a result, we're stuck in this bizarro twilight stage in between generations, where the GPT-4 class has been stretched to its limit and we're all very well aware of its limitations, and the next generation both in scale and tool-usage is teasing us but so far nowhere to be seen. So is it any wonder that you're seeing everyone from e-celebs to investment firms saying "the AI bubble is bursting"?

It was said around the release of GPT-4 last year by certain personalities that "come the 2024 US presidential election, you will become sick and tired of hearing about AI!" This was stated as a cheeky little joke about how overwhelmingly AI was going to transform society, implying that if you actually were sick of it, you hadn't seen anything yet. And true, we genuinely haven't. However, I presume the person behind this quote did not anticipate that people might actually become sick and tired of AI. This is the rather unfortunate reality of the public field as of today: the sheer dissonance between the "AI bros" and AI-tech investors vs the common man. Many AI bros buy into "Twitter vagueposting" by certain individuals, talking incessantly about the imminence of next-generation models and their abilities and imagining just what superintelligence would entail.

Almost like Versailles aristocrats chatting about fancy necklaces and glorious wars next to starving peasants carrying flaming torches and pitchforks, the AI bros have completely blinded themselves to how incredibly hostile much of the public has become to AI. While there are many reasons for this, one unfortunate reason is precisely the gap between major frontier releases. We still rely on GPT-4-class models, with only Claude 3.5 Sonnet attempting to push past this class (and still falling short, existing as a sort of "GPT-4.25"). Many people are well aware of, and thoroughly immersed in, the limitations of these models and of the various image generation models.

True progress may be going on in the labs, but the layman does not care about unpublished laboratory results or arXiv papers. They will simply check out ChatGPT or an image generation app, see that ChatGPT still hallucinates wildly or that the "top-end" AI art programs still have trouble with fingers, and write off the whole field as a scam. And they begin pushing this idea to their friends and social media followers, and the meme gets spread wider and wider, and the pro-AI crowd insulates itself by assuming this is just a vocal minority not worth engaging, further isolating itself. This exact situation has unfolded in real time.

Pro-Anti-AI

From what I've witnessed up close, many of these "hostile to AI" neo-Luddites are in fact skeptical entirely because they know, or have been told, that said AI is nothing special: that it only appears to be outputting magical results which, in truth, it neither understands nor is capable of producing through any sort of internal agency. How often have you heard someone claim that "AI is the new crypto/NFTs/metaverse"? (And to be fair, when brought up by those not simply repeating their favorite e-celebs, this is meant to discuss the behaviors, not the technology.)

In fact, on that note, how often has a major company or corporation attempted to use AI applications, only to face severe and overwhelming criticism and blowback (with what little they did use clearly showing the same problem of "we prompted Stable Diffusion 1.5 or asked GPT-3.5 to do something and scraped the first result we got")? This has occurred well over a dozen times over the past summer alone, to the point that major publications have reported on the phenomenon. While any new transformative technology is always going to face severe pushback, especially any technology that threatens people's careers, livelihoods, and sense of purpose, the deployment of AI has been grossly mishandled by almost everyone involved.

In a better world, the AI companies developing the new frontier models would post clear and obvious updates as to what the next-generation models will do and what current-gen models can and can't do, and be entirely open about how they're trained. The corporations and governments would at the very least offer some sort of severance or basic income to those affected, not unlike Ireland's basic income scheme for artists, as some way to assuage the worst fears (rooted in anxiety over losing all income, an entirely sympathetic fear). This is not even remotely close to what has happened. Rather, the AI companies have indulged in secrecy and regulatory capture, with the only "hints" of what they're doing being the aforementioned cheeky, out-of-touch Twitter vagueposts that offer no actual clue as to what to expect. The corporations are automating away jobs and outright lying about the reason why. And any discussion about basic income (itself arguably just a stopgap at best) is limited to mysterious pilots that never seem to expand and that are often, at least in the USA, pre-emptively banned, with said "basic income-minded" AI personalities seemingly not even realizing that this might happen on a wider scale should political fortunes shift.

So to my mind, even though this hostility towards AI has caused me to largely avoid spaces and friends I once frequented, the AI bros deserve no leniency or sympathy when the neo-Luddites rage at them, regardless of whether or not things will improve in the long run. And things will improve, to be fair, as I've outlined above regarding the soon-to-be emerging world of agentic generalist models.

But you see, the onus is on the AI companies to prove that things will improve, not on the layman to simply accept the hype. All the world needs is a single next-generation model, or perhaps a suitably upgraded current-generation model to be demoed in a way that makes it clear just how high the ceiling of AI capabilities really is and how far away we are from touching that ceiling.

Anti-Anti-AI

If nothing else, I will at least leave the devil's side and advocate again for my own: while I do greatly sympathize with the anti-AI neo-Luddites, the /r/ArtistHate and Twitter commission artists who scream of how worthless AI and its users are, the cold fact does remain that the constant downplaying of AI that results is going to lead to far more harm when the next-generation models do actually deploy.

The Boy Who Cried Wolf is playing out in real time, as the People are finally waking up to the grifty, greed-centric culture powering our current technologist era, and calling it out at precisely the wrong technology.

Way too many people are calling out AI as a field as a giant scam and outright making themselves believe that "AI will never improve" (this is not just the anti-AI crowd; even many AI investors, of a class of people who despise being humiliated and looking foolish, steadfastly refuse to believe that AI even in 20 years will be any better than it is today because that veers too strongly into "silly, childish" science fiction). There's a perception that once the current AI Bubble pops, generative AI will simply vanish and that, in five years, not a single person will use any AI service and instead embarrassedly laugh at the memory of it.

This mindset might very well cause a collective panic attack and breakdown when, again, even a modestly augmented GPT-4-class model given extensive autonomous tool-using agents will seem several years more advanced than anything we have today, and possibly even anything we'll have tomorrow.

People can't prepare for and react to that which they do not believe possible. If no one thinks tsunamis are possible, they won't build on higher ground, let alone take cover when the sea suddenly recedes.

And simply because of how bad the techno-capitalist grifting has been over the past decade, with Silicon Valley investors chasing every seemingly profitable trend they could and hyping up said trend in the media to justify their decisions, many people are dancing on a suddenly dry sea-bed right now. Extremely few recognize the signs, and those who do are often castigated as particularly delusional techbros desperately seeking validation. To the skeptics, it's obvious that GPT-4 is just a glorified Cleverbot, dressed up with a few extra tools and loads of copyright thievery, and there's absolutely no possible way beyond it with current technology. Such AI models don't understand a damn thing, and are essentially giant Potemkin village versions of intelligence.

Yet looking from the other side, what I see is that foundation models, sometimes even outright referred to as "general-purpose artificial intelligence" models, really do construct world models and do possess understanding, regardless of whether they're "still just statistical modeling algorithms". Even the most advanced aerospace vehicles we have now, the secretive hypersonic SR-72s and next-generation planet-hopping spacecraft, are orders of magnitude less complex than a bird like a pigeon, with its almost inconceivable biological complexity and genetic instructions. These AI models really are constructing something deep within themselves, and even our hobbling prompting provides tiny little glimpses of what that might be, but the way we use them, the way we prompt them, utterly cripples whatever these emergent entities are and turns them into, well... glorified SmarterChilds.

So the question is: what happens when the first company puts out an AI that is more than that, and the world finally begins to see just what AI can really do?

[1] As a bit of an aside, Claude 3.5 Sonnet completely shatters that axiom, as it is genuinely Good, Fast, and Cheap all at the same time; on most benchmarks it remains the top model as of August 27th, 2024; it is much faster and much much cheaper than Claude 3 Opus. Perhaps it makes sense that only in the esoteric world of artificial intelligence research will long-standing conventions and rules of thumb be overturned.

2 comments

comment by mishka · 2024-08-28T02:34:47.043Z

The only frustrating part about all this is that we've seen virtually nothing done with agents in the past year, despite every major lab from OpenAI to DeepMind to Anthropic to Baidu admitting that not only is it the next step but that they're already training models to use them. We've seen very few agentic model released, most notably Devin in the spring, and even then that only got a very limited release (likely due to server costs, since every codemonkey worth their salt will want to use it, and fifty million of them accessing Devin at once would crash the thing)

That's not true. What is true is that agentic releases are only moderately advertised (especially compared to LLMs). So they are underrepresented in the information space.

But there are plenty of them. Consider the systems listed on https://www.swebench.com/.

There are plenty of agents better than Devin on SWE-bench, and some of them are open source, so one can deploy them independently. The recent "AI scientist" work was done with the help of one of these open source systems (specifically, with Aider, which was the leader 3 months ago, but which has been surpassed by many others since then).

And there are agents which are better than those presented on that leaderboard, e.g. Cosine's Genie, see https://cosine.sh/blog/genie-technical-report and also a related section in https://openai.com/index/gpt-4o-fine-tuning/.

If one considers the GAIA benchmark, https://arxiv.org/abs/2311.12983 and https://huggingface.co/spaces/gaia-benchmark/leaderboard, the three leaders are all agentic.

But what one indeed wonders about is whether there are much stronger agentic systems which remain undisclosed. Generally speaking, so far it seems like the main progress in agentic systems comes from clever algorithmic innovation, and not from brute-force training compute, so there is way more room for players of different sizes in this space.

comment by Martin Vlach (martin-vlach) · 2024-08-28T23:14:59.587Z

only Claude 3.5 Sonnet attempting to push past GPT4 class

seems missing awareness of Gemini Pro 1.5 Experimental, latest version made available just yesterday.