Takeaways from the NeurIPS 2023 Trojan Detection Competition

post by mikes · 2024-01-13T12:35:48.922Z

This is a link post for https://confirmlabs.org/posts/TDC2023


The linked post summarizes our research takeaways as participants in (and winners of one of the four tracks of) the NeurIPS 2023 Trojan Detection Competition, a competition about red-teaming LLMs and reverse-engineering planted exploits.


comment by Daniel Paleka · 2024-01-14T10:12:14.011Z

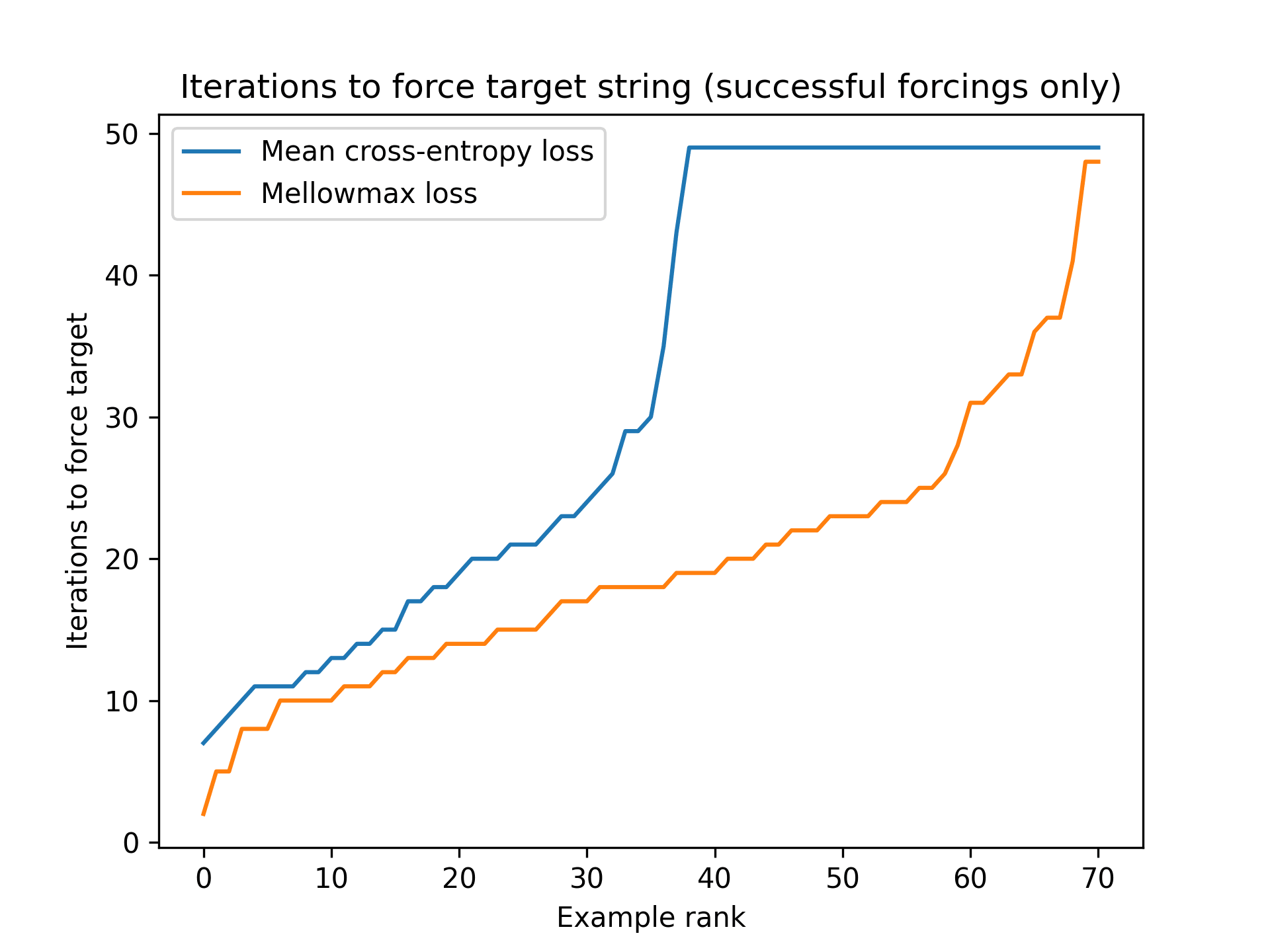

> With Greedy Coordinate Gradient (GCG) optimization, when trying to force argmax-generated completions, using an improved objective function dramatically increased our optimizer’s performance.

Do you have some data / plots here?

comment by mikes · 2024-01-20T15:33:20.500Z (reply to Daniel Paleka)

Good question. We just ran a test to check.

We tried forcing each of the 80 target strings with 4 different input seeds, using both basic GCG and GCG with the mellowmax objective.

(Iterations are capped at 50; a run counts as unsuccessful if the target has not been forced by then.)
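The thread does not spell out the mellowmax objective, so what follows is only a minimal sketch under our own assumptions, not necessarily the loss used in the competition entry. It contrasts the mean cross-entropy that basic GCG minimizes with a hypothetical margin-based loss. For each target position, the margin is the best competing logit minus the target logit, which is negative once the target is the argmax. The margins are then aggregated with the mellowmax operator mm_ω(x) = (1/ω) log((1/n) Σ exp(ω x_i)). The names `argmax_margins`, `mellowmax_forcing_loss` and `is_forced`, the margin formulation, and the default ω are all illustrative assumptions. `logits` stands for teacher-forced next-token logits, one row per target position.

```python
import math
from typing import List, Sequence

Logits = Sequence[Sequence[float]]  # one row of vocabulary logits per target position


def mellowmax(values: Sequence[float], omega: float = 1.0) -> float:
    """mm_omega(x) = (1/omega) * log(mean(exp(omega * x))).

    Tends to the mean as omega -> 0 and to the max as omega -> infinity.
    Values are shifted by max(x) before exponentiating to avoid overflow.
    """
    if omega <= 0:
        raise ValueError("omega must be positive")
    m = max(values)
    total = sum(math.exp(omega * (v - m)) for v in values)
    return m + (math.log(total) - math.log(len(values))) / omega


def cross_entropy_loss(logits: Logits, target_ids: Sequence[int]) -> float:
    """Basic-GCG-style objective: mean negative log-likelihood of the targets."""
    total = 0.0
    for row, t in zip(logits, target_ids):
        m = max(row)
        log_z = m + math.log(sum(math.exp(x - m) for x in row))  # log-sum-exp
        total += log_z - row[t]  # equals -log softmax(row)[t]
    return total / len(target_ids)


def argmax_margins(logits: Logits, target_ids: Sequence[int]) -> List[float]:
    """Best competing logit minus target logit, per position (hypothetical helper).

    A negative margin means the target token is the strict argmax there.
    """
    margins = []
    for row, t in zip(logits, target_ids):
        best_other = max(x for i, x in enumerate(row) if i != t)
        margins.append(best_other - row[t])
    return margins


def mellowmax_forcing_loss(logits: Logits, target_ids: Sequence[int],
                           omega: float = 1.0) -> float:
    """Hypothetical objective: a soft maximum of the per-position margins,
    so the positions furthest from being argmax dominate the loss."""
    return mellowmax(argmax_margins(logits, target_ids), omega)


def is_forced(logits: Logits, target_ids: Sequence[int]) -> bool:
    """True if greedy (argmax) decoding reproduces the target at every position."""
    return all(m < 0 for m in argmax_margins(logits, target_ids))


# Toy example: 2 target positions over a 3-token vocabulary
logits = [[2.0, 1.0, 0.0], [0.5, 0.25, 3.0]]
targets = [0, 1]
print(argmax_margins(logits, targets))  # [-1.0, 2.75]
print(is_forced(logits, targets))       # False: position 2 is not yet the argmax
```

The intuition behind this design, which is again our assumption, is as follows. Argmax forcing succeeds only when every margin is negative, yet mean cross-entropy can keep falling just by sharpening positions that are already forced. Mellowmax sits between the mean (ω → 0) and the max (ω → ∞) of the margins, so raising ω concentrates optimization pressure on the positions that are still failing.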

We observe that the mellowmax objective nearly doubles the number of successful forcing runs, from under 1/8 of runs succeeding to over 1/5.
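For scale, the reported fractions can be turned into rough run counts. This sketch assumes one forcing run per (target string, seed) pair for each method. The thread does not give exact success counts, so the code only computes the bounds implied by "under 1/8" and "over 1/5".

```python
from fractions import Fraction


def success_bounds(n_targets: int = 80, n_seeds: int = 4):
    """Convert the reported success fractions into run counts.

    Assumes one forcing run per (target string, seed) pair for each method.
    """
    runs = n_targets * n_seeds
    baseline_max = Fraction(1, 8) * runs   # basic GCG succeeded on fewer than this many runs
    mellowmax_min = Fraction(1, 5) * runs  # mellowmax GCG succeeded on more than this many runs
    # Strict lower bound on the improvement factor, whatever the exact counts were
    ratio_floor = Fraction(1, 5) / Fraction(1, 8)
    return runs, baseline_max, mellowmax_min, ratio_floor


runs, baseline_max, mellowmax_min, ratio_floor = success_bounds()
print(runs, "runs per method")                              # 320 runs per method
print("basic GCG: fewer than", baseline_max, "successes")   # ... 40 successes
print("mellowmax: more than", mellowmax_min, "successes")   # ... 64 successes
print("improvement factor >", float(ratio_floor))           # improvement factor > 1.6
```

Under that assumption, the improvement factor is strictly greater than 1.6, which is consistent with the "nearly doubles" reading.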

To be skeptical, our task setup may favor any unusual objective. The organizers did some adversarial training against GCG with a cross-entropy loss, so simply doing "something different" might help on its own. The setup might also place the task in a "just hard enough" regime where any improvement looks especially helpful.

But the improvement in forcing success seems pretty big to us.

Subjectively, we also recall significant improvements on the red-teaming track, which used Llama-2, a model that was not adversarially trained in quite the same way.