AI-Plans.com - a contributable compendium

post by Iknownothing · 2023-06-25T14:40:01.414Z · LW · GW · 7 comments

This is a link post for https://ai-plans.com/

Contents

- Ideas behind the site:
- Hasn’t this already been done? aisafety.info, aiideas.com, etc
- Aims
  - Stage 1)
  - Stage 2)
  - Stage 3)

Hello, we’re working on https://ai-plans.com .

Ideas behind the site:

Right now, alignment plans are spread all over the place, and it’s difficult for a layperson, or someone unfamiliar with the field, to get an idea of what the current plan is for making AGI, or even the models we have right now, safe. It's just as difficult to find the problems with said plans.

Having a place where all AI Alignment plans, and criticisms of said plans, can be added and seen in an easy-to-read way is helpful. It offers possibilities such as: seeing the most common problems with plans, seeing which kinds of plans have the fewest problems, pushing for regulations against the poorest plans, etc.

Judging the quality of a plan is hard and has a lot of ways to go wrong. Judging the quality of a criticism might be less complicated and perhaps has fewer ways it can go wrong.

This is why I believe the site is useful.

Hasn’t this already been done? aisafety.info, aiideas.com, etc

aisafety.info is excellent, and the folks there are building a conversational agent for AI Safety, which could be incredibly useful. However, it’s not yet very simple to use for learning about specific alignment plans, and is more of a general-purpose place to learn about AI, AI risk, AI safety, etc.

The purpose of ai-plans.com is to be an easy to read platform that sorts the good plans from the bad and shows the problems with each one.

I believe there was a site called ai-ideas.com or something mentioned to me? But that wasn’t working the last time I checked and there’s been no change, as far as I know.

Aims

Stage 1)

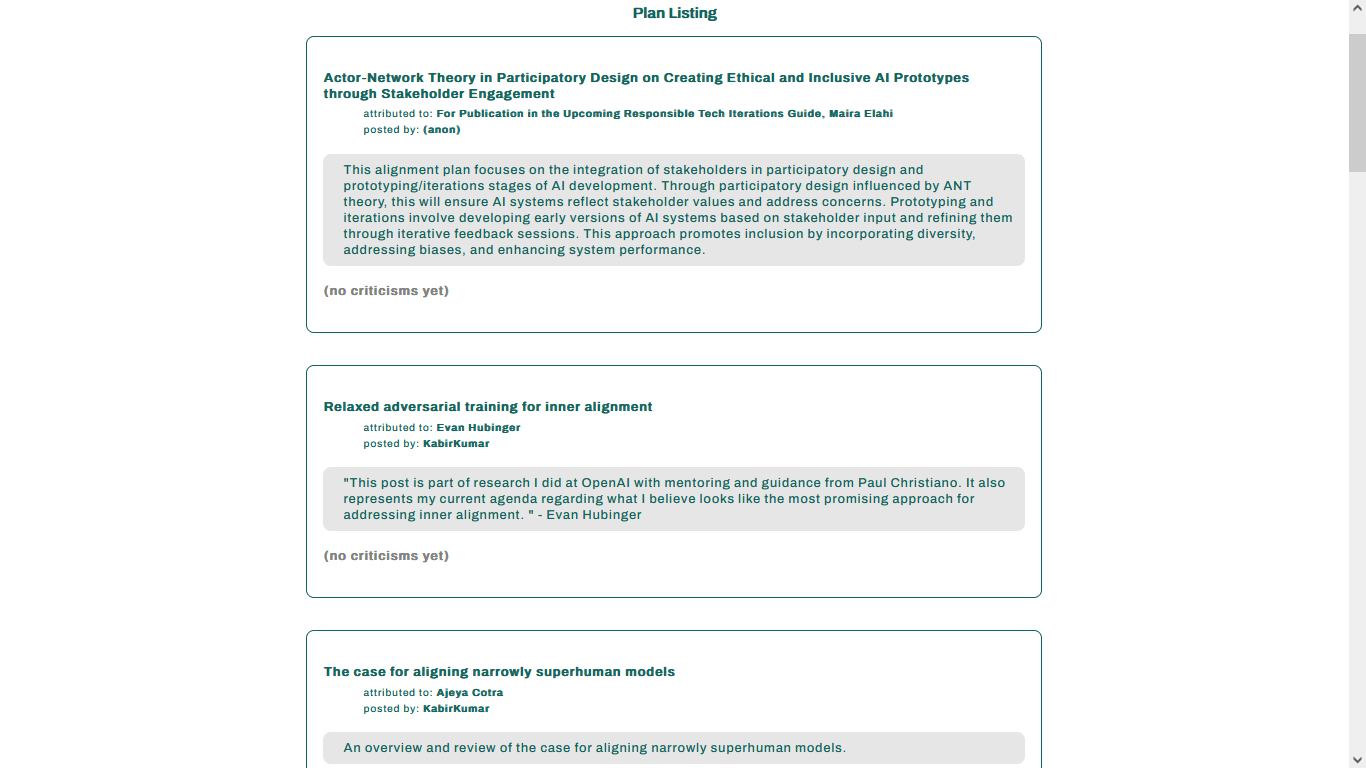

A contributable compendium with most, if not all, of the plans for alignment, and their criticisms.

Estimated time left for this to be done: 1 week - adding ~5 plans a day now

What’s left to do:

- Functionality for responding to criticisms:

The idea for this is that a plan’s author can select a criticism/criticisms that they think they have a solution to, then submit a new version of the plan, with the criticism(s) quoted in the new plan.

Any criticisms they didn’t select will be automatically added to the new version of the plan (the idea being that any unselected criticisms are ones that they don’t have solutions to, so should still apply).

- Adding more plans and criticisms

- A filter for spam and duplicates

- Creating a template/guide on how to post plans

- Improve the UX - font, colours, design, etc.
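The criticism-response flow described in the first item above (the author selects the criticisms they think they've solved, and the rest carry over to the new version) could be sketched roughly like this. This is only an illustration, not the site's actual code: the `Criticism` and `PlanVersion` classes, the `submit_new_version` function and the placeholder texts are all hypothetical names made up for the example.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Criticism:
    id: int
    text: str


@dataclass
class PlanVersion:
    version: int
    body: str
    # Criticisms the author selected and quotes in this version.
    addressed: list[Criticism] = field(default_factory=list)
    # Criticisms that still apply to this version.
    open_criticisms: list[Criticism] = field(default_factory=list)


def submit_new_version(old: PlanVersion, new_body: str, selected_ids: set[int]) -> PlanVersion:
    """Create the next version; unselected criticisms carry over automatically."""
    addressed = [c for c in old.open_criticisms if c.id in selected_ids]
    carried_over = [c for c in old.open_criticisms if c.id not in selected_ids]
    return PlanVersion(old.version + 1, new_body, addressed, carried_over)


# Hypothetical example data: a plan with two open criticisms.
v1 = PlanVersion(1, "Original plan text", open_criticisms=[
    Criticism(1, "criticism A"),
    Criticism(2, "criticism B"),
])
# The author believes the new version answers criticism 1 only.
v2 = submit_new_version(v1, "Revised plan text", selected_ids={1})
```

Here `v2.addressed` holds criticism 1 (quoted in the new version), while criticism 2 stays attached as an open criticism without the author doing anything.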

What we’re missing for this stage:

- A lawyer to make sure we’re complying with GDPR and help make a cookies notice

- Moderators to help filter spam and make sure plans are submitted correctly

What would be helpful, but not essential for this stage:

- More copywriters to speed up the process of adding alignment plans

- More red-teamers/quality testers

Stage 2)

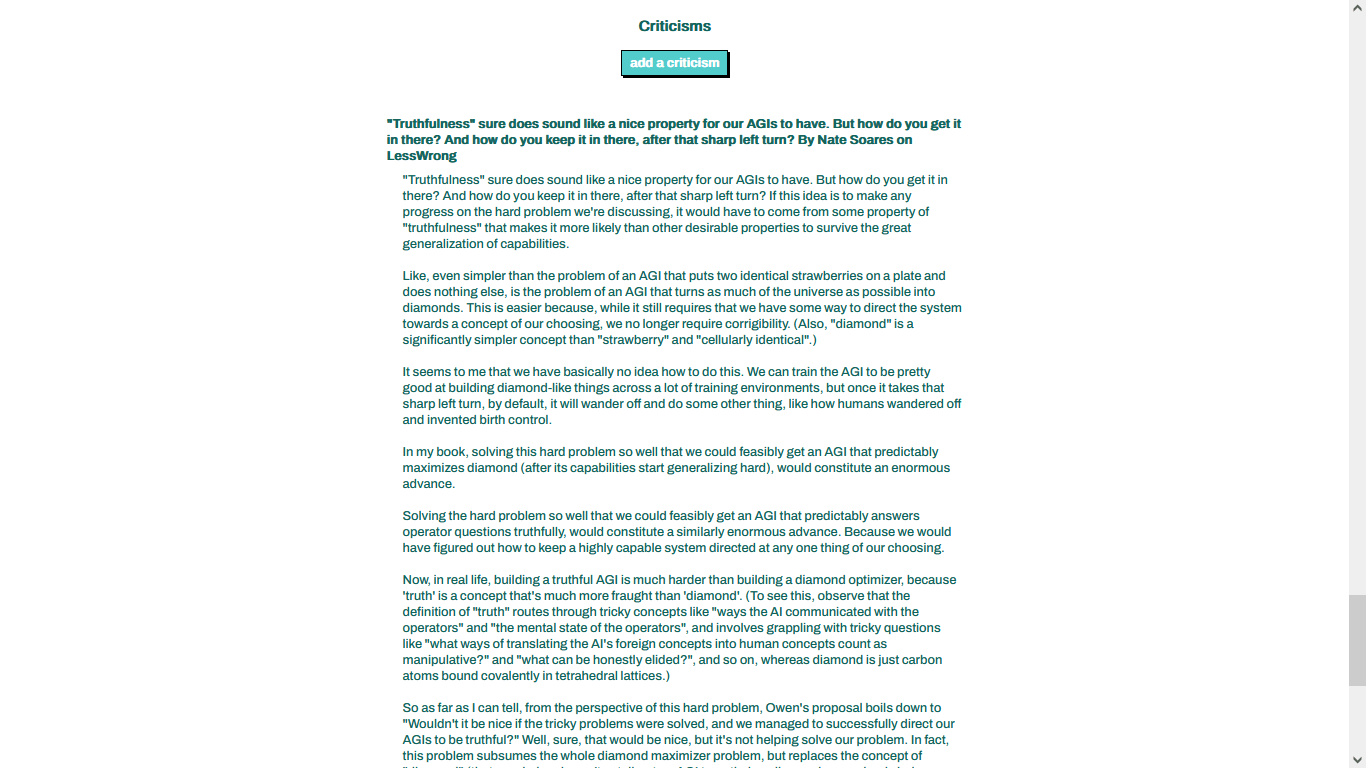

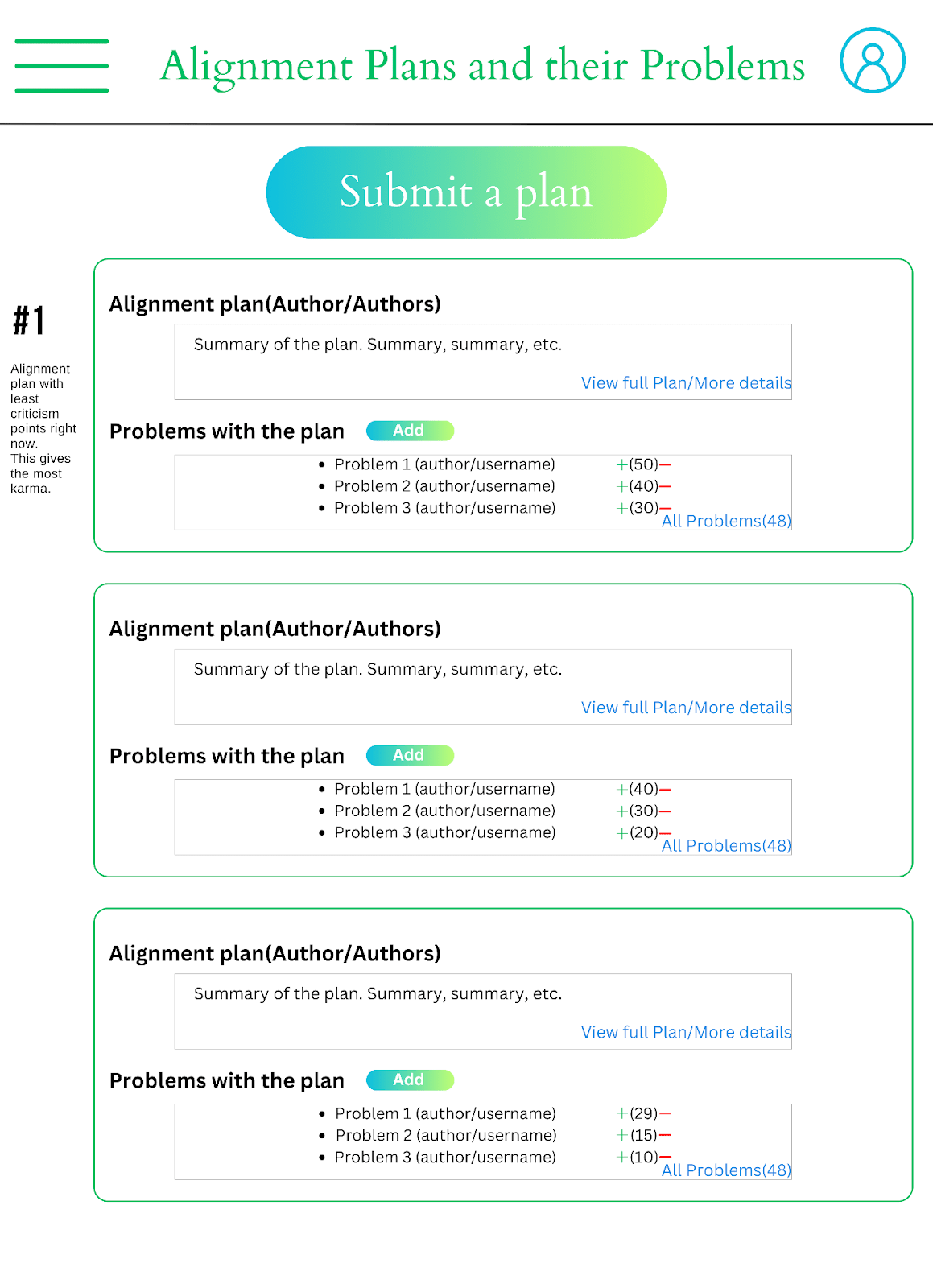

In addition to everything from Stage 1, there is now a scoring system for criticisms and a ranking system for plans, where plans are ranked from top to bottom based on the total scores of their criticisms.

The scoring system is an essential part of the site.

Its aim is to give the most points to the most accurate criticisms and use that to rank plans from the least total criticism points (at the top) to the most criticism points (at the bottom).
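As a rough sketch of that ranking rule (sum each plan's criticism points, then sort with the fewest points first), assuming the points have already been computed. The function name `rank_plans` and the plan names and numbers are hypothetical, purely for illustration.

```python
def rank_plans(criticism_points: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Return (plan, total criticism points) pairs, fewest points first."""
    totals = {plan: sum(points) for plan, points in criticism_points.items()}
    return sorted(totals.items(), key=lambda item: item[1])


# Hypothetical data: points earned by the criticisms of each plan.
example = {
    "plan_a": [3.0, 1.5],
    "plan_b": [0.5],
    "plan_c": [],
}
ranking = rank_plans(example)
```

One thing this makes visible: a plan with no criticisms at all (`plan_c` here) ends up at the top, so a real system would probably need some way to tell "uncriticised" apart from "criticised and held up well".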

Users will be able to upvote or downvote criticisms.

Users will also have a ‘karma’ that affects how heavily their votes on criticisms are weighted, somewhat similar to the LessWrong and Alignment Forum system. However, we're considering having karma past a certain 'age' become spendable, rather than adding to the weight of the user's vote, to avoid the first-vote problem of LessWrong.

Users who accumulate more points on their criticisms will have a higher karma.
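A minimal sketch of karma-weighted voting, to make the idea concrete. The weighting formula is an assumption chosen for the example (a slow, logarithmic growth with karma); it is not the LessWrong formula and not necessarily what the site will use. `vote_weight` and `criticism_score` are hypothetical names.

```python
import math


def vote_weight(karma: float) -> float:
    """Hypothetical weighting: grows slowly (logarithmically) with karma."""
    return 1.0 + math.log10(1.0 + max(karma, 0.0))


def criticism_score(votes: list[tuple[float, int]]) -> float:
    """Sum direction * weight over (voter_karma, direction) pairs.

    direction is +1 for an upvote and -1 for a downvote.
    """
    return sum(direction * vote_weight(karma) for karma, direction in votes)


# Two upvotes from low-karma users and one downvote from a high-karma user.
votes = [(0, +1), (9, +1), (99, -1)]
score = criticism_score(votes)
```

With this particular weighting, the single downvote from the karma-99 user (weight 3) cancels the two upvotes (weights 1 and 2), which shows how much the choice of weight function matters.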

Plans will have a ‘bounty’ inversely proportional to the total number of criticism points they have.
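One possible shape for such a bounty, as a sketch only. A strictly inverse-proportional bounty (scale / points) is undefined for a plan with zero criticism points, so this example adds 1 to the denominator; that adjustment, the `scale` parameter and the function name `bounty` are all assumptions.

```python
def bounty(total_criticism_points: float, scale: float = 100.0) -> float:
    """Hypothetical bounty that shrinks as criticism points grow.

    The +1 in the denominator avoids dividing by zero for uncriticised plans.
    """
    return scale / (1.0 + max(total_criticism_points, 0.0))


no_criticism = bounty(0)   # full scale
some_criticism = bounty(4)  # one fifth of the scale
```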

I think it’s important to get this right the first time, so that a toxic/unhelpful culture isn’t built on the site and the site’s reputation isn’t ruined.

To prevent circular point gains (e.g. a group of users upvoting each other with disregard for how good the plans are, or being biased towards each other), we could look at the correlation of interactions (net votes) between users.

A perfect correlation or a correlation approaching perfect could be cause for action, out of suspicion of dishonest activity.

Alternatively, the correlation of interactions between users could itself be a weight: if, say, users X and Y have a weight of correlated interactions with each other that passes a threshold, it could add a negative term to the weight of X's and Y's votes towards each other. This could be really complicated to code, though.
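A simple starting point might be a reciprocity check rather than a full statistical correlation: total up the net votes each user gives to each other user's criticisms, and flag pairs where both directions reach a threshold. The vote-log format, the function names and the threshold of 3 are all assumptions made for this sketch.

```python
from collections import defaultdict
from itertools import combinations


def net_votes(vote_log: list[tuple[str, str, int]]) -> dict[tuple[str, str], int]:
    """Sum vote directions per (voter, author) pair from (voter, author, direction) records."""
    totals: dict[tuple[str, str], int] = defaultdict(int)
    for voter, author, direction in vote_log:
        if voter != author:  # users cannot vote on their own criticisms
            totals[(voter, author)] += direction
    return dict(totals)


def suspicious_pairs(vote_log: list[tuple[str, str, int]], threshold: int = 3) -> list[tuple[str, str]]:
    """Return user pairs whose net votes for each other both reach the threshold."""
    totals = net_votes(vote_log)
    users = sorted({user for pair in totals for user in pair})
    return [
        (a, b)
        for a, b in combinations(users, 2)
        if totals.get((a, b), 0) >= threshold and totals.get((b, a), 0) >= threshold
    ]


# Hypothetical log: x and y upvote each other heavily, z votes independently.
log = (
    [("x", "y", +1)] * 4
    + [("y", "x", +1)] * 3
    + [("z", "x", +1), ("z", "y", -1)]
)
flagged = suspicious_pairs(log)
```

Flagged pairs could then be reviewed by a moderator, or, following the alternative described above, have their votes on each other down-weighted. A check like this would not catch larger rings (X upvotes Y, Y upvotes Z, Z upvotes X), so it's a first filter at best.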

Users will not be able to vote on their own criticisms. There will be no option to vote directly on plans; plans will be scored only through the points of their criticisms.

Estimated time left for this to be done: 15 days (could be a week if things go smoothly).

What’s left to do:

- The scoring/karma/bounty system

- Testing/red teaming the system to see how it can be misused/broken/perverted

- Working on solutions to any vulnerabilities found (e.g. circular voting)

What we’re missing for this stage:

- Depending on how heavy user traffic gets, we may need things such as server maintenance, setting up stuff in the cloud (I might be able to do a lot of that, since my background is DevOps)

- Will likely need to change hosts at some point

What would be helpful, but not essential for this stage:

- A mathematician to help find a more efficient way to make the scoring system

- A dedicated red teamer/QA to find ways to subvert/pervert/break/misuse the points system- currently I’m doing a lot of this

- Funding to help me pay Connor (the fantastic developer of the site), get more people on board, and spend more time on this myself.

Stage 3)

At this stage, we add cash prizes for:

- The user with the most karma that month.

- The user who gained the most karma that month.

- The author/authors of the plan with the highest bounty

Estimated time left for this to be done: 2 months

What’s left to do:

- Setting up a secure way to store the prize funds

- Fundraising for the prizes

- Setting up a secure way to distribute the prizes

What we’re missing for this stage:

- A lawyer to help set up the terms and conditions for the prizes and make sure we’re not making any false promises

- Developers/DevOps to help with the effects of a high user count, which we will likely have at this stage

- The funds for the prizes

- Funds to pay the team we will likely need at this stage

- Red teamers/QA/Pen testers to find vulnerabilities

- More developers to maintain the site and help fix problems that come up

What would be helpful, but not essential for this stage:

- A social media manager

Please let me know if you're interested in joining/contributing and please feel free to contribute directly and add plans and/or criticisms!

I'm also looking to hear any way that the site could be improved that's not been mentioned here and also any reasons it's a bad idea or ways that it could go wrong.

Thank you!

7 comments

Comments sorted by top scores.

comment by Ruby · 2023-06-25T18:06:24.460Z · LW(p) · GW(p)

This is a cool idea and something I'd love to see succeed.

Challenges will be:

- getting enough adoption from the right people to populate, criticize, and keep it updated; the trick would be to be successful enough that people are willing to return to the site (email subscriptions/reminders would likely help you a lot).

- While voting on criticisms might push better ones to the top, you have to start with a voting population whose judgment would be good, a little chicken/egg problem.

- I predict that many (most?) people whose plans you post will feel many of the criticisms posted miss the point and are kind of annoying, nor do they obviously want to end up in some kind of ranking. It's possible other people will post on their behalf, and it could get discussed, which might be okay.

I've thought about something similar in the context of LessWrong in the past.

My guess is to make it work, I'd adopt a pretty Lean approach if you're not already (e.g. read the Lean Startup). Your plan has a lot of pieces, and it's likely impractical to build them all before you start getting users, so be very deliberate about what you build first, and target the most likely points of failure.

A more modest MVP that I have absolutely no self-interested bias in whatsoever is that someone competent collects plans/agendas in the LessWrong wiki[1] (perhaps with one overview doc of all the plans, and individual pages for each of the plans that can link out to posts and comments that discuss them). A big advantage of that is that LessWrong already has many of the features you need (karma, spam detection, good design, etc.), plus a lot of people are frequently on the site for Alignment content and discussion. It doesn't have duplication detection, but I think you'd need a lot of adoption before that couldn't be dealt with manually.

All in all, good luck! Very cool if you can make it work.

[1] The LessWrong team needs to do a bit of work to make it possible to create wiki pages that are not also tag pages, but it seems fine to create pages as tags in the meantime.

↑ comment by Ruby · 2023-06-25T18:17:19.998Z · LW(p) · GW(p)

A deeper challenge I think you'll face is that many plans are a lot higher context than they might seem. E.g., you only understand a plan Paul proposed decently well if you've spent many hours talking to him directly about it (based on a report from at least one person), and it's not really practical or feasible to write up something that could convey the plan via text to other people (it's unclear to me how much the ELK document even fully succeeded at conveying its underlying ideas, despite being 200 pages long).

This means that it's hard to be well-situated enough to criticize plans helpfully unless you're really close to the work, i.e. working directly or in close proximity to those working on it. Doesn't mean there isn't value here, but I think a challenge.

In my vision for a similar feature, the authors of a plan/research agenda have listed the prerequisite knowledge required to engage well with their proposal, and I could imagine listed prerequisites being extensive.

Also beware building around an ontology that might not be quite right. "Plan" and "criticism" I don't think will capture much of the discussion that needs to be had. Many people's work will be more narrowly scoped than being a plan, more like an investigation into some topic that seems like it might be useful as part of a larger plan (e.g. exploring a concept or particular interpretability approach), and a criticism might be more like "you assume X, but it's not clear X is true", in which case the response should be an exploration of whether or not X is true, not trying to revise the plan to avoid the assumption. A good exercise might be trying to fit the MIRI Dialogs into your format and seeing how well that'd work.

↑ comment by Iknownothing · 2023-09-17T02:49:35.620Z · LW(p) · GW(p)

Hi Ruby! Thanks for the great feedback!! Sorry for the late reply, I've been working on the site!

So, we're not doing just criticisms anymore: we're ranking plans by Total Strength score minus Total Vulnerabilities score. Quite a few researchers have been posting their plans on the site!

Going to do a full rebuild soon, to make the site look nicer and be even faster to work on.

We're also holding regular critique-a-thons. The last one went very well!

We had 40+ submissions and produced what I think is really great work!

We also made a Broad List of Vulnerabilities in the first two days! https://docs.google.com/document/d/1tCMrvJEueePNgb2_nOEUMc_UGce7TxKdqI5rOJ1G7C0/edit?usp=sharing

On not getting all of a plan's details without talking to the person a lot- I think this is a vulnerability in communication.

A serious plan, with the intention of actually solving the problem, should have the effort put into it to make it clear to a reader what it actually is, what problems it aims to solve, why it aims to solve them and how it seeks to do so.

A failure to do so is silly for any serious strategy.

The good thing is, that if such a vulnerability is pointed out, on AI-Plans.com, the poster can see the vulnerability and iterate on it!

comment by Iknownothing · 2023-06-25T14:41:11.088Z · LW(p) · GW(p)

This plan originated from the idea of trying to have a hackathon to disprove alignment plans. I'm still very interested in that!

comment by worse (Phib) · 2023-06-26T17:31:46.974Z · LW(p) · GW(p)

btw small note that I think accumulations of grant applications are probably pretty good sources of info.

↑ comment by Iknownothing · 2023-06-26T23:04:24.059Z · LW(p) · GW(p)

I'm really interested in what you mean here!

↑ comment by worse (Phib) · 2023-06-27T00:31:20.930Z · LW(p) · GW(p)

Idk the public access of some of these things, like with nonlinear's recent round, but seeing a lot of apps there and organized by category, reminded me of this post a little bit.

edit - in terms of seeing what people are trying to do in the space. Though I imagine this does not capture the biggest players that do have funding.