Emergent Analogical Reasoning in Large Language Models

post by Roman Leventov · 2023-03-22T05:18:50.548Z · LW · GW · 2 comments

This is a link post for https://arxiv.org/abs/2212.09196


Taylor Webb, Keith J. Holyoak, Hongjing Lu, December 2022

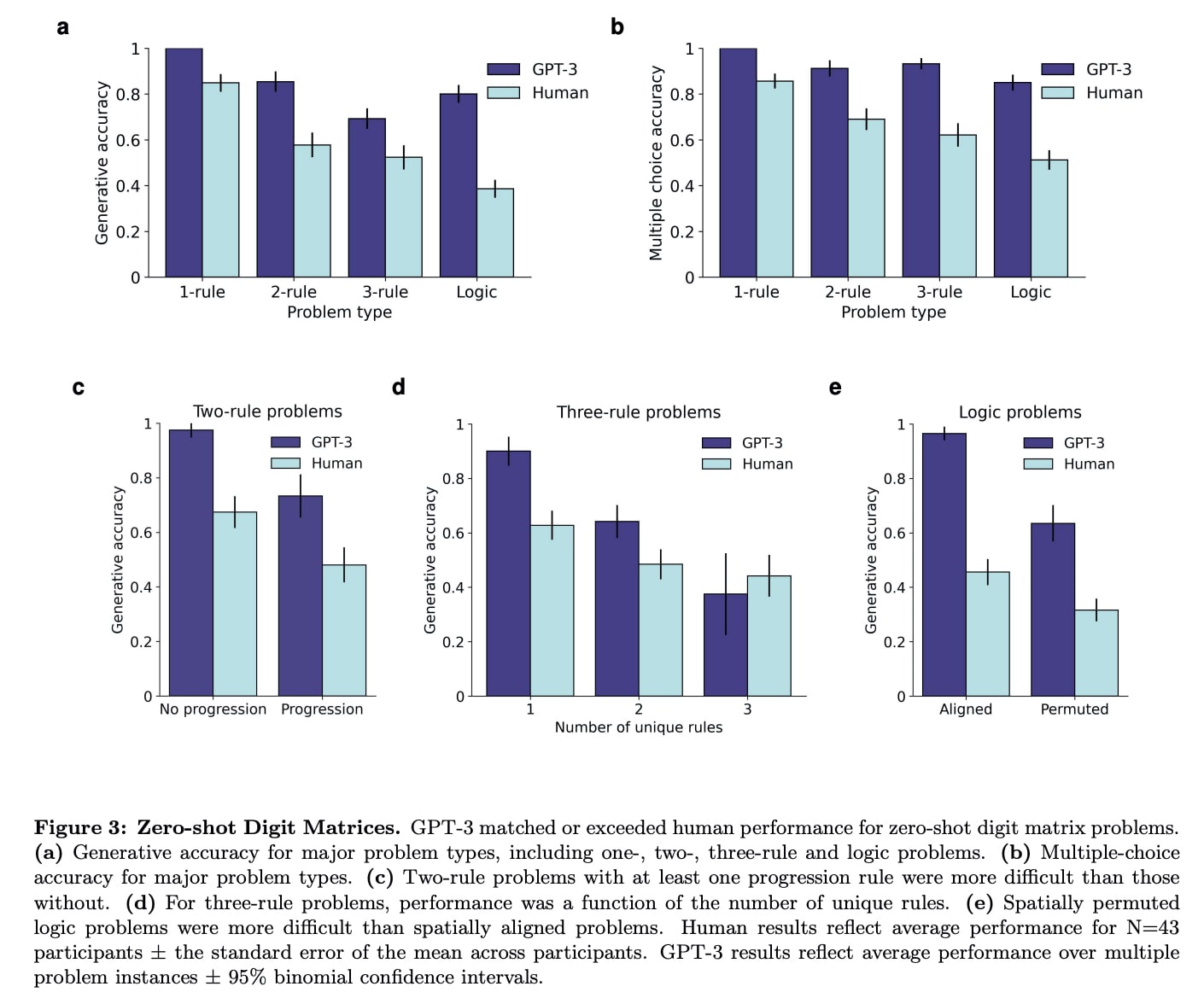

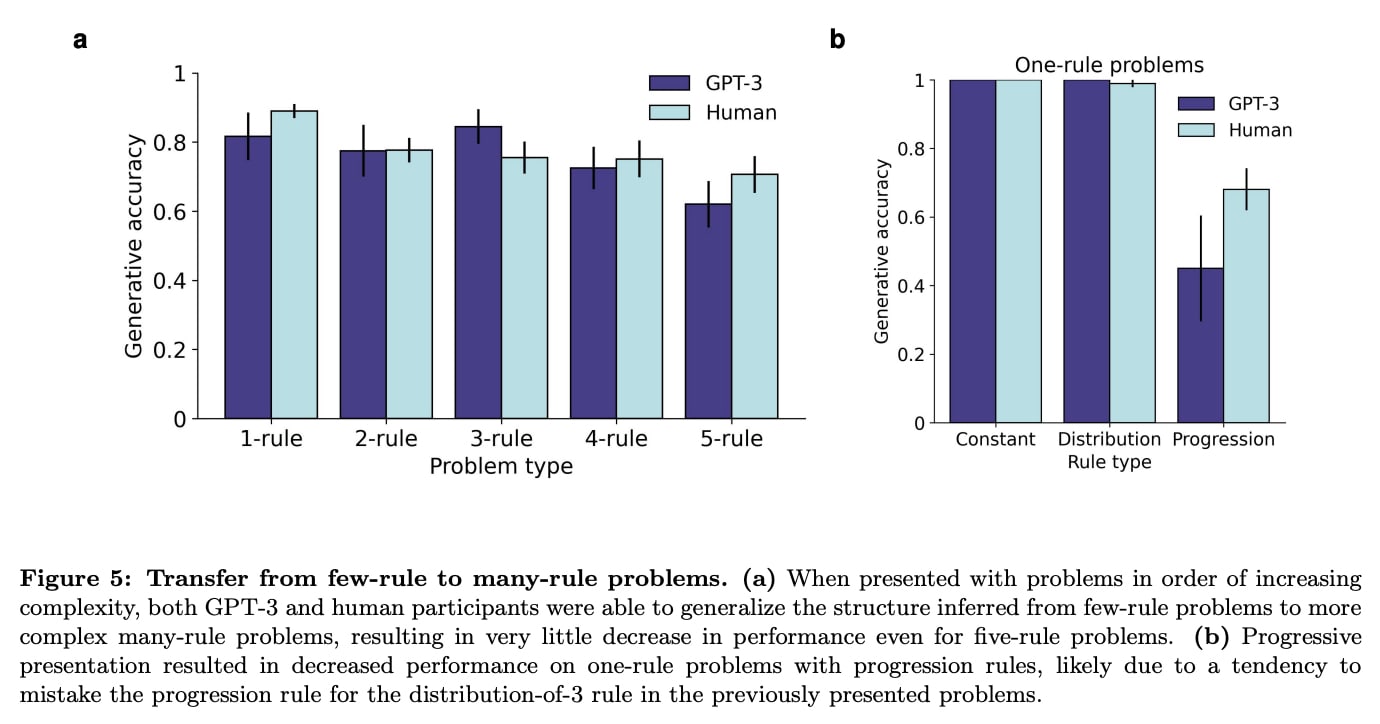

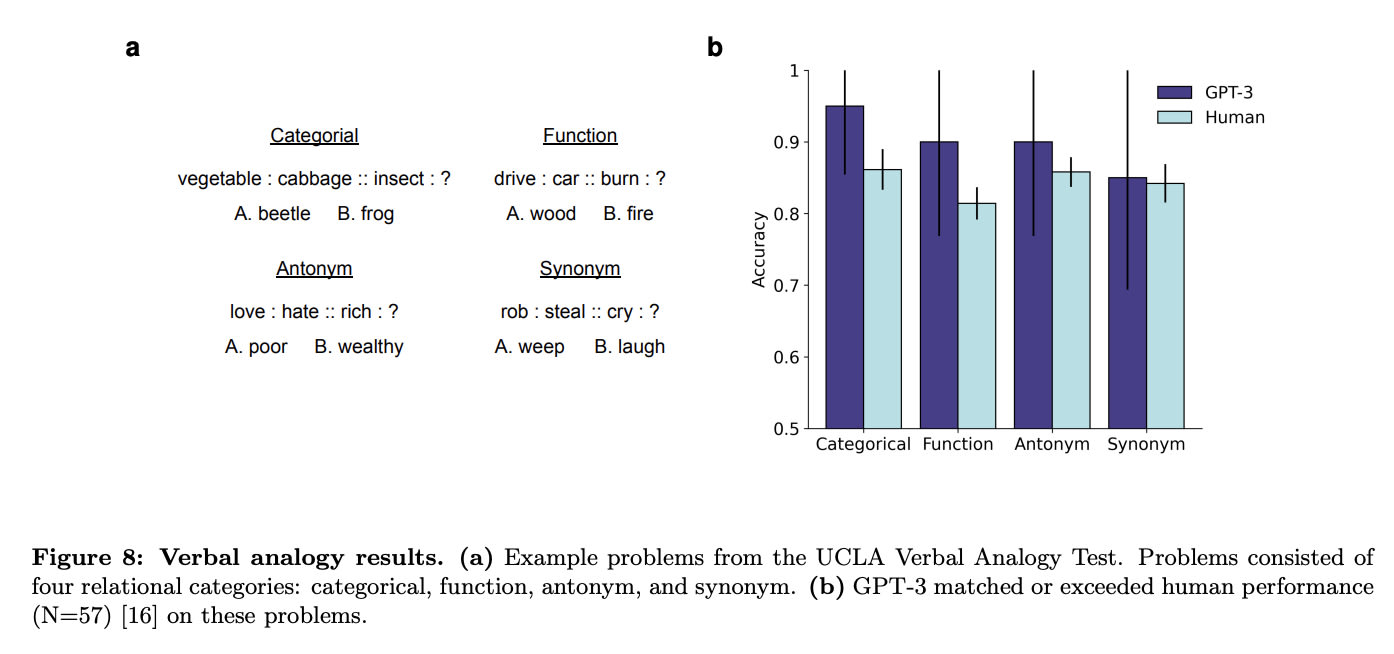

The recent advent of large language models - large neural networks trained on a simple predictive objective over a massive corpus of natural language - has reinvigorated debate over whether human cognitive capacities might emerge in such generic models given sufficient training data. Of particular interest is the ability of these models to reason about novel problems zero-shot, without any direct training on those problems. In human cognition, this capacity is closely tied to an ability to reason by analogy. Here, we performed a direct comparison between human reasoners and a large language model (GPT-3) on a range of analogical tasks, including a novel text-based matrix reasoning task closely modeled on Raven's Progressive Matrices. We found that GPT-3 displayed a surprisingly strong capacity for abstract pattern induction, matching or even surpassing human capabilities in most settings. Our results indicate that large language models such as GPT-3 have acquired an emergent ability to find zero-shot solutions to a broad range of analogy problems.

GPT-4

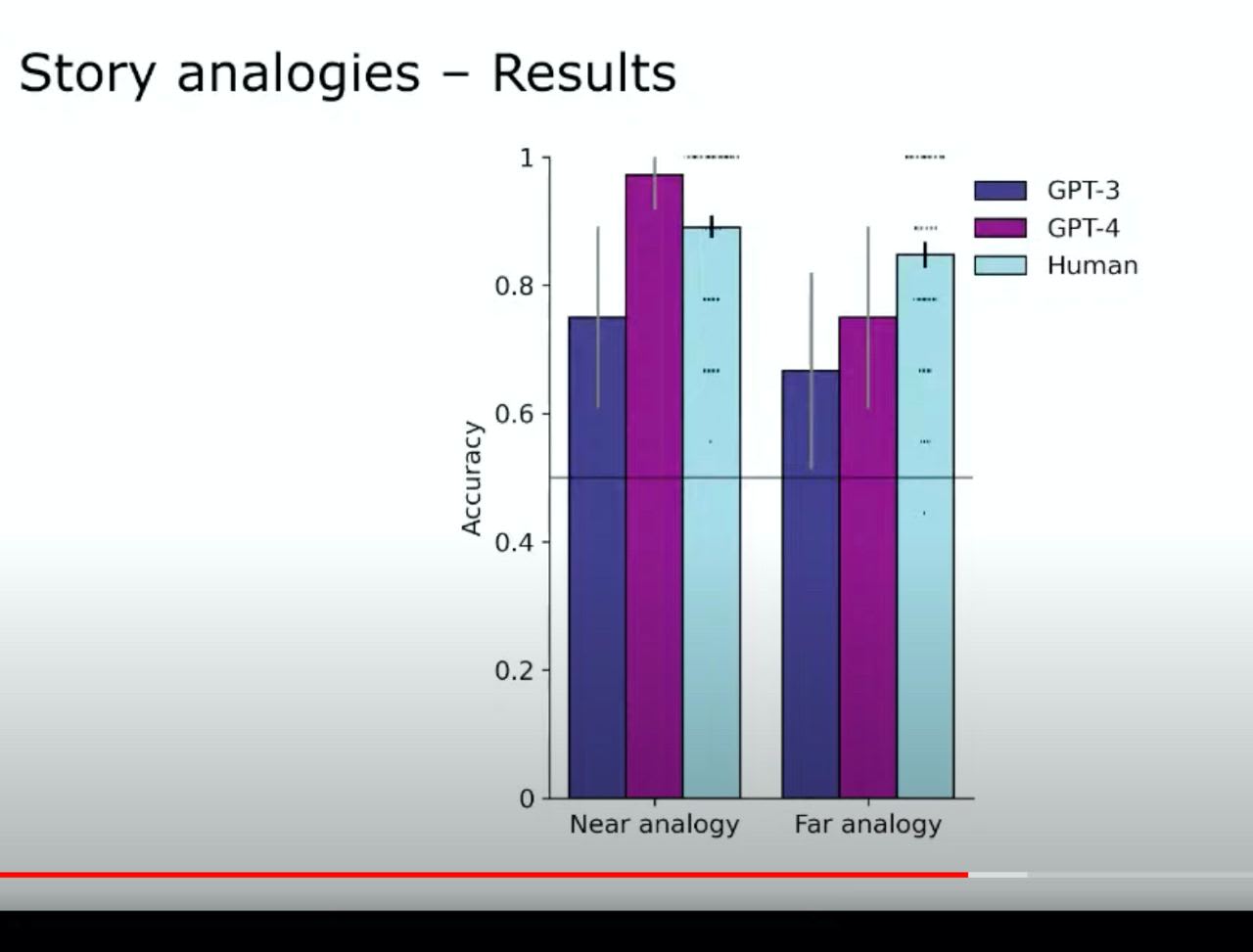

In story analogies, one type of analogical reasoning where GPT-3 still fared worse than humans, GPT-4 improved significantly. In the lecture about this paper at the Santa Fe Institute, Taylor Webb shared the results of testing GPT-4:

Taylor: "I was most astounded by that GPT-4 often produces very precise explanations of why one of the answers is not a very good answer. […] all of the same things happen [in the stories], and then [GPT-4] would say, ‘The difference is, in this case, this was caused by this, and in that case, it wasn’t caused by that.’ Very precise explanations of the analogies."

I also recommend listening to the Q&A session after the lecture.

2 comments


comment by Roman Leventov · 2023-03-22T06:57:02.718Z · LW(p) · GW(p)

Also, in the Q&A session of the lecture, people discuss some difficult analogical reasoning tasks that most people resort to solving "symbolically" and iteratively, for example, by trying different candidate patterns and mentally checking each one for logical contradictions. GPT-3 somehow manages to solve these too, in a single auto-regressive rollout. This reminds me of "GPT can write Quines now (GPT-4)" [LW · GW]: both of these capabilities seem to point to a powerful reasoning capability that Transformers have but people don't.

comment by Roman Leventov · 2023-03-22T05:45:49.882Z · LW(p) · GW(p)

See also Melanie Mitchell's critique of the paper and Webb's response to the critique.