World, mind, and learnability: A note on the metaphysical structure of the cosmos [& LLMs]

post by Bill Benzon (bill-benzon) · 2023-09-05T12:19:37.791Z · LW · GW · 1 comment

Cross-posted from New Savanna.

There is no a priori reason to believe that the world has to be learnable. But if it were not, then we wouldn’t exist, nor would (most?) animals. The existing world, thus, is learnable. The human sensorium and motor system are necessarily adapted to that learnable structure, whatever it is.

I am, at least provisionally, calling that learnable structure the metaphysical structure of the world. Moreover, since humans did not arise de novo, that metaphysical structure must necessarily extend through the animal kingdom and, who knows, plants as well.

“How”, you might ask, “does this metaphysical structure of the world differ from the world’s physical structure?” I will say, again provisionally, for I am just now making this up, that it is a matter of intension rather than extension. Extensionally the physical and the metaphysical are one and the same. But intensionally, they are different. We think about them in different terms. We ask different things of them. They have different conceptual affordances. The physical world is meaningless; it is simply there. It is in the metaphysical world that we seek meaning. [See my post, There is a fold in the fabric of reality. (Traditional) literary criticism is written on one side of it. I went around the bend years ago.]

A little dialog

Does this make sense, philosophically? How would I know?

I get it, you’re just making this up.

Right.

Hmmmm… How does this relate to that object-oriented ontology stuff you were so interested in a couple of years ago?

Interesting question. Why don’t you think about it and get back to me.

I mean, that metaphysical structure you’re talking about, it seems almost like a complex multidimensional tissue binding the world together. It has a whiff of a Latourian actor-network about it.

Hmmm… Set that aside for a while. I want to go somewhere else.

Still on GPT-3, eh?

You got it.[1]

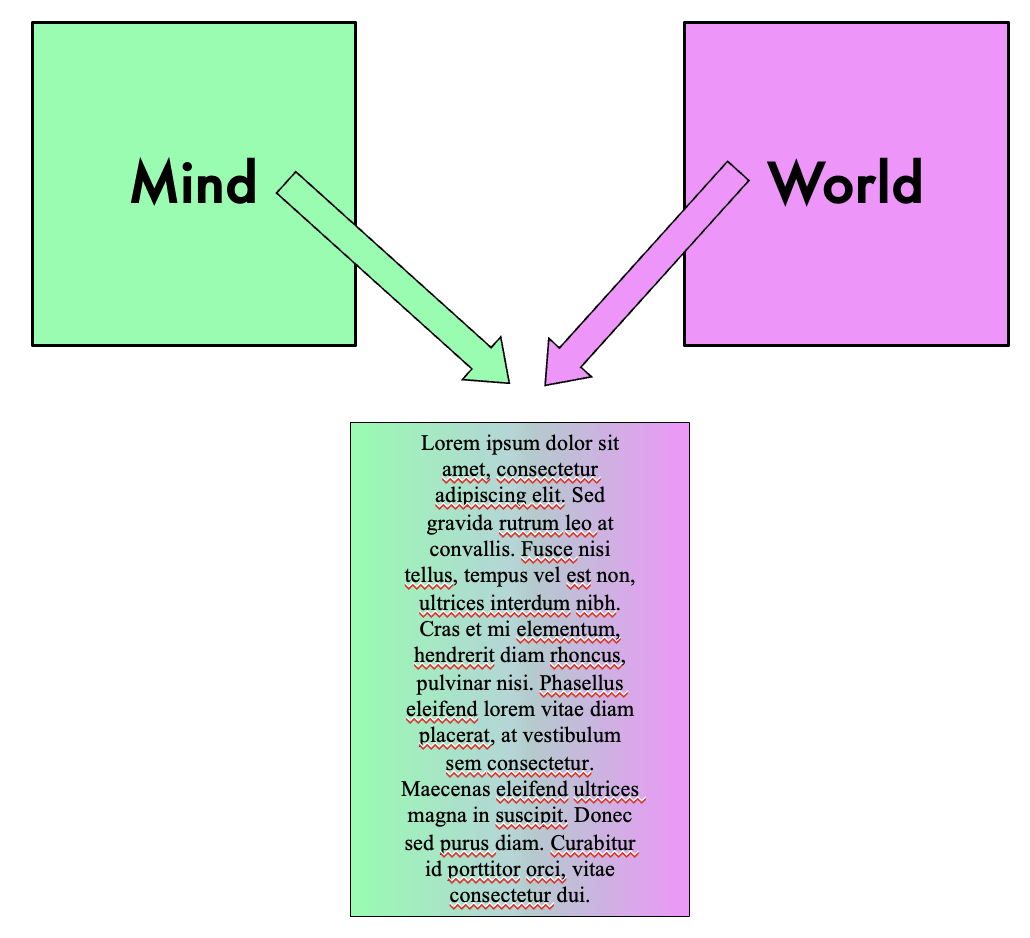

A little diagram: World, Text, and Mind

Text reflects this learnable, this metaphysical, structure, albeit at some remove:

Learning engines are learning the structure inherent in the text. But that learnable structure is not explicit in the language model created by the learning engine.

There are two things in play: 1) that the text is learnable, and 2) that it is learnable by a statistical process. How are these two related?

If we already had an explicit ‘old school’ propositional model in computable form, then we wouldn’t need statistical learning at all. We could just run the propositional model over the corpus and encode the result. But why do even that? If we can read the corpus with the propositional model, in a simulation of human reading, then there’s no need to encode it at all. Just read whatever aspect of the corpus is needed at the time.

So, statistical learning substitutes for the usable propositional model that we lack. The statistical model does work, but at the expense of explicitness.

But why does the statistical model work at all? That’s the question.

It’s not enough to say, because the world itself is learnable. That’s true for the propositional model as well. Both work because the world is learnable.

Language model as associative memory

BUT: Humans don’t learn the world with a statistical model. We learn it through a propositional engine floating over an analogue or quasi-analogue engine with statistical properties. And it is the propositional engine that allows us to produce language. A corpus is a product of the action of a propositional engine, not a statistical model, acting on the world.

Description is one basic such action; narration is another. Analysis and explanation are perhaps more sophisticated and depend on (logically) prior description and narration. Note that this process of rendering into language is inherently and necessarily a temporal one. The order in which signifiers are placed into the speech stream depends in some way, not necessarily obvious, on the relations among the correlative signifieds in semantic or cognitive space. Distances between signifiers in the speech stream reflect distances between correlative signifieds in semantic space. We thus have systematic relationships between positions and distances of signifiers in the speech stream, on the one hand, and positions and distances of signifieds in semantic space, on the other. It is those systematic relationships that allow statistical analysis of the speech stream to reconstruct semantic space.
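
As a rough illustration of how positions in a speech stream can carry information about semantic proximity, here is a minimal sketch of co-occurrence counting. The corpus, the window size, and the function names are all made up for this example. It is a classic distributional toy, not a description of how GPT-3 or any transformer is actually trained.

```python
from collections import Counter, defaultdict
from math import sqrt

# Toy corpus (hypothetical): the animal words share contexts, and so do
# the vehicle words.
corpus = [
    "the cat chased the mouse",
    "the dog chased the ball",
    "the cat ate the food",
    "the dog ate the food",
    "the car needs new tires",
    "the truck needs new tires",
]

def cooccurrence_vectors(sentences, window=2):
    """Count, for each word, the words found within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors

def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v)

vectors = cooccurrence_vectors(corpus)
sim_cat_dog = cosine(vectors["cat"], vectors["dog"])
sim_cat_car = cosine(vectors["cat"], vectors["car"])
print(sim_cat_dog > sim_cat_car)  # words used in similar contexts score higher
```

In this toy, "cat" and "dog" come out closer to each other than "cat" and "car" purely because of where they sit relative to other words. Nothing about animals or vehicles was supplied to the program.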

Note that time is not extrinsic to this process. Time is intrinsic to and constitutive of computation. Speaking involves computation, as does the statistical analysis of the speech stream.

The propositional engine learns the world via Gärdenfors’ dimensions [2], and whatever else, Powers’ stack for example [3]. Those dimensions are implicit in the resulting propositional model and so become projected onto the speech stream via syntax, pragmatics, and discourse structure. The language engine is then able to extract (a simulacrum of) those dimensions through statistical learning. Those dimensions are expressed in the parameter weights of the model. THAT’s what makes the knowledge so ‘frozen’. One has to cue it with actual speech.

The whole language model thus functions as associative memory [4]. You present it with an input cue, and it then associates from that cue, with each emitted string ‘feeding back’ into the memory bank as a further cue.
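
The cue-and-feedback loop can be sketched in a few lines. This is only an analogy under strong assumptions: a table of word-to-next-word counts stands in for the memory, and each cue is a single word, whereas a real language model conditions on the whole preceding context. The text and the names `build_memory` and `recall` are hypothetical.

```python
from collections import Counter, defaultdict

def build_memory(text):
    """Store, for each word, counts of the words that followed it."""
    memory = defaultdict(Counter)
    tokens = text.split()
    for current, following in zip(tokens, tokens[1:]):
        memory[current][following] += 1
    return memory

def recall(memory, cue, steps):
    """Cue the memory, then feed each emitted word back in as the next cue."""
    output = [cue]
    for _ in range(steps):
        followers = memory.get(output[-1])
        if not followers:
            break  # nothing is associated with this cue
        # Emit the most strongly associated follower.
        output.append(followers.most_common(1)[0][0])
    return output

memory = build_memory(
    "the world is learnable and the world is there and the world is learnable"
)
path = recall(memory, "the", 5)
print(" ".join(path))
```

The stored counts do nothing until a cue arrives, which is one way to picture knowledge that is ‘frozen’ until it is prompted with actual speech.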

Paths of the mind (virtual reading)

Now, imagine a word embedding model constructed over some suitable corpus of texts. Given that texts reflect the interaction of the mind and the world, the location of individual words in that model necessarily reflects that interaction. That structure is what I have been calling the metaphysical structure of the cosmos.

Consider some text. It consists of word after word after word. That sequence reflects the actions of the mind that wrote the text, and only the mind. For all practical purposes, the cosmos remains unchanging during the writing of that text and the mind has withdrawn from active interaction with the world, except insofar as the text is a set of symbols that it is placing on a piece of paper, moment after moment, word by word. Now let us trace the path some text takes through a word embedding. Call this a virtual reading. The word embedding consists of hundreds to thousands of dimensions, so that path will be a complicated one. That path must necessarily be a product of the mind (and only the mind?). That path is the mind in action.
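
A virtual reading can be pictured as nothing more than looking up each successive word and connecting the points. The sketch below assumes a made-up two-dimensional embedding so the arithmetic is easy to follow. The coordinates and the names `virtual_reading` and `path_length` are hypothetical, and a real embedding would be learned from a corpus.

```python
from math import dist

# Hypothetical 2-D embedding; real embeddings have far more dimensions.
embedding = {
    "the": (0.0, 0.0),
    "world": (3.0, 4.0),
    "is": (3.0, 0.0),
    "learnable": (6.0, 4.0),
}

def virtual_reading(text, embedding):
    """Return the sequence of points a text visits, skipping unknown words."""
    return [embedding[w] for w in text.split() if w in embedding]

def path_length(points):
    """Sum the Euclidean distances between successive points."""
    return sum(dist(a, b) for a, b in zip(points, points[1:]))

path = virtual_reading("the world is learnable", embedding)
length = path_length(path)
print(path, length)
```

Two texts built from the same words in a different order would visit the same points but trace different paths, which is the sense in which the path belongs to the writing rather than to the vocabulary.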

Further, consider that the mind is what the brain does. The brain consists of 86 billion neurons, each having on the order of 10,000 connections with other neurons. The dimensionality of the space required to represent the brain is thus huge. At any given moment we can represent the state of the brain as a point in that space. From one moment to the next, the brain traces a path in that space, what a dynamicist (such as Walter Freeman) would call a trajectory. When someone is writing a text, that text reflects the operations of their brain. Therefore the path of a text through a word embedding necessarily mirrors the trajectory taken by the author's brain while writing the text. Notice, however, the reduction in dimensionality. The brain's state space is of vastly higher dimensionality than the word embedding space.
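
To get a feel for the gap in scale, the figures above can be multiplied out. The neuron and connection counts come from the text. The embedding width of 12,288 is an assumption chosen for illustration (roughly the hidden width reported for the largest GPT-3 model), and whether neurons or connections are the right unit for the brain's state space is left open.

```python
NEURONS = 86_000_000_000          # figure from the text
CONNECTIONS_PER_NEURON = 10_000   # order of magnitude, from the text
EMBEDDING_DIMS = 12_288           # assumption: an illustrative embedding width

# Total neuron-to-neuron connections implied by the two figures above.
total_connections = NEURONS * CONNECTIONS_PER_NEURON

# How many neurons there are for every single embedding dimension.
neurons_per_embedding_dim = NEURONS / EMBEDDING_DIMS

print(f"connections: {total_connections:.1e}")
print(f"neurons per embedding dimension: {neurons_per_embedding_dim:,.0f}")
```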

Finally, consider the operations of a transformer as it generates a text. Each time it generates a token it takes the entire model into account, all those hundreds of billions of parameters (are we up to trillions yet?). Compare that to what a brain did when generating that same text. Is it reasonable to consider a single token as an image of, a reflection of, a short trajectory segment in the state space of the brain that generated the text? Walter Freeman thought of the brain as moving through states of global coherence at the rate of 7-10 Hz, like frames of a film [5]. In what way is the movement of a transformer from one token to the next comparable to the movement of the brain from one frame to the next?[6]

References

[1] This post is an exploration of ideas raised in the course of thinking about GPT-3. See William Benzon, GPT-3: Waterloo or Rubicon? Here be Dragons, Working Paper, August 5, 2020, 32 pp., Academia: https://www.academia.edu/s/9c587aeb25; SSRN: https://ssrn.com/abstract=3667608; ResearchGate: https://www.researchgate.net/publication/343444766_GPT-3_Waterloo_or_Rubicon_Here_be_Dragons.

[2] Peter Gärdenfors, Conceptual Spaces: The Geometry of Thought, MIT Press, 2000; The Geometry of Meaning: Semantics Based on Conceptual Spaces, MIT Press, 2014.

[3] William Powers, Behavior: The Control of Perception (Aldine) 1973. A decade later David Hays integrated Powers’ model into his cognitive network model, David G. Hays, Cognitive Structures, HRAF Press, 1981.

[4] The idea that the brain implements associative memory in a holographic fashion was championed by Karl Pribram in the 1970s and 1980s. David Hays and I drew on that work in an article on metaphor, William Benzon and David Hays, Metaphor, Recognition, and Neural Process, The American Journal of Semiotics, Vol. 5, No. 1 (1987), 59-80, https://www.academia.edu/238608/Metaphor_Recognition_and_Neural_Process.

[5] Freeman, W. J. (1999a). Consciousness, Intentionality and Causality. Reclaiming Cognition. R. Núñez and W. J. Freeman. Thorverton, Imprint Academic, 143-172.

[6] I first argue this point in William Benzon, The idea that ChatGPT is simply “predicting” the next word is, at best, misleading, New Savanna, Feb. 19, 2023.

1 comment

Comments sorted by top scores.

comment by Shmi (shminux) · 2023-09-05T20:05:11.178Z · LW(p) · GW(p)

There is no a priori reason to believe that the world has to be learnable. But if it were not, then we wouldn’t exist, nor would (most?) animals. The existing world, thus, is learnable. The human sensorium and motor system are necessarily adapted to that learnable structure, whatever it is.

I have a post or a post draft somewhere discussing this issue. The world indeed just is. It does not have to be internally predictable to an arbitrary degree of accuracy, but it needs to be somewhat internally predictable in order for the long-lived patterns we identify as "agents" or "life" to exist. Internal predictability (i.e. that an incredibly tiny part of the universe that is a human (or a bacterium) can infer enough about the world to not immediately poof away) is not something that should be a given in general. Further, even if some coarse-grained internal predictability can be found in such a world, there is no guarantee that it can be extended to arbitrarily fine accuracy. It might well be the case in our world that at some point we hit the limit of internal predictability and from then on things will just look random to us. Who knows, maybe we hit it already, and the outstanding issues in the Standard Model of Cosmology and/or the Standard Model of Particle Physics, and/or maybe some of the Millennium prize problems, and/or the nature of consciousness are simply unknowable. I hope this is not the case, but I do not see any good argument that says "yep, we can push much further", other than "it worked so far, if in fits and starts".