Generalizability & Hope for AI [MLAISU W03]

post by Esben Kran (esben-kran) · 2023-01-20T10:06:59.898Z · LW · GW · 2 comments

This is a link post for https://newsletter.apartresearch.com/posts/compiling-code-to-neural-networks-w03

Welcome to this week’s ML & AI Safety Report where we dive into overfitting and look at a compiler for Transformer architectures! This week is a bit short because the mechanistic interpretability hackathon is starting today – sign up on ais.pub/mechint and join the Discord.

Watch this week's MLAISU on YouTube or listen to it on Spotify.

Superpositions & Transformers

In a recent Anthropic paper, the authors find that overfitting corresponds to the neurons in a model storing data points instead of features. This mostly happens early in training and when we don’t have a lot of data.

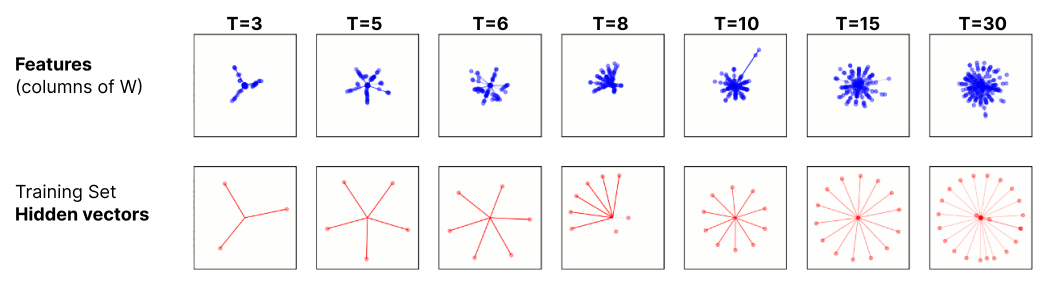

In their experiment, they use a very simple model (a so-called toy model), which is useful for studying isolated phenomena in detail. In some of the visualizations, they train it on 2D data with T training examples. As seen in their visualizations, the feature activations (blue) look very messy, while the activations for the data points (red) look very clean.

Later in the paper, they find that this generalizes to higher dimensions (10,000D). The transition from memorizing the data points of small datasets to learning the actual data features also seems to explain the famous double descent phenomenon, where a model's performance dips before improving again.
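
To make the distinction between feature activations and data point activations concrete, here is a minimal sketch. It assumes the toy model has the ReLU-output form h = W x, x' = ReLU(Wᵀ h + b) used in Anthropic's earlier toy model work; the summary above does not state the exact architecture, so treat this form, the tiny sizes, and every function name as illustrative assumptions. No training is performed; the code only shows which vectors would be plotted.

```python
import random

def relu(v):
    return [max(0.0, a) for a in v]

def encode(W, x):
    # Hidden vector h = W x, where W has shape (n_hidden, n_features).
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def decode(W, b, h):
    # Reconstruction x' = ReLU(W^T h + b) (assumed toy-model form).
    n_features = len(W[0])
    pre = [sum(W[k][i] * h[k] for k in range(len(W))) + b[i]
           for i in range(n_features)]
    return relu(pre)

def sparse_example(n_features, p_active, rng):
    # Each feature is active with probability p_active, otherwise zero.
    return [rng.random() if rng.random() < p_active else 0.0
            for _ in range(n_features)]

rng = random.Random(0)
n_features, n_hidden, T = 5, 2, 3  # illustrative sizes, not the paper's

# Untrained random weights; a real experiment would fit W and b.
W = [[rng.gauss(0, 1) for _ in range(n_features)] for _ in range(n_hidden)]
b = [0.0] * n_features
data = [sparse_example(n_features, 0.3, rng) for _ in range(T)]

# "Feature" vectors: the columns of W, one 2D point per feature.
feature_dirs = [[W[k][i] for k in range(n_hidden)] for i in range(n_features)]

# "Data point" vectors: the hidden activation of each training example.
datapoint_hiddens = [encode(W, x) for x in data]

reconstructions = [decode(W, b, h) for h in datapoint_hiddens]
```

In this picture, a memorizing model would arrange `datapoint_hiddens` cleanly in the 2D hidden space, while a generalizing model would instead arrange `feature_dirs` cleanly.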

And on the topic of toy models, DeepMind has released Tracr, a compiler that turns human-readable RASP code into a Transformer model. This can be useful for studying how algorithms are represented in Transformer space and for studying learned algorithms in depth.
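
For readers unfamiliar with RASP, the following plain-Python sketch imitates its two core primitives: a select step that builds an attention-like pattern and an aggregate step that averages values over it. The functions `select`, `aggregate`, and `frac_prevs` are hypothetical stand-ins written for illustration and are not Tracr's API; the argument order of the predicate is also an assumption of this sketch.

```python
def select(keys, queries, predicate):
    # One row per query position: which key positions does it attend to?
    return [[predicate(k, q) for k in keys] for q in queries]

def aggregate(selector, values):
    # Average the selected values for each query position.
    out = []
    for row in selector:
        picked = [v for keep, v in zip(row, values) if keep]
        out.append(sum(picked) / len(picked) if picked else 0.0)
    return out

def frac_prevs(tokens, target):
    # Fraction of tokens up to and including each position equal to target.
    indices = list(range(len(tokens)))
    causal = select(indices, indices, lambda k, q: k <= q)
    is_target = [1.0 if t == target else 0.0 for t in tokens]
    return aggregate(causal, is_target)

# Fractions at each position are 1/1, 1/2, 2/3, 3/4.
result = frac_prevs(list("xaxx"), "x")
```

A compiler in the spirit of Tracr would map each select step to an attention pattern and each elementwise step to an MLP, which is why such programs have a known ground-truth mechanism to compare interpretability tools against.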

Other research news

In other news…

- Demis Hassabis, the CEO of DeepMind, warns the world about the risks of artificial intelligence in a new Time piece. He says that the wealth arising from artificial general intelligence (AGI) should be redistributed throughout the population and that we need to make sure it does not fall into the wrong hands.

- Another piece reveals that OpenAI contracted Sama to employ Kenyan workers at less than $2 / hour (compared with a $0.5 / hour average in Nairobi) for toxicity annotation for ChatGPT and undisclosed graphical models, with reports of employee trauma from the explicit and graphic annotation work, union busting, and false hiring promises. A serious issue.

- Jesse Hoogland releases an exciting piece exploring why and how neural networks generalize.

- Neel Nanda shares more ideas from his 200 Concrete Open Problems in Mechanistic Interpretability sequence.

- Zac Hatfield-Dodds from Anthropic shares reasons for hope in AI and argues that high confidence in doom is unjustified.

Opportunities

For this week’s opportunities, the awesome new website aisafety.training will help us find the best events for you to join across the world:

- Join the EAG conferences in San Francisco, Cambridge, Stockholm, and London over the next few months to hear from some of the leading researchers in AI safety.

- Join the mechanistic interpretability hackathon for a chance to kickstart your research journey and get feedback from top researchers.

- Apply before the 29th to the ML safety introduction course happening in February.

Thank you for joining this week’s MLAISU and we’ll see you next week!

2 comments

comment by the gears to ascension (lahwran) · 2023-01-20T18:40:54.561Z · LW(p) · GW(p)

- Another piece reveals that OpenAI uses Kenyan workers with less than $2 / hour wage for toxicity identification, which seems to be more than the $0.5 / hour average of the capital, Nairobi.

Uh. That's ... not a very faithful summary. "Oh, we paid them more than market, though, so it's okay" is not a reasonable takeaway here; their working conditions might have been better, but it's reasonable to ask that people attempt to hit acceptability targets, rather than just improve pareto, and openai definitely did not hit working conditions acceptability targets. Many workers reported significant trauma.

comment by Esben Kran (esben-kran) · 2023-01-21T14:08:47.901Z · LW(p) · GW(p)

Thank you for pointing this out! It seems I wasn't informed enough about the context. I've dug a bit deeper and will update the text to:

- Another piece reveals that OpenAI contracted Sama to use Kenyan workers with less than $2 / hour wage ($0.5 / hour average in Nairobi) for toxicity annotation for ChatGPT and undisclosed graphical models, with reports of employee trauma from the explicit and graphical annotation work, union breaking, and false hiring promises. A serious issue.

For some more context, here is the Facebook whistleblower case (and ongoing court proceedings in Kenya with Facebook and Sama) and an earlier MIT Sloan report that doesn't find super strong positive effects (but is written as such, interestingly enough). We're talking pay gaps from relocation bonuses, forced night shifts, false hiring promises, supposedly human trafficking as well? Beyond textual annotation, they also seemed to work on graphical annotation.