Is Yann LeCun strawmanning AI x-risks?

post by Chris_Leong · 2023-10-19T11:35:08.167Z · LW · GW · 4 comments

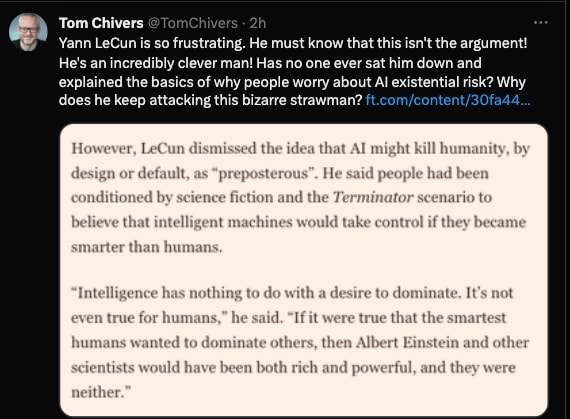

Tom Chivers expresses his frustration with Yann LeCun:

I also find his comments here frustrating, but I want to offer another possible explanation.

Although basically no one in the AI Safety community makes this argument, many people in the general population unfortunately do think about AI this way.

Yann probably thinks that it is a higher priority for him to address this belief, held by a much larger group of people, than it is for him to address the AI Safety crowd's arguments.

He may think that he's in a situation where if he focused on addressing the best arguments, he would lose the political battle due to naive people believing the "strawman" arguments.

In light of this, I don't think it's completely accurate to say he's addressing a strawman. I find it frustrating as well and I wish he'd address us directly. But I also understand the incentives that lead him to do what he does.

4 comments

comment by tailcalled · 2023-10-19T12:46:08.754Z · LW(p) · GW(p)

He should at least explain that there are other better arguments that need to be addressed, and maybe point his interlocutors to the distinction.

↑ comment by Chris_Leong · 2023-10-21T05:04:13.403Z · LW(p) · GW(p)

I would really like him to do that. However, I suspect that he feels that these arguments actually aren’t that strong when you look into them, so that might not make sense from his perspective.

comment by FlorianH (florian-habermacher) · 2023-10-19T16:02:05.486Z · LW(p) · GW(p)

Interesting thought. From what I've seen from Yann LeCun, he really does seem to consider AI x-risk fears mainly as pure fringe extremism; I'd be surprised if he holds back elements of the discussion just to prevent convincing people the wrong way round.

For example, the YouTube video "Yann LeCun and Andrew Ng: Why the 6-month AI Pause is a Bad Idea" shows his straightforward worldview re AI safety rather clearly, and I'd be surprised if his simple dismissal - however surprising - of everything doomsday-ish was just strategic.

(I don't know which possibility is more annoying.)

comment by Seth Herd · 2023-10-19T16:00:15.029Z · LW(p) · GW(p)

You may be right, but it's still a strategy that could get us all killed if he succeeds. And it's dishonest in some cases when it's addressed to Yudkowsky personally - then it is a strawman.

I have seen evidence that LeCun does understand the instrumental convergence argument.