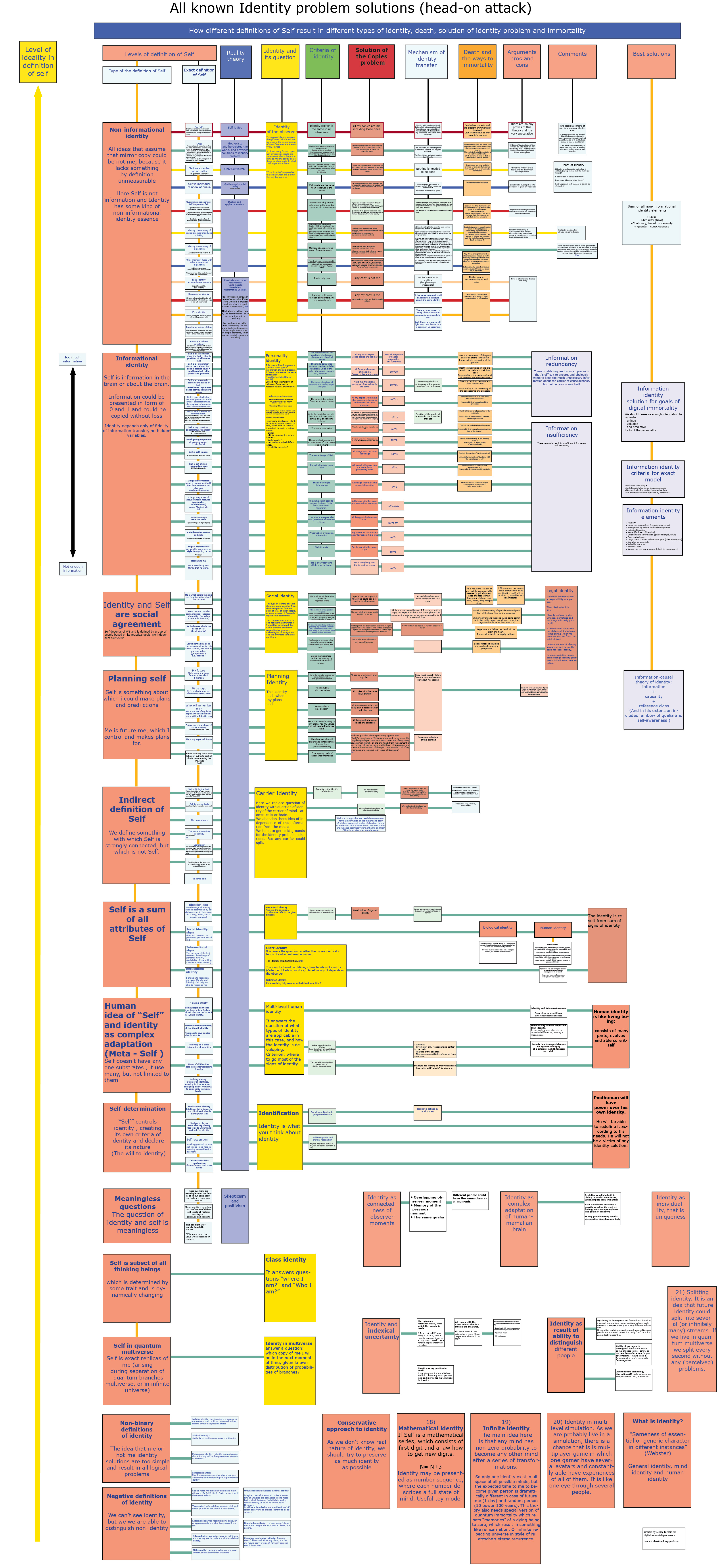

Identity map

post by turchin · 2016-08-15T11:29:06.145Z · LW · GW · Legacy · 41 comments

“Identity” here refers to the question “will my copy be me, and if yes, on which conditions?” It results in several paradoxes which I will not repeat here, hoping that they are known to the reader.

Identity is one of the most complex problems, like safe AI or aging. It only appears to be simple. It is complex because it has to answer the question “Who is who?” in the universe, that is, to create a trajectory in the space of all possible minds, connecting identical or continuous observer-moments. But such a trajectory would be as complex as the whole space of possible minds, and that is very complex.

There have been several attempts to dismiss the complexity of the identity problem, like open individualism (I am everybody) or zero-individualism (I exist only now). But they do not prevent the existence of “practical identity” which I use when planning my tomorrow or when I am afraid of future pain.

The identity problem is also very important. If we (or AI) arrive at an incorrect solution, we will end up being replaced by p-zombies or just copies-which-are-not-me during a “great uploading”. It will be a very subtle end of the world.

The identity problem is also equivalent to the immortality problem. If I am able to describe “what is me”, I will know what I need to save forever. This has practical importance now, as I am collecting data for my digital immortality (I even created a startup about it, and the map will be my main contribution to it. If I solve the identity problem I will be able to sell the solution as a service: http://motherboard.vice.com/read/this-transhumanist-records-everything-around-him-so-his-mind-will-live-forever).

So we need to know how much and what kind of information I should preserve in order to be resurrected by future AI. What information is enough to create a copy of me? And is information enough at all?

Moreover, the identity problem (IP) may be equivalent to the benevolent AI problem, because the first problem is, in a nutshell, “What is me” and the second is “What is good for me”. Regardless, the IP requires a solution to the consciousness problem, and the consciousness problem and the AI problem (that is, working out the nature of intelligence) are somewhat similar topics.

I wrote 100+ pages trying to solve the IP, and became lost in the ocean of ideas. So I decided to use something like the AIXI method of problem solving: I will list all possible solutions, even the most crazy ones, and then assess them.

The following map is connected with several other maps: the map of p-zombies, the plan of future research into the identity problem, and the map of copies. http://lesswrong.com/lw/nsz/the_map_of_pzombies/

The map is based on the idea that each definition of identity is also a definition of Self, and that it is also strongly connected with one philosophical world view (for example, dualism). Each definition of identity answers the question “what is identical to what”. Each definition also provides its own answer to the copy problem, its own definition of death (which is just the end of identity), and its own idea of how to reach immortality.

So on the horizontal axis we have classes of solutions:

“Self” definition - corresponding identity definition - philosophical reality theory - criteria and question of identity - death and immortality definitions.

On the vertical axis are presented various theories of Self and identity from the most popular on the upper level to the less popular described below:

1) The group of theories which claim that a copy is not the original, because some kind of non-informational identity substrate exists. Different substrates have been proposed: the same atoms, qualia, a soul or, most popular, continuity of consciousness. All of them require physicalism to be false. But some instruments for preserving identity could be built. For example, we could preserve the same atoms or preserve the continuity of consciousness of some process, like the fire of a candle. But no valid arguments exist for any of these theories. In Parfit’s terms this is numerical identity (being the same person). It answers the question “What will I experience in the next moment of time?”

2) The group of theories which claim that a copy is the original if it is informationally the same. The main question here is the amount of information required for identity. Some theories obviously require too much information, like the positions of all atoms in the body being the same, and other theories obviously do not require enough information, like just the DNA and the name.

3) The group of theories which see identity as a social phenomenon. My identity is defined by my location and by the ability of others to recognise me as me.

4) The group of theories which connect my identity with my ability to make plans for future actions. Identity is a meaningful part of a decision theory.

5) Indirect definitions of self. This is a group of theories which define something with which self is strongly connected, but which is not self. It may be a biological brain, space-time continuity, atoms, cells or complexity. In this situation we say that we don’t know what constitutes identity, but we could know what it is directly connected with and could preserve that.

6) Identity as a sum of all its attributes, including name, documents, and recognition by other people. It is close to Leibniz’s definition of identity. Basically, it is a duck test: if it looks like a duck, swims like a duck, and quacks like a duck, then it is probably a duck.

7) Human identity is something very different from the identity of other things or possible minds, as humans have evolved to have an idea of identity, a self-image, the ability to distinguish their own identity and the identity of others, and the ability to predict their own identity. So it is a complex adaptation which consists of many parts, and even if some parts are missing, they could be restored using other parts.

There is also a problem of legal identity and responsibility.

8) Self-determination. “Self” controls identity, creating its own criteria of identity and declaring its nature. The main idea here is that the conscious mind can redefine its identity in the most useful way. It also includes the idea that self and identity evolve during differing stages of personal human evolution.

9) Identity is meaningless. The popularity of this subset of ideas is growing. Zero-identity and open identity both belong to this subset. The main contra-argument here is that if we cut the idea of identity, future planning will be impossible and we will have to return to some kind of identity through the back door. The idea of identity comes also with the idea of the values of individuality. If we are replaceable like ants in an anthill, there are no identity problems. There is also no problem with murder.

The following is a series of even less popular theories of identity, some of which I just constructed ad hoc.

10) Self is a subset of all thinking beings. We could see a space of all possible minds as divided into subsets, and call them separate personalities.

11) Non-binary definitions of identity.

The idea is that binary me-or-not-me solutions are too simple and give rise to all the logical problems. If we define identity continuously, as a number in the interval (0,1), we will get rid of some paradoxes and be able to calculate a level of identity. Similarity, or the time until a given next stage, could be used as such a measure. Even a complex number can be used if we include both informational and continuous identity (in Parfit's sense).

12) Negative definitions of identity: we could try to say what is not me.

13) Identity as overlapping observer-moments.

14) Identity as a field of indexical uncertainty, that is a group of observers to which I belong, but can’t know which one I am.

15) Conservative approach to identity. As we don’t know what identity is we should try to save as much as possible, and risk our identity only if it is the only means of survival. That means no copy/paste transportation to Mars for pleasure, but yes if it is the only chance to survive (this is my own position).

16) Identity as individuality, i.e. uniqueness. If individuality doesn’t exist or doesn’t have any value, identity is not important.

17) Identity as a result of the ability to distinguish different people. Identity here is a property of perception.

18) Mathematical identity. Identity may be presented as a number sequence, where each number describes a full state of mind. Useful toy model.

19) Infinite identity. The main idea here is that any mind has the non-zero probability of becoming any other mind after a series of transformations. So only one identity exists in all the space of all possible minds, but the expected time for me to become a given person is dramatically different in the case of future me (1 day) and a random person (10 to the power of 100 years). This theory also needs a special version of quantum immortality which resets “memories” of a dying being to zero, resulting in something like reincarnation, or an infinitely repeating universe in the style of Nietzsche's eternal recurrence.

20) Identity in a multilevel simulation. As we probably live in a simulation, there is a chance that it is a multiplayer game in which one gamer has several avatars and can constantly have experiences through all of them. It is like one eye looking through several people.

21) Splitting identity. This is an idea that future identity could split into several (or infinitely many) streams. If we live in a quantum multiverse we split every second without any (perceived) problems. We are also adapted to have several future copies if we think about “me-tomorrow” and “me-the-day-after-tomorrow”.

This list shows only groups of identity definitions; many more smaller ideas are included in the map.
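As a toy illustration of items 11 and 18 above, here is a minimal Python sketch. It assumes, purely for illustration, that a full state of mind can be written as a short tuple of numbers and that a level of identity can be scored from the distance between two such tuples. The name `identity_level`, the formula `1 / (1 + distance)` and the example states are assumptions made for this sketch and are not taken from the map.

```python
import math


def identity_level(state_a, state_b):
    """Graded identity between two mind states (hypothetical measure).

    Returns 1.0 for equal states and approaches 0 as the states diverge,
    so the answer is a number rather than a binary me / not-me verdict.
    """
    distance = math.dist(state_a, state_b)  # Euclidean distance, Python 3.8+
    return 1.0 / (1.0 + distance)


# Made-up mind states: each number stands for some feature of a mind.
me_today = (0.9, 0.1, 0.5)
me_tomorrow = (0.9, 0.2, 0.5)
stranger = (0.1, 0.8, 0.0)

print(identity_level(me_today, me_tomorrow))  # close to 1
print(identity_level(me_today, stranger))     # noticeably lower
```

Any monotone function of distance would serve equally well here; the point is only that "how much is this me?" can get a graded answer.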

The only rational choice I see is a conservative approach: acknowledging that we don’t know the nature of identity, and trying to save as much as possible in each situation in order to preserve identity.

The pdf: http://immortality-roadmap.com/identityeng8.pdf

41 comments

Comments sorted by top scores.

comment by gjm · 2016-08-17T23:46:54.621Z · LW(p) · GW(p)

Add me to the list of people here who think trying to get super-precise about what "identity" means is like trying to get super-precise about (e.g.) where the outer edge of an atom is. Assuming nothing awful happens during the night, me-tomorrow is closely related to me-today in lots of ways I care about; summarizing those by saying that there's a single unitary me that me-today is and me-tomorrow also is is a useful approximation, but no more; for many practical purposes this approximation is good enough, and we have built lots of mental and social structures around the fiction that it's more than an approximation, but if we start having to deal seriously with splitting and merging and the like, or if we are thinking hard about anthropic questions, what we need to do is to lose our dependence on the approximation rather than trying to find the One True Approximation that will make everything make sense, because there isn't one.

Replies from: turchin↑ comment by turchin · 2016-08-18T10:42:24.936Z · LW(p) · GW(p)

But we shouldn't define One True Approximation, which is an oxymoron: there are no true approximations.

What we need is to define a practical, useful approximation. For example, I want to create a loose copy of me during uploading, and some information will be lost. How much and what kind of information could I skip during uploading? It is an important practical question.

I would also add that there are two types of identity, and above I spoke about the second type.

The first one is identity of consciousness (it comes into play if you lose all your memories overnight, but from our last discussions I remember that you deny the existence of consciousness in some way).

The second is identity of memory, where someone gets all your memories, thus becoming your copy. (There are also social, biological, legal and several other types of identity.)

They are not different definitions of one type of identity, they are different types of identity. During uploading we have different problems with different types of identity.

Me-tomorrow will be similar to me-now, and this is governed by the second type of identity, and there are not many problems here. The problems appear when different types of identity become misaligned. In Parfit's example, if I lose all my memories and someone else gets all my memories, where will I be?

(Parfit named these two types of identity "numerical" and "qualitative"; the names are not self-evident, unfortunately, and the definition is not exactly the same, see here http://www.iep.utm.edu/person-i/:

"Let us distinguish between numerical identity and qualitative identity (exact similarity): X and Y are numerically identical iff X and Y are one thing rather than two, while X and Y are qualitatively identical iff, for the set of non-relational properties F1...Fn of X, Y only possesses F1...Fn. (A property may be called "non-relational" if its being borne by a substance is independent of the relations in which property or substance stand to other properties or substances.) Personal identity is an instance of the relation of numerical identity;")

Replies from: gjm, Good_Burning_Plastic↑ comment by gjm · 2016-08-18T12:11:03.443Z · LW(p) · GW(p)

Of course you aren't explicitly trying to define One True Approximation, but I have the impression you're looking for One True Answer without acknowledging that any answer is necessarily an approximation (and therefore, as you say, it makes no sense to look for One True Approximation -- that was part of my point).

What we need is to define practical useful approximation

I'm not sure that's correct. If we're looking at "exotic" situations with imperfect uploads, etc., then I think the intuitions we have built around the idea of "identity" are likely to be unhelpful and may be unfixably unhelpful; we would do better to try to express our concerns in terms that don't involve the dubious notion of "identity".

different types of identity

I think there are many other different types of identity: other different things we notice and care about, and that contribute to our decisions as to whether to treat A and B as "the same person". Physical continuity. Physical similarity. Social continuity (if some people maintain social-media conversations which are with A at the start, with B at the end, and interpolate somehow in between, then they and we may be more inclined to think of A and B as "the same person"). Continuity and/or similarity of personality.

(I should maybe clarify what I mean by "continuity", which is something like this: A and Z have continuity-of-foo if you can find intermediates A-B-C-...-X-Y-Z where each pair of adjacent intermediates is closely separated in space, time, and whatever else affects our perception that they "could" be the same, and also each pair of adjacent intermediates is extremely similar as regards foo. This is different from similarity: A and Z might be quite different but still connected by very similar intermediates (e.g., one person aging and changing opinions etc. over time), or A and Z might be quite similar but there might not be any such continuous chain connecting them (e.g., parent and child with extremely similar personalities once mature).)
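A rough sketch of this definition in Python, under heavy simplifying assumptions: each intermediate is reduced to a single number measuring foo, adjacent entries in a chain are taken to be close in space and time already, and `tolerance` is an arbitrary threshold for "extremely similar". The names `similar` and `has_continuity` are made up for the illustration.

```python
def similar(a, b, tolerance=1):
    """Two stages count as similar in foo if they differ by at most `tolerance`."""
    return abs(a - b) <= tolerance


def has_continuity(chain, tolerance=1):
    """True if every pair of adjacent intermediates in the chain is similar in foo."""
    return all(similar(x, y, tolerance) for x, y in zip(chain, chain[1:]))


# One person aging: the endpoints differ a lot, but every step is small.
aging = list(range(0, 11))  # foo drifts from 0 to 10 in steps of 1

# Parent and child: the endpoints match, but the only chain linking them
# runs through the child's infancy, where foo jumps from 5 to 0.
parent_to_child = [5, 0, 1, 2, 3, 4, 5]

print(similar(aging[0], aging[-1]), has_continuity(aging))
# prints: False True
print(similar(parent_to_child[0], parent_to_child[-1]), has_continuity(parent_to_child))
# prints: True False
```

The two printed lines show continuity without similarity and similarity without continuity, which is why the two notions have to be kept apart.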

It is partly because there are so many different kinda-identity-like notions in play that I think we would generally do better to work out which notions we actually care about in any case and not call them "identity" except in "easy" cases where they all roughly coincide as they do in most ordinary everyday situations at present.

"Numerical identity" is not a name for either of the two types of "identity" you describe, nor for any of mine, nor for anything that is clearly specifiable for persons. You can only really talk about "numerical identity" when you already have a clear-cut way of saying whether A is the same object as B. Parfit, unless I am badly misremembering, does not really believe in numerical identity for persons, because he thinks many "are A and B the same person?" questions do not have well defined answers. I hold much the same position.

Incidentally:

from our last discussions I remember that you deny existence of consciousness in some way

I certainly would not say that I deny the existence of consciousness, but it wouldn't surprise me to learn that I deny some other things that you would call the existence of consciousness :-). I.e., perhaps your definition of "consciousness" has things in it that I don't believe in.

Replies from: turchin↑ comment by turchin · 2016-08-18T13:34:41.380Z · LW(p) · GW(p)

In fact, while it may look as if I am listing all possible solutions in order to find the one true one, as my mention of AIXI implies, it is not so.

I was going to dissolve the intuitive notion of identity into elements and afterwards use these elements to construct useful models. These models may be complex, and may consist of many blocks, evolving over time and interacting.

But it is clear to me (̶i̶f̶ ̶s̶o̶u̶l̶ ̶d̶o̶e̶s̶n̶'̶t̶ ̶e̶x̶i̶s̶t̶) that there is no real identity. Identity is only a useful construction, and I am free to update it depending on my needs and situation, or not use it at all.

The idea of identity is (was) useful to solve some practical tasks, but sometimes it makes these tasks more obscure.

Yes, numerical identity is not "consciousness identity", it is about "exactly the same thing".

Consciousness identity answers the question "what I will experience in the next moment of time". There are a lot of people who claim that "an exact copy of me is not me".

I used the following "copy test" to check personal ideas about identity: "Would you agree to be instantly replaced by your copy?" Many people say: "No, I will be dead, my copy is not me".

Update: But there is one thought experiment that argues very strongly against the idea that identity is only a model. Imagine that I am tied to rails and expect that in one hour a train will cut off my leg.

If identity were only a model, I would be able to change it in such a way that it would not be me in the next 60 minutes. I could think about millions of very similar beings, about a billion of my copies in parallel worlds, about open identity and closed individualism. But I will not escape from the rails.

My model of identity is in the firmware of my brain, and results from a long-term evolutionary process.

↑ comment by Good_Burning_Plastic · 2016-08-18T11:21:52.668Z · LW(p) · GW(p)

What we need is to define practical useful approximation.

But an approximation that's useful for some purpose might be useless for another purpose.

comment by buybuydandavis · 2016-08-20T22:43:17.270Z · LW(p) · GW(p)

"Is it me?" is a question of value masquerading as a question of fact.

Intertemporal, interstate solidarity is a choice.

Replies from: turchin↑ comment by turchin · 2016-08-20T23:23:00.539Z · LW(p) · GW(p)

But the question "what I will experience in the next moment of time" is physical. And all physics is based on the idea of the sameness of an observer during an experiment.

Replies from: buybuydandavis↑ comment by buybuydandavis · 2016-08-21T05:01:46.338Z · LW(p) · GW(p)

Begs the question of what you will consider "I" in the next moment.

And all physics is based on idea of the sameness of an observer during an experiment.

? Sounds like hokum to me. Reality does not have to conform to your structural language commitments.

You can have multiple observers during an experiment. It's the observations that matter, not how you choose to label the observing apparatus.

comment by kebwi · 2016-08-16T06:02:34.243Z · LW(p) · GW(p)

I don't mean to sound harsh below Alexey. On the whole, I think you've done a wonderful job, but that said, here's my take...

I personally think the question is poorly phrased. Throughout the document, Turchin asks the question "will some future entity be me?" The copy problem, which he takes as one of the central issues at task, demonstrates why this question is so poorly formulated, for it leads us into such troublesome quandaries and paradoxes. I think the future-oriented question (will a future entity be me?) is simply a nonsense question, perhaps best conceptualized by the fact that the future doesn't exist and therefore it violates some fundamental temporal property to speak about things as if they already exist and are available for scrutiny. They don't and it is wrong to do so.

I think the only rational way to pose the question is past-oriented: "was some past entity me?" Notice how simply and totally the copy problem evaporates in this context. Two current people can both give a positive answer to the question via a branching scenario in which one person splits into two, perhaps physically, perhaps psychologically (informationally). Despite both answering "yes", practically all the funny questions and challenges just go away when we phrase the question in a past-oriented manner. Asking which of multiple future people is you paralyzes you with some "choice" to be made from the available future options. Hence the paradox. But asking which past person was you puts no choice on the table. The copy scenario always consists of a single person splitting, and so there is only one ancestor from which a descendant could claim to have derived. No choice from a set of available people enters into the question.

One question claws its way back into the discussion though. If current persons A and B both answer "yes" to identifying with past C, then does that somehow make them identified with one another? That can be a highly problematic notion since they can seem to be so irrefutably different, both in their memories and in their "conscious states" (and also in their physical aspects if one cares about physical or body identity). The solution to this additional problem is simple however: identity is not transitive to begin with. Thus, the fact that A is C and B is C does not imply that A is B in the first place. It never implied that anyway, so why even entertain the question? No, of course A and B aren't the same, and yet they are both still identified as C. No problem.

Draw a straight line segment. At one end, deviate with smooth curvature to bend the line to the left. But also deviate from the same point to the right. As we trace along the line, approaching the branch point, we are faced with the classic question: which of the impending branches is the line? We can follow either branch with smooth curvature, which is one good definition of a line "identity" (with lines switching identity at sharp angles). The question is unanswerable since either branch could be identified as the original, yet we are phrasing the question so as to insist upon one choice. I maintain that the question is literally nonsense. Now, pick an arbitrary point on either of the branches, looking back along its history and ask which set of points along its smooth curvature are the same line as that branch? For each branch we can conclude that points along the segment prior to the split are the same line as the branch itself, yet the branches are not equivalent to one another since that would require turning a sharp corner to switch branches. How is this possible? Simple. It is not a transitive relation. It never was.
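The branching picture can be sketched in a few lines of Python. This is only a toy model under stated assumptions: persons are reduced to labels, `parent` is a hypothetical table recording which earlier person each one split from, and `was_me` is a made-up name for the past-oriented question.

```python
# Hypothetical split: past person C branches into current persons A and B.
parent = {"A": "C", "B": "C", "C": None}


def was_me(current, past, parent):
    """Past-oriented question: does `past` lie on the history of `current`?

    Walks back from `current` along the branch it came from and reports
    whether `past` is met on the way.
    """
    node = current
    while node is not None:
        if node == past:
            return True
        node = parent[node]
    return False


print(was_me("A", "C", parent))  # True: A looks back and finds C
print(was_me("B", "C", parent))  # True: B looks back and finds C
print(was_me("A", "B", parent))  # False: B is not on A's history
print(was_me("B", "A", parent))  # False: A is not on B's history
```

Each branch has exactly one history to walk back along, so no choice among candidates ever arises, and the two "yes" answers about C never combine into a "yes" between A and B.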

Turchin pays very minor attention to branching (or splitting) identity in his map, which I think is disappointing since it is likely the best model of identity available.

And that is why I advocate for branching identity in my book and my various articles and papers. I just genuinely think it is the closest theory of identity available to an actual notion of some fundamental truth, assuming there is any such thing on these matters.

Replies from: FeepingCreature, turchin↑ comment by FeepingCreature · 2016-09-09T09:39:40.120Z · LW(p) · GW(p)

The past doesn't exist either.

↑ comment by turchin · 2016-08-16T08:00:49.292Z · LW(p) · GW(p)

The reason why I look into the future identity is that most problems with copies arise in decision theory and in expectations of next experience.

For example, I live my life, but someone collects information about me and, using AI, one day creates a poor, non-exact model of me in a strange place. Should I add to my expectations that I will find myself in a strange place?

Replies from: kebwi↑ comment by kebwi · 2016-08-16T14:34:59.715Z · LW(p) · GW(p)

That's fine, but we should phrase the question differently. Instead of asking "Should I add my expectation that I will find myself in strange place?" we should ask "Will that future person, looking back, perceive that I (now) am its former self?"

As to the unrelated question of whether a poor or non-exact model is sufficient to indicate preservation of identity, there is much to consider about which aspects are important (physical or psychological) and how much precision is required in the model in order to deem it a preservation of identity -- but none of that has to do with the copy problem. The copy problem is orthogonal to the sufficient-model-precision question.

The copy problem arises in the first place because we are posing the question literally backwards (*). By posing the question pastward instead of futureward, the copy problem just vanishes. It becomes a misnomer in fact.

The precision problem then remains an entirely valid area of unrelated inquiry, but should not be conflated with the copy problem. One has absolutely nothing to do with the other.

(*): As I said, I'm not sure it's even proper to contemplate the status of nonexistent things, like future things. Does "having a status" require already existing? What we can do is consider what their status will be once they are in the present and can be considered by comparison to their own past (our present), but we can't ask if their "current" future status has some value relative to our current present, because they have no status to begin with.

Replies from: WhySpace_duplicate0.9261692129075527, turchin↑ comment by WhySpace_duplicate0.9261692129075527 · 2016-08-17T16:48:53.579Z · LW(p) · GW(p)

The copy problem is also irrelevant for utilitarians, since all persons should be weighted equally under most utilitarian moral theories.

It's only an issue for self-interested actors. So, if spurs A and B both agree that A is C and B is C, that still doesn't help. Are the converse statements true? A selfish C will base their decisions on whether C is A.

I tend to view this as another nail in the coffin of ethical egoism. I lean toward just putting a certain value on each point in mind-space, with high value for human-like minds, and smaller or zero value on possible agents which don't pique our moral impulses.

↑ comment by turchin · 2016-08-16T15:07:23.767Z · LW(p) · GW(p)

While I like your approach in general, as it promises to provide a simple test for the copy problem, I have some objections:

1. In fact the term "copy" is misleading, and we should break it into several terms. One of them is "my future state". A future state is not a copy by definition, as it is different, but it could remember my past.

2. As the "future state" is different from me, it opens up a whole hell of questions about non-exact copies. A future state of me is a non-exact copy of me which differs from me by the fact that it remembers me-now as its past. It may also differ in other things. In fact you said that "a non-exact copy of me which remembers me in its past is me anyway". So you suggested a principle for proving the identity of non-exact copies.

3. I will not remember most of my life moments. So they are dead ends in my life story. If we agree with your definition of identity, it would mean that I die a thousand times a day. Not a pleasant and not a productive model.

4. There will be false positives too. An impostor who claims to be Napoleon and has some knowledge of Napoleon's life and last days will be Napoleon by this definition of identity.

5. It will fail if we try to connect the early childhood of a person and his old age. Being 60 years old, he has no memory of being 3 years old, and not many similar personal traits. The definition of identity as "moving average continuity" (not in the map yet) could easily overcome this unnatural situation.

6. We could reformulate many experiments in such a way that copies exist in the past. For example, each day of the week a copy of me appears in a cell, and he doesn't know which day it is. (Something like the Sleeping Beauty experiment.) In this case some of his copies are in the past from his point on the timeline, and some may be in the future, but he should reason as if they all exist actually and simultaneously.

But I also like your intuition about the problem of actuality, that is, if only the present moment is real, no copies exist at all. Only "me now" exists. It closes the copy problem, but does not close the problem of the "future state".

In fact, I have bad news for any reader who happens to be here: the "copy problem" is not about copies. It is about the next state of my mind, which is by definition not my copy.

Replies from: WhySpace_duplicate0.9261692129075527↑ comment by WhySpace_duplicate0.9261692129075527 · 2016-08-17T17:04:06.050Z · LW(p) · GW(p)

3. I will not remember most of my life moments. So they are dead ends in my life story. If we agree with your definition of identity it would mean that I die thousand times a day. Not pleasant and not productive model.

I'm not sure if you've made this mistake or not, but I know I have in the past. Just because something is unimaginably horrible doesn't mean it isn't also true.

It's possible that we are all dying every Planck time, and that our CEVs would judge this as an existential holocaust. I think that's unlikely, but possible.

Replies from: turchin↑ comment by turchin · 2016-08-17T18:00:34.714Z · LW(p) · GW(p)

I don't think it is true, I just said that it follows from the definition of identity based on ability to remember past moments.

In real life we use a more complex understanding of identity, where it is constantly verified by multiple independent channels (I am overstretching here). If I don't remember what I did yesterday, there are still my unchanging attributes like my name, and also the causal continuity of experiences.

Replies from: WhySpace_duplicate0.9261692129075527↑ comment by WhySpace_duplicate0.9261692129075527 · 2016-08-17T19:34:34.844Z · LW(p) · GW(p)

Ah, thanks for the clarification. I interpreted it as a reductio ad absurdum.

comment by Dagon · 2016-08-15T20:44:43.725Z · LW(p) · GW(p)

Keep in mind that one of the reasons "identity" is hard is that the usage is contextual. Many of these framings/solutions can simultaneously be useful for different questions related to the topic.

I tend to prefer non-binary solutions, mixing continuity and similarity depending on the reason for wanting to measure the distinction.

Replies from: turchin↑ comment by turchin · 2016-08-15T20:53:05.810Z · LW(p) · GW(p)

I agree with you that identity should always answer some question, like whether I will be identical to my copy under certain conditions, and what it would mean to be identical to it (for example, it could mean that I would agree to my replacement by that copy if it is 99.9 per cent like me).

So identity is a technical term which helps us to solve problems, and that is why it is context-dependent.

Replies from: Dagon↑ comment by Dagon · 2016-08-16T00:10:47.887Z · LW(p) · GW(p)

I'd go further. Identity is not a technical term, though it's often used as if it were. Or maybe it's 20 technical terms, for different areas of inquiry, and context is needed to determine which.

The best mechanism is to taboo the word (along with "I" and "identical" and "my copy" and other things that imply the same fuzzy concept) and describe what you actually want to know.

You know that nothing will be quantum-identical, so that's a nonsense question. You can ask "to what degree will there be memory continuity between these two configurations", or "to what degree is a prediction of future pain applicable", or some other specific description of an experience or event.

Replies from: turchin↑ comment by turchin · 2016-08-16T08:14:42.422Z · LW(p) · GW(p)

I have seen people attempt this in real life, and they say things like "my brain knows that he wants go home" instead of "I want to go home".

The problem is that even if we get rid of the absolute Self and Identity, we still have a practical idea of "me", which is built into our brains, thinking and language. Without it, any planning is impossible: I can't go to the shop without expecting that I will have dinner in an hour. But all the problems with identity are also practical: should I agree to be uploaded, etc.

There is also the problem of the oneness of subjective experience. That is, there is a clear difference between a situation where I will experience pain and one where another person will. From the EA point of view the two are almost the same, but that is only a moral upgrade of this fact.

Replies from: Dagon↑ comment by Dagon · 2016-08-16T15:50:57.118Z · LW(p) · GW(p)

"my brain knows that he wants go home" instead of "I want to go home".

I'll admit to using that framing sometimes, but mostly for amusement. In fact, it doesn't solve the problem, as now you have to define continuity/similarity for "my brain" - why is it considered the same thing over subsequent seconds/days/configurations?

I didn't mean to say (and don't think) that we shouldn't continue to use the colloquial "me" in most of our conversations, when we don't really need a clear definition and aren't considering edge-cases or bizarre situations like awareness of other timelines. It's absolutely a convenient, if fuzzy and approximate, set of concepts.

I just meant that in the cases where we DO want to analyze boundaries and unusual situations, we should recognize the fuzziness and multiplicity of concepts embedded in the common usage, and separate them out before trying to use them.

Replies from: turchin

comment by Manfred · 2016-08-15T20:34:06.877Z · LW(p) · GW(p)

I don't think this makes sense as a "problem to solve." Identity is a very useful concept for humans and serves several different purposes, but it is not a fundamental facet of the world, and there is no particular reason why the concept or group of heuristics that you have learned and call "identity" is going to continue to operate nicely in situations far outside of everyday life.

It's like "the problem of hand" - what is a hand? Where is the dividing line between my hand and my wrist? Do I still have a left hand if I cut off my current left hand and put a ball made of bone on the end of my wrist? Thoughts like these were never arrived at by verbal reasoning, and are not very amenable to it.

This is why we should eventually build AI that is much better at learning human concepts than humans are at verbalizing them.

Replies from: turchin↑ comment by turchin · 2016-08-15T21:18:01.647Z · LW(p) · GW(p)

In fact, identity is a technical term which should help us to solve several new problems that will appear once the older intuitive ideas of identity stop working, that is, the problems of uploading, human modification and the creation of copies.

So the problems:

1) Should I agree to be uploaded into a digital computer? Should we use gradual uploading tech? Should I sign up for cryonics?

2) Should I collect data for digital immortality, hoping that a future AI will reconstruct me? Which data is most important? How much? What if my future copy is not exact?

3) Should I agree to the creation of several copies of me?

4) What about my copies in the multiverse? Should I even count them? Should I include causally disconnected copies in other universes in my expectation of quantum immortality?

5) How do quantum immortality and destructive uploading work together?

6) Will I die in the case of a deep coma, as my stream of consciousness will be interrupted? So should I prefer only local anesthesia in the case of surgery?

7) Am I responsible for the things I did 20 years ago?

8) Should I act now for the things which I will get only in 20 years, like life extension?

So there are many things in my decision making which depend on my ideas of personal identity, and some of them, like digital immortality and taking life extension drugs, should be implemented now. Some people refuse to record everything about themselves or to sign up for cryonics because of their ideas about identity.

The problem with the hope that AI will solve all our problems is that it is a little bit circular. In child's language: to create good AI we need to know exactly what "good" is. I mean that if we can't verbalise our concept of identity, we will also be poor at verbalising any other complex idea, including friendliness and CEV.

So I suggest we try our best to create really good definitions of what is really important to us, hoping that a future AI will be able to get the idea much better from these attempts.

Replies from: Manfred↑ comment by Manfred · 2016-08-16T03:10:09.243Z · LW(p) · GW(p)

Right. I think that one can use one's own concept of identity to solve these problems, but that which you use is very difficult to put into words. Much like your functional definition of "hand," or "heap." I expect that no person is going to write a verbal definition of "hand" that satisfies me, and yet I am willing to accept people's judgments on handiness as evidence.

On the other hand, we can use good philosophy about identity-as-concept to avoid making mistakes, much like how we can avoid certain mistaken arguments about morality merely by knowing that morality is something we have, not something imposed upon us, without using any particular facts about our morality.

comment by turchin · 2016-08-15T12:55:35.636Z · LW(p) · GW(p)

The expected pain paradox may be used to demonstrate the difficulty of the identity problem. For example, you know that tomorrow your leg will be cut off without anesthesia, and you are obviously afraid. But then a philosopher comes and says that an unlimited number of your copies exist in worlds where your leg will not be cut off, and moreover that there is no persistent Self, so it will not be you tomorrow. How will this change your feelings and expectations?

No matter how many copies of you exist elsewhere and how many interesting theories of identity you know, it doesn't change the fact that you will be in severe pain very soon.

Replies from: le4fy↑ comment by le4fy · 2016-08-15T13:58:08.356Z · LW(p) · GW(p)

I'm reading Robin Hanson's Age of Em right now, and some of his analysis of mind emulations might help here. He explains that emulations have the ability to copy themselves into other ems that will from the moment of copying onward have different experiences and therefore act and think differently. That is to say, even if you are aware of many copies of yourself existing in other worlds, they are effectively different people from the moment of copying onward. The fact remains that you are the one that will experience the pain and have to live with that memory and without a leg.

Maybe the identity question can be approached with some sort of continuity of experience argument. Even if minds can be copied easily, you can trace an individual identity by what they have experienced and will experience. Many copies may share past experiences, but their experiences will diverge at the point of copying, from which point you can refer to them as separate identities.

Replies from: turchin, turchin, le4fy↑ comment by turchin · 2016-08-15T14:25:29.706Z · LW(p) · GW(p)

There is a problem with the idea of continuity as the nature of identity, as different people have suggested different meanings for it:

"Informational continuity" is when I remember at moment N+1 my state of consciousness at moment N. The problem: every morning when I suddenly get up I have a discontinuity in my memory stream, as I lose the memory of my dreams. Am I dead every morning?

Causal continuity. The main question here is: continuity of what? Either of the elements of the stream of consciousness, where each element creates the next one in the next moment even if they are not remembered (this is where it differs from the idea of informational continuity), or of the underlying mechanism of the brain. The main problem here is that I will (probably) not be able to feel whether my causal stream was stopped and then restarted. (Or could I feel it as a momentary blackout which I will not remember?)

↑ comment by turchin · 2016-08-15T14:31:42.390Z · LW(p) · GW(p)

There is a way to escape the leg-cutting experiment. It is called creating indexical uncertainty.

Let's create a million copies of you, all of which have exactly the same information as you: that their leg will be cut off. But their legs will not be cut off after all.

Then everybody is informed about the situation, including the original, and all of them are still in the same state of mind, that is, they are identical copies.

But each of them could conclude that they have a 1 in 1 000 000 chance of having their leg cut off, which is negligible. The original could follow the same logic. And he will be surprised when his leg is cut off.

So the question is how to properly calculate probabilities from the point of view of the original in this situation. One line of reasoning gives a probability of 1 in a million and another gives 100 per cent.
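The two lines of reasoning can be put side by side in a rough sketch. The function names here are hypothetical, chosen only for illustration, and the first one assumes that every indistinguishable observer is equally likely to be "me", which is exactly the assumption under dispute.

```python
from fractions import Fraction


def self_locating_chance(n_copies: int, n_cut: int = 1) -> Fraction:
    """Chance of being the one whose leg is cut off, for an observer who
    cannot tell which of the (original + n_copies) beings they are.

    Assumption: a uniform prior over all indistinguishable observers.
    """
    total_observers = n_copies + 1  # the copies plus the original
    return Fraction(n_cut, total_observers)


def original_chance() -> Fraction:
    """Chance from the viewpoint that simply tracks the original body:
    the original's leg is cut off no matter how many copies exist."""
    return Fraction(1)


if __name__ == "__main__":
    print(self_locating_chance(1_000_000))  # 1/1000001
    print(original_chance())                # 1
```

Counting the original together with the million copies gives 1 in 1 000 001 rather than exactly 1 in 1 000 000, which changes nothing in the argument. The sketch does not say which function is the right one to use; it only makes explicit that the two answers come from different assumptions about who counts as "me".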

Replies from: le4fy↑ comment by le4fy · 2016-08-15T14:50:28.606Z · LW(p) · GW(p)

I don't agree that the indexical uncertainty argument works in this case. If you assume there are a million copies of you in the same situation, then every copy's posterior must be that their leg will be cut off.

If you know that only one copy's leg will be cut, however, then I agree that you may hold a posterior of experiencing pain 1/1000000. But that seems to me a different situation where the original question is no longer interesting. It's not interesting because for that situation to arise would mean confirmation of many-worlds theories and the ability to communicate across them, which seems like adding way too much complexity to your original setup.

Replies from: turchin↑ comment by turchin · 2016-08-15T16:22:49.589Z · LW(p) · GW(p)

Look, it will work if it is done in several stages.

1) I am alone and I know that my leg will be cut off.

2) A friend of mine creates 1 000 000 copies, all of which think that their leg will be cut off.

3) He informs all the copies and me about his action, and everybody is excited.

I don't see it as a confirmation of MW, as it is assumed to be done via some kind of scanner in one world. But yes, it is similar to MW and may be a useful example for estimating subjective probabilities in some thought experiments, like quantum suicide with external conditions (and also copies).

Replies from: g_pepper↑ comment by g_pepper · 2016-08-15T17:46:54.339Z · LW(p) · GW(p)

It seems to me that you have 1,000,000 different people, one of whom is going to get his/her leg cut off. The fact that they are all copies of one another seems irrelevant. The same relief that each of the 1,000,000 people in your example feels (in your step 3) upon learning that there is only 1/1000000 chance of losing a leg would be felt by each of 1,000,000 randomly selected strangers if you:

- Told each individually that he/she would have his/her leg cut off

- Then told each that only one member of the group would be randomly selected to have his/her leg cut off

So I guess I do not see how this example tells us anything about identity.

Replies from: turchin↑ comment by turchin · 2016-08-15T18:21:41.302Z · LW(p) · GW(p)

One way to look at it is as a trick for solving different identity puzzles.

Another possible idea is to generalise it into a principle: "I am a class of all beings from which I can't distinguish myself."

There is only one real test of identity, which is the question: "What will I feel in the next moment?" Any identity theory must provide a plausible answer to it.

Identity theories belong to two main classes: the ones which show the real nature of identity, and the ones that construct a useful identity theory if identity doesn't have any intrinsic nature.

And if identity is a construction, we may try to construct it in the most useful and simple way, one which also doesn't contradict our intuitive representation of what identity is (which is a complex biological and cultural adaptation).

It is like "love" - everybody could feel it, as it evolved biologically and culturally, but if we try to give it short logical definition, we are in troubles.

So, defining identity as a subset of all beings from which I can't distinguish myself may not capture the real nature of identity, but it is a useful construction. I will not insist that it is the best construction.

Replies from: g_pepper↑ comment by g_pepper · 2016-08-15T18:49:14.510Z · LW(p) · GW(p)

I agree that identity

is like "love" - everybody could feel it, as it evolved biologically and culturally, but if we try to give it short logical definition, we are in troubles

And, there are other things that most people experience but that are hard to define and can seem paradoxical, e.g. consciousness and free will.

However, you said that we can generalize your leg-amputation thought experiment as a principle: "I am a class of all beings from which I can't distinguish myself." I don't understand what you mean by this - your thought experiment involving 1,000,000 copies of a person seems equivalent to my thought experiment involving 1,000,000 strangers. So, I do not see how being a member of a class from which I can't distinguish myself is relevant.

Replies from: turchin↑ comment by turchin · 2016-08-15T19:32:10.055Z · LW(p) · GW(p)

The strangers are identical in this aspect and are different in other aspects.

It is all similar to the social approach to identity. (It is useful for understanding, but the final solution should be more complex, so I would not say that it is my final opinion.)

The idea is to define identity not through something about me, but through my difference from other people, so identity is not a substance but a form of relation with others.

Identity exists only in social situations, where at least several people (or their copies) exist. If only one being existed in the universe, it probably would not have an idea of identity or any problems with it.

In social situations it is important to distinguish the identities of different people, based on their identity tags (name and face). Even I have to remember who I am and scan my life situation every morning. So my personal feeling of identity is in some sense my representation of myself as it would be seen from the social point of view.

If I see my friend F., and he looks like F. and reacts correctly to identification procedures (recognises me and responds to his name), I think that he is F. (the duck test). But in fact an infinite number of different possible beings could pass the same test.

An interesting thing is that a person can run this identification test on himself. (And fail: once I was baby-sitting Lisa's two children and staying in their home. I woke up in the morning and thought that my two children were sleeping and that I was in Lisa's bed, so I must be Lisa. In 0.5 seconds I woke up a little more and understood that I was not Lisa and that I was even male.)

I tell all this to show how being a member of a class could be a good approximation of identity. But real identity should be a more complex thing.

Replies from: g_pepper↑ comment by g_pepper · 2016-08-15T20:13:38.540Z · LW(p) · GW(p)

I understand that identity is too complex to figure out in this comment thread. However, any theory of identity that defines or approximates identity as "a member of a class" is apt to badly miss encapsulating most people's understanding of identity, as illustrated by the fact that my reaction to having my own leg cut off is going to be significantly different than is my reaction to a copy of me having his leg cut off. I wouldn't be happy about either situation, but I'd be much less happy at having my own leg cut off than I would about a copy of me having his leg cut off.

Replies from: turchin↑ comment by turchin · 2016-08-15T20:40:11.588Z · LW(p) · GW(p)

But if you don't know who is original or copy, you may start to worry about.

Uncertainty is part of identity. I mean that any definition of identity should include some element of uncertainty, as identity is an unmeasurable and undefined thing. Will it be me tomorrow? What if I am only a copy? What if my copy is made with mistakes? All problems of identity appear in situations where it is uncertain. And sometimes an increase in uncertainty may solve these problems, as we do with indexical uncertainty.

Replies from: g_pepper↑ comment by g_pepper · 2016-08-15T21:09:44.974Z · LW(p) · GW(p)

But if you don't know who is original or copy, you may start to worry about.

It seems to me that who is the original and who is the copy is irrelevant to my reaction in the leg amputation thought experiment. But, who is me and who is not me is very relevant. For example, suppose I am a copy of the original g_pepper. In that case, I would be less unhappy about the original g_pepper having his leg cut off than I would be about having my own leg cut off.

↑ comment by le4fy · 2016-08-15T14:12:15.602Z · LW(p) · GW(p)

Another way to think about it may be in terms of conservation of experience. You cannot really be comforted by the fact that many copies of you exist in other places, because one of those copies must experience and retain the memory of this horrible event.

Replies from: turchin↑ comment by turchin · 2016-08-15T14:39:36.753Z · LW(p) · GW(p)

But it results in even more complex moral problems.

1) I am really uncomfortable with the fact that other people have suffered unbearably (true about me).

2) In an infinite universe there should exist an infinite number of my copies which experience all types of suffering. F... :(