ChatGPT for translation

Post by Varshul Gupta · 2023-08-02 · This is a link post for https://dubverseblack.substack.com/p/chatgpt-for-translation


This post picks up from some of the points mentioned in our Q2’23 work post and continues our experiments with ChatGPT. One big hurdle we currently face is that our translations are not contextual or vernacular enough.

(If you just want to jump to the results, here is the ChatGPT-translated video vs. our pre-ChatGPT translated video.)

Current translation setup

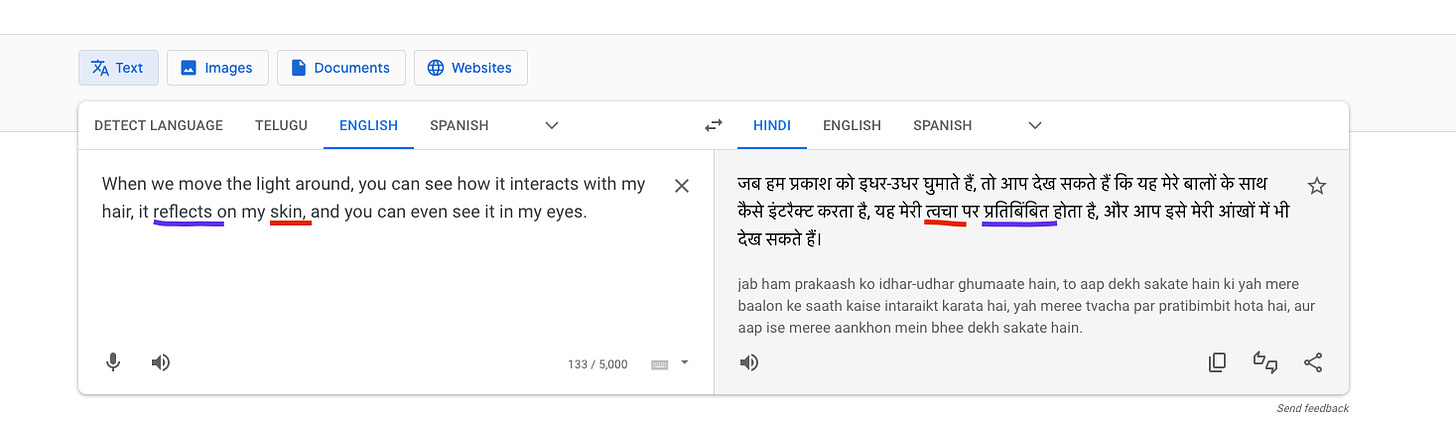

Under the hood, we use Google Translate. In one instance, it translated the word ‘sleek’ in a tech video as `चिकना` (roughly "smooth" or "greasy"), and without human supervision or context, that may not convey the intended meaning. If the API let us pass in the preceding relevant text, so that it could pick up context and tone, it could potentially resolve some of these errors (we are awaiting the availability of PaLM 2). Following is an example translation of text from a tech conference video:

Sample phrase transcribed by Whisper from the Meta Connect ’22 highlights and translated to Hindi using Google Translate.

So we looked elsewhere, to see whether ChatGPT had any solutions up its sleeve.

ChatGPT iteration 1

Asking ChatGPT to translate the same text into more colloquial Hindi (closer to Hinglish) gives:

जब हम प्रकाश को घुमाते हैं, तो आप मेरे बालों के साथ कैसे इंटरैक्ट करता है, यह मेरी त्वचा पर छिपकर दिखता है और आप तकनीक से मेरी आंखों में भी इसे देख सकते हैं।

The output isn’t great: it loses the context as early as the first sentence. So ChatGPT’s out-of-the-box translation doesn’t seem to be of good enough quality.

ChatGPT iteration 2

Next, tweaking the prompt to add some context and some translation grounding yields the following:

जब हम लाइट को इधर-उधर घुमाते हैं, तो आप देख सकते हैं कि यह मेरे बालों के साथ कैसे इंटरैक्ट करता है, यह मेरी स्किन पर रिफ्लेक्ट होता है, और आप इसे मेरी आंखों में भी देख सकते हैं।

As one can see, the words ‘reflects’, ‘light’ and ‘skin’ are kept in English, just transliterated into Devanagari. This is a useful output for dubbing, because what the audience ultimately hears is the speech, not the text, and that is what we need to optimize for. ChatGPT’s translation seems to do a lot better when Google Translate’s high-quality output is provided as grounding.
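To make "context plus grounding" concrete, here is a minimal sketch of how such a prompt might be assembled, assuming the transcript is split into segments and a machine-translated draft (for example from Google Translate) is available for the current segment. The function name `build_grounded_messages`, the `context_window` parameter, and the prompt wording are all hypothetical illustrations, not the prompt we actually used; only the `role`/`content` message shape follows the OpenAI chat format.

```python
from typing import Dict, List


def build_grounded_messages(
    segments: List[str],
    index: int,
    draft_translation: str,
    context_window: int = 2,
) -> List[Dict[str, str]]:
    """Build chat messages for translating segments[index] into colloquial Hindi.

    Hypothetical sketch: the previous `context_window` segments are passed as
    context, and a machine-translated draft is passed as grounding.
    """
    # Preceding segments give the model the surrounding context a plain MT API never sees.
    previous = segments[max(0, index - context_window):index]
    system = (
        "You translate English video transcripts into conversational Hindi "
        "for dubbing. Keep common English tech words (e.g. light, skin) "
        "in English, written in Devanagari."
    )
    user_parts = []
    if previous:
        user_parts.append(
            "Previous lines (context only, do not translate):\n" + "\n".join(previous)
        )
    user_parts.append("Line to translate:\n" + segments[index])
    # The MT draft anchors meaning; the model is asked to make it more colloquial.
    user_parts.append(
        "Reference machine translation (use as grounding):\n" + draft_translation
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": "\n\n".join(user_parts)},
    ]
```

Keeping the context, the line to translate, and the grounding in clearly labelled sections is one way to reduce the chance that the model translates the context lines too.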

But why ChatGPT?

ChatGPT’s advantage is that we can configure and control the output through a text interface (prompting) instead of revisiting or retraining the translation model. So for a demo-able setup, or for testing ideas quickly, prompting is a great approach, and it might get us maybe 60-70% of the way to where we need to be.

Going deeper, we see that colloquialness is also relative to the content and the audience. For a mythological video, a viewer with deep roots in Hindi would prefer more native Hindi words over Hinglish, while for a tech video, an urban viewer exposed to the tech world may find Hinglish works better. Text prompting makes this kind of configurability much easier.
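One way this configurability could look in practice is a lookup from (content domain, audience) to a register instruction that is appended to the system prompt. The sketch below is hypothetical: the table entries, `register_instruction`, and `system_prompt` are illustrative names, and a real setup would need many more combinations.

```python
from typing import Dict, Tuple

# Hypothetical mapping from (content domain, audience) to a register instruction.
REGISTER_HINTS: Dict[Tuple[str, str], str] = {
    ("mythology", "traditional"): "Use pure, native Hindi vocabulary; avoid English loanwords.",
    ("tech", "urban"): "Use Hinglish: keep common English tech terms, written in Devanagari.",
}
# Fallback when no specific combination is configured.
DEFAULT_HINT = "Use natural, everyday spoken Hindi."


def register_instruction(domain: str, audience: str) -> str:
    """Return the style line for this domain/audience, or the default."""
    return REGISTER_HINTS.get((domain, audience), DEFAULT_HINT)


def system_prompt(domain: str, audience: str) -> str:
    """Combine a fixed task description with the register instruction."""
    base = "You translate English video transcripts into Hindi for dubbing."
    return f"{base} {register_instruction(domain, audience)}"
```

Changing the register then means editing a line of text rather than retraining anything, which is the advantage of prompting described above.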

GPT-4 VS GPT-3.5-Turbo

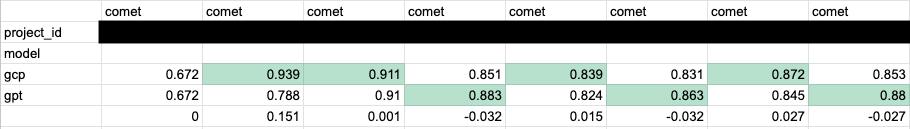

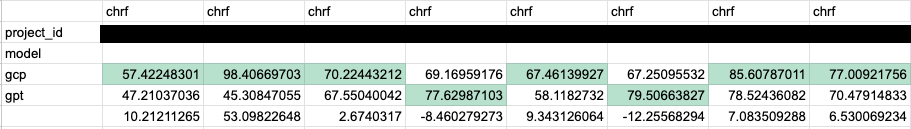

Since GPT-4 usage is quite expensive, we also explored GPT-3.5-turbo, to see whether its quality is on par or whether GPT-4 is worth the extra cost. We tried both models through the OpenAI ChatCompletion endpoint and observed that GPT-4 is well ahead of GPT-3.5, with Google Translate sitting somewhere in between.
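A side-by-side comparison like this can be scripted by sending the same messages to each model. The sketch below takes the completion function as a parameter so it can be tested without network access. `translate_with_models` and `openai_complete` are hypothetical helper names; the call inside `openai_complete` uses the `openai` Python package v1+ (`client.chat.completions.create`), which is the newer form of the `ChatCompletion` endpoint mentioned above.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# A completion function takes (model, messages) and returns the reply text.
CompleteFn = Callable[[str, List[Message]], str]


def translate_with_models(
    messages: List[Message],
    models: List[str],
    complete: CompleteFn,
) -> Dict[str, str]:
    """Send the same prompt to each model and collect the outputs by model name."""
    return {model: complete(model, messages) for model in models}


def openai_complete(model: str, messages: List[Message]) -> str:
    """Real completion call; requires `openai` v1+ and OPENAI_API_KEY set."""
    from openai import OpenAI  # imported lazily so the rest runs without the package

    client = OpenAI()
    response = client.chat.completions.create(model=model, messages=messages)
    return response.choices[0].message.content
```

For example, `translate_with_models(msgs, ["gpt-4", "gpt-3.5-turbo"], openai_complete)` returns both translations keyed by model, ready for human review or automated scoring.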

Parting thoughts

That said, ChatGPT isn’t a perfect solution (yet!). It has its own shortcomings, many of which have been widely discussed on social media, and our hunch is that some of them will be resolved as LLM technology continues to improve.

Building a production system on prompts can be hard. It takes several tries to get right, minor changes to a prompt can yield unexpected results across the board, AND the lack of well-defined automated metrics and datasets for translation is a serious roadblock that keeps us from iterating over prompts faster. We also observed that ChatGPT doesn’t always adhere to the rules stated in the prompt, and is quite prone to hallucination depending on the length of the text passed in as prompt variables.
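Cheap automated checks can catch some of these failures before a human looks at the output. Below is a hypothetical sketch of two such checks: a length-ratio band, since a hallucinated output is often much longer or shorter than its source, and a check that required terms survived. The function name and the `min_ratio`/`max_ratio` defaults are assumptions for illustration and would need tuning per language pair; these checks do not measure translation quality itself.

```python
from typing import List


def check_translation(
    source: str,
    output: str,
    keep_terms: List[str],
    min_ratio: float = 0.5,
    max_ratio: float = 2.0,
) -> List[str]:
    """Return a list of problems found; an empty list means the output passed."""
    if not output.strip():
        return ["empty output"]
    problems = []
    # Character-length ratio as a crude hallucination / truncation signal.
    ratio = len(output) / max(len(source), 1)
    if not (min_ratio <= ratio <= max_ratio):
        problems.append(f"length ratio {ratio:.2f} outside [{min_ratio}, {max_ratio}]")
    # Rule adherence: terms the prompt asked to keep must appear verbatim.
    for term in keep_terms:
        if term not in output:
            problems.append(f"missing required term: {term}")
    return problems
```

For the iteration 2 output, one might call `check_translation(source, output, keep_terms=["स्किन"])` to verify that the transliterated ‘skin’ was kept.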

However, in some domains GCP (Google Cloud) translation outperformed ChatGPT in our internal evaluations, which supports our rationale around domain-specific translation quality and flexibility. Following are some automated metrics (chrF and COMET, higher is better for both) evaluated on internal datasets curated across domains such as cooking, wikis and pharmacy.
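For readers unfamiliar with chrF: it scores a translation by character n-gram overlap with a reference, averaging precision and recall over n-gram orders 1 to 6 and combining them into an F-score with β = 2, so recall is weighted more heavily. The sketch below is a simplified, sentence-level version of that formulation; it is not guaranteed to match the numbers produced by standard tools such as sacreBLEU. COMET is not sketched because it relies on a trained neural model.

```python
from collections import Counter


def char_ngrams(text: str, n: int) -> Counter:
    """Count character n-grams, ignoring whitespace as chrF does by default."""
    s = "".join(text.split())
    return Counter(s[i:i + n] for i in range(len(s) - n + 1))


def chrf(hypothesis: str, reference: str, max_n: int = 6, beta: float = 2.0) -> float:
    """Simplified sentence-level chrF on a 0-100 scale."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp, ref = char_ngrams(hypothesis, n), char_ngrams(reference, n)
        if not hyp or not ref:
            continue  # skip orders longer than either string
        overlap = sum((hyp & ref).values())  # clipped n-gram matches
        precisions.append(overlap / sum(hyp.values()))
        recalls.append(overlap / sum(ref.values()))
    if not precisions:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p == 0 and r == 0:
        return 0.0
    b2 = beta ** 2
    return 100 * (1 + b2) * p * r / (b2 * p + r)
```

Because it works on characters rather than whole words, chrF gives partial credit for near-miss word forms, which matters when comparing outputs that differ only in inflection or spelling.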

This is just the tip of the iceberg of the translation problems we are tackling. We are continuing our experiments to find which prompts work best for each situation and user, and building scalable production systems around them.

ChatGPT-based translation is currently available only to select users, as we are still working on scaling it up.
