Taking nonlogical concepts seriously

post by Kris Brown (kris-brown) · 2024-10-15 · 5 comments
This is a link post for https://topos.site/blog/2024-10-11-nonlogical-concepts/


I recently wrote this post for the Topos Institute blog, engaging with some recent work in the philosophy of language which I believe is relevant to foundational questions regarding semantics. Many concepts (which we might call 'fuzzy' or tied up with 'values') aren't amenable to logical formalization, so their input is systemically unrepresented in science and technology building. But, through a change in our perspective about what the role of logic is, we can work with these concepts and build an approach to science that bakes these values in, while still being able to use all the great mathematical logic that we are used to. I believe this clarification about meaning sheds light on the problem of communication and alignment of our intentions with those of automated agents, though I only touch upon it in this introductory blog post.

Reasoning vs (purely) logical reasoning

This section is largely an exposition of material in Reasons for Logic, Logic for Reasons (Hlobil and Brandom, 2024).

Logical validity

Logic has historically been a model of good reasoning. Let $A_1, A_2, \ldots \vdash B$ denote that the premises $A_1$, $A_2$ (and so on) altogether are a good reason for $B$. Alternatively: it's incoherent to accept all of the premises while rejecting the conclusion. As part of codifying the $\vdash$ ('turnstile') relation, one uses logical rules, such as $A, B \vdash A \land B$ (the introduction rule for conjunction, for generic $A$ and $B$) and $A \land B \vdash A$ (one of two elimination rules for conjunction). In trying to reason about whether some particular inference among the sentences $a$, $b$, and $c$ is good, we also want to be able to assume things about nonlogical content. The space of possibilities for the nonlogical content of $a$, $b$, and $c$ is shown below:

| $a$ | $b$ | $c$ |
|---|---|---|
| ? | ? | ? |

We care about good reasoning for specific cases, such as when $a$ is "1+1=2", $b$ is "The moon is made of cheese", and $c$ is "Paris is in France". Here, we can represent our nonlogical assumptions in the following table:

| $a$ | $b$ | $c$ |
|---|---|---|
| T | F | T |

Any logically valid inference, e.g. $a \land b \vdash a$, is reliable precisely because it does not presuppose its subject matter. Not presupposing anything about $a$, $b$, and $c$ here means it's incoherent to accept the premises while rejecting the conclusion in any of the eight scenarios. Logical validity requires (willful) ignorance of the contents of nonlogical symbols, whereas good reasoning will also consider the nonlogical contents.

This post will argue that traditional attitudes towards logic fail in both ways: they do in fact presuppose things about nonlogical contents, and their focus on logically-good reasoning (to the exclusion of good reasoning) is detrimental.

Sequents with multiple conclusions

We are used to sequents which have a single conclusion (and perhaps an empty conclusion like $a, b \vdash$ to signify the incompatibility of $a$ and $b$, also sometimes written $a, b \vdash \bot$). However, we can make perfect sense of multiple conclusions using the same rule as earlier: "It's incoherent to reject everything on the right-hand side while accepting everything on the left-hand side." This gives commas a conjunctive flavor on the left-hand side and a disjunctive flavor on the right-hand side. For example, in propositional logic, we have

$$a, b \vdash c, d \quad\text{iff}\quad a \land b \vdash c \lor d$$
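To make the "incoherent to accept the left while rejecting the right" reading concrete for classical propositional logic, here is a small Julia sketch (Julia being the language of the implementation mentioned in the Math section). It assumes formulas are modeled as functions from truth-value assignments to booleans, checks a multi-conclusion sequent by brute force over every valuation, and compares each sequent with its single-formula counterpart. The helper names (`atom`, `land`, `lor`, `valid`) are illustrative only and do not come from any library.

```julia
# Formulas are modeled as functions from a valuation (Dict{Symbol,Bool}) to Bool.
atom(p::Symbol) = v -> v[p]
land(f, g) = v -> f(v) && g(v)   # conjunction
lor(f, g) = v -> f(v) || g(v)    # disjunction

# All 2^n valuations of the given atoms.
valuations(atoms) =
    [Dict(atoms[i] => isodd(k >> (i - 1)) for i in eachindex(atoms)) for k in 0:(2^length(atoms) - 1)]

# Γ ⊢ Δ is valid iff no valuation accepts everything in Γ while rejecting everything in Δ.
valid(Γ, Δ, atoms) =
    !any(v -> all(f -> f(v), Γ) && !any(f -> f(v), Δ), valuations(atoms))

atoms = [:a, :b, :c, :d]
a, b, c, d = atom.(atoms)

# Commas on the left behave like ∧, commas on the right like ∨.
for (Γ, Δ) in [([a, b], [c, d]), ([a, b], [a, d]), ([a], [b, c])]
    println(valid(Γ, Δ, atoms), " ", valid([reduce(land, Γ)], [reduce(lor, Δ)], atoms))
end
```

On each printed line the two values agree: the multi-conclusion sequent is valid exactly when its conjunction/disjunction counterpart is.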

Nonlogical content

Suppose we buy into relational rather than atomistic thinking: there is more to a claimable $a$ than whether it is true or false; rather, the nature of $a$ is bound up in its relationships to the other nonlogical contents. It may be the case that $a$ is a good reason for $b$. The inference from "California is to the west of New York" to "New York is to the east of California" is good due to the meanings of 'east' and 'west', not logic.[1] It's clearly not a logically-good inference (terminology: call it a materially-good inference, marked with a squiggly turnstile, $\mid\!\sim$). The 16 possibilities for the nonlogical (i.e. material) content of $a$ and $b$ are depicted in the grid below, where we use $a \mid\!\sim$ to mark a claimable $a$ as a "premise" and $\mid\!\sim a$ to mark it as a "conclusion".

Navigating the grid of possible implications

Suppose we wanted to find the cell corresponding to $a \mid\!\sim b$. We should look at the row labeled $a \mid\!\sim$ ("$a$ is the premise") and the column labeled $\mid\!\sim b$ ("$b$ is the conclusion").

As an example, let $a$ be "It's a cat" and $b$ be "It has four legs".[2]

We represent $a \mid\!\sim b$ above as the ✓ in the second row, third column. We represent $a, b \not\mid\!\sim$ (i.e. that it is not incompatible for it to both be a cat and to have four legs) above as the ✗ in the fourth row, first column. There are boxes drawn around the 'interesting' cells in the table. The others are trivial[3] or have overlap between the premises and conclusions. Because we interpret $\Gamma \mid\!\sim \Delta$ as "It's incoherent to reject everything in $\Delta$ while accepting everything in $\Gamma$", they are checkmarks insofar as it's incoherent to simultaneously accept and reject any particular proposition. This (optional, but common) assumption is called containment.
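A minimal Julia sketch of such a grid follows. It assumes a hypothetical base in which $a \mid\!\sim b$ is the only listed good implication, counts the empty sequent as good (following footnote 3), and lets containment supply every implication whose premises and conclusions overlap. The `Frame` type and the helper functions are illustrative names, not the author's implementation.

```julia
const Imp = Tuple{Set{Symbol},Set{Symbol}}
imp(Γ, Δ) = (Set{Symbol}(Γ), Set{Symbol}(Δ))

struct Frame
    lexicon::Vector{Symbol}
    good::Set{Imp}       # explicitly listed good implications
    containment::Bool    # if true, overlapping premises and conclusions make a good implication
end

function isgood(F::Frame, Γ, Δ)
    P, C = imp(Γ, Δ)
    return (F.containment && !isempty(intersect(P, C))) || (P, C) in F.good
end

# All subsets of xs, ordered ∅, {x1}, {x2}, {x1, x2}, ...
subsets(xs) = [xs[[isodd(k >> (i - 1)) for i in eachindex(xs)]] for k in 0:(2^length(xs) - 1)]
label(S) = isempty(S) ? "∅" : join(S, ",")

# Hypothetical base: a |~ b, plus the empty sequent (footnote 3), plus containment.
F = Frame([:a, :b], Set{Imp}([imp([:a], [:b]), imp([], [])]), true)

# Rows are premise sets; columns are conclusion sets.
for Γ in subsets(F.lexicon)
    cells = [isgood(F, Γ, Δ) ? "✓" : "✗" for Δ in subsets(F.lexicon)]
    println(rpad(label(Γ), 4), "|~ ", join(cells, " "))
end
```

In this layout both axes are ordered ∅, {a}, {b}, {a, b}. The cell for premise {a} and conclusion {b} is ✓. The cell for premises {a, b} with no conclusion is ✗.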

If our turnstile is to help us do bookkeeping for good reasoning, then suddenly it may seem wrong to force ourselves to ignore nonlogical content: $a \vdash b$ is not provable in classical logic, so we miss out on good inferences like "It's a cat" $\mid\!\sim$ "It has four legs" by fixating on logically-good inferences.

Not only do we miss good inferences, but we can also derive bad ones. Treating nonlogical content atomistically is tied up with monotonicity: the idea that adding more premises cannot remove any conclusions ($\Gamma \mid\!\sim \Delta$ therefore $\Gamma, \Sigma \mid\!\sim \Delta$). For example, let $c$ be "It lost a leg". Clearly "It's a cat", "It lost a leg" $\not\mid\!\sim$ "It has four legs". This is depicted in the following table (where we hold $a$ fixed, i.e. we're talking about a cat).

The ✗ in the third row, second column here is the interesting bit of nonmonotonicity: adding the premise $c$ defeated a good inference.
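Monotonicity failures can be found mechanically. The Julia sketch below holds $a$ ("It's a cat") fixed, as the table does. It assumes the listed good implications are the same as those of the vocabulary $C$ in the Math section, with $b$ and $c$ playing the roles of $q$ and $r$: $\mid\!\sim b$; $\mid\!\sim b, c$; and $b, c \mid\!\sim$, plus containment. It then searches for every case where adding premises $\Sigma$ defeats a good $\Gamma \mid\!\sim \Delta$. All names are hypothetical.

```julia
const Imp = Tuple{Set{Symbol},Set{Symbol}}
imp(Γ, Δ) = (Set{Symbol}(Γ), Set{Symbol}(Δ))

# b = "It has four legs", c = "It lost a leg", with "It's a cat" held fixed.
const LEX = [:b, :c]
const BASE = Set{Imp}([imp([], [:b]), imp([], [:b, :c]), imp([:b, :c], [])])

function isgood(Γ, Δ)
    P, C = imp(Γ, Δ)
    return !isempty(intersect(P, C)) || (P, C) in BASE   # containment, or explicitly listed
end

subsets(xs) = [xs[[isodd(k >> (i - 1)) for i in eachindex(xs)]] for k in 0:(2^length(xs) - 1)]

# Every (Γ, Σ, Δ) such that Γ |~ Δ is good but Γ, Σ |~ Δ is not.
function monotonicity_failures()
    failures = Tuple{Vector{Symbol},Vector{Symbol},Vector{Symbol}}[]
    for Γ in subsets(LEX), Σ in subsets(LEX), Δ in subsets(LEX)
        if isgood(Γ, Δ) && !isgood(union(Γ, Σ), Δ)
            push!(failures, (Γ, Σ, Δ))
        end
    end
    return failures
end

println(monotonicity_failures())
```

Under these assumptions exactly one failure is reported: $\mid\!\sim b$ is good, but adding the premise $c$ yields $c \mid\!\sim b$, which is not.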

Some logics forgo monotonicity, but almost all do presuppose something about nonlogical contents, namely that the inferential relationships between them satisfy cumulative transitivity: $\Gamma \mid\!\sim A$ and $\Gamma, A \mid\!\sim B$ together entail $\Gamma \mid\!\sim B$. Besides being a strong assumption about how purely nonlogical contents relate to each other, this property is, under mild assumptions, enough to recover monotonicity.[4]

Logical expressivism

If what follows from what, among the material concepts, is actually a matter of the input data rather than something we logically compute, what is left over for logic to do? Consider the inferences possible when we regard $a$ ("Bachelors are unmarried") in isolation.

Even without premises, one has a good reason for $a$ (hence the ✓ for $\mid\!\sim a$), and $a$ is not self-incompatible (hence the ✗ for $a \mid\!\sim$). If we extend the set of things we can say to include $\neg a$, the overall relation can be mechanically determined:

It would seem like there are six (interesting, i.e. non-containment) decisions to make, but our hand is forced if we accept a regulative principle[5] for the use of negation, called incoherence-incompatibility: $\Gamma \mid\!\sim a$ iff $\Gamma, \neg a \mid\!\sim$ ($a$ is a conclusion in some context $\Gamma$ iff $\neg a$ is incompatible with $\Gamma$). In the multi-conclusion setting (where a "context" includes a set of premises on the left as well as a set of conclusion possibilities on the right) this generalizes to $\Gamma \mid\!\sim a, \Delta$ iff $\Gamma, \neg a \mid\!\sim \Delta$. Negation has the functional role of swapping a claim from one side of the turnstile to the other.[6] So, to take $\neg a \mid\!\sim$ as an example, we can evaluate whether or not this is a good implication by moving the $\neg a$ to the other side, obtaining $\mid\!\sim a$, which was a good implication in the base vocabulary.
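The mechanical determination can be sketched as a rewriting procedure. Apply incoherence-incompatibility, together with its mirror image for negations on the right, until only base claimables remain, then consult the base relation. In this Julia sketch, formulas are symbols or nested `(:not, φ)` tuples. The base relation for "Bachelors are unmarried" is assumed to contain $\mid\!\sim a$ plus containment. All names are hypothetical.

```julia
# Formulas: a base claimable is a Symbol; ¬φ is the tuple (:not, φ).
neg(φ) = (:not, φ)
isneg(φ) = φ isa Tuple && φ[1] === :not

# Base vocabulary {a} ("Bachelors are unmarried"): |~ a is good, a |~ is not,
# and containment makes any sequent with overlapping sides good.
function bachelor_base(Γ, Δ)
    P, C = Set{Symbol}(Γ), Set{Symbol}(Δ)
    return !isempty(intersect(P, C)) || (isempty(P) && C == Set([:a]))
end

# Move negated formulas across the turnstile until only base claimables remain:
#   Γ, ¬A |~ Δ  iff  Γ |~ A, Δ        Γ |~ ¬A, Δ  iff  Γ, A |~ Δ
function good_neg(base, Γ::Vector, Δ::Vector)
    i = findfirst(isneg, Γ)
    i === nothing || return good_neg(base, deleteat!(copy(Γ), i), vcat(Δ, [Γ[i][2]]))
    j = findfirst(isneg, Δ)
    j === nothing || return good_neg(base, vcat(Γ, [Δ[j][2]]), deleteat!(copy(Δ), j))
    return base(Γ, Δ)
end

println(good_neg(bachelor_base, [neg(:a)], []))   # ¬a |~  reduces to  |~ a  (true)
println(good_neg(bachelor_base, [], [neg(:a)]))   # |~ ¬a  reduces to  a |~  (false)
```

Every cell of the grid over $\{a, \neg a\}$ is settled this way, which is the sense in which no further decisions remain once the base is fixed.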

Let's see another example, now by extending our cat example with the sentence $a \to b$ ("If it's a cat, then it has four legs"). Here again we have no freedom in the goodness of inferences involving the sentence $a \to b$: it is fully determined by the goodness of inferences involving just $a$ and $b$. The property we take to be constitutive of being a conditional is deduction-detachment: $\Gamma \mid\!\sim A \to B, \Delta$ iff $\Gamma, A \mid\!\sim B, \Delta$.

(Table: an abbreviated portion of the 8x8 grid of implications over $a$, $b$, and $a \to b$.)
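Deduction-detachment can be applied mechanically as a rewrite rule. This Julia sketch assumes a hypothetical base over $\{a, b\}$ containing $a \mid\!\sim b$ plus containment. It only handles conditionals to the right of the turnstile: the rule for a conditional used as a premise is not spelled out in this section, so the sketch raises an error rather than guess it. All names are hypothetical.

```julia
# Formulas: a base claimable is a Symbol; A → B is the tuple (:to, A, B).
arrow(A, B) = (:to, A, B)
isarrow(φ) = φ isa Tuple && φ[1] === :to

# Hypothetical base over {a, b} (a = "It's a cat", b = "It has four legs"):
# a |~ b is good, plus containment.
function cat_base(Γ, Δ)
    P, C = Set{Symbol}(Γ), Set{Symbol}(Δ)
    return !isempty(intersect(P, C)) || (P == Set([:a]) && C == Set([:b]))
end

# Deduction-detachment used as a rewrite:  Γ |~ A → B, Δ  iff  Γ, A |~ B, Δ.
function good_arrow(base, Γ::Vector, Δ::Vector)
    any(isarrow, Γ) && error("this sketch does not handle conditionals used as premises")
    j = findfirst(isarrow, Δ)
    j === nothing && return base(Γ, Δ)
    _, A, B = Δ[j]
    return good_arrow(base, vcat(Γ, [A]), vcat(deleteat!(copy(Δ), j), [B]))
end

println(good_arrow(cat_base, [], [arrow(:a, :b)]))   # |~ a → b  reduces to  a |~ b
println(good_arrow(cat_base, [], [arrow(:b, :a)]))   # |~ b → a  reduces to  b |~ a
```

The conditional adds no new facts about cats. It only lets the existing material implication $a \mid\!\sim b$ be stated as a sentence.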

To summarize: logic does not determine what follows from what (logic shouldn't presuppose anything about material contents and purely material inferential relations). Rather, logic gives us a vocabulary to describe those relationships among the material contents.[7] In the beginning we may have had "It's a cat" $\mid\!\sim$ "It has four legs", but we didn't have a sentence saying that "If it's a cat, then it has four legs" until we introduced logical vocabulary. This put the inference into the form of a sentence (which can then itself be denied or taken as a premise, if we wanted to talk about our reasoning and have the kind of dialogue necessary for us to reform it).

This is both intuitive and radical at once. A pervasive idea, called logicism about reasons, is that logic underwrites or constitutes what good reasoning is.[8] This post has taken the converse order of explanation, called logical expressivism: 'good reason' is conceptually-prior to 'logically-good reason'. We start with a notion of what follows from what simply due to the nonlogical meanings involved (this is contextual, such as "In the theology seminar" or "In a chemistry lab"), which is the data of a relation. Then, the functional role of logic is to make explicit such a relation. We can understand these relations both as something we create and something we discover, as described in the previous post which exposits (Brandom 2013).

Familiar logical rules need to be readjusted if they are to also grapple with arbitrary nonlogical content without presupposing its structure (see the Math section below for the syntax and model theory that emerges from such an enterprise). However, the wide variety of existing logics can be thought of as being perfectly appropriate for explicitating specific relations with characteristic structure.[9]

There are clear benefits to knowing one is working in a domain with monotonic structure (such as being able to make judgments in a context-free way): for this reason, when we artificially (by fiat) give semantics to expressions, as in mathematics, we deliberately stipulate such structure. However, it is wishful thinking to impose this structure on the semantics of natural language, which is conceptually prior to our formal languages.

Broader consequences

Resolving tensions in how we think about science

The logicist idea that our judgments are informal approximations of covertly logical judgments is connected to the role of scientific judgments, which are prime candidates for the precise, indefeasible statements we were approximating. One understands what someone means by "Cats have four legs" by translating it appropriately into a scientific idiom via some context-independent definition. And, consequently, one hasn't really said something if such a translation isn't possible.

Scientific foundations

The overwhelming success of logic and mathematics can lead to blind spots in areas where these techniques are not effective: concepts which are governed by norms which are resistant to formalization, such as ethical and aesthetic questions. Relegating these concepts to being subjective or arbitrary, as an explanation for why they lie outside the scope of formalization, can implicitly deny their significance. Scientific objectivity is thought to minimize the role of normative values, yet this is in tension with a begrudging acknowledgment that the development of science hinges essentially on what we consider to be 'significant', what factors are 'proper' to control for, what metrics 'ought' to be measured. In fact, any scientific term, if pressed hard enough, will have a fuzzy boundary that must be socially negotiated. For example, a statistician may be frustrated that 'outlier' has no formal definition, despite the exclusion of outliers being essential to scientific practice.

Although the naive empiricist picture of scientific objectivity is repeatedly challenged by philosophers of science (e.g. Sellars, Feyerabend, Hanson, Kuhn), the worldview and practices of scientists are unchanged. However, logical expressivism offers a formal, computational model for how we can reason with nonlogical concepts. This allows us to acknowledge the (respectable, essential) role nonlogical concepts play in science.

'All models are wrong, but some are useful'

This aphorism is at odds with the fact that science has a genuine authority, and scientific statements have genuine semantic content and are 'about the world'. It's tempting to informally cash out this aboutness in something like first-order models (an atomistic semantics: names pick out elements of some 'set of objects in the world', predicates pick out subsets, sentences are made true independently of each other). For example, the inference "It's a cat" $\vdash$ "It's a mammal" is explained as a good inference because the set of cats is a subset of the set of mammals. This can be seen as an explanation for why any concepts of ours (that actually refer to the world) have monotonic / transitive inferential relations. Any concept of "cat" which has a nonmonotonic inferential profile must be informal and therefore 'just a way of talking'. It can't be something serious, such as picking out the actual set of things which are cats. Thus, we can't in fact have actually good reasons for these inferences, though we can imagine there are good reasons which we were approximating.

Deep problems follow from this worldview, such as the pessimistic induction about the failures of past scientific theories (how can we refer to electrons if future scientists inevitably will show that there exists nothing that has the properties we ascribe to 'electron'?) and the paradox of analysis (if our good inferences are logically valid, how can they be contentful?). Some vocabulary ought to be thought of in this representational, logical way, but one's general story for semantics must be broader.[10]

Black box science

Above I argued for a theory-laden (or norm-laden) picture of science; however, the role of machine learning in science is connected to a 'theory-free' model of science: a lack of responsibility in interrogating the assumptions that go into ML-produced results follows from a belief that ML instantiates the objective ideal of science. To the extent this view of science is promoted as a coherent ideal we strive for, there will be a drive towards handing scientific authority to ML, despite the fact it often obfuscates rather than eliminates the dependence of science on nonlogical concepts and normativity. Allowing such norms to be explicit and rationally criticized is an essential component for the practice of science, thus there will be negative consequences if scientific culture loses this ability in exchange for models with high predictive success (success in a purely formal sense, as the informal interpretation of the predictions and the encoding of the informally-stated assumptions are precisely what make the predictions useful).

AI Safety

We can expect that AI will be increasingly used to generate code. This code will be inscrutably complex,[11] which is risky if we are worried about malicious or unintentional harm potentially hidden in the code. One way to address this is via formal verification: one writes a specification (in a formal language, such as dependent-type theory) and only accepts programs which provably meet the specification. However, something can seem like what we want (we have a proof that this program meets the specification of "this robot will make paperclips") but turns out to not be what we want (e.g. the robot does a lot of evil things in order to acquire materials for the paperclips). This is the classic rule-following paradox, which is a problem if one takes a closed, atomistic approach to semantics (one will always realize one has 'suppressed premises' if the only kind of validity is logical validity). We cannot express an indefeasible intention in a finite number of logical expressions. Our material concepts do not adhere to structural principles of monotonicity and transitivity which will be taken for granted by a logical encoding.

Logical expressivism supports an open-ended semantics (one can effectively change the inferential role of a concept by introducing new concepts). However, its semantics is less deterministic[12] and harder to compute with than traditional logics; future research may show these to be merely technological issues which can be mitigated while retaining the expressive capabilities necessary for important material concepts, e.g. ethical concepts.

Applied mathematics and interoperable modeling

A domain expert approaches a mathematician for guidance,[13] who is able to reframe what the expert was saying in a way that makes things click. However, if the mathematician is told a series of nonmonotonic or nontransitive "follows from" statements, it's reasonable for the mathematician to respond "you've made some sort of mistake", or "your thinking must be a bit unclear", or "you were suppressing premises". This is because traditional logical reasoning is incapable of representing material concepts. The know-how of the expert can't be put into such a formal system. However, we want to build technology that permits collaboration between domain experts of many different domains, not merely those who trade in concepts which are crystallized enough to be faithfully captured by definitions of the sort found in mathematics. Thus, acknowledgement of formal (but not logical) concepts is important for those who wish to work on the border of mathematics and application (particularly in domains studied by social sciences and the humanities).

A vision for software

These ideas can be implemented in software, which could lead to an important tool for scientific communication, where both formal and informal content need to be simultaneously negotiated at scale in principled, transparent ways.

One approach to formalization in science is to go fully formal and logical, e.g. encoding all of our chemical concepts within dependent type theory. If we accept that our concepts will be material rather than logical, this will seem like a hopeless endeavor (though still very valuable in restricted contexts). On the opposite end of the spectrum, current scientific communication works best through face-to-face conversations, lectures, and scientific articles. Here we lack the formality to reason transparently and at scale.

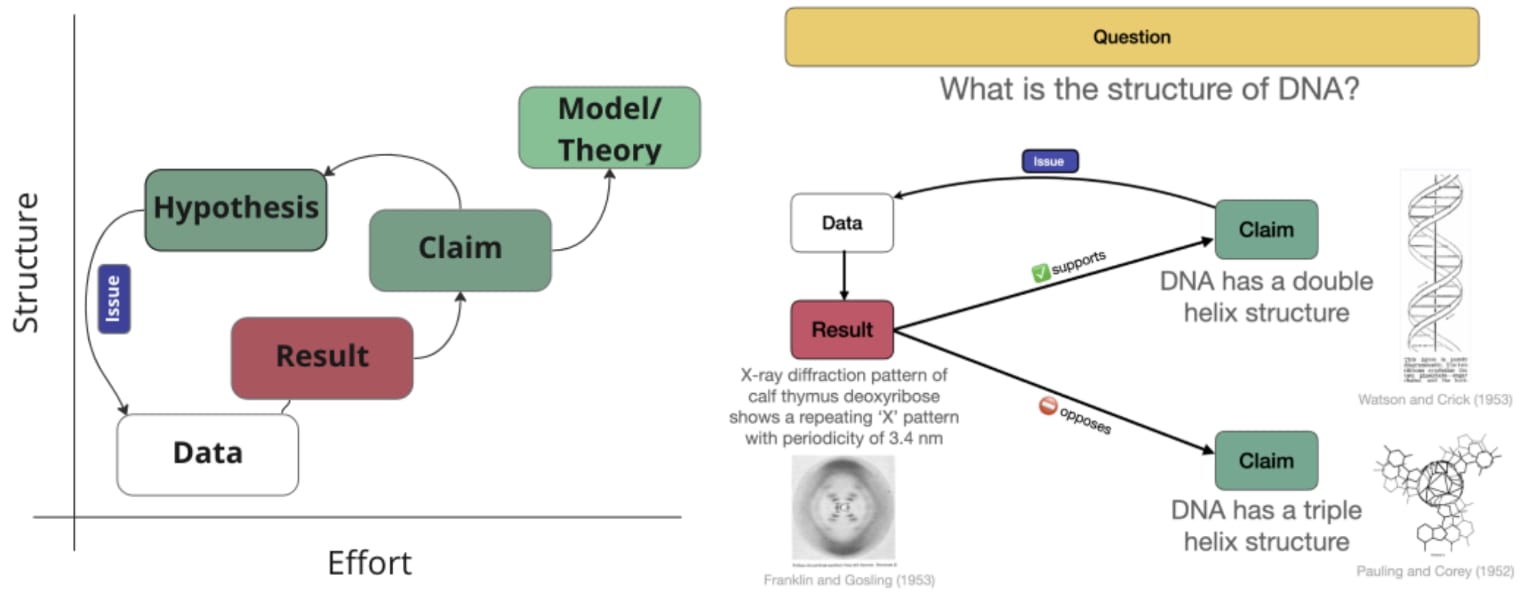

Somewhere in between these extremes lie discourse graphs: these are representations which fix an ontology for discourse that includes claims, data, hypotheses, results, support, and opposition. However, the content of these claims is expressed in natural language, preventing any reliable mechanized analysis.

(Figure reproduced from here)

In a future post, I will outline a vision for expressing these building blocks of theories, data, claims, and supporting/opposition relationships in the language of logical expressivism (in terms of vocabularies, interpretations, and inferential roles, as described below). This approach will be compositional (common design patterns for vocabularies are expressible via universal properties) and formal, in the sense of the meaning of some piece of data (or some part of a theory or claim) being amenable to mechanized analysis via computation of its inferential role. This would make explicit how the meaning of the contents of our claims depends on our data, and dually how the meaning of our data depends on our theories.

Math

This section is largely an exposition of material in Reasons for Logic, Logic for Reasons.

There is much to say about the mathematics underlying logical expressivism, and there is a lot of interesting future work to do. A future blog post will methodically go over this, but this section will just give a preview.

Vocabularies

An implication frame (or: vocabulary) is the data of a relation, i.e. a lexicon $\mathcal{L}$ (a set of claimables: things that can be said) and a set of incoherences, $\mathbb{I} \subseteq \mathcal{P}(\mathcal{L}) \times \mathcal{P}(\mathcal{L})$, i.e. the good implications where it is incoherent to deny the conclusions while accepting the premises.
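As a data structure, an implication frame can be sketched in Julia as a lexicon plus a set of listed incoherences, with containment added on request. The `MiniFrame` type below is a hypothetical stand-in, not the `ImpFrame` type of the author's implementation. It is instantiated with the same data as the `ImpFrame` call for the vocabulary $C$ later in this section.

```julia
# Hypothetical mini-model of an implication frame (not the author's ImpFrame type).
const Imp = Tuple{Set{Symbol},Set{Symbol}}
imp(Γ, Δ) = (Set{Symbol}(Γ), Set{Symbol}(Δ))

struct MiniFrame
    lexicon::Vector{Symbol}
    base::Set{Imp}       # explicitly listed incoherences
    containment::Bool    # whether overlapping sequents are automatically incoherent
end

subsets(xs) = [Set{Symbol}(xs[[isodd(k >> (i - 1)) for i in eachindex(xs)]]) for k in 0:(2^length(xs) - 1)]

# Materialize the full set of incoherences (good implications) over the lexicon.
function incoherences(F::MiniFrame)
    good = Set{Imp}()
    for Γ in subsets(F.lexicon), Δ in subsets(F.lexicon)
        if (Γ, Δ) in F.base || (F.containment && !isempty(intersect(Γ, Δ)))
            push!(good, (Γ, Δ))
        end
    end
    return good
end

# Same data as the cat vocabulary C: q = "has four legs", r = "lost a leg".
C = MiniFrame([:q, :r], Set{Imp}([imp([], [:q]), imp([], [:q, :r]), imp([:q, :r], [])]), true)
println(length(incoherences(C)))   # 10
```

Of the 16 candidate implications over $\{q, r\}$, 7 are good by containment and 3 are listed explicitly.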

Given any base vocabulary $(\mathcal{L}_0, \mathbb{I}_0)$, we can introduce a logically-elaborated vocabulary whose lexicon includes $\mathcal{L}_0$ but is also closed under $\neg$, $\land$, $\lor$, and $\to$. The $\mathbb{I}$ of the logically-elaborated relation is precisely $\mathbb{I}_0$ when restricted to the nonlogical vocabulary (i.e. logical vocabulary must be harmonious), and the following sequent rules indicate how the goodness of implications including logical vocabulary is determined by implications without logical vocabulary.

The double bars are bidirectional meta-inferences: thus they provide both introduction and elimination rules for each connective being used as a premise or a conclusion. They are quantified over all possible sets $\Gamma$ and $\Delta$. The top of each rule makes no reference to logical vocabulary, so the logical expressions can be seen as making explicit the implications of the nonlogical vocabulary.

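Vocabularies can be given a semantics based on implicational roles, where the role of an implication $\Gamma \mid\!\sim \Delta$ is the set of implications $\Gamma' \mid\!\sim \Delta'$ which, when combined with it, yield a good implication:

$$\mathrm{RSR}(\Gamma \mid\!\sim \Delta) = \{\, (\Gamma', \Delta') \;:\; (\Gamma \cup \Gamma', \Delta \cup \Delta') \in \mathbb{I} \,\}$$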

The role of an implication can also be called its range of subjunctive robustness.

To see an example, first let's remind ourselves of our $q$ ("The cat has four legs") and $r$ ("The cat lost a leg") example, a vocabulary which we'll call $C$:

The role of $q$ (i.e. of $\mid\!\sim q$) in vocabulary $C$ is the set of all 16 possible implications except for $r \mid\!\sim$ and $r \mid\!\sim q$.

The role of a set of implications is defined as the intersection of the roles of each element:

$$\mathrm{RSR}(X) = \bigcap_{x \in X} \mathrm{RSR}(x)$$
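The role computation for $C$ can be checked by brute force. The Julia sketch below uses hypothetical names and the same data for $C$ as the `ImpFrame` call later in this section. It enumerates all 16 candidate implications over $\{q, r\}$. It computes the role of $\mid\!\sim q$ as the set of side implications $(\Gamma', \Delta')$ for which $(\Gamma \cup \Gamma', \Delta \cup \Delta')$ is good. For a set of implications it takes the intersection of their roles.

```julia
const Imp = Tuple{Set{Symbol},Set{Symbol}}
imp(Γ, Δ) = (Set{Symbol}(Γ), Set{Symbol}(Δ))
subsets(xs) = [Set{Symbol}(xs[[isodd(k >> (i - 1)) for i in eachindex(xs)]]) for k in 0:(2^length(xs) - 1)]

# Vocabulary C: lexicon {q, r}, three listed incoherences, plus containment.
const LEX = [:q, :r]
const BASE = Set{Imp}([imp([], [:q]), imp([], [:q, :r]), imp([:q, :r], [])])
isgood(Γ, Δ) = !isempty(intersect(Γ, Δ)) || (Γ, Δ) in BASE

# All 16 candidate implications over the lexicon.
const ALL = Set{Imp}((Γ, Δ) for Γ in subsets(LEX) for Δ in subsets(LEX))

# Role (range of subjunctive robustness) of one implication: the side contexts
# Γ′ |~ Δ′ such that Γ ∪ Γ′ |~ Δ ∪ Δ′ is good.
role(i::Imp) = Set{Imp}(j for j in ALL if isgood(union(i[1], j[1]), union(i[2], j[2])))

# Role of a set of implications: intersection of the members' roles.
role(X::Set{Imp}) = reduce(intersect, (role(i) for i in X); init = ALL)

r_q = role(imp([], [:q]))
println(length(r_q))          # 14
println(setdiff(ALL, r_q))    # the two excluded implications: r |~  and  r |~ q
```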

The power set of implications for a given lexicon has a quantale structure under the following operation:

Roles are naturally combined via a (dual) operation:

A pair of roles (a premisory role and a conclusory role) is called a conceptual content. To see why pairs of roles are important, consider how the sequent rules for logical connectives are quite different for introducing a logically complex term on the left vs the right of the turnstile; in general, the inferential role of a sentence is different depending on whether it is being used as a premise or a conclusion. Any sentence $a$ has its premisory and conclusory roles as a canonical conceptual content, written in typewriter font:

$$\mathtt{a} = \big(\mathrm{RSR}(a \mid\!\sim),\ \mathrm{RSR}(\mid\!\sim a)\big)$$
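A brute-force Julia sketch of canonical contents follows. It is self-contained, uses hypothetical names, and takes the same data for $C$ as the `ImpFrame` call later in this section. It pairs the role of $a \mid\!\sim$ with the role of $\mid\!\sim a$. For $q$ in $C$ the two roles differ even in size, which illustrates why premisory and conclusory roles are tracked separately.

```julia
const Imp = Tuple{Set{Symbol},Set{Symbol}}
imp(Γ, Δ) = (Set{Symbol}(Γ), Set{Symbol}(Δ))
subsets(xs) = [Set{Symbol}(xs[[isodd(k >> (i - 1)) for i in eachindex(xs)]]) for k in 0:(2^length(xs) - 1)]

# Vocabulary C: lexicon {q, r}, listed incoherences, plus containment.
const LEX = [:q, :r]
const BASE = Set{Imp}([imp([], [:q]), imp([], [:q, :r]), imp([:q, :r], [])])
isgood(Γ, Δ) = !isempty(intersect(Γ, Δ)) || (Γ, Δ) in BASE
const ALL = Set{Imp}((Γ, Δ) for Γ in subsets(LEX) for Δ in subsets(LEX))

# Role of an implication: side contexts under which it stays good.
role(i::Imp) = Set{Imp}(j for j in ALL if isgood(union(i[1], j[1]), union(i[2], j[2])))

# Canonical content of a claimable: (premisory role, conclusory role).
content(a::Symbol) = (role(imp([a], [])), role(imp([], [a])))

prem_q, conc_q = content(:q)
println(length(prem_q), " ", length(conc_q))   # 12 14
```

For example, adding the side premise $r$ keeps $q \mid\!\sim$ good (since $q, r \mid\!\sim$), but does not keep $\mid\!\sim q$ good (since $r \not\mid\!\sim q$).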

Below are recursive semantic formulas for logical connectives: given arbitrary conceptual contents $\mathtt{A}$ and $\mathtt{B}$, we define the premisory and conclusory roles of logical combinations of $\mathtt{A}$ and $\mathtt{B}$. Because $\mathrm{RSR}$ is an operation that depends on all of $\mathbb{I}$, this is both a compositional and a holistic semantics.

| Connective | Premisory Role | Conclusory Role |

Note: each cell in this table corresponds directly to a sequent rule further above, where combination of sentences within a sequent corresponds to $\sqcup$, and multiple sequents are combined via $\cap$.

There are other operators we can define on conceptual contents, such as and .

Given two sets of conceptual contents, we can define content entailment, written $\Vdash$:

A preliminary computational implementation (in Julia, available on GitHub) supports declaring implication frames, computing conceptual roles / contents, and computing the logical combinations and entailments of these contents. This can be used to demonstrate that this is a supraclassical logic:[14] this semantics validates all the tautologies of classical logic while also giving one the ability to reason about the entailment of nonlogical contents (or combinations of both logical and nonlogical contents).

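```julia
using Test
# Assumes the ImpFrame, contents, ⊩ and ⊮ definitions from the Julia implementation are loaded.

C = ImpFrame([[]=>[:q], []=>[:q,:r], [:q,:r]=>[]], [:q,:r]; containment=true)
𝐪, 𝐫 = contents(C) # canonical contents for the bearers q and r
∅ = nothing # empty set of contents
@test ∅ ⊩ (((𝐪 → 𝐫) → 𝐪) → 𝐪) # Peirce's law
@test ∅ ⊮ ((𝐪 → 𝐫) → 𝐪) # not a tautology (unlike Peirce's law)
```

Interpretations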

We can interpret a lexicon $\mathcal{L}_A$ in another vocabulary $B$. An interpretation function $f$ between vocabularies assigns conceptual contents in $B$ to sentences of $\mathcal{L}_A$. We often want the interpretation function to be compatible with the structure of the domain and codomain: it is sound if for any candidate implication $\Gamma \mid\!\sim \Delta$ in $A$, we have $\Gamma \mid\!\sim_A \Delta$ iff $f(\Gamma) \Vdash_B f(\Delta)$.

To see an example of interpretations, let's first define a new vocabulary $S$ with lexicon $\{x, y, z\}$:

- $x$: "It started in state $s$"

- $y$: "It's presently in state $s$"

- $z$: "There has been a net change in state".

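$S$ claims it is part of our concept of 'state' that something stays in a given state, unless its state has changed (hence there is a similar nonmonotonicity to the one in $C$, but now with $x \mid\!\sim y$ defeated by $z$). We can understand what someone is saying by $q$ or $r$ in terms of interpreting these claimables in $S$. The interpretation function $f(q) = \mathtt{x}^+ \sqcup \mathtt{y}$ and $f(r) = \mathtt{x}^+ \sqcup \mathtt{z}$ is sound, where $\mathtt{x}^+$ is the content whose premisory and conclusory roles are both the premisory role of $x$. We can offer a full account of what we meant by our talk about cats and legs in terms of the concepts of states and change.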
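The nonmonotonicity in $S$ can be confirmed directly. This Julia sketch hard-codes the listed incoherences from the `ImpFrame` call for `S` in the code further below ($x \mid\!\sim y$; $x \mid\!\sim y, z$; $x, y, z \mid\!\sim$) plus containment. The function name is hypothetical.

```julia
# Listed incoherences of S, mirroring the ImpFrame call for S (plus containment below).
const S_BASE = Set([(Set([:x]), Set([:y])),
                    (Set([:x]), Set([:y, :z])),
                    (Set([:x, :y, :z]), Set{Symbol}())])

function good_S(Γ, Δ)
    P, C = Set{Symbol}(Γ), Set{Symbol}(Δ)
    return !isempty(intersect(P, C)) || (P, C) in S_BASE
end

println(good_S([:x], [:y]))       # started in state s |~ presently in state s
println(good_S([:x, :z], [:y]))   # ...defeated once a net change is added as a premise
```

The first call returns `true` and the second `false`: the same pattern of defeat as "It lost a leg" defeating "It has four legs" in $C$.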

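We could also start with a lexicon $\{q, r\}$ and interpretation function $f(q) = \mathtt{x} \land \mathtt{y}$ and $f(r) = \mathtt{x} \land \mathtt{z}$; we can compute what structure a vocabulary $D$ must have in order for us to see $C$ as generated by the interpretation of $\{q, r\}$ in $D$. Below, some implications are marked to indicate that it doesn't matter whether that implication is in $D$: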

We can do the same with $r$ and $f(r) = \mathtt{x} \land \mathtt{z}$.

Interpretation functions can be used to generate vocabularies via the `sound_dom` function in our software implementation, which constructs the domain of an interpretation function from the assumption that it is sound. The following code witnesses how we recover our earlier vocabulary $C$ via interpretation functions into the vocabularies $S$ and $D$ above.

```julia
# Continues the previous snippet (Test loaded, C already defined).
S = ImpFrame([[:x]=>[:y], [:x]=>[:y,:z], [:x,:y,:z]=>[]]; containment=true)
𝐱, 𝐲, 𝐳 = contents(S)
𝐱⁺ = Content(prem(𝐱), prem(𝐱)) # premisory role of x used on both sides
f = Interp(q = 𝐱⁺ ⊔ 𝐲, r = 𝐱⁺ ⊔ 𝐳)
@test sound_dom(f) == C

D = ImpFrame([[]=>[:x], []=>[:y], []=>[:x,:y], []=>[:x,:z],
              []=>[:y,:z], []=>[:x,:y,:z], [:x,:y,:z]=>[]]; containment=true)
𝐱, 𝐲, 𝐳 = contents(D)
f = Interp(q = 𝐱 ∧ 𝐲, r = 𝐱 ∧ 𝐳)
@test sound_dom(f) == C
```

Category theory

What role can category theory play in developing or clarifying the above notions of vocabulary, implicational role, conceptual content, interpretation function, and logical-elaboration? A lot of progress has been made towards understanding various flavors of categories of vocabularies (with 'simple' maps which send sentences to sentences as well as thinking of interpretation functions as morphisms) with intuitive constructions emerging from (co)-limits. The semantics of implicational roles is closely related to Girard's phase semantics for linear logic. Describing this preliminary work is also left to a sequel post.

- ^

The goodness of this inference is conceptually prior to any attempts to come up with formal definitions of 'east' and 'west' such that we can recover the inferences we already knew to be good.

- ^

This is an inference you must master in order to grasp the concept of 'cat'. If you were to teach a child about cats, you'd say "Cats have four legs"; our theory of semantics should allow making sense of this statement as good reasoning, even if in certain contexts it might be proper to say "This cat has fewer than four legs".

- ^

Not much hinges on whether the empty sequent, $\mid\!\sim$ (nothing on either side), is counted as a good inference or not. Some math works out cleaner if we take it to be a good implication, so we do this by default.

- ^

Logic shouldn't presume any structure of nonlogical content, even cautious monotonicity, i.e. from $\Gamma \mid\!\sim A$ and $\Gamma \mid\!\sim B$ one is tempted to say $\Gamma, A \mid\!\sim B$ (the inference to $B$ might be infirmed by some arbitrary claimable, but at least it should be undefeated by anything which was already a consequence of $\Gamma$). By rejecting even cautious monotonicity, this becomes more radical than most approaches to nonmonotonic logic; however, there are plausible cases where making something explicit (taking a consequence of $\Gamma$, which was only implicit in $\Gamma$, and turning it into an explicit premise) changes the consequences that are properly drawn from $\Gamma$.

- ^

We might think of logical symbols as arbitrary, thinking one can define a logic where $\neg$ plays the role of conjunction. However, in order to actually be a negation operator (in order for someone to mean negation by their $\neg$ operator), one doesn't have complete freedom. There is a responsibility to the past usage of $\neg$, as described in a previous post.

- ^

The sides of the turnstile represent acceptance and rejection, according to $\Gamma \mid\!\sim \Delta$ meaning "Incoherent to accept all of $\Gamma$ while rejecting all of $\Delta$", so the duality of negation comes from the duality of acceptance and rejection.

- ^

Thoroughgoing relational thinking, a semantic theory called inferentialism, would say the conceptual content of something like "cat" or "electron" or "ought" is exhaustively determined by its inferential relations to other such contents.

- ^

We have a desire to see the cat inference as good because of the logical inference "It is a cat", "All cats have four legs" $\vdash$ "It has four legs". Under this picture, the original 'good' inference was only superficially good; it was technically a bad inference because of the missing premise. But in that case, a cat that loses a leg is no longer a cat. Recognizing this as bad, we start iteratively adding premises ad infinitum ("It has not lost a leg", "It didn't grow a leg after exposure to radiation", "It was born with four legs", etc.). One who thinks logic underwrites good reasoning is committed to there being some final list of premises for which the conclusion follows. Taking this seriously, none of our inferences (even our best scientific ones, which we accept may turn out to be shown wrong by future science) are good inferences. We must wonder what notion of 'good reasoning' logic as such is codifying at all.

- ^

In Reasons for Logic, Logic for Reasons, various flavors of propositional logic (classical, intuitionistic, LP, K3, ST, TS) are shown to make explicit particular types of relations.

- ^

Logical expressivists have a holistic theory of how language and the (objective) world connect. Chapter 4 of Reasons for Logic, Logic for Reasons describes an isomorphism between the pragmatist idiom of "$\Gamma$ entails $\Delta$ because it is normatively out of bounds to reject $\Delta$ while accepting $\Gamma$" and a semantic, representationalist idiom of "$\Gamma$ entails $\Delta$ because it is impossible for the world to make $\Gamma$ true while making $\Delta$ false." This latter kind of model comes from Kit Fine's truthmaker semantics, which generalizes possible world semantics in order to handle hyperintensional meaning (we need something finer-grained in order to distinguish "1+1=2" from other necessary truths, such as a claim that a particular number is irrational, since they are true in all the same possible worlds).

- ^

At Topos, we believe this is not necessary. AI should be generating structured models (which are intelligible and composable). If executable code is needed, we can give a computational semantics to an entire class of models, e.g. mass-action kinetics for Petri nets.

- ^

See the Harman point: the perspective shift of logical expressivism means that logic does not tell us how to update our beliefs in the face of contradiction; this directionality truly comes from the material inferential relations which we must supply. Logical expressivism shows that the consequence relation is deterministic (there is a unique consequence set to compute from any set of premises) only under very specific conditions.

- ^

Many wonderful examples in David Spivak's "What are we tracking?" talk.

- ^

Caveat: the base vocabulary must satisfy containment for this property to hold. When the only good implications in the base vocabulary are those forced by containment, one precisely recovers the classical logic consequence relation.

5 comments


comment by cubefox · 2024-10-16

That's an interesting theory, thanks for this post!

However... We arguably already have a theory of material inference. Probability theory! Why do we need another one? Unlike Brandom's material logic, probability theory is arguably a) relatively simple in its rules (though the Kolmogorov axioms aren't explicitly phrased in terms of inference), and b) it supports degrees of belief and degrees of confirmation. Brandom's theory assumes all beliefs and inferences are binary.

↑ comment by Kris Brown (kris-brown) · 2024-10-16T17:21:48.172Z

Thanks for the comment! Probability theory is a natural thing to reach for in order to recover defeasibility while still upholding "logicism about reasons" (seeing the inferences as underwritten by a logical formalism). And of course it's a very useful calculational tool (as is classical logic)! But I don't think it can play the role that material inference plays in this theory. I think a whole post would be needed to make this point clearly[1], but I will try in a comment.

Probability theory is still a formal calculus, which is completely invariant to substituting nonlogical vocabulary for other nonlogical vocabulary (this is good - exactly what we want for a formal calculus). However, the semantics for such nonlogical vocabulary must always be presupposed before one can apply such formal reasoning. The sentences to which credences are attached must be taken to be already conceptually contentful, in advance of playing the role in reasoning that Bayesians reconstruct. This is problematic as a foundation for inferentialists who are concerned with the inferences that are constitutive of what 'cat' means within sentences like "Conditioning on it being a cat, it has four legs".

Footnote 8 applies equally to attempts to read what we really mean to say as covert probabilistic claims. Laws of probability theory still impose a structure on relations between material concepts (there are still forms of monotonicity and transitivity), whereas the logical-expressivist order of explanation argues that the theoretician isn't entitled to a priori impose such a structure on all material concepts: rather, their job is to describe them.
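One way to make the "forms of monotonicity and transitivity" concrete (our reading; the comment does not spell them out) is the probability-1 case: if P(B|A) = 1 then P(B|A∧C) = 1 whenever P(A∧C) > 0, and if P(B|A) = P(C|B) = 1 then P(C|A) = 1 whenever P(A) > 0. Below that threshold the pattern is defeasible. The Python sketch below checks both laws on randomly generated distributions over the eight valuations of three atoms, using exact `Fraction` arithmetic, and builds one made-up distribution where P(B|A) = 9/10 but P(B|A∧C) = 0. All names are illustrative.

```python
import random
from fractions import Fraction
from itertools import product

# Worlds are valuations of three atoms (a, b, c); events are predicates on worlds.
WORLDS = list(product([False, True], repeat=3))


def A(w): return w[0]
def B(w): return w[1]
def C(w): return w[2]


def prob(dist, event):
    """P(event) under a distribution given as {world: Fraction}."""
    return sum((p for w, p in dist.items() if event(w)), Fraction(0))


def cond(dist, event, given):
    """P(event | given), or None when P(given) = 0."""
    pg = prob(dist, given)
    if pg == 0:
        return None
    return prob(dist, lambda w: event(w) and given(w)) / pg


def random_distribution(rng, excluded):
    """Random distribution with zero mass on worlds where excluded(w) holds."""
    weights = {w: 0 if excluded(w) else rng.randint(0, 5) for w in WORLDS}
    total = sum(weights.values())
    if total == 0:
        return None
    return {w: Fraction(m, total) for w, m in weights.items()}


def probability_one_laws_hold(trials=1000, seed=0):
    rng = random.Random(seed)
    for _ in range(trials):
        # Monotonicity: P(B|A) = 1 forces P(B | A and C) = 1 (when defined).
        d = random_distribution(rng, lambda w: A(w) and not B(w))
        if d is not None and cond(d, B, lambda w: A(w) and C(w)) not in (None, 1):
            return False
        # Transitivity: P(B|A) = 1 and P(C|B) = 1 force P(C|A) = 1 (when defined).
        d = random_distribution(rng, lambda w: (A(w) and not B(w)) or (B(w) and not C(w)))
        if d is not None and cond(d, C, A) not in (None, 1):
            return False
    return True


# Below probability 1 the pattern is defeasible: P(B|A) = 9/10 but P(B | A and C) = 0.
counterexample = {w: Fraction(0) for w in WORLDS}
counterexample[(True, True, False)] = Fraction(9, 10)
counterexample[(True, False, True)] = Fraction(1, 10)
```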

Logical expressivism isn't committed to assuming inferences and beliefs are binary! There are plenty of friendly amendments to make - it's just that even in the binary, propositional case there's a lot of richness, insight, and work to be done.

- ^

Some of this is downstream of deeper conflicts between pragmatism and representationalism, so I don't see myself as making the kinds of arguments now that could cause that kind of paradigm shift.

↑ comment by cubefox · 2024-10-20T20:48:01.483Z

But if is "It's a cat" and is "It has four legs", and describes our beliefs (or more precisely, say, my beliefs at 5 pm UTC October 20, 2024), then . Which surely means is a materially good reason for . But , so the inference from to is still logically bad. So we don't have logicism about reasons in probability theory. Moreover, probability expressions are not invariant under substituting non-logical vocabulary. For example, if is "It has two legs", and we substitute with , then . Which can only mean the inference from to is materially bad.

Laws of probability theory still impose a structure on relations between material concepts (there are still forms of monotonicity and transitivity), whereas the logical-expressivist order of explanation argues that the theoretician isn't entitled to a priori impose such a structure on all material concepts: rather, their job is to describe them.

I think the axioms of probability can be thought of as being relative to material conceptual relations. Specifically, the additivity axiom says that the probabilities of "mutually exclusive" statements can be added together to yield the probability of their disjunction. What does "mutually exclusive" mean? Logically inconsistent? Not necessarily. It could simply mean materially inconsistent. For example, "Bob is married" and "Bob is a bachelor" are (materially, though not logically) mutually exclusive. So their probabilities can be added to give the probability of their disjunction. (This arguably also solves the problem of logical omniscience; see here.)
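This suggestion can be sketched in Python under stated assumptions: there are two atomic sentences, worlds are truth-value assignments, and the material incompatibility between "Bob is married" and "Bob is a bachelor" is imposed (via a hypothetical constraint function) by discarding violating worlds before credences are assigned. Relative to the admissible worlds the two sentences are mutually exclusive, so additivity applies to their disjunction, even though a world where both hold remains logically possible. The credence values are made up for illustration.

```python
from fractions import Fraction
from itertools import product

ATOMS = ("married", "bachelor")


def materially_admissible(world: dict) -> bool:
    # Hypothetical material incompatibility: nobody is both married and a bachelor.
    return not (world["married"] and world["bachelor"])


# All logically possible worlds, then only the materially admissible ones.
LOGICAL_WORLDS = [dict(zip(ATOMS, bits)) for bits in product([False, True], repeat=len(ATOMS))]
ADMISSIBLE = [w for w in LOGICAL_WORLDS if materially_admissible(w)]


def credence(world: dict) -> Fraction:
    # Illustrative credences: married 1/2, bachelor 1/4, neither 1/4.
    if world["married"]:
        return Fraction(1, 2)
    if world["bachelor"]:
        return Fraction(1, 4)
    return Fraction(1, 4)


def P(event) -> Fraction:
    """Probability of an event, summed over materially admissible worlds only."""
    return sum((credence(w) for w in ADMISSIBLE if event(w)), Fraction(0))


def married(w): return w["married"]
def bachelor(w): return w["bachelor"]
def either(w): return married(w) or bachelor(w)


print(P(either), P(married) + P(bachelor))  # 3/4 3/4
```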

comment by alex.herwix · 2024-10-24T08:13:39.323Z

Thank you for an interesting post! I have only skimmed it so far and haven't really dug into the mathematics section, but the way you are framing logic somewhat reminds me of Dewey, J. (1938). Logic: The Theory of Inquiry. Henry Holt and Company, Inc.

Are you by any chance familiar with this work and could elaborate on possible continuities and discontinuities?

↑ comment by Kris Brown (kris-brown) · 2024-10-28T13:53:15.663Z

Hi, sorry, I'm not directly familiar with that Dewey work. As far as classical American pragmatism goes, I can only point to Brandom's endorsement of Cheryl Misak's transformative new way of looking at it in Lecture 4 of this course, which might be helpful for drawing this connection.