Why I'm Not a Utilitarian in Modern America

post by DanB · 2022-04-24T21:43:37.586Z · 5 comments

Contents

Variation 0 - the Happy World

Variation 1 - Hell is Other People

Variation 2 - Flipping the Moral Coin

Variation 3 - Multiple Identifiable Partners

Variation 4 - Large Partner Counts

Variation 5 - the Ethical Elevator

Variation 6 - the Free-Rider Problem

Do These Models Apply to Modern-World Ethical Problems?

Many people in the rationalist / EA community express admiration and loyalty to utilitarian ethics. This makes me uncomfortable at parties, because I reject the idea that utilitarian ethics is a workable philosophy for the modern American. This essay explains my position.

I develop the argument by analyzing how common moral intuitions respond to some variations of the Prisoner's Dilemma (PD). If you're not familiar with the PD, please read the wiki page before continuing.

When used as an analogy for real-world ethical behavior, cooperation in the PD is a stand-in for any kind of altruistic action. It might represent telling the truth in a situation where it is socially costly to do so. It might mean obeying a law, even though there is little chance of being caught; or it might mean disobeying an unjust law and facing the consequences. In the most dramatic case, it might mean risking one's life to defend society against a hostile invader. Defection in the PD is the opposite: it represents doing whatever is personally beneficial, at the expense of the greater good. Crucially, cooperation in the PD is utilitarian, because it requires the individual to make a modest sacrifice, for the sake of a greater benefit to other humans.
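
As a rough illustration of that last claim, here is a minimal Python sketch. The payoff numbers (temptation 5, reward 3, punishment 1, sucker's payoff 0) are an assumption: they are the conventional textbook values, not figures taken from this post, and the names `PAYOFF`, `my_payoff` and `total_welfare` are hypothetical. The sketch checks that, whatever the partner does, cooperating costs me something but raises the combined payoff.

```python
# Assumed textbook PD payoffs (not from the post): temptation 5, reward 3,
# punishment 1, sucker's payoff 0.
# Keys are (my_move, partner_move); values are (my_payoff, partner_payoff).
PAYOFF = {
    ("C", "C"): (3, 3),  # mutual cooperation: both get the reward
    ("C", "D"): (0, 5),  # I cooperate, partner defects: sucker vs temptation
    ("D", "C"): (5, 0),  # I defect, partner cooperates
    ("D", "D"): (1, 1),  # mutual defection: both get the punishment
}


def my_payoff(my_move, partner_move):
    return PAYOFF[(my_move, partner_move)][0]


def total_welfare(my_move, partner_move):
    """Combined payoff of both players: the 'greater good' in this toy model."""
    return sum(PAYOFF[(my_move, partner_move)])


for partner_move in ("C", "D"):
    # My personal cost of cooperating instead of defecting...
    cost = my_payoff("D", partner_move) - my_payoff("C", partner_move)
    # ...versus the resulting change in combined welfare.
    gain = total_welfare("C", partner_move) - total_welfare("D", partner_move)
    print(f"partner plays {partner_move}: my cost {cost}, net welfare gain {gain}")
```

With these numbers it prints `partner plays C: my cost 2, net welfare gain 1` and `partner plays D: my cost 1, net welfare gain 3`: a modest personal sacrifice that leaves the pair better off either way.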

Variation 0 - the Happy World

The first variation is just a PD with a single partner who always cooperates. In this world, everything is happy and cozy; my moral intuition has no problem with reciprocating cooperation - indeed it demands that I do so. This scenario demonstrates the first of the two basic ethical rules that I, and I imagine most other people, will follow: Don't be a Jerk. If someone is cooperating with you, you should always cooperate with them in return. If real life were similar to this scenario, I would have no problem following utilitarian ethics.

Variation 1 - Hell is Other People

This scenario is the opposite of the previous one. Here my partner defects all the time, no matter what I do. I might initially try to elicit cooperation by cooperating at random moments despite my partner's past defections, but in the long run I will begin to defect as well.

This scenario illustrates the second of my two moral intuitions: Don't be a Sucker. If someone refuses to cooperate with me, I refuse to cooperate with them. I refuse to be taken advantage of by predators. This refusal to cooperate in the face of hostility already shows the problem with utilitarian ethics: I am no longer willing to act to increase the common good. In this contrived situation, the notion of "common good" is no longer appealing to me, because there is only one person who benefits, and that person has become my enemy.

I don't expect anyone to think that this simplistic scenario by itself is a problem for utilitarian ethics, because it is not a good model for real people in the real world. In the real world, there is a sense of a "common good" that refers to the vast ocean of strangers, with whom we've never interacted. But what do we owe those strangers?

Variation 2 - Flipping the Moral Coin

The third scenario is also played with a single partner, who again does not care whether I cooperate or defect. However, in this case the partner acts randomly: they cooperate with probability P.

This scenario is a bit more complex - what are my ethical obligations here? Certainly my response strategy should be some function of P, and the first two variations indicate how that function should look for extreme values of P. As P tends toward 1, my response should tend toward complete cooperation; as it tends toward 0, the opposite. Given these constraints, it seems that the simplest strategy for me is to just match my partner's cooperation rate. If they choose P=0.7, I'll cooperate 70% of the time; if they choose P=0.25, I'll cooperate 25% of the time.

I actually want to do a bit better than this. I am aware that human cognition is afflicted by a wide range of biases and distortions. I am afraid that these distortions will cause me to self-servingly estimate P to be lower than it actually is, in order to justify a lower level of reciprocal cooperation. So I will add an epsilon term, so that I cooperate with probability P+E, where E is some small number like 0.02. In other words, I do not want to be a sucker, but I want to make sure I don't accidentally become a jerk.
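
The mirroring rule from the last two paragraphs can be simulated in a few lines. This is a minimal sketch under stated assumptions: P is estimated from the partner's record so far, the estimate starts at 1.0 before any data (a goodwill prior that the post does not specify), and `play_variation_2` and `cooperation_probability` are hypothetical names. E is the post's example value of 0.02.

```python
import random

EPSILON = 0.02  # the post's example value for E


def cooperation_probability(p_estimate, epsilon=EPSILON):
    """Cooperate with probability P + E, clipped to [0, 1]."""
    return min(1.0, max(0.0, p_estimate + epsilon))


def play_variation_2(p_partner, rounds=10_000, seed=0):
    """Play against a partner who cooperates with fixed probability p_partner.

    P is estimated from the partner's record so far; before any data the
    estimate is 1.0 (an assumed goodwill prior, not specified by the post).
    Returns my overall cooperation rate.
    """
    rng = random.Random(seed)
    partner_coops = 0
    my_coops = 0
    for t in range(rounds):
        p_hat = partner_coops / t if t else 1.0
        if rng.random() < cooperation_probability(p_hat):
            my_coops += 1
        if rng.random() < p_partner:
            partner_coops += 1
    return my_coops / rounds


for p in (1.0, 0.7, 0.25, 0.0):
    print(p, round(play_variation_2(p), 3))
```

For P = 1 my cooperation rate comes out at exactly 1.0, and for the other values it should land near P + E (about 0.72, 0.27 and 0.02), so the extremes reproduce the behavior of Variations 0 and 1.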

Variation 3 - Multiple Identifiable Partners

In Variation 3, we consider a game where there are N partners. Each participant acts probabilistically, as in Variation 2, with probability of cooperation Pi. In each round, a random process picks out a participant for you to have a single PD interaction with.

In this Variation, N is relatively small, so that I can identify and remember each person's cooperation record. Also, the number of total interactions is large compared to N, so that over the course of the game, I get a good sample of information about each participant's history. As in Variation 2, there is no pattern to the individual participants' strategies, other than their differing Pi values. Some people are very cooperative, while others are very antagonistic.

Given this setup, I approach Variation 3 as if I were playing N simultaneous versions of Variation 2. I estimate each partner's parameter Pi, and then cooperate according to that value, or perhaps Pi+E, for that round. I'm doing something like social mirroring: if the group we're in is cooperative, I'll be cooperative; if not, not.
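
Variation 3's bookkeeping is just Variation 2's estimate kept separately for each partner. A minimal sketch, assuming random pairing each round, a 1.0 starting estimate for a partner I have never met (an assumed goodwill prior), and three hypothetical partners with Pi of 0.9, 0.5 and 0.1; `play_variation_3` is a hypothetical name.

```python
import random
from collections import defaultdict

EPSILON = 0.02  # small generosity margin E, as in Variation 2


def play_variation_3(p_values, rounds=30_000, seed=1):
    """N identifiable partners; partner i cooperates with probability p_values[i].

    I keep a separate record per partner (in effect, N copies of Variation 2)
    and cooperate with each one at roughly their estimated Pi + E.
    Returns my cooperation rate toward each partner.
    """
    rng = random.Random(seed)
    their_coops = defaultdict(int)  # partner -> times they cooperated with me
    games = defaultdict(int)        # partner -> times we have played
    my_coops = defaultdict(int)     # partner -> times I cooperated with them
    for _ in range(rounds):
        i = rng.randrange(len(p_values))  # random pairing for this round
        # Assumed goodwill prior of 1.0 for a partner with no record yet.
        p_hat = their_coops[i] / games[i] if games[i] else 1.0
        if rng.random() < min(1.0, p_hat + EPSILON):
            my_coops[i] += 1
        if rng.random() < p_values[i]:
            their_coops[i] += 1
        games[i] += 1
    return {i: my_coops[i] / games[i] for i in sorted(games)}


print(play_variation_3([0.9, 0.5, 0.1]))  # hypothetical partners
```

Each partner should end up receiving cooperation at roughly their own Pi plus E, which is the social mirroring described above.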

I am still following my two basic ethical rules, but have I violated utilitarian ethics? Is there now a clear sense of a "common good" whose well-being I am acting against when I defect?

Variation 4 - Large Partner Counts

Variation #4 is the same as #3, except that now N is large compared to the number of rounds. This means that we can no longer track each participant's cooperation record, since we generally do not face a specific partner often enough to estimate Pi reliably.

In this setting, there is a naive strategy based on the very natural principle of assuming goodwill. This principle suggests that in the early phase of a series of interactions, you should assume your partner is cooperative, and therefore cooperate yourself. Then, if the partner fails to reciprocate, you can switch to defection later on. If you play a large number of rounds with a single partner, this strategy does not cost very much, since the early phase is short compared to the full interaction.

But assuming goodwill in Variation 4 leads to a big problem: since I only interact a few times with each partner, I will end up cooperating all the time, even if everyone else is hostile. In particular, imagine a situation where every Pi is 0. In this case I will end up as a sucker again, since I don't have enough time to estimate Pi, and I assume it is high before learning otherwise.
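
The failure mode of assuming goodwill shows up in a small simulation. This sketch assumes the extreme case from the paragraph above (every Pi is 0) and a deliberately simple version of the strategy: cooperate with any partner who has not yet defected on me. The function name and the partner counts are hypothetical.

```python
import random


def goodwill_cooperation_rate(n_partners, rounds, seed=2):
    """'Assume goodwill': cooperate with anyone who has not yet defected on me.

    Every partner here always defects (every Pi = 0), so the ideal response
    would be to stop cooperating almost immediately.
    """
    rng = random.Random(seed)
    known_defectors = set()
    my_coops = 0
    for _ in range(rounds):
        partner = rng.randrange(n_partners)
        if partner not in known_defectors:
            my_coops += 1  # first encounter: extend the benefit of the doubt
        known_defectors.add(partner)  # Pi = 0, so this partner defects
    return my_coops / rounds


# Few partners (Variation 3-like): goodwill costs little.
print(goodwill_cooperation_rate(n_partners=5, rounds=1_000))
# Many partners (Variation 4): nearly every encounter is a first encounter.
print(goodwill_cooperation_rate(n_partners=1_000_000, rounds=1_000))
```

With 5 partners I cooperate at most 5 times in 1,000 rounds, but with a million partners almost every round is a first meeting, so the cooperation rate stays close to 1: the sucker outcome.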

A more resilient strategy here is to keep an aggregate estimate for the group Pg, the average of Pi. In each round, I cooperate according to this estimate. In some sense, this means I risk becoming a jerk, because if Pg is low but some Pi is high, I will unfairly punish that partner by defecting, even though they were willing to cooperate. Morally speaking, I am sad and regretful about that, but I consider it to be an unfortunate consequence of the design of the game.
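
Here is a minimal sketch of the aggregate strategy, assuming partners are drawn uniformly at random from a hypothetical population list and that, before any observations, I start from a goodwill estimate of 1.0. `play_variation_4` is a hypothetical name.

```python
import random

EPSILON = 0.02  # small generosity margin E


def play_variation_4(p_values, rounds=20_000, seed=3):
    """Many anonymous partners: keep one running estimate Pg for the group.

    Each round a partner is drawn at random from p_values; I cooperate with
    probability Pg + E, where Pg is the cooperation rate I have observed
    across all partners so far. Returns my overall cooperation rate.
    """
    rng = random.Random(seed)
    observed = cooperated = my_coops = 0
    for _ in range(rounds):
        # Assumed goodwill estimate of 1.0 only before any data.
        p_g = cooperated / observed if observed else 1.0
        if rng.random() < min(1.0, p_g + EPSILON):
            my_coops += 1
        partner_p = rng.choice(p_values)
        if rng.random() < partner_p:
            cooperated += 1
        observed += 1
    return my_coops / rounds


hostile_world = [0.0] * 1_000                  # hypothetical: every Pi is 0
mixed_world = [0.9] * 500 + [0.1] * 500        # hypothetical: half saints, half sinners
print(play_variation_4(hostile_world))
print(play_variation_4(mixed_world))
```

In the all-hostile world the cooperation rate should fall to roughly E, so the sucker problem is gone. In the mixed world it settles near 0.52 toward everyone, including the partners whose own Pi is 0.9; that is the jerk-like side effect described above.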

Variation 5 - the Ethical Elevator

In Variation 5, we imagine a large building made up of several floors of people, and each floor is playing its own game of Variation 4. The floors are all isolated from each other, but one player (me) has the option of moving between floors. How should I approach this situation?

As in Variation 4, there are too many people on each floor to estimate each individual Pi. But I can estimate the aggregate Pf - the average Pi of all the participants on a given floor. This gives me a good strategy. I spend enough time on each floor to get an estimate of Pf, and then I head to the floor with the highest Pf. Once I am there, I cooperate with probability Pf+E. This should give me the best possible outcome that is compatible with my feelings of ethical obligations.
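
The elevator strategy is a form of explore-then-commit: sample every floor, then move to the best one. A minimal sketch, assuming a fixed number of sample encounters per floor and hypothetical floors whose members all share one cooperation probability; the function names are hypothetical.

```python
import random

EPSILON = 0.02  # small generosity margin E


def estimate_floor(p_values, samples, rng):
    """Estimate a floor's Pf from `samples` random encounters there."""
    hits = 0
    for _ in range(samples):
        if rng.random() < rng.choice(p_values):
            hits += 1
    return hits / samples


def ride_the_elevator(floors, samples_per_floor=1_000, seed=4):
    """Visit every floor, then settle on the one with the highest estimated Pf.

    Returns (chosen floor index, my cooperation probability Pf + E there).
    """
    rng = random.Random(seed)
    estimates = [estimate_floor(f, samples_per_floor, rng) for f in floors]
    best = max(range(len(floors)), key=estimates.__getitem__)
    return best, min(1.0, estimates[best] + EPSILON)


floors = [[0.2] * 100, [0.8] * 100, [0.5] * 100]  # hypothetical floor populations
print(ride_the_elevator(floors))
```

With these floors it should pick floor index 1 and cooperate there with probability close to 0.82.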

This scenario illustrates a subtle and poignant aspect of the human condition. I, and I think most people, deeply want to be ethical: I want to go to a floor with a high level of cooperation and then become an upstanding member of that community. At the same time, I refuse to be a sucker. If I'm on a floor with a low level of cooperation, I'm not going to be especially altruistic myself, because I don't want to make big sacrifices for people who won't repay that goodwill.

Variation 6 - the Free-Rider Problem

Variation 6 is the same as #5, except now everyone can move between floors.

The key point here is that it is better to be on a high-cooperation floor, regardless of whether or not a participant chooses to cooperate himself. This is because cooperation in the PD is better for the greater good, so a floor where cooperation is more common will have better overall welfare. Thus, the players will migrate towards floors with higher overall Pf values, until all the floors are the same. In other words, this situation is going to revert back to Variation 4.
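
The claim that a high-cooperation floor is better for everyone, cooperators and defectors alike, can be checked with a short expected-payoff formula. This assumes conventional textbook payoffs (temptation 5, reward 3, punishment 1, sucker's payoff 0), which are not from the post, and that a partner's move is independent of mine; `expected_payoff` is a hypothetical name.

```python
# Assumed textbook payoffs (not from the post).
TEMPTATION, REWARD, PUNISHMENT, SUCKER = 5, 3, 1, 0


def expected_payoff(q, p_f):
    """Expected per-round payoff for a player who cooperates with probability q
    on a floor whose members cooperate with average probability p_f.
    Assumes the partner's move is independent of the player's."""
    return (q * p_f * REWARD                      # both cooperate
            + q * (1 - p_f) * SUCKER              # I cooperate, partner defects
            + (1 - q) * p_f * TEMPTATION          # I defect, partner cooperates
            + (1 - q) * (1 - p_f) * PUNISHMENT)   # both defect


for q in (0.0, 0.5, 1.0):  # pure defector, coin-flipper, pure cooperator
    low, high = expected_payoff(q, 0.2), expected_payoff(q, 0.8)
    print(f"q={q}: {low:.2f} on a Pf=0.2 floor, {high:.2f} on a Pf=0.8 floor")
```

With these payoffs the slope of the expected payoff in Pf is 4 - q, which is positive for every q, so every player prefers the higher floor. The output also shows the free-rider incentive: a pure defector on the Pf=0.8 floor earns 4.20, versus 2.40 for a pure cooperator on the same floor.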

This reversion is unfortunate from the perspective of justice. Let's imagine that in the early stages of the game, there was substantial variation between the Pf values for different floors. Some floors had a high density of helpful cooperators, while others were full of nasty defectors. Before people started to move, the altruistic floors achieved a high overall well-being, while the antagonistic floors did poorly. Thus, the situation manifested a principle of justice: virtuous people were rewarded, while vicious people were punished. However, once people can start to move between floors, the virtuous floors start to suffer from a free-rider problem: they attract people who aren't as cooperative, which reduces their welfare until it's the same as everywhere else.
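
The loss of justice can be put in numbers. This sketch assumes conventional textbook payoffs (temptation 5, reward 3, punishment 1, sucker's payoff 0; not from the post), random pairing within a large floor so that the two players in a pair act independently, and two hypothetical floors of 50 players each; `floor_welfare` is a hypothetical name.

```python
from statistics import mean

# Assumed textbook payoffs (not from the post).
TEMPTATION, REWARD, PUNISHMENT, SUCKER = 5, 3, 1, 0


def floor_welfare(members):
    """Average payoff per player per round on one floor.

    `members` lists each player's cooperation probability. Players are
    assumed to be paired at random on a large floor, so the two moves in a
    pair are treated as independent draws at the floor average p_f.
    """
    p_f = mean(members)
    return (p_f * p_f * REWARD                            # both cooperate
            + p_f * (1 - p_f) * (SUCKER + TEMPTATION)     # one of each: I get S or T
            + (1 - p_f) * (1 - p_f) * PUNISHMENT)         # both defect


virtuous = [0.9] * 50  # hypothetical high-cooperation floor
vicious = [0.1] * 50   # hypothetical low-cooperation floor
print(f"before moving: {floor_welfare(virtuous):.2f} vs {floor_welfare(vicious):.2f}")

mixed = virtuous[:25] + vicious[:25]  # after migration, both floors look like this
print(f"after moving:  {floor_welfare(mixed):.2f} on every floor")
```

Before anyone moves, the virtuous floor averages 2.89 per player and the vicious floor 1.29. Once the populations mix evenly, both floors sit at 2.25: the cooperators lose and the defectors gain.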

Do These Models Apply to Modern-World Ethical Problems?

As I mentioned above, I think the two principles of Don't be a Jerk and Don't be a Sucker are quite common to most humans. And I think I did the math right: the strategies I proposed properly implement those two rules. The remaining question is whether the simplified moral thought experiments have any relevance to the real world.

The assumption in these variations that PD partners behave randomly might seem especially suspect. Real people are complex ethical strategists who are constantly carrying out their own observations and analyses, and adapting their behavior as a result. They cannot be modeled as cooperating or defecting at random.

That is certainly a valid critique of the first variations. But in the later ones this issue becomes less of a concern, because most interactions are short. In this regime, the individual response strategies pursued by different partners are not very important, because only a few rounds are played with each partner. The only thing that matters is whether or not the partner leads off with cooperation.
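
A small illustration of why the partner's strategy stops mattering when interactions are short. The strategies below are standard textbook ones (tit-for-tat, and a "suspicious" variant that opens with defection); the function names and my move sequence are hypothetical, and each strategy sees only my past moves.

```python
def always_cooperate(my_past):
    return "C"


def always_defect(my_past):
    return "D"


def tit_for_tat(my_past):
    """Open with C, then repeat whatever I did last round."""
    return my_past[-1] if my_past else "C"


def suspicious_tit_for_tat(my_past):
    """Open with D, then repeat whatever I did last round."""
    return my_past[-1] if my_past else "D"


def partner_moves(strategy, my_moves):
    """The partner's move in each round, given the sequence of moves I play."""
    return [strategy(my_moves[:t]) for t in range(len(my_moves))]


MY_MOVES = ["C", "D", "C", "D", "C"]  # an arbitrary (hypothetical) sequence I play
strategies = (always_cooperate, tit_for_tat, always_defect, suspicious_tit_for_tat)
for rounds in (1, 5):
    for s in strategies:
        print(rounds, s.__name__, "".join(partner_moves(s, MY_MOVES[:rounds])))
```

In a one-round game tit-for-tat is indistinguishable from always cooperating, and suspicious tit-for-tat from always defecting; only the opening move shows. Over five rounds the four strategies produce four different move sequences.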

To make this more concrete, consider a few real world scenarios. Suppose you are pulled over by a police officer for having a broken taillight. Does the officer proceed to search your car, and "discover" a cache of drugs that he himself planted there, perhaps so he can justify appropriating your property under the civil forfeiture doctrine? Suppose you are buying a house. Does the seller conceal the fact that the house has some serious structural damage, in order to obtain a higher sale price? Or suppose you are running for local political office, and a journalist reaches out to ask you for an interview. Does the journalist proceed to write a hit piece against you, by quoting you out of context and implying that you are a white supremacist? In each of these scenarios, you are paired with a randomly chosen strategic partner, with whom you have a single interaction. Your fate is largely determined by the luck of the draw that decides whether your partner is cooperative or not.

The basic point of these thought experiments is to argue that it is rational and natural to adapt your ethical policy to mirror the aggregate behavior of your community. To avoid becoming either a jerk or a sucker, you should target a level of cooperation that matches the average of your sociopolitical environment. You certainly should not be a parasite that exploits the goodwill and friendliness of other people. But at the same time, you should not be a saint in a world of sinners - don't make enormous sacrifices for ungrateful people who are likely to repay your dedication with sneers and insults.

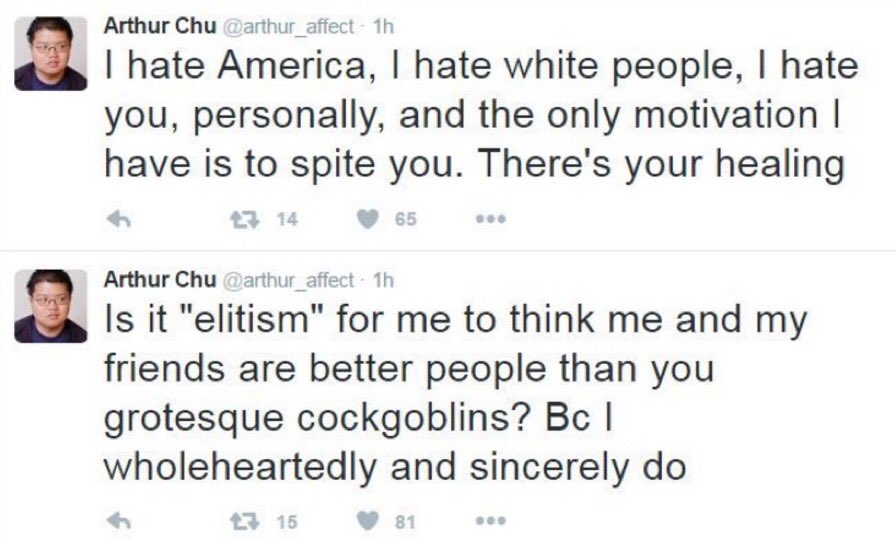

This mirror-your-environment conclusion is ominous for modern America, because our aggregate level of cooperation is declining catastrophically. For proof of that, it's enough to look at the comments section of any political web site. I'll highlight two incidents that particularly caught my attention, one from semi-famous Jeopardy champion Arthur Chu:

(I'm only about 1 step removed from Chu in the social graph, I've seen his comments on friends' Facebook walls). My attention was caught in a similar way by Aruna Khilanani, a psychiatrist who gave a talk at Yale, entitled "The Psychopathic Problem of the White Mind". In this talk she said "This is the cost of talking to white people at all — the cost of your own life, as they suck you dry" and "There are no good apples out there. White people make my blood boil."

Based on these open declarations of hatred and hostility, I cannot conclude anything other than that the ambient level of cooperation is quite low (there are of course many people who have similar feelings, but are simply a bit more circumspect about declaring them). This, then, is why I'm unmoved by utilitarian appeal to the notion of the "common good". Many of the people who comprise the common good actually hate me for being a straight white guy with conservative and libertarian beliefs.

I think the EA world is currently in a situation that's similar to the early stages of Variation 6. The EA community is like a floor that has a remarkably high level of cooperation, creating a ton of positive energy, goodwill, and great results. But in the long run, EA will not be able to resist the free-rider problem. More and more people will claim to be EA, in order to receive the benefits of being in the community, but the newcomers will mostly be less altruistic themselves. The EA community will eventually find itself in a position similar to many religious groups: there will be a core of goodwill and positive energy, which is unfortunately eroded to a substantial degree by corruption and abuse within the leadership.

Is it possible to create a society that promotes ethical behavior, even among people who refuse to be suckers? I think it is, by pursuing what I call City-on-a-Hill ethics. This is an ethical strategy where you get a group of like-minded and altruistic people together, and form a new society. In this city, every individual is expected to live up to a high code of ethics. Furthermore, this requirement is stringently enforced, by expelling those who do not live up to the code, and by applying a rigorous screening process to potential newcomers. This is the only way to avoid the free-rider problem of Variation 6.
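
The screening rule can be sketched as a filter on who gets to live on the floor. This is a deliberately idealized sketch: it assumes each person's cooperation probability can be observed exactly (real screening is noisy), and the code threshold of 0.8, the function names, and the population numbers are all hypothetical.

```python
from statistics import mean


def open_floor(members, arrivals):
    """No screening: everyone who wants to move in does."""
    return members + arrivals


def city_on_a_hill(members, arrivals, code=0.8):
    """Hypothetical screening rule: keep only people who meet the code.

    Existing members below the code are expelled and arrivals below it are
    turned away. Assumes each person's cooperation probability is observed
    exactly, which no real screening process can do.
    """
    return [p for p in members + arrivals if p >= code]


founders = [0.9] * 20
arrivals = [0.9] * 5 + [0.2] * 15  # hypothetical: most newcomers are free-riders
print(f"open floor:     {mean(open_floor(founders, arrivals)):.2f}")
print(f"city on a hill: {mean(city_on_a_hill(founders, arrivals)):.2f}")
```

Under these assumptions the unscreened floor's average cooperation falls to 0.6375, while the screened floor stays at 0.9.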

5 comments

comment by SebastianG (JohnBuridan) · 2022-04-25T01:44:39.945Z

You seem to value loyalty and generally want to be a tit-for-tat player + forgiveness. That makes sense, as does calibrating for the external environment in the case of PDs. You also view the intellectual commons as being increasingly corrupted by prejudiced discrimination.

This makes sense to me, but I think the thinking here is getting overwhelmed by the emotional strength of politicization. In general, it is easy to exaggerate how much defection is actually going on, and so give oneself an excuse for nursing wounds and grudges longer than absolutely necessary. I'm guilty of this and so speak from some experience. So I think there's an emotional mistake here, perhaps fixed by seeking out "philosophical repose": a bit more detachment from reacting to the opinions and thoughts of others.

Intellectually, I think you are making an important mistake. Cooperation in PDs and moral philosophy are two different, though related, things. Welfarist utilitarianism does not require impartiality; it requires choosing those actions which maximize good consequences. Since your reason for rejecting welfarism is the consequences of not punishing free riders, you have not yet rejected utilitarianism. You are rejecting impartiality as a necessary tenet of utilitarianism, but that is not the same thing as rejecting utilitarianism. You have to go one step further and give non-consequentialist reasons for rejecting them.

Hope that helps.

comment by Dirichlet-to-Neumann · 2022-04-24T22:02:26.019Z

This post seems like a thin veneer of classical game theory analysis with - in cauda venenum - a culture war-ish conclusion.

comment by Dagon · 2022-04-25T01:52:34.041Z

[ note: not Utilitarian myself ]

This doesn't sound like you're saying you think it's impractical to practice Utilitarian ethics, but that you disagree with some fundamental tenets. You don't actually want to maximize total or average happiness of humans, because you de-value humans that don't share your outlook.

That's fine, but it's deeper than just a practical objection.

comment by gbear605 · 2022-04-24T23:05:36.565Z

Ironically, the "City-on-a-Hill" ethics is exactly what you're decrying with your culture war examples. Those people are saying "Some people are cooperating, other people are defecting, and the people who are defecting basically all share some traits, so we're going to create our own group where we all cooperate with each other". The phrasing is extreme, but the second tweet from Chu sounds exactly like what someone in your proposed ethical group would say to an outsider.

One major problem with the City-on-a-Hill ethical system is that it assumes that your society is perfectly isolated from everyone else. But many PDs are actually not one-on-one but against the entire world. If your society is downwind of someone else who decides to build a polluting factory, you'll get sick and won't be able to do anything about it. Your only hope is to either convince or force that factory owner to install filters that prevent the pollution.

comment by Viliam · 2022-04-27T19:28:41.690Z

We have two basic mechanisms to "solve" the Prisoner's Dilemma: either avoid people who defect, or punish them. They seem like substitutes: If you are free to choose your fellow players, you don't need the punishments (for non-extreme levels of harm), because everyone can only hurt you once (or less than once on average, if you also avoid people who hurt your friends). But if you can't avoid other people, that is when exact rules become so important, because if there are people who defect, you still have to interact with them all the time.

(...makes me wonder, could we use "how much you feel that strict rules are necessary" as a proxy for "how free you feel in your choice of people you interact with"?)

For example, many people would probably agree that murder or theft should be punished, but it should not be illegal to be an asshole. Not because being an asshole is a good thing, but because avoiding the asshole is usually a sufficient punishment. And when avoiding the asshole is not an option, for example for kids at school, that is when the rules for proper behavior (and the punishment for breaking those rules) become necessary.

Like, when I read those tweets, my reaction is that the proper solution is for Arthur to only interact with his superior friends, and to be shunned by everyone he despises -- and that would solve the entire problem, without the need to find out whether his group of friends is "objectively" superior to others or not. Let them believe that they are, who cares. If they are right, then they deserve each other's company. And if they are wrong, then... they also deserve each other's company. Law of karma in action.

From the perspective of effective altruism, I think the idea is kinda that by choosing the most effective action, you could significantly improve the lives of ten people. Let's suppose that five of them are nice people who, if the situation was hypothetically reversed, would reciprocate; and the remaining five are selfish assholes who in the hypothetically reversed situation would just laugh at you. To me it still seems worth doing -- improving the lives of the five nice people is a great outcome, and the five assholes are just a side effect. Is it too optimistic to assume that five out of ten people are nice? I assume that it is even more than five; the assholes just get disproportionately more visibility.

I am not sure what the specific worry about Arthur and the EA movement is. Will people like him get some benefit from EA activities? Almost certainly yes... but don't worry about it, as long as you are doing it for others. There are certain things that almost everyone benefits from -- such as there being a nice sunny day -- and we should not enjoy them less just because we know that some people we don't like may benefit from them, too. Will people like him join the EA? First, hahaha, nope, that seems quite obvious. Second, if he ever would, I see nothing wrong with him sending his money to effective charities. Either way, I don't see a problem.

The real problems in my opinion are: how to organize your private life, and how to prevent becoming bitter. (These are related, because it is easier to become bitter if your life indeed sucks.) And I agree that a large part of improving your life is selecting the people in your social bubble. Also, spending less time on social networks. But here I would say that social groups organized around the concept of altruism have the advantage that the assholes will mostly naturally filter themselves out. Although some predators will realize that these are convenient places to find naive prey; which is why people need to communicate openly about abusers amongst them.

To use Arthur as an example again, in what situation would you actually meet him? Only on internet. In real life, I assume you would hate the places that he enjoys, and vice versa. The problem mostly solves itself.